Jun Wu

FakeSwarm: Improving Fake News Detection with Swarming Characteristics

May 30, 2023

Abstract:The proliferation of fake news poses a serious threat to society, as it can misinform and manipulate the public, erode trust in institutions, and undermine democratic processes. To address this issue, we present FakeSwarm, a fake news identification system that leverages the swarming characteristics of fake news. To extract the swarm behavior, we propose a novel concept of fake news swarming characteristics and design three types of swarm features, including principal component analysis, metric representation, and position encoding. We evaluate our system on a public dataset and demonstrate the effectiveness of incorporating swarm features in fake news identification, achieving an f1-score and accuracy of over 97% by combining all three types of swarm features. Furthermore, we design an online learning pipeline based on the hypothesis of the temporal distribution pattern of fake news emergence, validated on a topic with early emerging fake news and a shortage of text samples, showing that swarm features can significantly improve recall rates in such cases. Our work provides a new perspective and approach to fake news detection and highlights the importance of considering swarming characteristics in detecting fake news.

MedLens: Improve mortality prediction via medical signs selecting and regression interpolation

May 19, 2023

Abstract:Monitoring the health status of patients and predicting mortality in advance is vital for providing patients with timely care and treatment. Massive medical signs in electronic health records (EHR) are fed into advanced machine learning models to make predictions. However, the data-quality problem of original clinical signs is less discussed in the literature. Based on an in-depth measurement of the missing rate and correlation score across various medical signs and a large number of patient hospital admission records, we discovered that the overall missing rate is extremely high, and that a large number of useless signs could hurt the performance of prediction models. We therefore concluded that improving data quality alone could raise the baseline accuracy of different prediction algorithms. We designed MEDLENS, with an automatic vital medical signs selection approach via statistics and a flexible interpolation approach for time series with a high missing rate. After improving the data quality of the original medical signs, MEDLENS applies ensemble classifiers to boost accuracy and reduce computation overhead at the same time. It achieves an AUC-ROC of 0.96 and an AUC-PR of 0.81, which exceeds the previous benchmark.
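The two data-quality steps named in the MedLens abstract, dropping signs by missing rate and interpolating the gaps that remain, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names, the `max_missing` threshold, and the sample values are hypothetical, and plain linear interpolation stands in for the paper's regression interpolation, which the abstract does not specify.

```python
from typing import Dict, List, Optional

Series = List[Optional[float]]


def missing_rate(series: Series) -> float:
    """Fraction of time steps with no recorded value (None)."""
    if not series:
        return 1.0
    return sum(v is None for v in series) / len(series)


def select_signs(records: Dict[str, Series], max_missing: float = 0.5) -> List[str]:
    """Keep only signs whose missing rate is at or below a hypothetical threshold."""
    return [name for name, s in records.items() if missing_rate(s) <= max_missing]


def linear_interpolate(series: Series) -> Series:
    """Fill gaps linearly between observed neighbors.

    Gaps before the first or after the last observation copy the nearest
    observed value. A series with no observations is returned unchanged.
    """
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        return list(series)
    filled = list(series)
    for i in range(known[0]):                      # leading gap
        filled[i] = series[known[0]]
    for i in range(known[-1] + 1, len(series)):    # trailing gap
        filled[i] = series[known[-1]]
    for left, right in zip(known, known[1:]):      # interior gaps
        step = series[right] - series[left]
        for i in range(left + 1, right):
            filled[i] = series[left] + step * (i - left) / (right - left)
    return filled


# Hypothetical sample data: one usable sign and one that is almost empty.
records = {
    "heart_rate": [None, 80.0, None, None, 86.0, None],
    "rare_lab": [None] * 9 + [1.0],
}
kept = select_signs(records, max_missing=0.7)
cleaned = {name: linear_interpolate(records[name]) for name in kept}
```

In this sketch the selection step runs first so that interpolation is never asked to reconstruct a sign that is mostly unobserved.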

FineEHR: Refine Clinical Note Representations to Improve Mortality Prediction

May 04, 2023

Abstract:Monitoring the health status of patients in the Intensive Care Unit (ICU) is a critical aspect of providing superior care and treatment. The availability of large-scale electronic health records (EHR) provides machine learning models with an abundance of clinical text and vital sign data, enabling them to make highly accurate predictions. Despite the emergence of advanced Natural Language Processing (NLP) algorithms for clinical note analysis, the complex textual structure and noise present in raw clinical data have posed significant challenges. Coarse embedding approaches without domain-specific refinement have limited the accuracy of these algorithms. To address this issue, we propose FINEEHR, a system that utilizes two representation learning techniques, namely metric learning and fine-tuning, to refine clinical note embeddings, while leveraging the intrinsic correlations among different health statuses and note categories. We evaluate the performance of FINEEHR using two metrics, namely Area Under the Curve (AUC) and AUC-PR, on a real-world MIMIC III dataset. Our experimental results demonstrate that both refinement approaches improve prediction accuracy, and their combination yields the best results. Moreover, our proposed method outperforms prior works, with an AUC improvement of over 10%, achieving an average AUC of 96.04% and an average AUC-PR of 96.48% across various classifiers.

BotTriNet: A Unified and Efficient Embedding for Social Bots Detection via Metric Learning

Apr 18, 2023

Abstract:A persistently popular topic in online social networks is the rapid and accurate discovery of bot accounts to prevent their invasion and harassment of genuine users. We propose a unified embedding framework called BotTriNet, which utilizes textual content posted by accounts for bot detection based on the assumption that contexts naturally reveal account personalities and habits. Content is abundant and valuable if the system efficiently extracts bot-related information using embedding techniques. Beyond the general embedding framework that generates word, sentence, and account embeddings, we design a triplet network to tune the raw embeddings (produced by traditional natural language processing techniques) for better classification performance. We evaluate detection accuracy and f1-score on a real-world dataset CRESCI2017, comprising three bot account categories and five bot sample sets. Our system achieves the highest average accuracy of 98.34% and f1-score of 97.99% on two content-intensive bot sets, outperforming previous work and becoming state-of-the-art. It also makes a breakthrough on four content-less bot sets, with an average accuracy improvement of 11.52% and an average f1-score increase of 16.70%.
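A triplet network of the kind the BotTriNet abstract mentions is usually trained with a triplet loss, which pulls an anchor embedding toward a same-class example and pushes it away from a different-class example. The sketch below shows only the standard margin-based form of that loss on toy two-dimensional vectors; the `margin` value and the example embeddings are assumptions, and the abstract does not state which exact loss or distance the system uses.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negative: Sequence[float],
    margin: float = 1.0,
) -> float:
    """Standard margin-based triplet loss.

    The loss is zero once the negative is at least `margin` farther from
    the anchor than the positive is; otherwise it is the remaining gap.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Toy embeddings (hypothetical): two bot accounts and one genuine account.
bot_a = [0.0, 0.0]
bot_b = [0.0, 1.0]
human = [3.0, 4.0]

well_separated = triplet_loss(bot_a, bot_b, human)   # 1 - 5 + 1 < 0, so loss is 0.0
badly_separated = triplet_loss(bot_a, human, bot_b)  # 5 - 1 + 1 = 5.0
```

A loss of zero means the triplet already satisfies the margin and contributes no gradient, which is why training pipelines often mine harder triplets.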

BotShape: A Novel Social Bots Detection Approach via Behavioral Patterns

Mar 17, 2023

Abstract:An essential topic in online social network security is how to accurately detect bot accounts and relieve their harmful impacts (e.g., misinformation, rumor, and spam) on genuine users. Based on a real-world data set, we construct behavioral sequences from raw event logs. After extracting critical characteristics from behavioral time series, we observe differences between bots and genuine users and similar patterns among bot accounts. We present a novel social bot detection system, BotShape, which automatically captures behavioral sequences and characteristics as features for classifiers to detect bots. We evaluate the detection performance of our system on ground-truth instances, showing an average accuracy of 98.52% and an average f1-score of 96.65% on various types of classifiers. After comparing it with other research, we conclude that BotShape is a novel approach to profiling an account, which could improve performance for most methods by providing significant behavioral features.

Non-IID Transfer Learning on Graphs

Dec 15, 2022

Abstract:Transfer learning refers to the transfer of knowledge or information from a relevant source domain to a target domain. However, most existing transfer learning theories and algorithms focus on IID tasks, where the source/target samples are assumed to be independent and identically distributed. Very little effort is devoted to theoretically studying the knowledge transferability on non-IID tasks, e.g., cross-network mining. To bridge the gap, in this paper, we propose rigorous generalization bounds and algorithms for cross-network transfer learning from a source graph to a target graph. The crucial idea is to characterize the cross-network knowledge transferability from the perspective of the Weisfeiler-Lehman graph isomorphism test. To this end, we propose a novel Graph Subtree Discrepancy to measure the graph distribution shift between source and target graphs. Then the generalization error bounds on cross-network transfer learning, including both cross-network node classification and link prediction tasks, can be derived in terms of the source knowledge and the Graph Subtree Discrepancy across domains. This thereby motivates us to propose a generic graph adaptive network (GRADE) to minimize the distribution shift between source and target graphs for cross-network transfer learning. Experimental results verify the effectiveness and efficiency of our GRADE framework on both cross-network node classification and cross-domain recommendation tasks.
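The Weisfeiler-Lehman test that this abstract builds on works by repeatedly relabeling each node with its own label plus the sorted labels of its neighbors, so that each label comes to encode a rooted subtree. The sketch below implements only that classical refinement step and compares the resulting label histograms of two graphs. It is not the paper's Graph Subtree Discrepancy, whose definition the abstract does not give; the function names and toy graphs are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, List

Graph = Dict[Hashable, List[Hashable]]  # node -> list of neighbor nodes


def wl_refine(adj: Graph, labels: Dict[Hashable, str], iterations: int = 2) -> dict:
    """Run Weisfeiler-Lehman color refinement for a fixed number of rounds.

    Each round replaces a node's color with the pair
    (own color, sorted tuple of neighbor colors).
    """
    colors = dict(labels)
    for _ in range(iterations):
        colors = {
            node: (colors[node], tuple(sorted(colors[n] for n in adj[node])))
            for node in adj
        }
    return colors


def wl_histogram(adj: Graph, labels: Dict[Hashable, str], iterations: int = 2) -> Counter:
    """Count how often each refined color (subtree pattern) occurs in the graph."""
    return Counter(wl_refine(adj, labels, iterations).values())


# Toy graphs (hypothetical): a triangle, a 3-node path, and the same path renamed.
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
path = {0: [1], 1: [0, 2], 2: [1]}
path_renamed = {"x": ["y"], "y": ["x", "z"], "z": ["y"]}


def uniform(adj: Graph) -> Dict[Hashable, str]:
    """Give every node the same initial label."""
    return {node: "a" for node in adj}


same_structure = wl_histogram(path, uniform(path)) == wl_histogram(path_renamed, uniform(path_renamed))
different_structure = wl_histogram(path, uniform(path)) != wl_histogram(triangle, uniform(triangle))
```

Identical histograms do not prove two graphs are isomorphic, since the test is known to fail on some non-isomorphic pairs, but differing histograms do prove they are not.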

RING++: Roto-translation Invariant Gram for Global Localization on a Sparse Scan Map

Oct 12, 2022

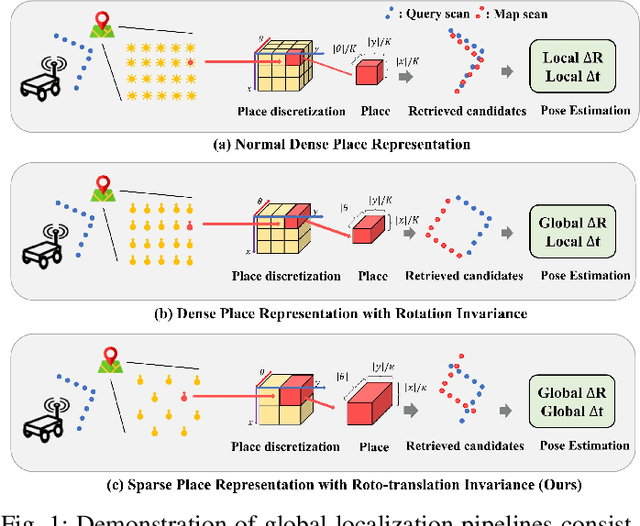

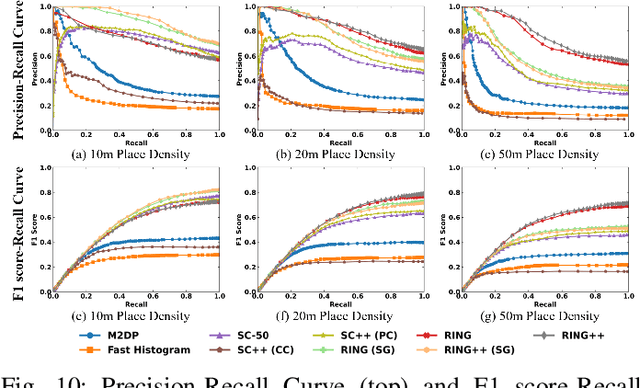

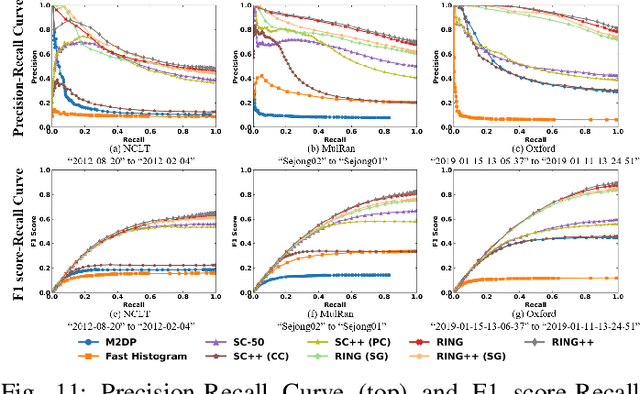

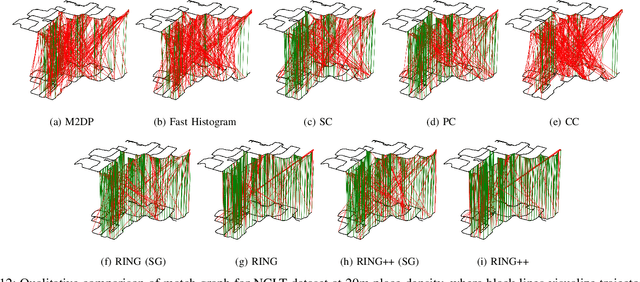

Abstract:Global localization plays a critical role in many robot applications. LiDAR-based global localization draws the community's focus with its robustness against illumination and seasonal changes. To further improve the localization under large viewpoint differences, we propose RING++, which has a roto-translation invariant representation for place recognition, and global convergence for both rotation and translation estimation. With the theoretical guarantee, RING++ is able to address the large viewpoint difference using a lightweight map with sparse scans. In addition, we derive sufficient conditions of feature extractors for the representation preserving the roto-translation invariance, making RING++ a framework applicable to generic multi-channel features. To the best of our knowledge, this is the first learning-free framework to address all subtasks of global localization in the sparse scan map. Validations on real-world datasets show that our approach demonstrates better performance than state-of-the-art learning-free methods, and competitive performance with learning-based methods. Finally, we integrate RING++ into a multi-robot/session SLAM system, demonstrating its effectiveness in collaborative applications.

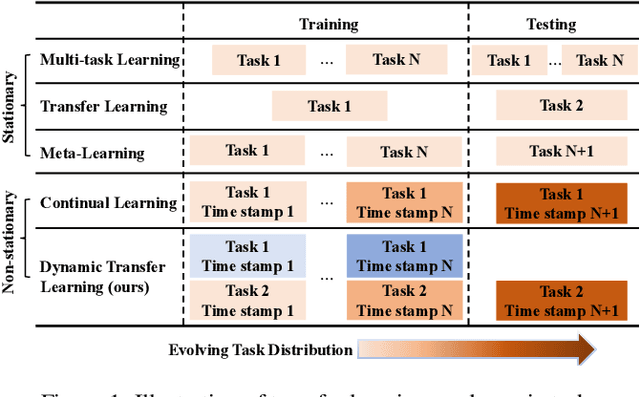

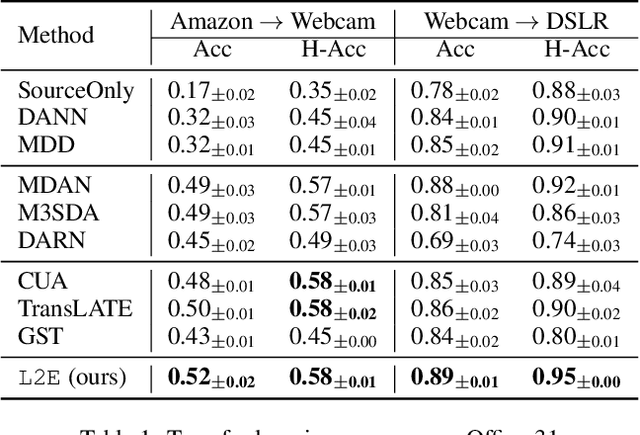

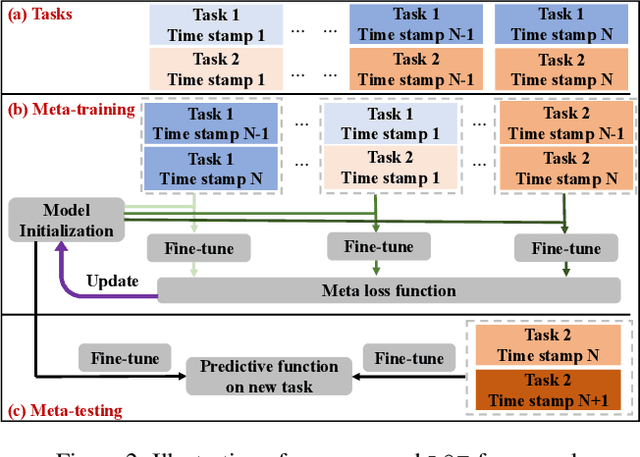

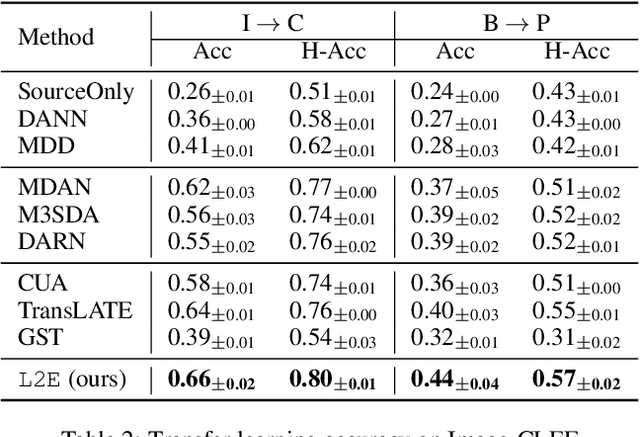

A Unified Meta-Learning Framework for Dynamic Transfer Learning

Jul 05, 2022

Abstract:Transfer learning refers to the transfer of knowledge or information from a relevant source task to a target task. However, most existing works assume both tasks are sampled from a stationary task distribution, thereby leading to the sub-optimal performance for dynamic tasks drawn from a non-stationary task distribution in real scenarios. To bridge this gap, in this paper, we study a more realistic and challenging transfer learning setting with dynamic tasks, i.e., source and target tasks are continuously evolving over time. We theoretically show that the expected error on the dynamic target task can be tightly bounded in terms of source knowledge and consecutive distribution discrepancy across tasks. This result motivates us to propose a generic meta-learning framework L2E for modeling the knowledge transferability on dynamic tasks. It is centered around a task-guided meta-learning problem with a group of meta-pairs of tasks, based on which we are able to learn the prior model initialization for fast adaptation on the newest target task. L2E enjoys the following properties: (1) effective knowledge transferability across dynamic tasks; (2) fast adaptation to the new target task; (3) mitigation of catastrophic forgetting on historical target tasks; and (4) flexibility in incorporating any existing static transfer learning algorithms. Extensive experiments on various image data sets demonstrate the effectiveness of the proposed L2E framework.

Towards Two-view 6D Object Pose Estimation: A Comparative Study on Fusion Strategy

Jul 01, 2022

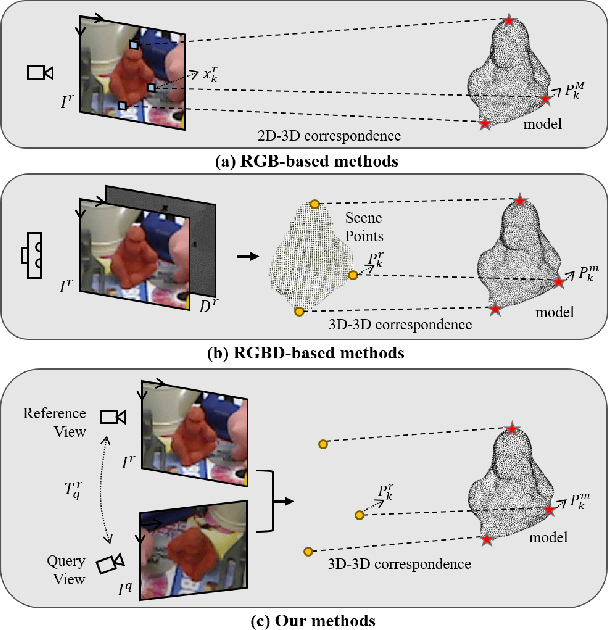

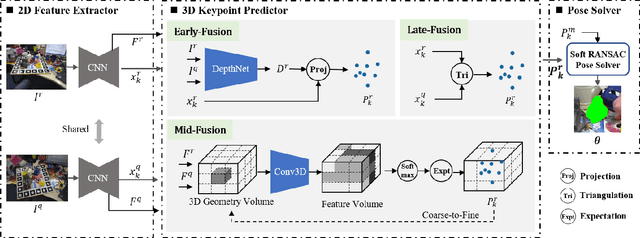

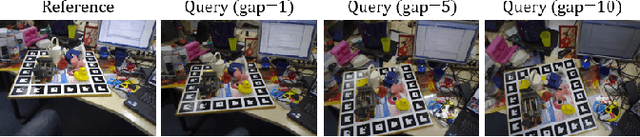

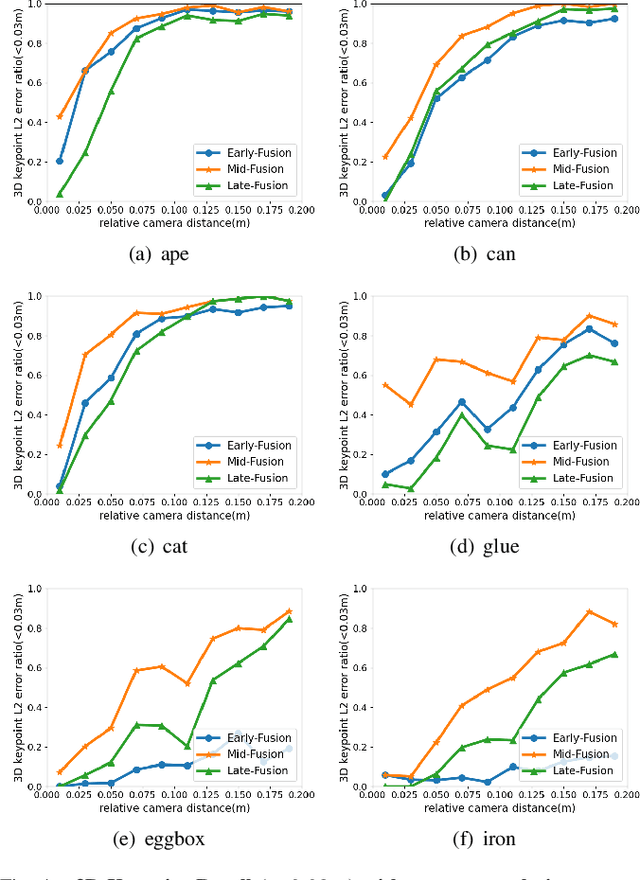

Abstract:Current RGB-based 6D object pose estimation methods have achieved noticeable performance on datasets and real-world applications. However, predicting 6D pose from single 2D image features is susceptible to disturbance from environmental changes and from textureless or similar-looking object surfaces. Hence, RGB-based methods generally achieve less competitive results than RGBD-based methods, which deploy both image features and 3D structure features. To narrow this performance gap, this paper proposes a framework for 6D object pose estimation that learns implicit 3D information from 2 RGB images. Combining the learned 3D information and 2D image features, we establish more stable correspondence between the scene and the object models. To find the methods that best utilize 3D information from RGB inputs, we investigate three different approaches: Early-Fusion, Mid-Fusion, and Late-Fusion. We ascertain that the Mid-Fusion approach is the best approach to restore the most precise 3D keypoints useful for object pose estimation. The experiments show that our method outperforms state-of-the-art RGB-based methods, and achieves comparable results with RGBD-based methods.

LightFR: Lightweight Federated Recommendation with Privacy-preserving Matrix Factorization

Jun 23, 2022

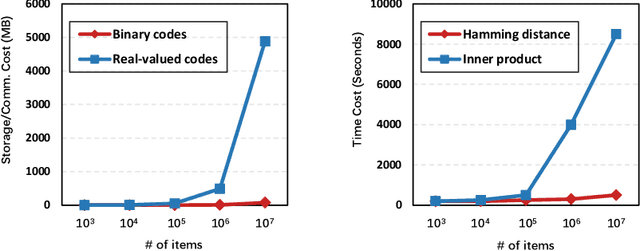

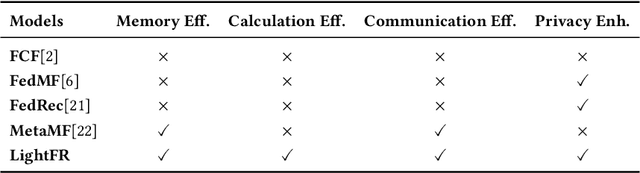

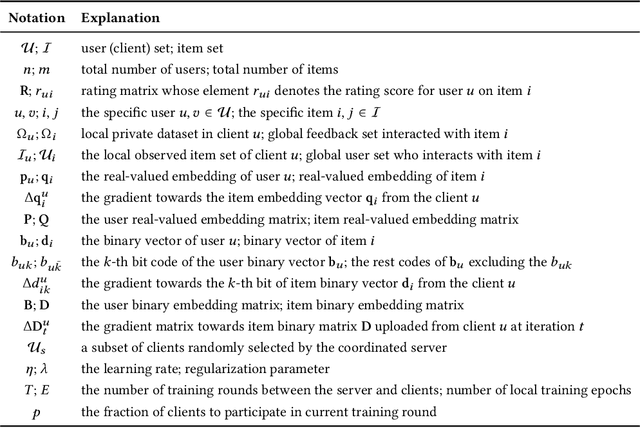

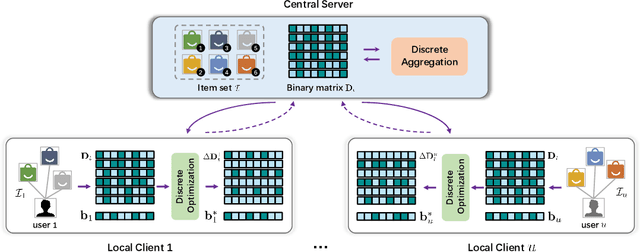

Abstract:Federated recommender system (FRS), which enables many local devices to train a shared model jointly without transmitting local raw data, has become a prevalent recommendation paradigm with privacy-preserving advantages. However, previous work on FRS performs similarity search via inner product in continuous embedding space, which causes an efficiency bottleneck when the scale of items is extremely large. We argue that such a scheme in federated settings ignores the limited capacities in resource-constrained user devices (i.e., storage space, computational overhead, and communication bandwidth), and makes it harder to be deployed in large-scale recommender systems. Besides, it has been shown that the transmission of local gradients in real-valued form between server and clients may leak users' private information. To this end, we propose a lightweight federated recommendation framework with privacy-preserving matrix factorization, LightFR, that is able to generate high-quality binary codes by exploiting learning to hash techniques under federated settings, and thus enjoys both fast online inference and economic memory consumption. Moreover, we devise an efficient federated discrete optimization algorithm to collaboratively train model parameters between the server and clients, which can effectively prevent real-valued gradient attacks from malicious parties. Through extensive experiments on four real-world datasets, we show that our LightFR model outperforms several state-of-the-art FRS methods in terms of recommendation accuracy, inference efficiency and data privacy.
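The efficiency argument in the LightFR abstract rests on replacing inner products over real-valued embeddings with comparisons of short binary codes. The sketch below illustrates that idea only: it binarizes vectors by sign and ranks items by Hamming distance using an XOR and a bit count. Sign thresholding is a stand-in chosen for illustration, not the learning-to-hash procedure the paper trains, and all names and vectors are hypothetical.

```python
from typing import Dict, List, Sequence


def binarize(vec: Sequence[float]) -> int:
    """Pack the signs of a real-valued vector into an integer bit code.

    A non-negative entry becomes bit 1 and a negative entry becomes bit 0;
    the first entry ends up as the most significant bit.
    """
    code = 0
    for v in vec:
        code = (code << 1) | (1 if v >= 0 else 0)
    return code


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two codes differ."""
    return bin(a ^ b).count("1")


def rank_items(user_code: int, item_codes: Dict[str, int]) -> List[str]:
    """Order item names from closest to farthest in Hamming distance."""
    return sorted(item_codes, key=lambda name: hamming(user_code, item_codes[name]))


# Hypothetical 4-dimensional embeddings.
user = binarize([0.3, -1.2, 0.8, -0.1])            # bits 1010
items = {
    "item_far": binarize([-0.5, 0.9, -0.2, 0.4]),   # bits 0101, distance 4
    "item_near": binarize([0.7, -0.3, -0.6, -0.9]), # bits 1000, distance 1
    "item_same": binarize([1.1, -0.4, 0.2, -2.0]),  # bits 1010, distance 0
}
ranking = rank_items(user, items)  # ['item_same', 'item_near', 'item_far']
```

For codes of length r with entries read as +1 and -1, the inner product equals r minus twice the Hamming distance, so ranking by ascending Hamming distance gives the same order as ranking by descending inner product on the codes. Each code also occupies r bits instead of r floating-point numbers, which is the storage saving the abstract refers to.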
