John Galeotti

Automatic Cannulation of Femoral Vessels in a Porcine Shock Model

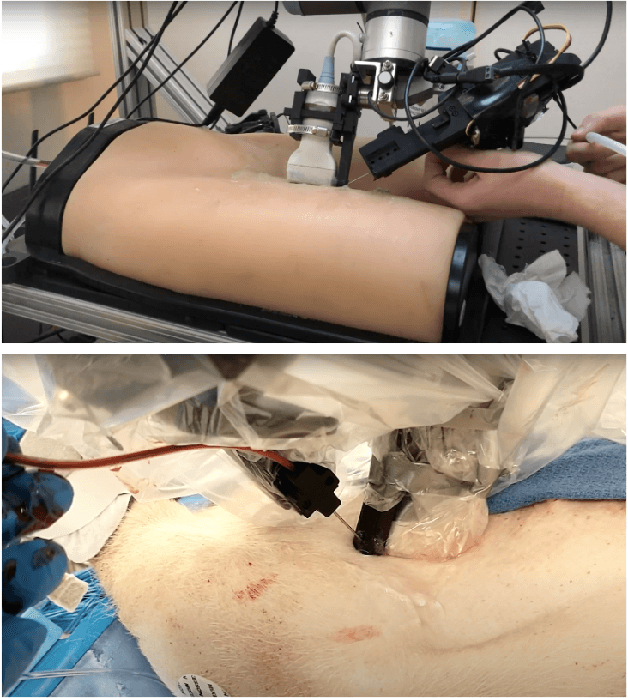

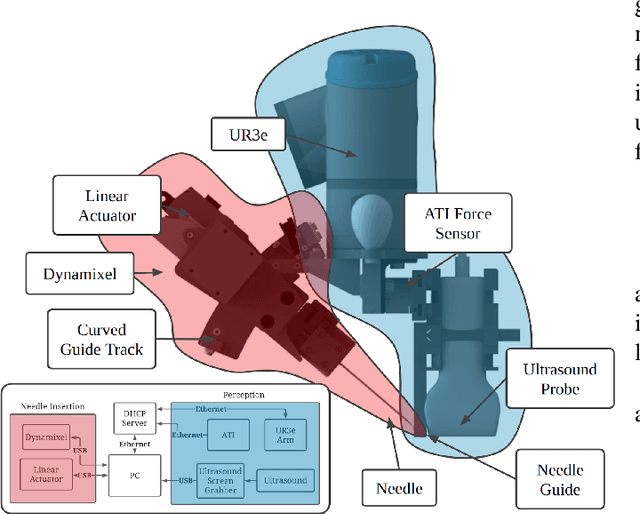

Jun 17, 2025

Abstract:Rapid and reliable vascular access is critical in trauma and critical care. Central vascular catheterization enables high-volume resuscitation, hemodynamic monitoring, and advanced interventions like ECMO and REBOA. While peripheral access is common, central access is often necessary but requires specialized ultrasound-guided skills, posing challenges in prehospital settings. The complexity arises from deep target vessels and the precision needed for needle placement. Traditional techniques, like the Seldinger method, demand expertise to avoid complications. Despite its importance, ultrasound-guided central access is underutilized due to limited field expertise. While autonomous needle insertion has been explored for peripheral vessels, only semi-autonomous methods exist for femoral access. This work advances toward full automation, integrating robotic ultrasound for minimally invasive emergency procedures. Our key contribution is the successful femoral vein and artery cannulation in a porcine hemorrhagic shock model.

* 2 pages, 2 figures, conference

LEARNER: Learning Granular Labels from Coarse Labels using Contrastive Learning

Nov 02, 2024

Abstract:A crucial question in active patient care is determining if a treatment is having the desired effect, especially when changes are subtle over short periods. We propose using inter-patient data to train models that can learn to detect these fine-grained changes within a single patient. Specifically, can a model trained on multi-patient scans predict subtle changes in an individual patient's scans? Recent years have seen increasing use of deep learning (DL) in predicting diseases using biomedical imaging, such as predicting COVID-19 severity using lung ultrasound (LUS) data. While extensive literature exists on successful applications of DL systems when well-annotated large-scale datasets are available, it is quite difficult to collect a large corpus of personalized datasets for an individual. In this work, we investigate the ability of recent computer vision models to learn fine-grained differences while being trained on data showing larger differences. We evaluate on an in-house LUS dataset and a public ADNI brain MRI dataset. We find that models pre-trained on clips from multiple patients can better predict fine-grained differences in scans from a single patient by employing contrastive learning.
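The abstract above does not say which contrastive objective is used. As a minimal sketch only, the following shows one common choice, the InfoNCE loss, on plain Python lists. The function names, the cosine similarity measure, and the `temperature` value are assumptions made for illustration, not details taken from the paper.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor (assumed objective, for illustration).

    The loss is low when the anchor is closer to the positive embedding
    than to any of the negative embeddings.
    """
    pos_logit = cosine_similarity(anchor, positive) / temperature
    logits = [pos_logit] + [
        cosine_similarity(anchor, n) / temperature for n in negatives
    ]
    # Numerically stable log-sum-exp over all logits.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(
        sum(math.exp(v - max_logit) for v in logits)
    )
    return log_sum_exp - pos_logit


# Hypothetical 2-D embeddings: the positive lies close to the anchor.
anchor = [1.0, 0.0]
aligned = info_nce_loss(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce_loss(anchor, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
```

In this toy setup `aligned` comes out smaller than `misaligned`, which is the behavior a contrastive objective rewards: embeddings of similar scans are pulled together and dissimilar ones pushed apart.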

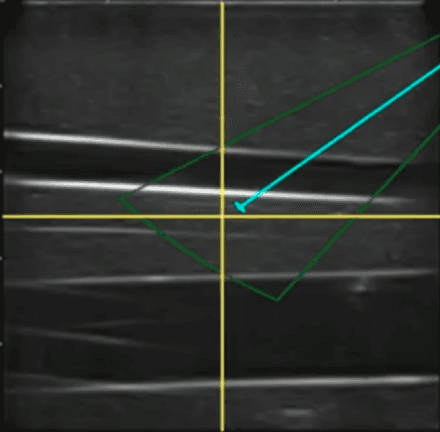

Motion Informed Needle Segmentation in Ultrasound Images

Dec 05, 2023

Abstract:Segmenting a moving needle in ultrasound images is challenging due to the presence of artifacts, noise, and needle occlusion. This task becomes even more demanding in scenarios where data availability is limited. Convolutional Neural Networks (CNNs) have been successful in many computer vision applications, but struggle to accurately segment needles without considering their motion. In this paper, we present a novel approach for needle segmentation that combines classical Kalman Filter (KF) techniques with data-driven learning, incorporating both needle features and needle motion. Our method offers three key contributions. First, we propose a compatible framework that seamlessly integrates into commonly used encoder-decoder style architectures. Second, we demonstrate superior performance compared to recent state-of-the-art needle segmentation models using our novel convolutional neural network (CNN) based KF-inspired block, achieving a 15% reduction in pixel-wise needle tip error and an 8% reduction in length error. Third, to our knowledge we are the first to implement a learnable filter to incorporate non-linear needle motion for improving needle segmentation.
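For readers unfamiliar with the classical Kalman Filter that the abstract builds on, here is a minimal sketch of a linear, constant-velocity filter tracking one coordinate of a needle tip. It is not the learnable, CNN-based KF-inspired block described in the paper. The class name, the noise variances, and the constant-velocity motion model are all assumptions chosen for illustration.

```python
class ConstantVelocityKalman1D:
    """Classical linear Kalman filter over the state [position, velocity]."""

    def __init__(self, dt=1.0, process_var=1e-3, measurement_var=0.25):
        self.dt = dt
        self.q = process_var       # assumed process noise on both state terms
        self.r = measurement_var   # assumed measurement noise variance
        self.pos, self.vel = 0.0, 0.0
        # Large initial covariance: the starting state is barely trusted.
        self.p00, self.p01, self.p10, self.p11 = 100.0, 0.0, 0.0, 100.0

    def predict(self):
        """Propagate state and covariance one frame: x = F x, P = F P F^T + Q."""
        dt = self.dt
        self.pos += dt * self.vel
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11 + self.q
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11

    def update(self, measured_pos):
        """Correct the prediction with a position-only measurement (H = [1, 0])."""
        innovation = measured_pos - self.pos
        s = self.p00 + self.r                  # innovation variance
        k0, k1 = self.p00 / s, self.p10 / s    # Kalman gain
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # P = (I - K H) P
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11


# Hypothetical needle tip advancing 2 pixels per frame, measured without noise.
kf = ConstantVelocityKalman1D()
for frame in range(1, 31):
    kf.predict()
    kf.update(2.0 * frame)
```

After the loop, `kf.pos` and `kf.vel` settle close to the true position and velocity of the simulated tip. A fixed linear model like this cannot follow non-linear needle motion, which is the gap the abstract's learnable filter is meant to address.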

Unsupervised Deformable Ultrasound Image Registration and Its Application for Vessel Segmentation

Jun 23, 2023

Abstract:This paper presents a deep-learning model for deformable registration of ultrasound images at online rates, which we call U-RAFT. As its name suggests, U-RAFT is based on RAFT, a convolutional neural network for estimating optical flow. U-RAFT, however, can be trained in an unsupervised manner and can generate synthetic images for training vessel segmentation models. We propose and compare the registration quality of different loss functions for training U-RAFT. We also show how our approach, together with a robot performing force-controlled scans, can be used to generate synthetic deformed images to significantly expand the size of a femoral vessel segmentation training dataset without the need for additional manual labeling. We validate our approach on both a silicone human tissue phantom and in-vivo porcine images. We show that U-RAFT generates synthetic ultrasound images with 98% and 81% structural similarity index measure (SSIM) to the real ultrasound images for the phantom and porcine datasets, respectively. We also demonstrate that synthetic deformed images from U-RAFT can be used as a data augmentation technique for vessel segmentation models to improve intersection-over-union (IoU) segmentation performance.
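The IoU metric mentioned in the abstract can be illustrated with a short sketch for binary masks. The function name and the toy masks below are hypothetical, and treating two empty masks as a perfect match is an assumed convention rather than something stated in the paper.

```python
def intersection_over_union(pred_mask, true_mask):
    """IoU between two same-shaped binary masks given as nested lists of 0/1."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


# Toy 2x3 masks: 2 pixels overlap, 4 pixels are covered by either mask.
predicted = [[1, 1, 0],
             [0, 1, 0]]
labeled = [[1, 0, 0],
           [0, 1, 1]]
iou = intersection_over_union(predicted, labeled)  # 2 / 4 = 0.5
```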

Unsupervised Deformable Image Registration for Respiratory Motion Compensation in Ultrasound Images

Jun 23, 2023

Abstract:In this paper, we present a novel deep-learning model for deformable registration of ultrasound images and an unsupervised approach to training this model. Our network employs recurrent all-pairs field transforms (RAFT) and a spatial transformer network (STN) to generate displacement fields at online rates (approx. 30 Hz) and accurately track pixel movement. We call our approach unsupervised recurrent all-pairs field transforms (U-RAFT). In this work, we use U-RAFT to track pixels in a sequence of ultrasound images to cancel out respiratory motion in lung ultrasound images. We demonstrate our method on in-vivo porcine lung videos. We show a reduction of 76% in average pixel movement in the porcine dataset using our respiratory motion compensation strategy. We believe U-RAFT is a promising tool for compensating for different kinds of motion, such as respiration and heartbeat, in ultrasound images of deformable tissue.

Toward Robotically Automated Femoral Vascular Access

Jul 06, 2021

Abstract:Advanced resuscitative technologies, such as Extracorporeal Membrane Oxygenation (ECMO) cannulation or Resuscitative Endovascular Balloon Occlusion of the Aorta (REBOA), are technically difficult even for skilled medical personnel. This paper describes the core technologies that comprise a teleoperated system capable of granting femoral vascular access, which is an important step in both of these procedures and a major roadblock in their wider use in the field. These technologies include a kinematic manipulator, various sensing modalities, and a user interface. In addition, we evaluate our system on a surgical phantom as well as in in-vivo porcine experiments. These resulted in, to the best of our knowledge, the first robot-assisted arterial catheterizations, a major step towards our eventual goal of automatic catheter insertion through the Seldinger technique.

Good and Bad Boundaries in Ultrasound Compounding: Preserving Anatomic Boundaries While Suppressing Artifacts

Nov 24, 2020

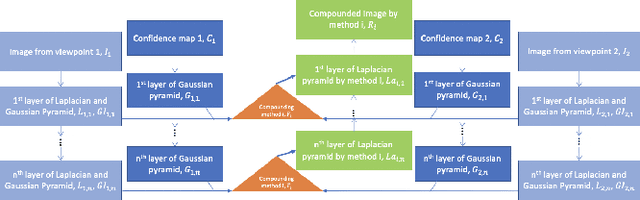

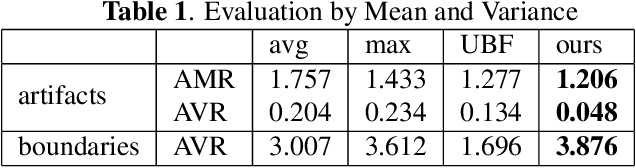

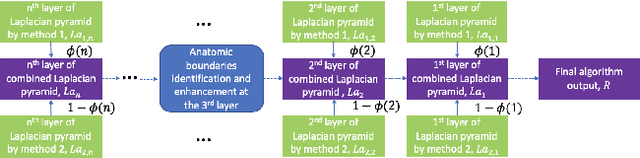

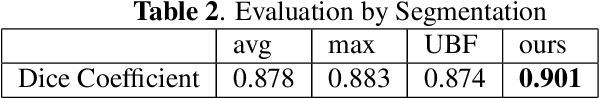

Abstract:Ultrasound 3D compounding is important for volumetric reconstruction, but as yet there is no consensus on best practices for compounding. Ultrasound images depend on probe direction and the path sound waves pass through, so when multiple intersecting B-scans of the same spot from different perspectives yield different pixel values, there is no single, ideal representation for compounding (i.e., combining) the overlapping pixel values. Current popular methods inevitably suppress or altogether leave out bright or dark regions that are useful, and potentially introduce new artifacts. In this work, we establish a new algorithm to compound the overlapping pixels from different viewpoints in ultrasound. We uniquely leverage Laplacian and Gaussian Pyramids to preserve the maximum boundary contrast without overemphasizing noise and speckle. We evaluate our algorithm by comparing it with previous algorithms, and we show that our approach not only preserves both light and dark details, but also somewhat suppresses artifacts, rather than amplifying them.

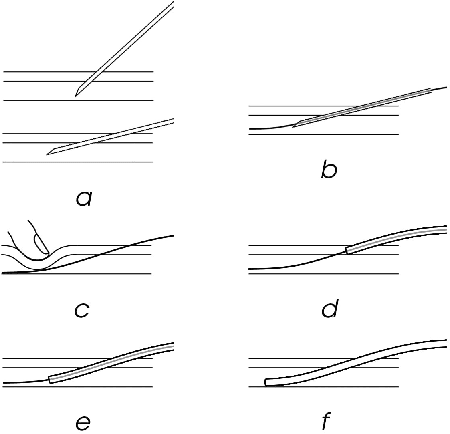

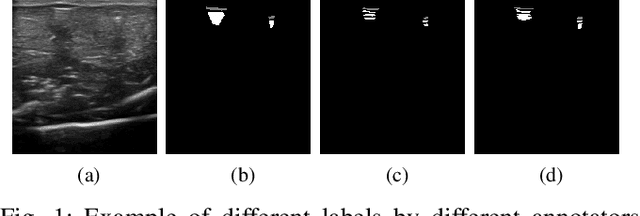

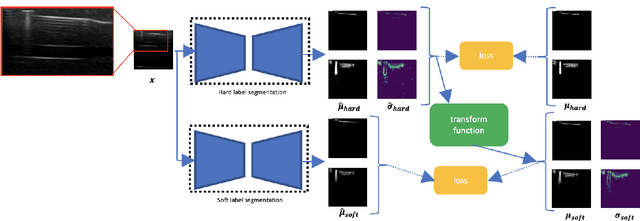

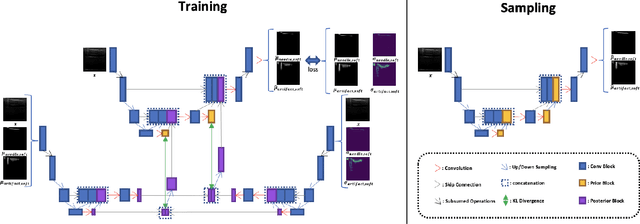

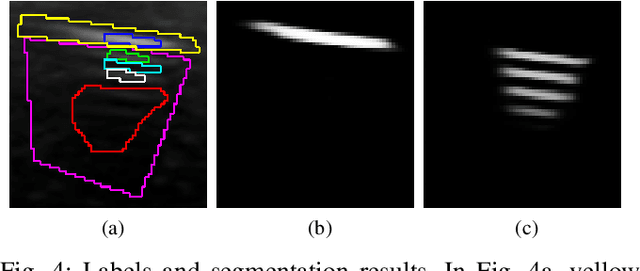

Weakly- and Semi-Supervised Probabilistic Segmentation and Quantification of Ultrasound Needle-Reverberation Artifacts to Allow Better AI Understanding of Tissue Beneath Needles

Nov 24, 2020

Abstract:Ultrasound image quality has been continually improving. However, when needles or other metallic objects are operating inside the tissue, the resulting reverberation artifacts can severely corrupt the surrounding image quality. Such effects are challenging for existing computer vision algorithms for medical image analysis. Needle reverberation artifacts can be hard to identify at times and affect various pixel values to different degrees. The boundaries of such artifacts are ambiguous, leading to disagreement among human experts labeling the artifacts. We propose a weakly- and semi-supervised, probabilistic needle-and-needle-artifact segmentation algorithm to separate the desired tissue-based pixel values from the superimposed artifacts. Our method models the intensity decay of artifact intensities and is designed to minimize the human labeling error. We demonstrate the applicability of the approach, comparing it against other segmentation algorithms. Our method is capable of differentiating the reverberations from artifact-free patches between reverberations, as well as modeling the intensity fall-off in the artifacts. Our method matches state-of-the-art artifact segmentation performance, and sets a new standard in estimating the per-pixel contributions of artifact vs underlying anatomy, especially in the immediately adjacent regions between reverberation lines.

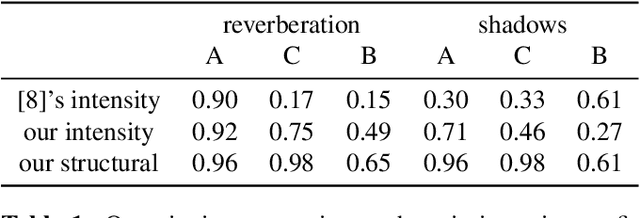

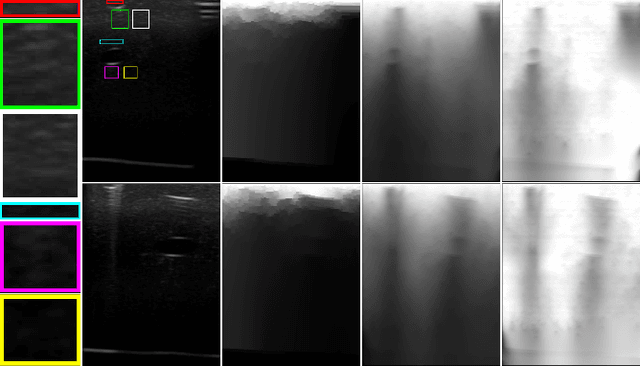

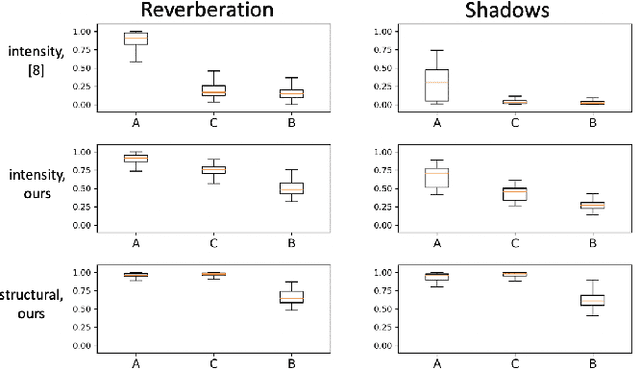

Ultrasound Confidence Maps of Intensity and Structure Based on Directed Acyclic Graphs and Artifact Models

Nov 24, 2020

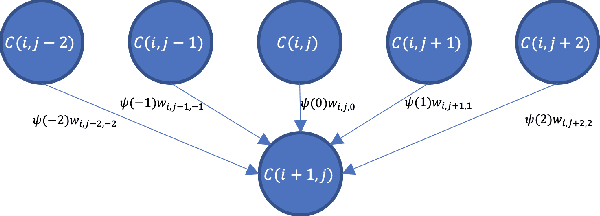

Abstract:Ultrasound imaging has been improving, but continues to suffer from inherent artifacts that are challenging to model, such as attenuation, shadowing, diffraction, speckle, etc. These artifacts can potentially confuse image analysis algorithms unless an attempt is made to assess the certainty of individual pixel values. Our novel confidence algorithms analyze pixel values using a directed acyclic graph based on acoustic physical properties of ultrasound imaging. We demonstrate unique capabilities of our approach and compare it against previous confidence-measurement algorithms for shadow-detection and image-compounding tasks.

A Study of Domain Generalization on Ultrasound-based Multi-Class Segmentation of Arteries, Veins, Ligaments, and Nerves Using Transfer Learning

Nov 13, 2020

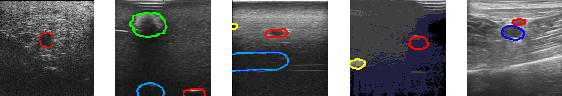

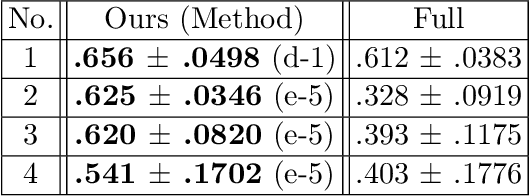

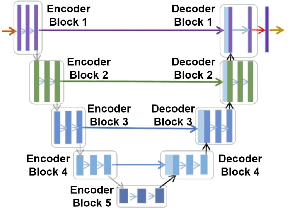

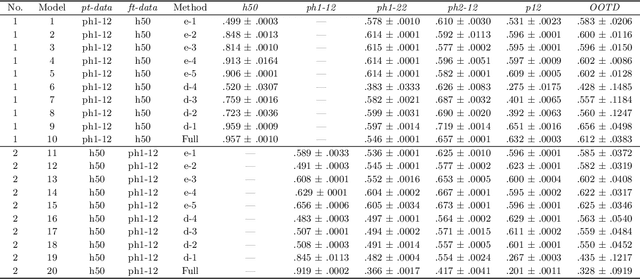

Abstract:Identifying landmarks in the femoral area is crucial for ultrasound (US)-based robot-guided catheter insertion, and their presentation varies when imaged with different scanners. As such, the performance of past deep learning-based approaches is narrowly limited to the training data distribution; this can be circumvented by fine-tuning all or part of the model, yet the effects of fine-tuning are seldom discussed. In this work, we study the US-based segmentation of multiple classes through transfer learning by fine-tuning different contiguous blocks within the model, and evaluating on a gamut of US data from different scanners and settings. We propose a simple method for predicting generalization on unseen datasets and observe statistically significant differences between the fine-tuning methods while working towards domain generalization.
