Jihong Zhu

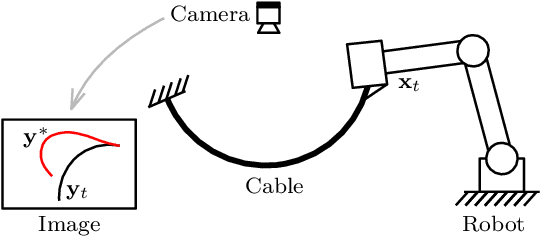

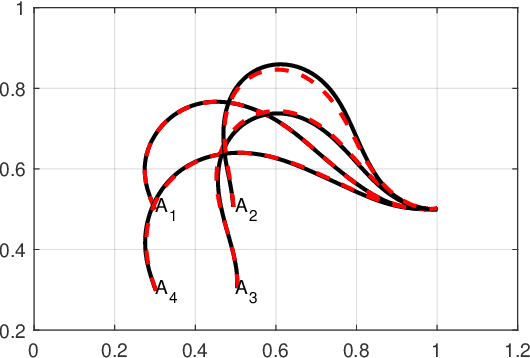

Shape Control of Elastic Objects Based on Implicit Sensorimotor Models and Data-Driven Geometric Features

Jan 06, 2021

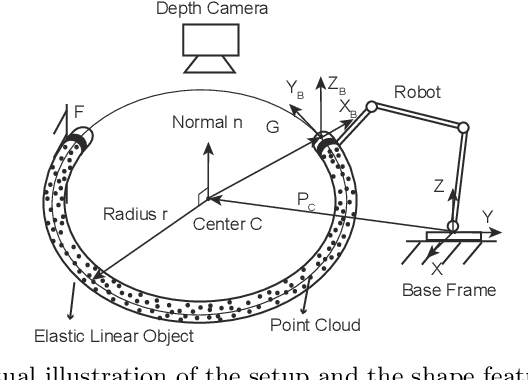

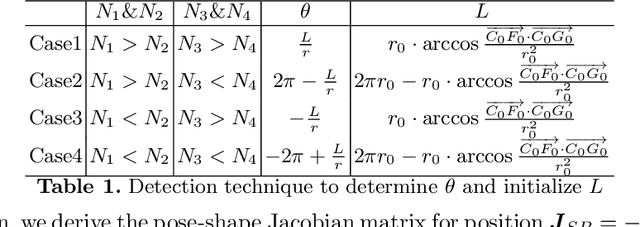

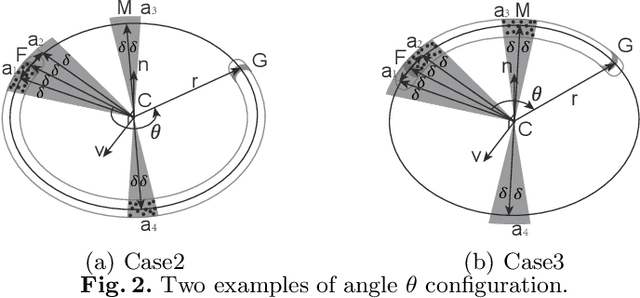

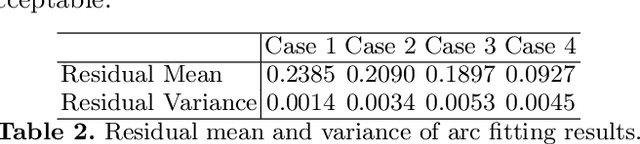

Abstract: This paper proposes a general approach to designing automatic controls for manipulating elastic objects into desired shapes. The object's geometric model is defined as the shape feature, chosen according to the specific task, to globally describe the deformation. Raw visual feedback data is processed using classic regression methods to identify the parameters of data-driven geometric models in real time. Our proposed method analytically computes a pose-shape Jacobian matrix based on implicit functions. This model is then used to derive a shape servoing controller. To validate the proposed method, we report a detailed experimental study with robotic manipulators deforming an elastic rod.
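As a rough illustration of what a Jacobian-based shape servoing loop can look like, the sketch below applies the generic control law `dr = -gain * J^{-1} (s - s*)` to a toy problem. It is not the paper's controller: the 2x2 Jacobian `J`, the linear plant `simulate`, the gain, and the target values are all hypothetical, and the Jacobian is assumed known and constant rather than computed from implicit functions.

```python
def solve_2x2(a, b):
    """Solve the 2x2 linear system a @ x = b with Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if abs(det) < 1e-12:
        raise ValueError("Jacobian is singular")
    x0 = (b[0] * a[1][1] - a[0][1] * b[1]) / det
    x1 = (a[0][0] * b[1] - b[0] * a[1][0]) / det
    return [x0, x1]


def servo_step(jacobian, features, target, gain=0.5):
    """Return a pose increment dr = -gain * J^{-1} (s - s*)."""
    error = [s - t for s, t in zip(features, target)]
    direction = solve_2x2(jacobian, error)
    return [-gain * d for d in direction]


# Hypothetical toy plant: shape features respond linearly to the pose, s = J r.
J = [[2.0, 0.5], [0.3, 1.5]]


def simulate(pose):
    """Map a robot pose to shape features using the toy linear model."""
    return [J[0][0] * pose[0] + J[0][1] * pose[1],
            J[1][0] * pose[0] + J[1][1] * pose[1]]


pose = [0.0, 0.0]
target = [1.0, -0.5]  # desired shape features (illustrative values)
for _ in range(30):
    features = simulate(pose)
    step = servo_step(J, features, target)
    pose = [p + d for p, d in zip(pose, step)]

final_error = max(abs(s - t) for s, t in zip(simulate(pose), target))
```

Because the toy Jacobian is exact, each iteration halves the feature error with `gain=0.5`; with an estimated Jacobian, convergence would depend on the estimation quality.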

LaSeSOM: A Latent Representation Framework for Semantic Soft Object Manipulation

Dec 10, 2020

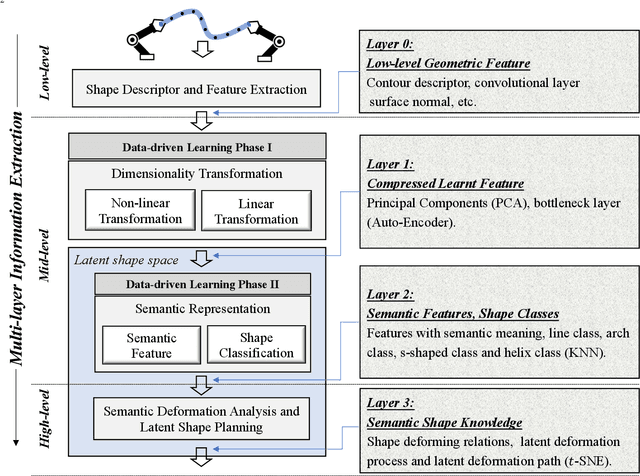

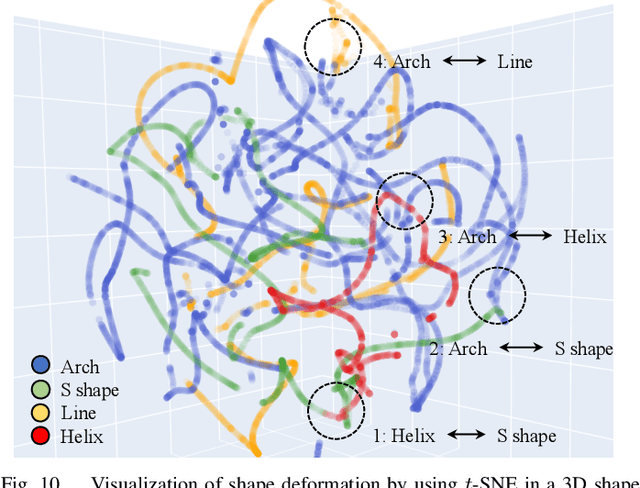

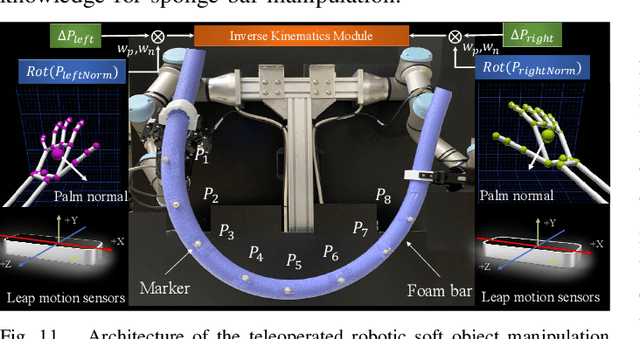

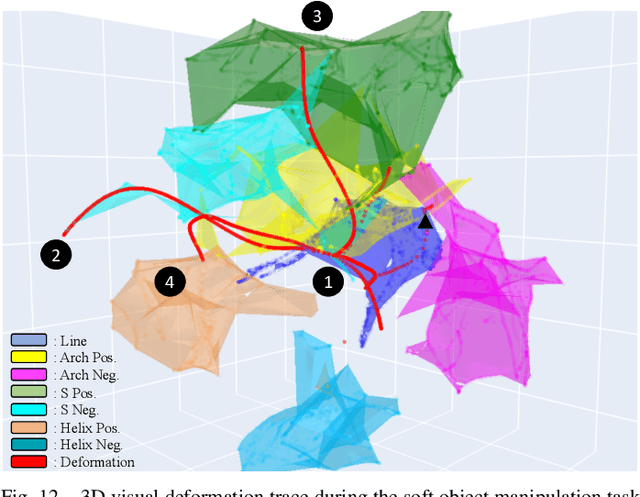

Abstract: Soft object manipulation has recently gained popularity within the robotics community due to its potential applications in many economically important areas. Although great progress has recently been achieved in these types of tasks, most state-of-the-art methods are case-specific; they can only be used to perform a single deformation task (e.g. bending), as their shape representation algorithms typically rely on "hard-coded" features. In this paper, we present LaSeSOM, a new feedback latent representation framework for semantic soft object manipulation. Our new method introduces internal latent representation layers between low-level geometric feature extraction and high-level semantic shape analysis; this allows the identification of each compressed semantic function and the formation of a valid shape classifier from different feature extraction levels. The proposed latent framework makes soft object representation more generic (independent of the object's geometry and its mechanical properties) and scalable (it can work with 1D/2D/3D tasks). Its high-level semantic layer makes it possible to perform (quasi) shape planning tasks with soft objects, a valuable and underexplored capability in many soft manipulation tasks. To validate this new methodology, we report a detailed experimental study with robotic manipulators.

Robust Autonomous Landing of UAV in Non-Cooperative Environments based on Dynamic Time Camera-LiDAR Fusion

Nov 27, 2020

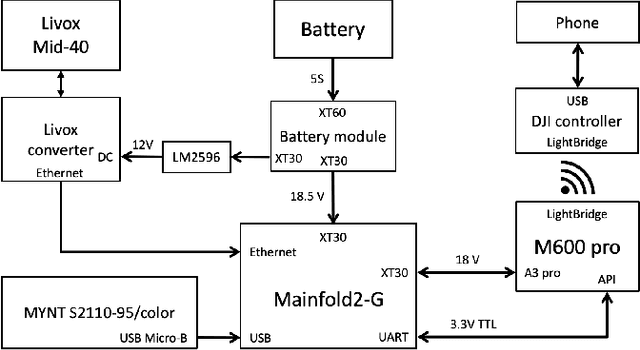

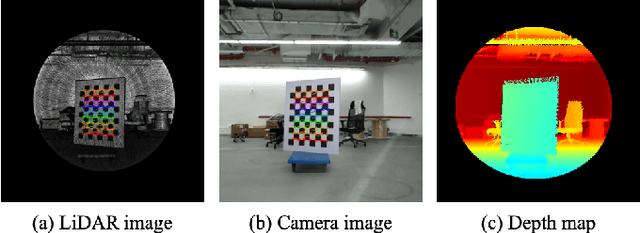

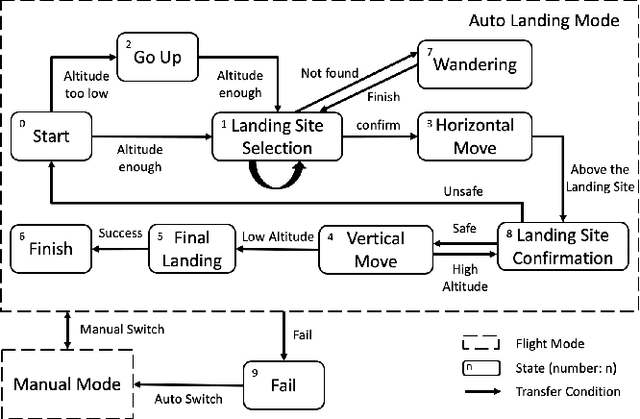

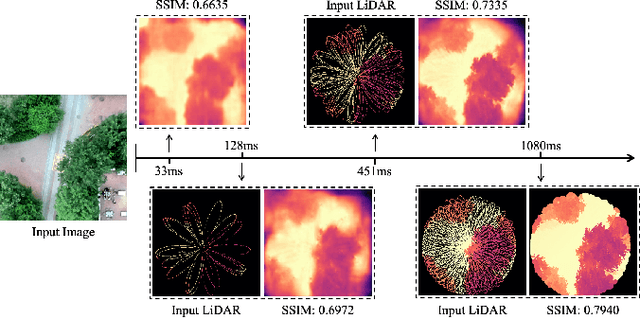

Abstract: Selecting safe landing sites in non-cooperative environments is a key step towards the full autonomy of UAVs. However, existing methods commonly suffer from poor generalization and robustness. Their performance degrades significantly in unknown environments, and their errors cannot be self-detected and corrected. In this paper, we construct a UAV system equipped with low-cost LiDAR and binocular cameras to realize autonomous landing in non-cooperative environments by detecting flat and safe ground areas. Taking advantage of the non-repetitive scanning and high FOV coverage characteristics of LiDAR, we propose a dynamic time depth completion algorithm. In conjunction with the proposed self-evaluation method for the depth map, our model can dynamically select the LiDAR accumulation time at the inference phase to ensure an accurate prediction result. Based on the depth map, high-level terrain information such as slope, roughness, and the size of the safe area is derived. We have conducted extensive autonomous landing experiments in a variety of familiar and completely unknown environments, verifying that our model can adaptively balance accuracy and speed, and that the UAV can robustly select a safe landing site.
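One common way to obtain slope and roughness from terrain data is to fit a plane to a local patch and inspect the residuals. The sketch below does this for a full rectangular height grid; it is an illustrative assumption, not the paper's procedure. The function name `terrain_stats`, the `cell_size` parameter, and the idea that the depth map has already been converted to a height grid are all hypothetical.

```python
import math


def terrain_stats(height, cell_size=1.0):
    """Fit a plane z = a*x + b*y + c to a full rectangular height grid.

    Returns (slope in degrees, roughness as RMS residual from the plane).
    With coordinates centred on the grid, the least-squares normal
    equations decouple, so a and b can be computed independently.
    """
    rows, cols = len(height), len(height[0])
    xc, yc = (cols - 1) / 2.0, (rows - 1) / 2.0
    n = rows * cols
    mean_z = sum(sum(row) for row in height) / n

    sxx = syy = sxz = syz = 0.0
    for i, row in enumerate(height):
        for j, z in enumerate(row):
            x, y = (j - xc) * cell_size, (i - yc) * cell_size
            sxx += x * x
            syy += y * y
            sxz += x * z
            syz += y * z
    a = sxz / sxx if sxx else 0.0
    b = syz / syy if syy else 0.0

    sq_residual = 0.0
    for i, row in enumerate(height):
        for j, z in enumerate(row):
            x, y = (j - xc) * cell_size, (i - yc) * cell_size
            sq_residual += (z - (mean_z + a * x + b * y)) ** 2

    slope_deg = math.degrees(math.atan(math.hypot(a, b)))
    roughness = math.sqrt(sq_residual / n)
    return slope_deg, roughness


# Illustrative patch: a perfect ramp rising 0.1 units per cell along x.
ramp = [[0.1 * j for j in range(5)] for _ in range(4)]
ramp_slope, ramp_roughness = terrain_stats(ramp)
```

A landing-site selector could then threshold both values per patch; suitable thresholds depend on the vehicle and are not given in the abstract.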

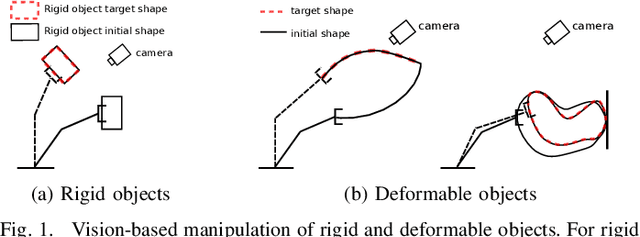

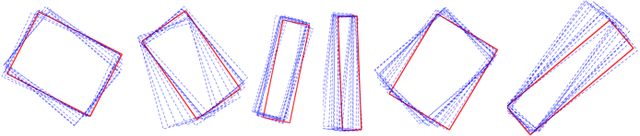

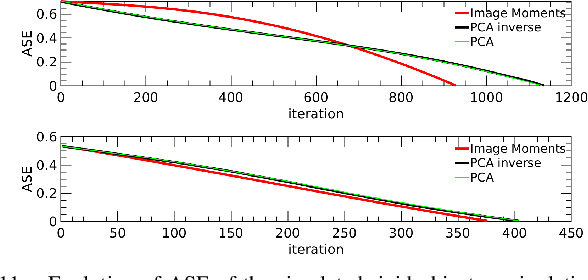

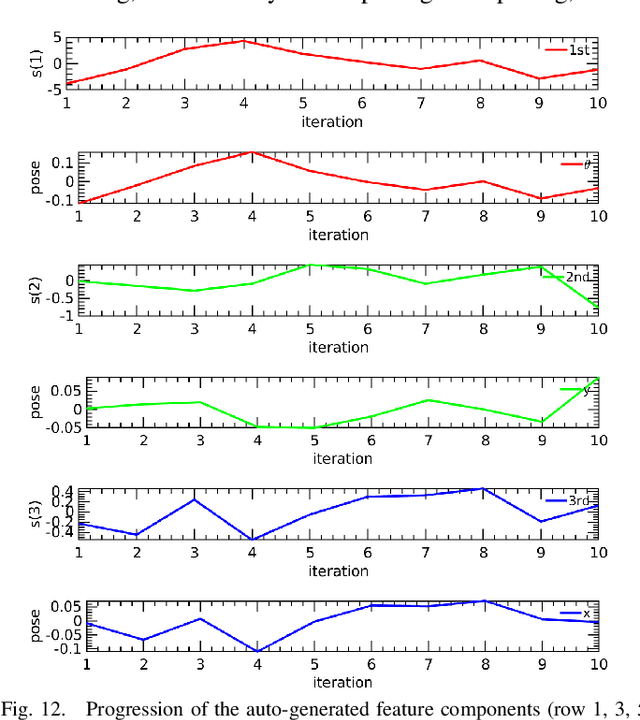

Vision-based Manipulation of Deformable and Rigid Objects Using Subspace Projections of 2D Contours

Jun 16, 2020

Abstract: This paper proposes a unified vision-based manipulation framework using image contours of deformable/rigid objects. Instead of using human-defined cues, the robot automatically learns the features from processed vision data. From the same data, our method simultaneously generates both the visual features and the interaction matrix that relates them to the robot control inputs. Extraction of the feature vector and control commands is done online and adaptively, with little data for initialization. The method allows the robot to manipulate an object without knowing whether it is rigid or deformable. To validate our approach, we conduct numerical simulations and experiments with both deformable and rigid objects.

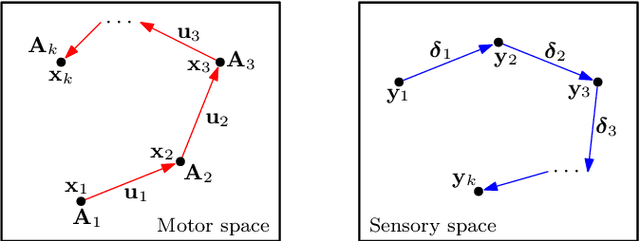

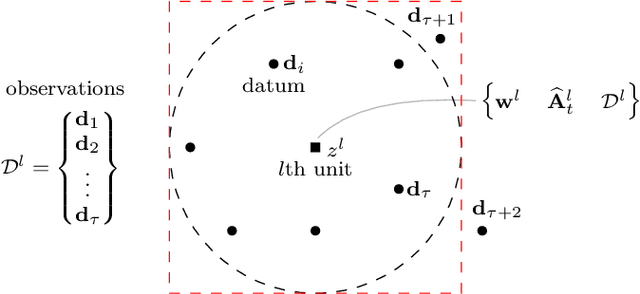

A Lyapunov-Stable Adaptive Method to Approximate Sensorimotor Models for Sensor-Based Control

Apr 25, 2020

Abstract: In this article, we present a new scheme that approximates unknown sensorimotor models of robots by using feedback signals only. We first formulate the uncalibrated sensor-based control problem; then we develop a computational method that distributes the model estimation problem amongst multiple adaptive units, each of which specialises in a local sensorimotor map. Unlike traditional estimation algorithms, the proposed method requires little data to train and constrain it (the number of required data points can be analytically determined) and has rigorous stability properties (the conditions to satisfy Lyapunov stability are derived). Numerical simulations and experimental results are presented to validate the proposed method.
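To give a feel for estimating a sensorimotor map from feedback alone, the sketch below runs a normalised-gradient (LMS-style) update on a single Jacobian estimate. This is a simpler, well-known technique swapped in for illustration; it is not the multi-unit scheme described in the abstract and carries none of its stability guarantees. The function `update_jacobian`, the 2x2 `true_j`, the probing motions, and the gain are all hypothetical.

```python
def update_jacobian(j_hat, dr, ds, gain=0.5):
    """Apply one normalised-gradient update to a Jacobian estimate.

    j_hat: current estimate (list of rows)
    dr:    applied motion increment
    ds:    observed change in the sensor features
    """
    predicted = [sum(row[k] * dr[k] for k in range(len(dr))) for row in j_hat]
    norm = sum(d * d for d in dr)
    if norm < 1e-12:
        return j_hat  # no motion, so no information to learn from
    return [
        [j_hat[i][k] + gain * (ds[i] - predicted[i]) * dr[k] / norm
         for k in range(len(dr))]
        for i in range(len(ds))
    ]


# Hypothetical ground-truth map, used only to generate feedback signals.
true_j = [[2.0, 0.5], [0.3, 1.5]]
j_hat = [[1.0, 0.0], [0.0, 1.0]]      # initial guess
motions = [[0.1, 0.0], [0.0, 0.1]]    # alternate small probing motions

for step in range(40):
    dr = motions[step % 2]
    ds = [sum(true_j[i][k] * dr[k] for k in range(2)) for i in range(2)]
    j_hat = update_jacobian(j_hat, dr, ds)

max_err = max(abs(j_hat[i][k] - true_j[i][k])
              for i in range(2) for k in range(2))
```

The probing motions here excite both input directions; if every motion lay along one direction, only the corresponding column of the estimate would converge.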

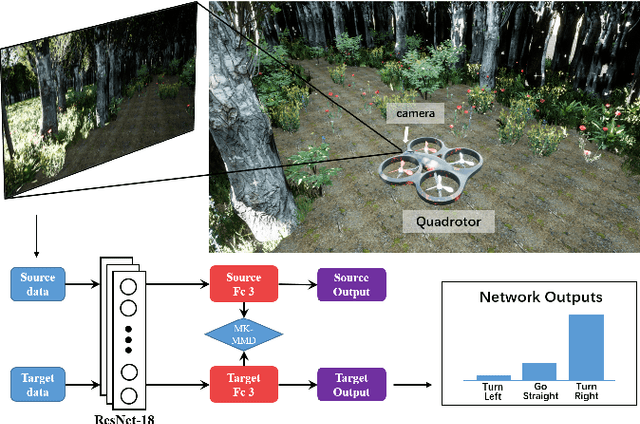

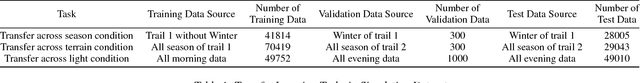

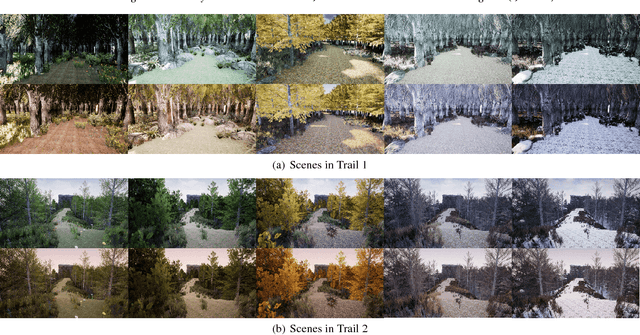

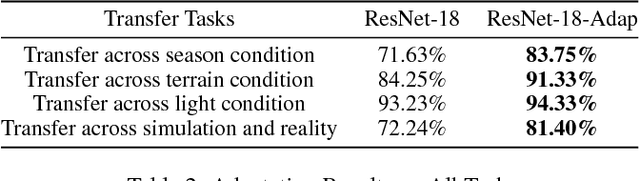

Learning Transferable UAV for Forest Visual Perception

Jun 10, 2018

Abstract: In this paper, we propose a new pipeline for training a monocular UAV to fly a collision-free trajectory along a dense forest trail. As gathering high-precision images in the real world is expensive and the off-the-shelf dataset has some deficiencies, we collect a new dense forest trail dataset in a variety of simulated environments in Unreal Engine. We then formulate visual perception of forests as a classification problem. A ResNet-18 model is trained to decide the moving direction frame by frame. To transfer the learned strategy to the real world, we construct a ResNet-18 adaptation model via multi-kernel maximum mean discrepancies to leverage the relevant labelled data and alleviate the discrepancy between simulated and real environments. Both simulated and real-world flights are tested under a variety of appearance and environment changes. The ResNet-18 adaptation model and its variant achieve the best result of 84.08% accuracy in reality.
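The multi-kernel maximum mean discrepancy mentioned above measures how far apart two feature distributions are. The sketch below computes a biased estimate of the squared MMD with a uniformly weighted mixture of Gaussian kernels. It is a minimal illustration only: the bandwidths, the uniform kernel weights, and the tiny 2-D feature sets are assumptions, and the abstract does not say how the paper weights its kernels or which network layers it applies the loss to.

```python
import math


def multi_kernel(x, y, bandwidths):
    """Average of Gaussian kernels with different bandwidths."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return sum(math.exp(-sq_dist / (2.0 * s * s)) for s in bandwidths) / len(bandwidths)


def mk_mmd2(source, target, bandwidths=(0.5, 1.0, 2.0)):
    """Biased estimate of squared MMD between two sets of feature vectors."""
    def mean_kernel(set_a, set_b):
        total = sum(multi_kernel(a, b, bandwidths) for a in set_a for b in set_b)
        return total / (len(set_a) * len(set_b))

    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))


# Illustrative features: "simulated" samples and a shifted "real" set.
sim_features = [[0.0, 0.0], [0.1, -0.1], [-0.2, 0.1], [0.05, 0.2]]
real_features = [[x + 1.0, y + 1.0] for x, y in sim_features]

same_domain = mk_mmd2(sim_features, sim_features)
cross_domain = mk_mmd2(sim_features, real_features)
```

In a domain adaptation setup, a term like `cross_domain` would typically be added to the classification loss so that training pulls the simulated and real feature distributions together.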
