Jan-Willem van de Meent

Towards Reducing Diagnostic Errors with Interpretable Risk Prediction

Feb 15, 2024Abstract:Many diagnostic errors occur because clinicians cannot easily access relevant information in patient Electronic Health Records (EHRs). In this work we propose a method to use LLMs to identify pieces of evidence in patient EHR data that indicate increased or decreased risk of specific diagnoses; our ultimate aim is to increase access to evidence and reduce diagnostic errors. In particular, we propose a Neural Additive Model to make predictions backed by evidence with individualized risk estimates at time-points where clinicians are still uncertain, aiming to specifically mitigate delays in diagnosis and errors stemming from an incomplete differential. To train such a model, it is necessary to infer temporally fine-grained retrospective labels of eventual "true" diagnoses. We do so with LLMs, to ensure that the input text is from before a confident diagnosis can be made. We use an LLM to retrieve an initial pool of evidence, but then refine this set of evidence according to correlations learned by the model. We conduct an in-depth evaluation of the usefulness of our approach by simulating how it might be used by a clinician to decide between a pre-defined list of differential diagnoses.

Topological Obstructions and How to Avoid Them

Dec 12, 2023

Abstract:Incorporating geometric inductive biases into models can aid interpretability and generalization, but encoding to a specific geometric structure can be challenging due to the imposed topological constraints. In this paper, we theoretically and empirically characterize obstructions to training encoders with geometric latent spaces. We show that local optima can arise due to singularities (e.g. self-intersection) or due to an incorrect degree or winding number. We then discuss how normalizing flows can potentially circumvent these obstructions by defining multimodal variational distributions. Inspired by this observation, we propose a new flow-based model that maps data points to multimodal distributions over geometric spaces and empirically evaluate our model on 2 domains. We observe improved stability during training and a higher chance of converging to a homeomorphic encoder.

One-shot Imitation Learning via Interaction Warping

Jun 21, 2023

Abstract:Imitation learning of robot policies from few demonstrations is crucial in open-ended applications. We propose a new method, Interaction Warping, for learning SE(3) robotic manipulation policies from a single demonstration. We infer the 3D mesh of each object in the environment using shape warping, a technique for aligning point clouds across object instances. Then, we represent manipulation actions as keypoints on objects, which can be warped with the shape of the object. We show successful one-shot imitation learning on three simulated and real-world object re-arrangement tasks. We also demonstrate the ability of our method to predict object meshes and robot grasps in the wild.

String Diagrams with Factorized Densities

May 04, 2023

Abstract:A growing body of research on probabilistic programs and causal models has highlighted the need to reason compositionally about model classes that extend directed graphical models. Both probabilistic programs and causal models define a joint probability density over a set of random variables, and exhibit sparse structure that can be used to reason about causation and conditional independence. This work builds on recent work on Markov categories of probabilistic mappings to define a category whose morphisms combine a joint density, factorized over each sample space, with a deterministic mapping from samples to return values. This is a step towards closing the gap between recent category-theoretic descriptions of probability measures, and the operational definitions of factorized densities that are commonly employed in probabilistic programming and causal inference.

CHiLL: Zero-shot Custom Interpretable Feature Extraction from Clinical Notes with Large Language Models

Feb 23, 2023

Abstract:Large Language Models (LLMs) have yielded fast and dramatic progress in NLP, and now offer strong few- and zero-shot capabilities on new tasks, reducing the need for annotation. This is especially exciting for the medical domain, in which supervision is often scant and expensive. At the same time, model predictions are rarely so accurate that they can be trusted blindly. Clinicians therefore tend to favor "interpretable" classifiers over opaque LLMs. For example, risk prediction tools are often linear models defined over manually crafted predictors that must be laboriously extracted from EHRs. We propose CHiLL (Crafting High-Level Latents), which uses LLMs to permit natural language specification of high-level features for linear models via zero-shot feature extraction using expert-composed queries. This approach has the promise to empower physicians to use their domain expertise to craft features which are clinically meaningful for a downstream task of interest, without having to manually extract these from raw EHR (as often done now). We are motivated by a real-world risk prediction task, but as a reproducible proxy, we use MIMIC-III and MIMIC-CXR data and standard predictive tasks (e.g., 30-day readmission) to evaluate our approach. We find that linear models using automatically extracted features are comparably performant to models using reference features, and provide greater interpretability than linear models using "Bag-of-Words" features. We verify that learned feature weights align well with clinical expectations.

Verified Reversible Programming for Verified Lossless Compression

Nov 02, 2022

Abstract:Lossless compression implementations typically contain two programs, an encoder and a decoder, which are required to be inverse to one another. Maintaining consistency between two such programs during development requires care, and incorrect data decoding can be costly and difficult to debug. We observe that a significant class of compression methods, based on asymmetric numeral systems (ANS), have shared structure between the encoder and decoder -- the decoder program is the 'reverse' of the encoder program -- allowing both to be simultaneously specified by a single, reversible, 'codec' function. To exploit this, we have implemented a small reversible language, embedded in Agda, which we call 'Flipper'. Agda supports formal verification of program properties, and the compiler for our reversible language (which is implemented as an Agda macro), produces not just an encoder/decoder pair of functions but also a proof that they are inverse to one another. Thus users of the language get formal verification 'for free'. We give a small example use-case of Flipper in this paper, and plan to publish a full compression implementation soon.

A Variational Perspective on Generative Flow Networks

Oct 14, 2022

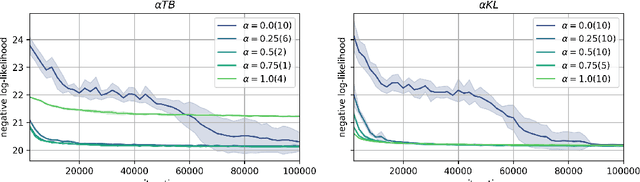

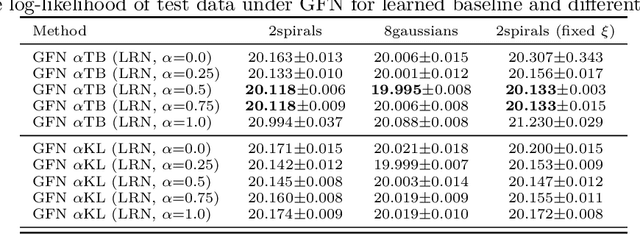

Abstract:Generative flow networks (GFNs) are a class of models for sequential sampling of composite objects, which approximate a target distribution that is defined in terms of an energy function or a reward. GFNs are typically trained using a flow matching or trajectory balance objective, which matches forward and backward transition models over trajectories. In this work, we define variational objectives for GFNs in terms of the Kullback-Leibler (KL) divergences between the forward and backward distribution. We show that variational inference in GFNs is equivalent to minimizing the trajectory balance objective when sampling trajectories from the forward model. We generalize this approach by optimizing a convex combination of the reverse- and forward KL divergence. This insight suggests variational inference methods can serve as a means to define a more general family of objectives for training generative flow networks, for example by incorporating control variates, which are commonly used in variational inference, to reduce the variance of the gradients of the trajectory balance objective. We evaluate our findings and the performance of the proposed variational objective numerically by comparing it to the trajectory balance objective on two synthetic tasks.

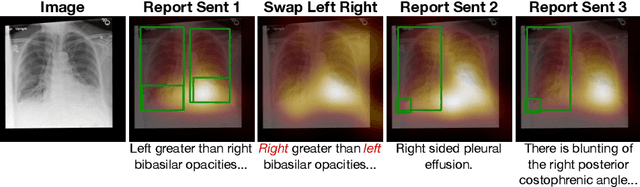

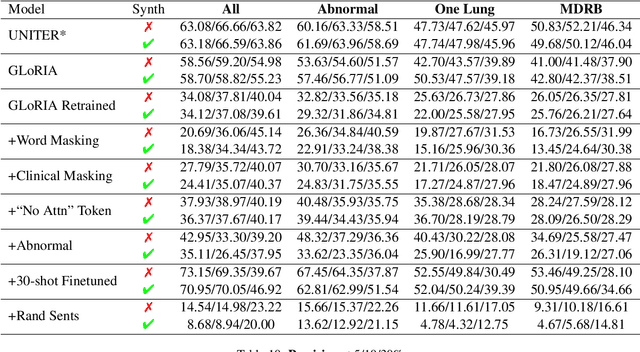

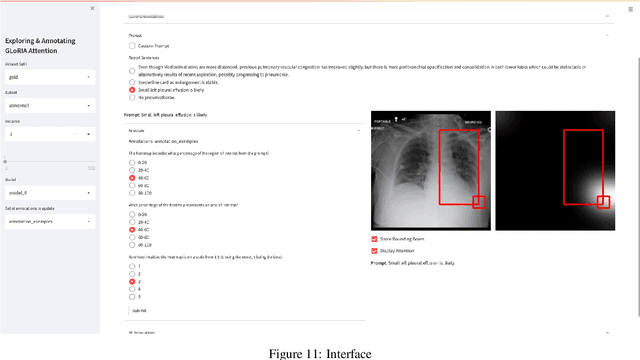

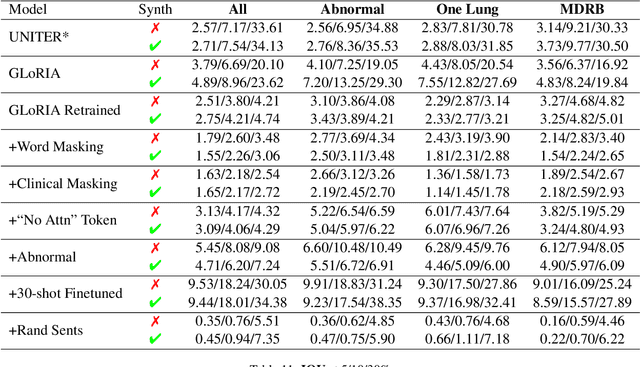

That's the Wrong Lung! Evaluating and Improving the Interpretability of Unsupervised Multimodal Encoders for Medical Data

Oct 12, 2022

Abstract:Pretraining multimodal models on Electronic Health Records (EHRs) provides a means of learning representations that can transfer to downstream tasks with minimal supervision. Recent multimodal models induce soft local alignments between image regions and sentences. This is of particular interest in the medical domain, where alignments might highlight regions in an image relevant to specific phenomena described in free-text. While past work has suggested that attention "heatmaps" can be interpreted in this manner, there has been little evaluation of such alignments. We compare alignments from a state-of-the-art multimodal (image and text) model for EHR with human annotations that link image regions to sentences. Our main finding is that the text has an often weak or unintuitive influence on attention; alignments do not consistently reflect basic anatomical information. Moreover, synthetic modifications -- such as substituting "left" for "right" -- do not substantially influence highlights. Simple techniques such as allowing the model to opt out of attending to the image and few-shot finetuning show promise in terms of their ability to improve alignments with very little or no supervision.

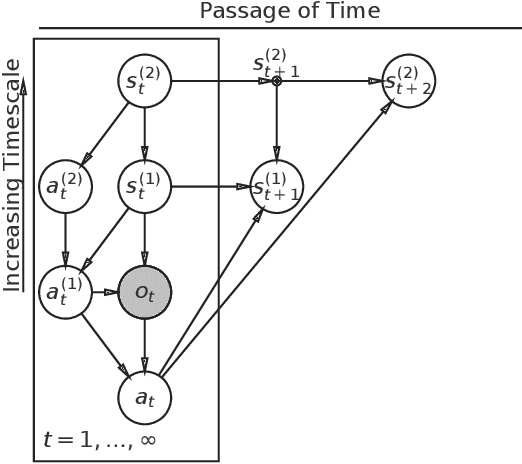

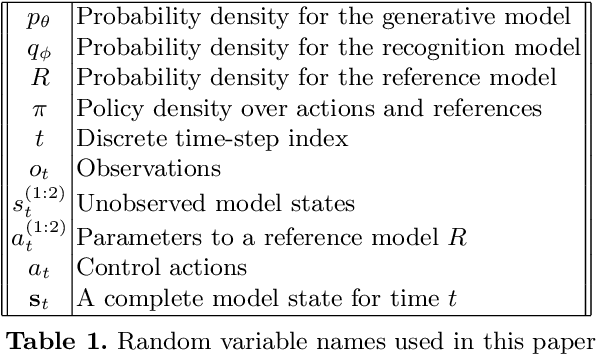

Deriving time-averaged active inference from control principles

Aug 22, 2022

Abstract:Active inference offers a principled account of behavior as minimizing average sensory surprise over time. Applications of active inference to control problems have heretofore tended to focus on finite-horizon or discounted-surprise problems, despite deriving from the infinite-horizon, average-surprise imperative of the free-energy principle. Here we derive an infinite-horizon, average-surprise formulation of active inference from optimal control principles. Our formulation returns to the roots of active inference in neuroanatomy and neurophysiology, formally reconnecting active inference to optimal feedback control. Our formulation provides a unified objective functional for sensorimotor control and allows for reference states to vary over time.

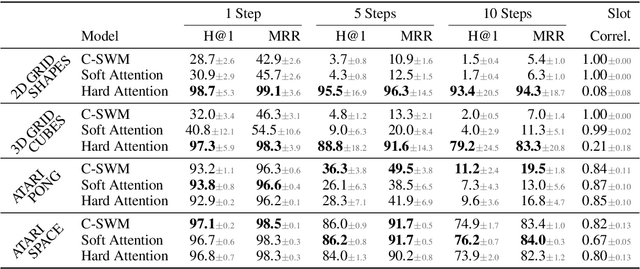

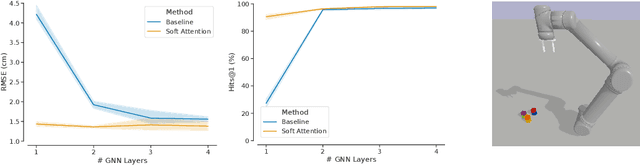

Binding Actions to Objects in World Models

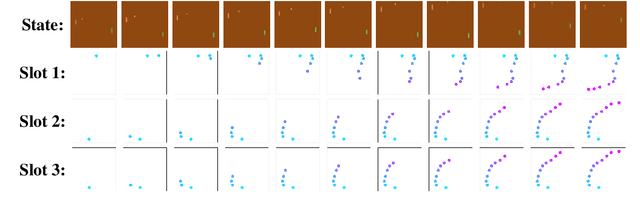

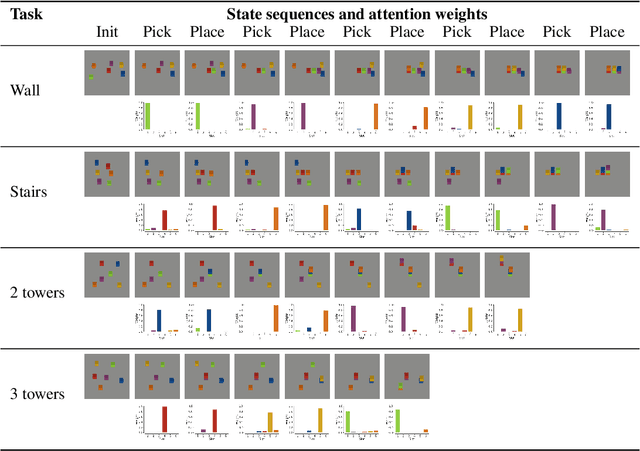

Apr 27, 2022

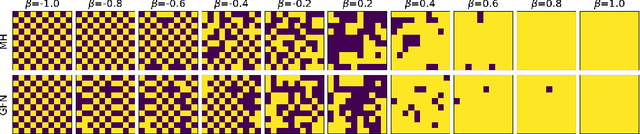

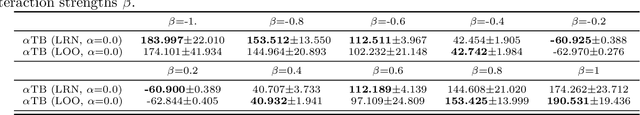

Abstract:We study the problem of binding actions to objects in object-factored world models using action-attention mechanisms. We propose two attention mechanisms for binding actions to objects, soft attention and hard attention, which we evaluate in the context of structured world models for five environments. Our experiments show that hard attention helps contrastively-trained structured world models to learn to separate individual objects in an object-based grid-world environment. Further, we show that soft attention increases performance of factored world models trained on a robotic manipulation task. The learned action attention weights can be used to interpret the factored world model as the attention focuses on the manipulated object in the environment.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge