Put Chatbot into Its Interlocutor's Shoes: New Framework to Learn Chatbot Responding with Intention

Apr 23, 2021 Hsuan Su, Jiun-Hao Jhan, Fan-yun Sun, Saurav Sahay, Hung-yi Lee

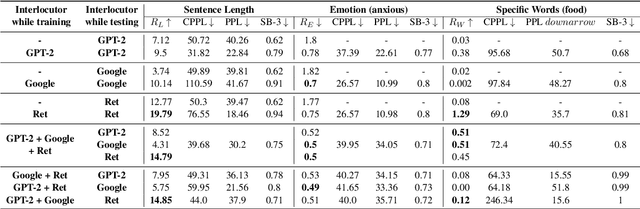

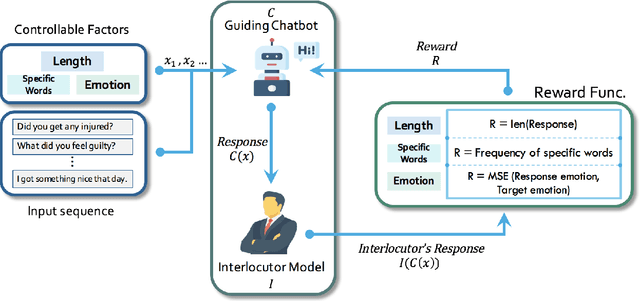

Most chatbot literature, which focuses on improving the fluency and coherence of a chatbot, is dedicated to making chatbots more human-like. However, very little work delves into what really separates humans from chatbots: humans intrinsically understand the effect their responses have on the interlocutor and often respond with an intention, such as proposing an optimistic view to make the interlocutor feel better. This paper proposes an innovative framework to train chatbots to possess human-like intentions. Our framework includes a guiding chatbot and an interlocutor model that plays the role of humans. The guiding chatbot is assigned an intention and learns to induce the interlocutor to reply with responses matching the intention, for example, long responses, joyful responses, or responses with specific words. We examined our framework using three experimental setups and evaluated the guiding chatbot with four different metrics to demonstrate flexibility and performance advantages. Additionally, we performed trials with human interlocutors to substantiate the guiding chatbot's effectiveness in influencing the responses of humans to a certain extent. Code will be made available to the public.

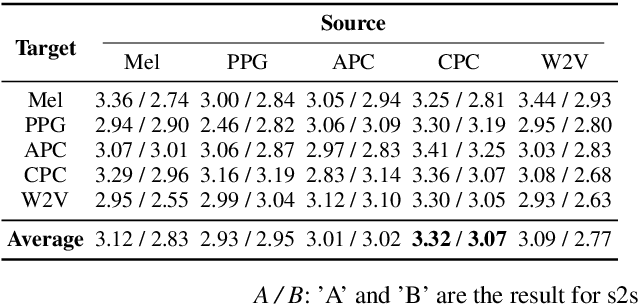

Utilizing Self-supervised Representations for MOS Prediction

Apr 21, 2021 Wei-Cheng Tseng, Chien-yu Huang, Wei-Tsung Kao, Yist Y. Lin, Hung-yi Lee

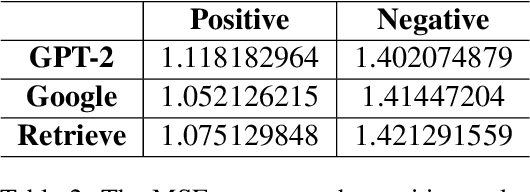

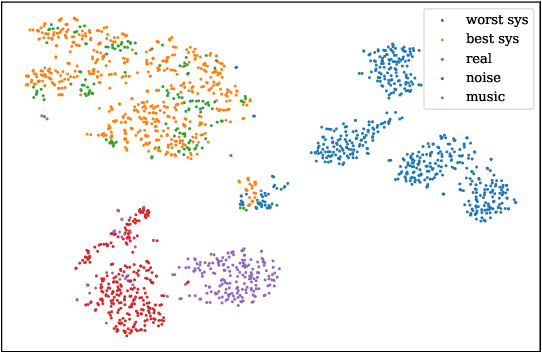

Speech quality assessment has been a critical issue in speech processing for decades. Existing automatic evaluations usually require clean references or parallel ground truth data, which is infeasible when the amount of data soars. Subjective tests, on the other hand, do not need any additional clean or parallel data and correlate better with human perception. However, such a test is expensive and time-consuming because crowd work is necessary. It is thus highly desirable to develop an automatic evaluation approach that correlates well with human perception while not requiring ground truth data. In this paper, we use self-supervised pre-trained models for MOS prediction. We show that their representations can distinguish between clean and noisy audio. Then, we fine-tune these pre-trained models followed by simple linear layers in an end-to-end manner. The experimental results show that our framework significantly outperforms the two previous state-of-the-art models on Voice Conversion Challenge 2018 and achieves comparable or superior performance on Voice Conversion Challenge 2016. We also conducted an ablation study to further investigate how each module benefits the task. The experiments are implemented with publicly available toolkits and are reproducible.
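As a rough illustration of "pre-trained representations followed by simple linear layers", the sketch below pools frame-level feature vectors into one utterance-level vector and applies a single linear layer to produce a MOS estimate. The mean pooling, the function names, and the toy numbers are all assumptions made for illustration; the abstract does not specify the pooling or the layer sizes, and fine-tuning of the upstream model is omitted here.

```python
def mean_pool(frames):
    """Average frame-level feature vectors into one utterance-level vector."""
    n = len(frames)
    dim = len(frames[0])
    return [sum(frame[d] for frame in frames) / n for d in range(dim)]


def linear(vec, weights, bias):
    """Single linear layer mapping a feature vector to a scalar."""
    return sum(w * x for w, x in zip(weights, vec)) + bias


def predict_mos(frames, weights, bias):
    """Hypothetical MOS head: mean pooling (an assumption) plus one linear layer."""
    return linear(mean_pool(frames), weights, bias)


# Toy 2-dimensional "representations" for a 2-frame utterance (made-up values).
frames = [[1.0, 2.0], [3.0, 4.0]]
score = predict_mos(frames, weights=[0.5, 0.5], bias=1.0)
print(score)
```

In a real system the frame vectors would come from a self-supervised speech model, and the weights would be learned jointly with that model rather than set by hand.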

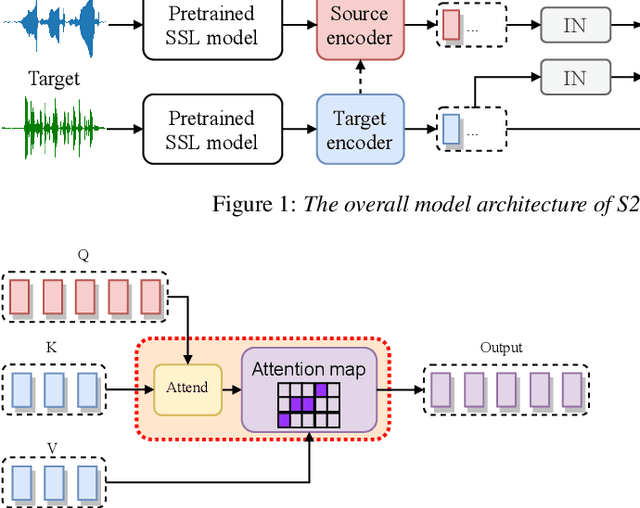

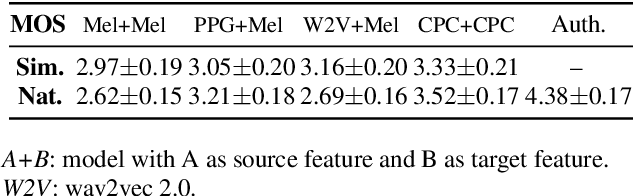

S2VC: A Framework for Any-to-Any Voice Conversion with Self-Supervised Pretrained Representations

Apr 07, 2021 Jheng-hao Lin, Yist Y. Lin, Chung-Ming Chien, Hung-yi Lee

Any-to-any voice conversion (VC) aims to convert the timbre of utterances from and to any speakers, seen or unseen during training. Various any-to-any VC approaches have been proposed, such as AUTOVC, AdaINVC, and FragmentVC. AUTOVC and AdaINVC utilize source and target encoders to disentangle the content and speaker information of the features. FragmentVC utilizes two encoders to encode source and target information and adopts cross attention to align the source and target features with similar phonetic content. Moreover, pre-trained features are adopted: AUTOVC uses d-vector to extract speaker information, and self-supervised learning (SSL) features such as wav2vec 2.0 are used in FragmentVC to extract the phonetic content information. Different from previous works, we propose S2VC, which utilizes self-supervised features as both source and target features for the VC model. The supervised phoneme posteriorgram (PPG), which is believed to be speaker-independent and is widely used in VC to extract content information, is chosen as a strong baseline for SSL features. Both the objective and subjective evaluations show that a model taking the SSL feature CPC as both source and target features outperforms one taking PPG as the source feature, suggesting that SSL features have great potential in improving VC.

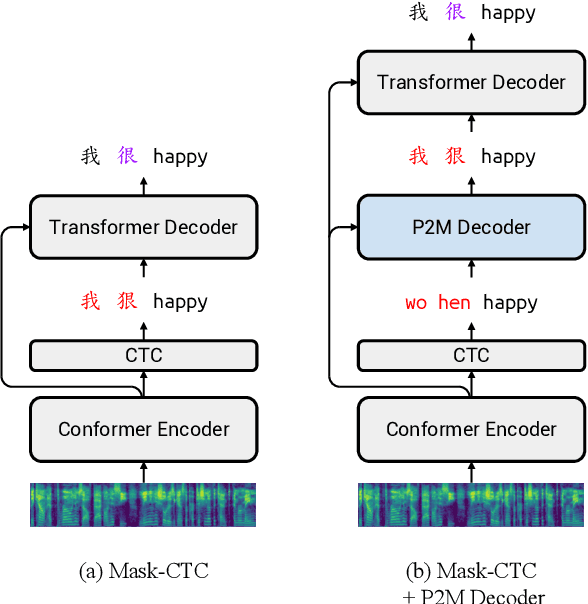

Non-autoregressive Mandarin-English Code-switching Speech Recognition with Pinyin Mask-CTC and Word Embedding Regularization

Apr 06, 2021 Shun-Po Chuang, Heng-Jui Chang, Sung-Feng Huang, Hung-yi Lee

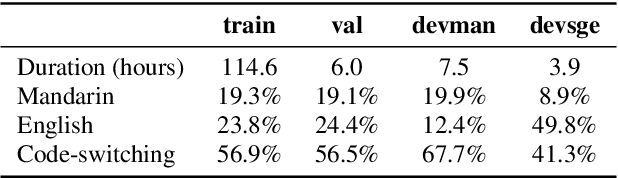

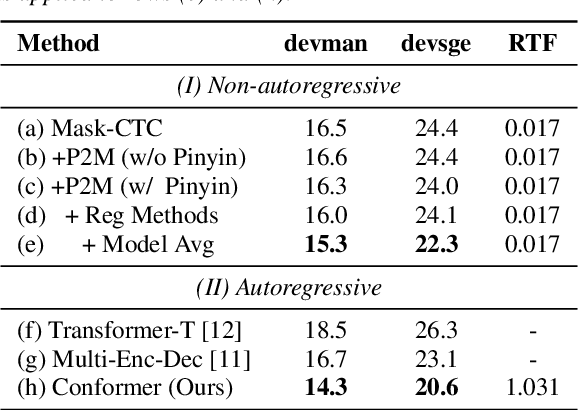

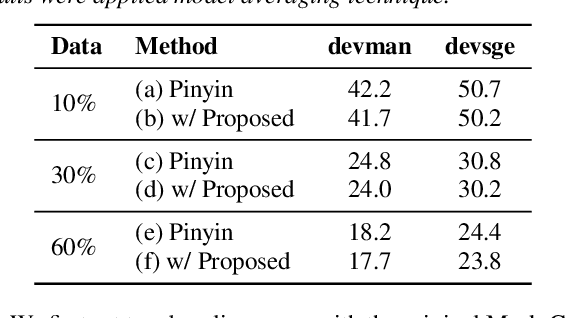

Mandarin-English code-switching (CS) is frequently used among East and Southeast Asian people. However, the intra-sentence language switching of the two very different languages makes recognizing CS speech challenging. Meanwhile, recent successful non-autoregressive (NAR) ASR models remove the need for left-to-right beam decoding in autoregressive (AR) models and achieve outstanding performance and fast inference speed. Therefore, in this paper, we take advantage of the Mask-CTC NAR ASR framework to tackle the CS speech recognition issue. We propose changing the Mandarin output target of the encoder to Pinyin for faster encoder training, and introduce a Pinyin-to-Mandarin decoder to learn contextualized information. Moreover, we propose word embedding label smoothing to regularize the decoder with contextualized information and projection matrix regularization to bridge the gap between the encoder and decoder. We evaluate the proposed methods on the SEAME corpus and achieve exciting results.
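For readers unfamiliar with the Mask-CTC idea, the following minimal sketch shows its first stage under simplifying assumptions: frame-level greedy CTC labels are collapsed into a token sequence, and tokens whose confidence falls below a threshold are replaced by a mask symbol that a decoder would later fill in. The token strings, probabilities, threshold, and the choice of the per-run maximum as token confidence are illustrative assumptions, not details taken from the paper; the Pinyin-to-Mandarin decoder itself is not modeled.

```python
BLANK = "<blank>"
MASK = "<mask>"


def ctc_greedy_collapse(frame_tokens, frame_probs):
    """Merge repeated frame labels and drop blanks.

    The confidence of each output token is the highest frame probability
    within its run (an assumption made for this sketch).
    """
    tokens, confs = [], []
    prev = None
    for tok, prob in zip(frame_tokens, frame_probs):
        if tok == prev:
            if tok != BLANK:
                confs[-1] = max(confs[-1], prob)
        elif tok != BLANK:
            tokens.append(tok)
            confs.append(prob)
        prev = tok
    return tokens, confs


def mask_low_confidence(tokens, confs, threshold):
    """Replace tokens below the confidence threshold with a mask symbol."""
    return [t if c >= threshold else MASK for t, c in zip(tokens, confs)]


# Hypothetical frame-level output mixing Pinyin-like and English tokens.
frame_tokens = ["ni3", "ni3", BLANK, "hao3", "hao3", BLANK, BLANK, "world"]
frame_probs = [0.90, 0.95, 0.99, 0.40, 0.60, 0.90, 0.90, 0.97]

tokens, confs = ctc_greedy_collapse(frame_tokens, frame_probs)
print(mask_low_confidence(tokens, confs, threshold=0.9))
```

In a full system, the masked positions would be predicted by a decoder conditioned on the unmasked tokens and the encoder output, which is where contextual information enters.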

Towards Lifelong Learning of End-to-end ASR

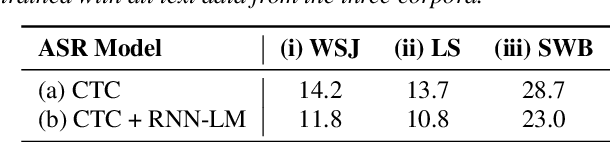

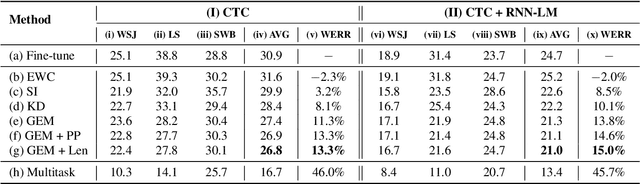

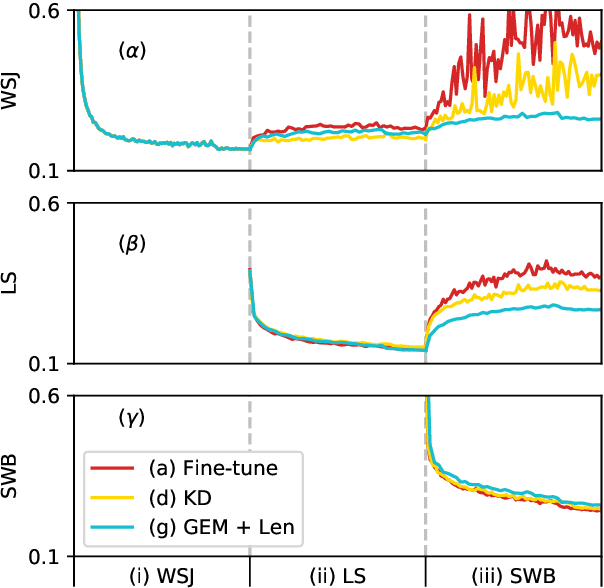

Apr 04, 2021 Heng-Jui Chang, Hung-yi Lee, Lin-shan Lee

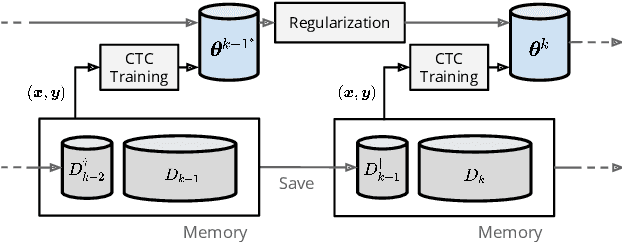

Automatic speech recognition (ASR) technologies today are primarily optimized for given datasets; thus, any changes in the application environment (e.g., acoustic conditions or topic domains) may inevitably degrade the performance. We can collect new data describing the new environment and fine-tune the system, but this naturally leads to higher error rates for the earlier datasets, referred to as catastrophic forgetting. The concept of lifelong learning (LLL) aiming to enable a machine to sequentially learn new tasks from new datasets describing the changing real world without forgetting the previously learned knowledge is thus brought to attention. This paper reports, to our knowledge, the first effort to extensively consider and analyze the use of various approaches of LLL in end-to-end (E2E) ASR, including proposing novel methods in saving data for past domains to mitigate the catastrophic forgetting problem. An overall relative reduction of 28.7% in WER was achieved compared to the fine-tuning baseline when sequentially learning on three very different benchmark corpora. This can be the first step toward the highly desired ASR technologies capable of synchronizing with the continuously changing real world.
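To make the "relative reduction in WER" figure concrete, the sketch below computes word error rate from a word-level edit distance and then the relative reduction between a baseline and an improved system. The function names and the example sentences are hypothetical and are not taken from the paper.

```python
def edit_distance(ref, hyp):
    """Word-level Levenshtein distance between two token lists."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(ref_text, hyp_text):
    """Word error rate: edit distance divided by the reference length."""
    ref = ref_text.split()
    hyp = hyp_text.split()
    return edit_distance(ref, hyp) / len(ref)


def relative_reduction(baseline_wer, new_wer):
    """Fraction by which new_wer improves on baseline_wer."""
    return (baseline_wer - new_wer) / baseline_wer


# Made-up example: one word is dropped from a five-word reference.
print(wer("the cat sat on mat", "the cat on mat"))
```

Under this definition, a relative reduction of 28.7% means the new WER is 71.3% of the fine-tuning baseline's WER, regardless of the absolute values involved.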

Auto-KWS 2021 Challenge: Task, Datasets, and Baselines

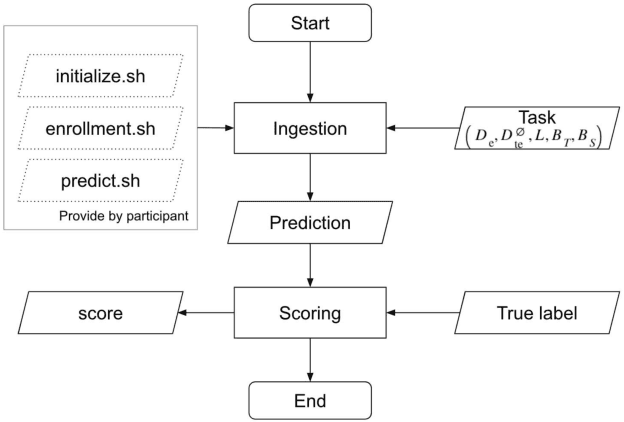

Mar 31, 2021 Jingsong Wang, Yuxuan He, Chunyu Zhao, Qijie Shao, Wei-Wei Tu, Tom Ko, Hung-yi Lee, Lei Xie

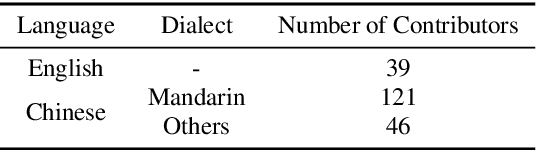

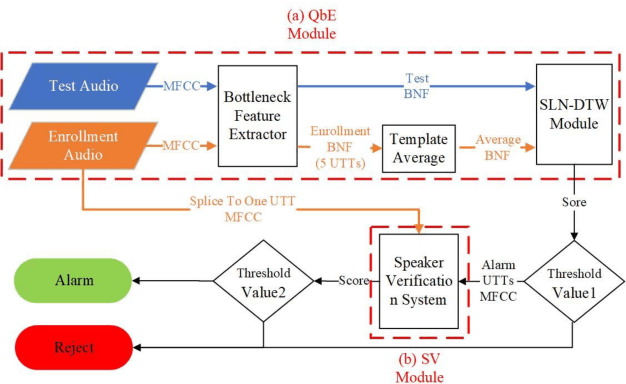

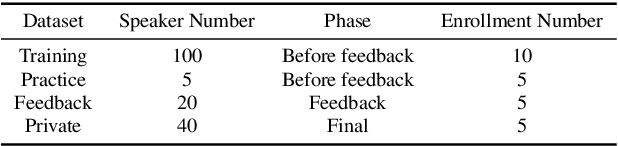

The Auto-KWS 2021 challenge calls for automated machine learning (AutoML) solutions to automate the process of applying machine learning to a customized keyword spotting task. Compared with other keyword spotting tasks, the Auto-KWS challenge has the following three characteristics: 1) The challenge focuses on the problem of customized keyword spotting, where the target device can only be awakened by an enrolled speaker with their specified keyword. The speaker can use any language and accent to define the keyword. 2) All data in the challenge are recorded in realistic environments to simulate different user scenarios. 3) Auto-KWS is a "code competition", where participants submit AutoML solutions and the platform automatically runs the enrollment and prediction steps with the submitted code. This challenge aims at promoting the development of more personalized and flexible keyword spotting systems. Two baseline systems are provided to all participants as references.

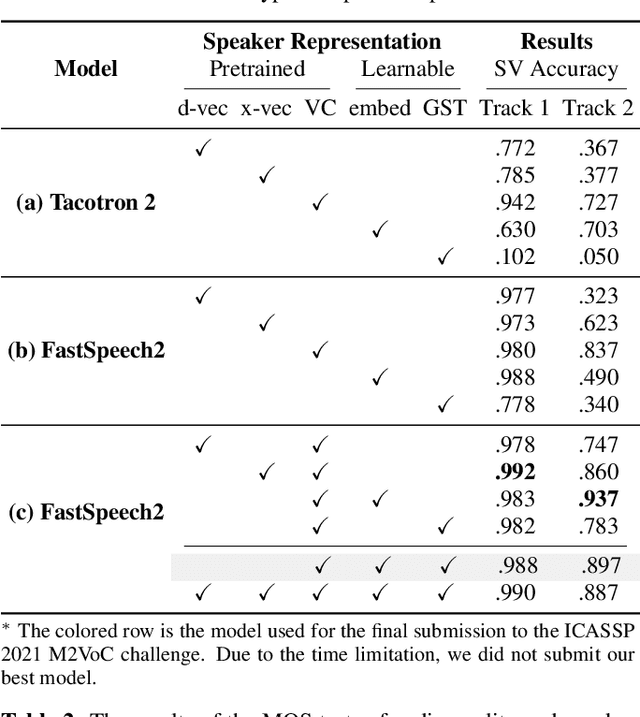

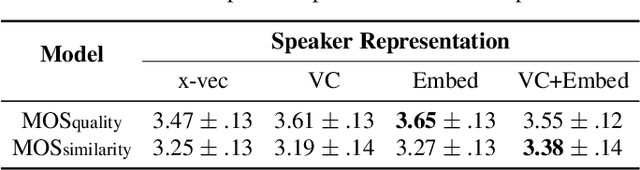

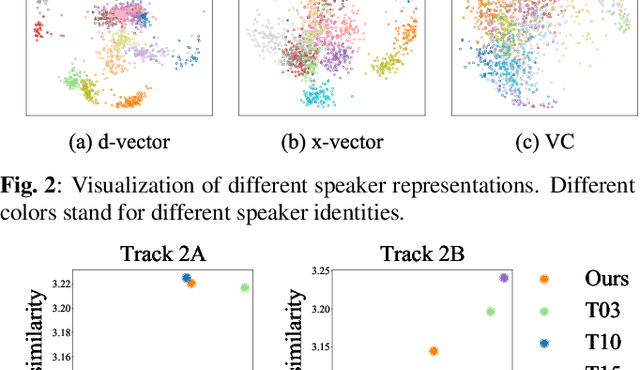

Investigating on Incorporating Pretrained and Learnable Speaker Representations for Multi-Speaker Multi-Style Text-to-Speech

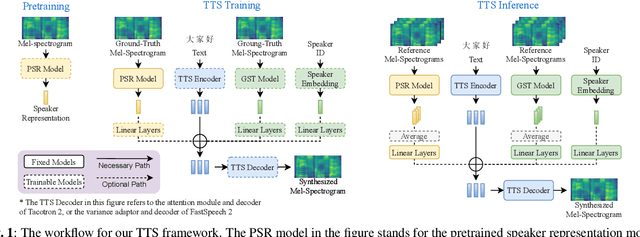

Mar 20, 2021 Chung-Ming Chien, Jheng-Hao Lin, Chien-yu Huang, Po-chun Hsu, Hung-yi Lee

The few-shot multi-speaker multi-style voice cloning task is to synthesize utterances with voice and speaking style similar to a reference speaker, given only a few reference samples. In this work, we investigate different speaker representations and propose integrating pretrained and learnable speaker representations. Among different types of embeddings, the embedding pretrained by voice conversion achieves the best performance. The FastSpeech 2 model combined with both pretrained and learnable speaker representations shows great generalization ability on few-shot speakers and achieved 2nd place in the one-shot track of the ICASSP 2021 M2VoC challenge.
