Hai Zhao

Department of Computer Science and Engineering, Shanghai Jiao Tong University, Key Laboratory of Shanghai Education Commission for Intelligent Interaction and Cognitive Engineering, Shanghai Jiao Tong University, MoE Key Lab of Artificial Intelligence, AI Institute, Shanghai Jiao Tong University

Pre-training Universal Language Representation

May 30, 2021

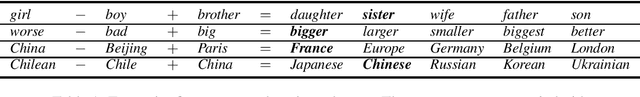

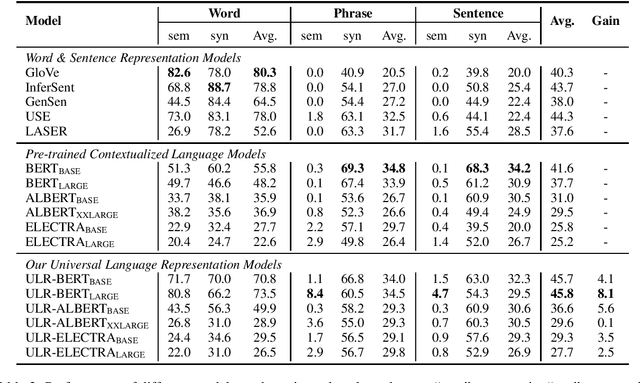

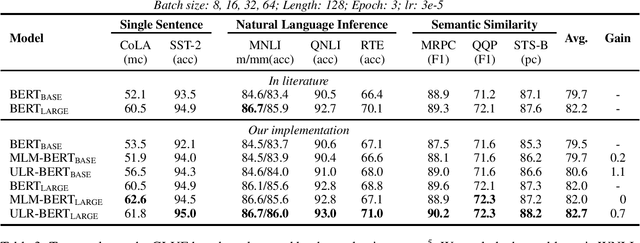

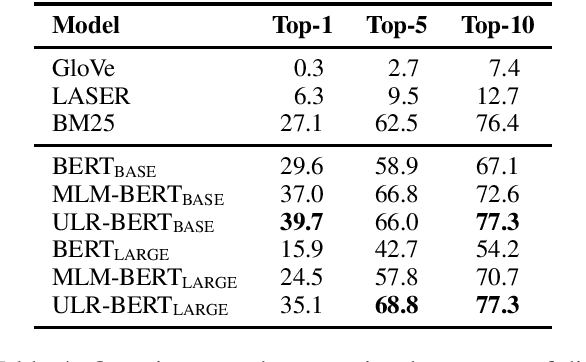

Abstract: Despite well-developed, cutting-edge representation learning for language, most language representation models focus on specific levels of linguistic units. This work introduces universal language representation learning, i.e., embeddings of different levels of linguistic units or text with quite diverse lengths in a uniform vector space. We propose the training objective MiSAD, which utilizes meaningful n-grams extracted from a large unlabeled corpus by a simple but effective algorithm for pre-trained language models. We then empirically verify that a well-designed pre-training scheme may effectively yield universal language representation, which brings great convenience when handling multiple layers of linguistic objects in a unified way. In particular, our model achieves the highest accuracy on analogy tasks at different language levels and significantly improves performance on downstream tasks in the GLUE benchmark and a question answering dataset.

Grammatical Error Correction as GAN-like Sequence Labeling

May 29, 2021

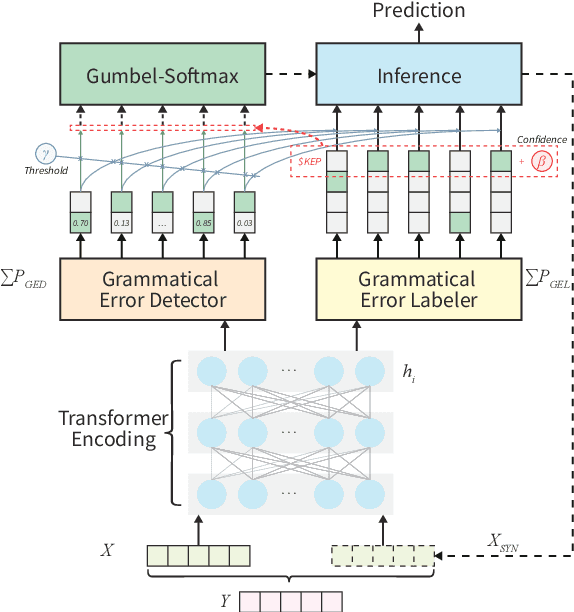

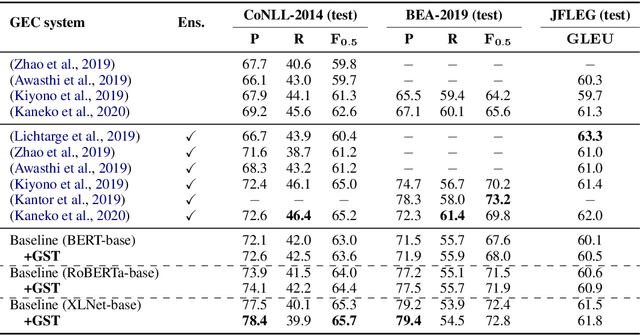

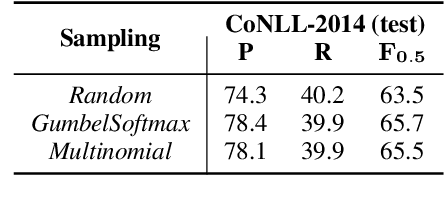

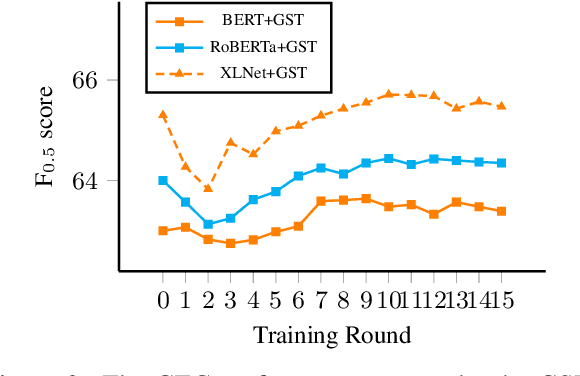

Abstract: In Grammatical Error Correction (GEC), sequence labeling models enjoy fast inference compared to sequence-to-sequence models; however, inference in sequence labeling GEC models is an iterative process, as sentences are passed to the model for multiple rounds of correction, which exposes the model to sentences with progressively fewer errors at each round. Traditional GEC models learn from sentences with fixed error rates. Coupling this with the iterative correction process causes a mismatch between training and inference that affects final performance. To address this mismatch, we propose a GAN-like sequence labeling model, which consists of a grammatical error detector as a discriminator and a grammatical error labeler with Gumbel-Softmax sampling as a generator. By sampling from real error distributions, our errors are more genuine than traditional synthesized GEC errors, thus alleviating the aforementioned mismatch and allowing for better training. Our results on several evaluation benchmarks demonstrate that our proposed approach is effective and improves over the previous state-of-the-art baseline.
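The Gumbel-Softmax sampling mentioned in the abstract above can be illustrated with a minimal, standard-library sketch. It is not the authors' implementation: the function name `gumbel_softmax_sample`, the temperature value, and the three example edit labels are assumptions chosen only for illustration.

```python
import math
import random


def gumbel_softmax_sample(logits, temperature=1.0, rng=random):
    """Draw one relaxed one-hot sample from unnormalized log-probabilities."""
    noisy = []
    for logit in logits:
        # Clamp u away from 0 so both logarithms are defined.
        u = max(rng.random(), 1e-12)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax over the perturbed logits.
    peak = max(noisy)
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three edit labels, e.g. KEEP, DELETE, REPLACE.
label_logits = [2.0, 0.5, -1.0]
sample = gumbel_softmax_sample(label_logits, temperature=0.5, rng=random.Random(0))
print(sample)
```

Lower temperatures push the sample closer to a one-hot vector, while higher temperatures make it smoother; in a neural framework the same computation would stay differentiable with respect to the logits.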

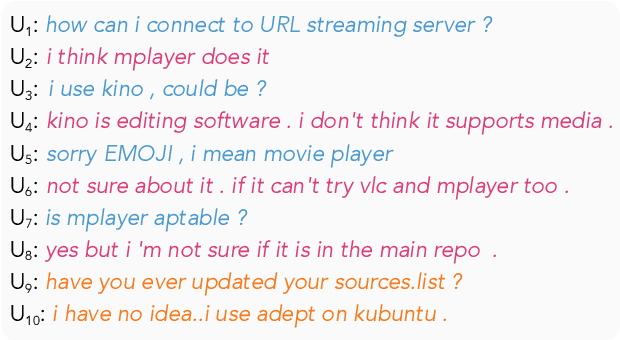

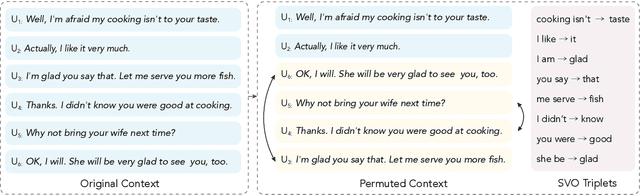

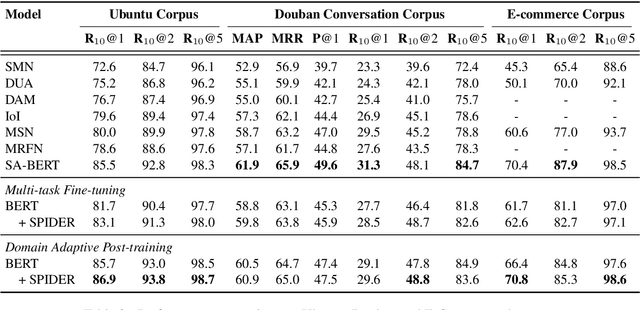

Structural Pre-training for Dialogue Comprehension

May 23, 2021

Abstract: Pre-trained language models (PrLMs) have demonstrated superior performance due to their strong ability to learn universal language representations from self-supervised pre-training. However, even with the help of powerful PrLMs, it is still challenging to effectively capture task-related knowledge from dialogue texts, which are enriched by correlations among speaker-aware utterances. In this work, we present SPIDER, Structural Pre-traIned DialoguE Reader, to capture dialogue-exclusive features. To simulate the dialogue-like features, we propose two training objectives in addition to the original LM objectives: 1) utterance order restoration, which predicts the order of the permuted utterances in the dialogue context; 2) sentence backbone regularization, which regularizes the model to improve the factual correctness of summarized subject-verb-object triplets. Experimental results on widely used dialogue benchmarks verify the effectiveness of the newly introduced self-supervised tasks.
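As a rough illustration of the first objective above, utterance order restoration, the sketch below builds one self-supervised example by permuting a dialogue and recording the original positions as the prediction target. The function names and the toy dialogue are hypothetical, and the abstract does not specify how SPIDER encodes or scores the permuted utterances.

```python
import random


def make_order_restoration_example(utterances, rng=random):
    """Shuffle utterances and return (shuffled, target) for order prediction."""
    positions = list(range(len(utterances)))
    rng.shuffle(positions)
    shuffled = [utterances[p] for p in positions]
    # target[i] is the original position of the i-th shuffled utterance.
    return shuffled, positions


def restore(shuffled, predicted_positions):
    """Rebuild the dialogue from a (predicted) position for each utterance."""
    restored = [None] * len(shuffled)
    for utterance, position in zip(shuffled, predicted_positions):
        restored[position] = utterance
    return restored


dialogue = [
    "A: Are you free tonight?",
    "B: Yes, why?",
    "A: There is a new film out.",
    "B: Great, let's go.",
]
shuffled, target = make_order_restoration_example(dialogue, rng=random.Random(1))
print(shuffled)
print(target)
```

A model trained on such pairs would be asked to predict `target` from `shuffled`; feeding the gold target to `restore` recovers the original dialogue.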

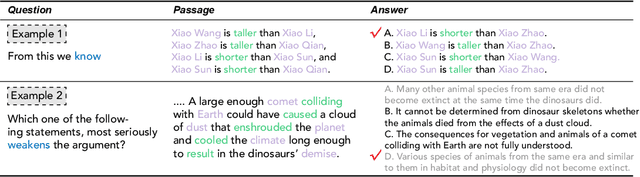

Fact-driven Logical Reasoning

May 21, 2021

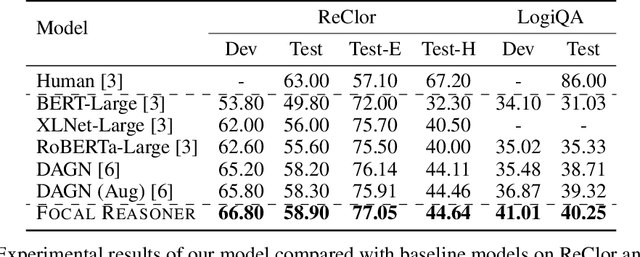

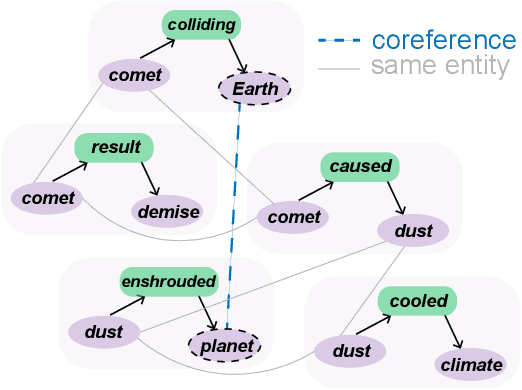

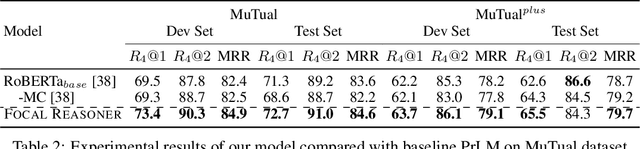

Abstract: Logical reasoning, which is closely related to human cognition, is of vital importance in human understanding of texts. Recent years have witnessed increasing attention to machines' logical reasoning abilities. However, previous studies commonly apply ad-hoc methods to model pre-defined relation patterns, such as linking named entities, which only consider global knowledge components that are related to commonsense, without local perception of complete facts or events. Such methodology is insufficient to deal with complicated logical structures. Therefore, we argue that the natural logic units would be the group of backbone constituents of the sentence, such as the subject-verb-object formed "facts", covering both global and local knowledge pieces that are necessary as the basis for logical reasoning. Beyond building ad-hoc graphs, we propose a more general and convenient fact-driven approach to construct a supergraph on top of our newly defined fact units, and enhance the supergraph with further explicit guidance of local question and option interactions. Experiments on two challenging logical reasoning benchmark datasets, ReClor and LogiQA, show that our proposed model, Focal Reasoner, outperforms the baseline models dramatically. It can also be smoothly applied to other downstream tasks such as MuTual, a dialogue reasoning dataset, achieving competitive results.

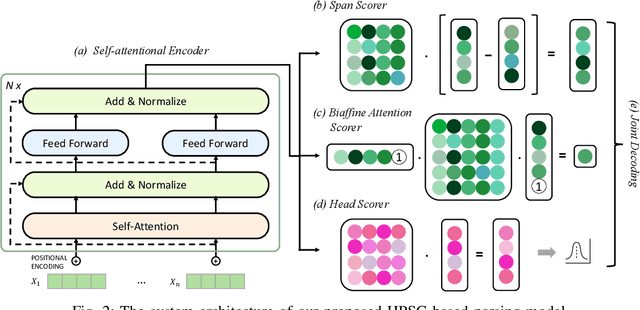

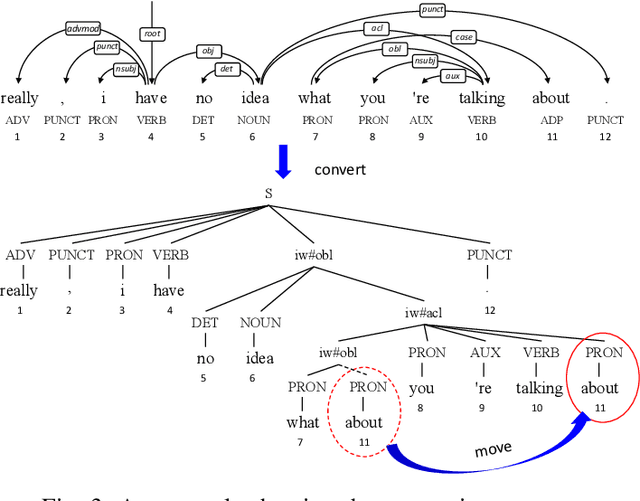

Head-driven Phrase Structure Parsing in $O(n^3)$ Time Complexity

May 20, 2021

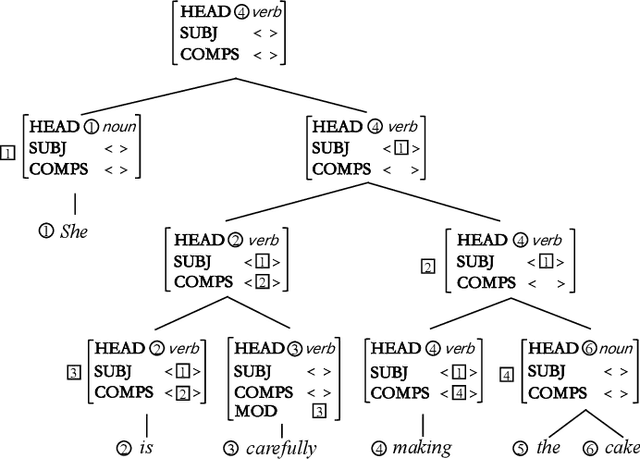

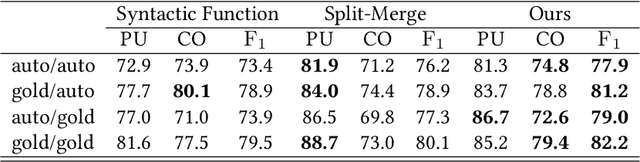

Abstract: Constituent and dependency parsing, the two classic forms of syntactic parsing, have been found to benefit from joint training and decoding under a uniform formalism, Head-driven Phrase Structure Grammar (HPSG). However, decoding this unified grammar has a higher time complexity ($O(n^5)$) than decoding either form individually ($O(n^3)$), since more factors have to be considered during decoding. We thus propose an improved head scorer that helps achieve a novel performance-preserved parser in $O(n^3)$ time complexity. Furthermore, on the basis of this proposed practical HPSG parser, we investigated the strengths of HPSG-based parsing and explored the general method of training an HPSG-based parser from only constituent or dependency annotations in a multilingual scenario. We thus present a more effective, more in-depth, and general work on HPSG parsing.

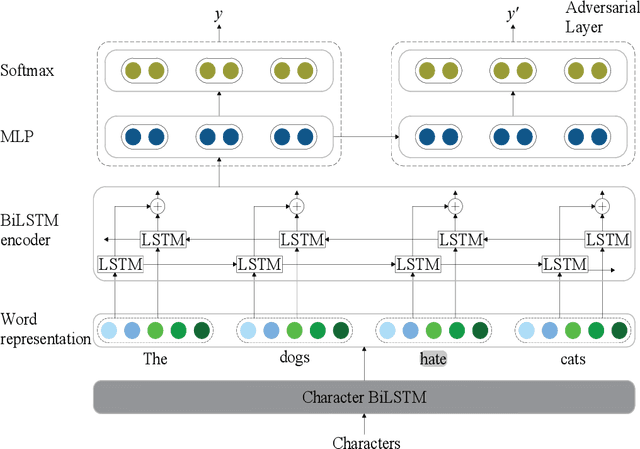

Neural Unsupervised Semantic Role Labeling

Apr 19, 2021

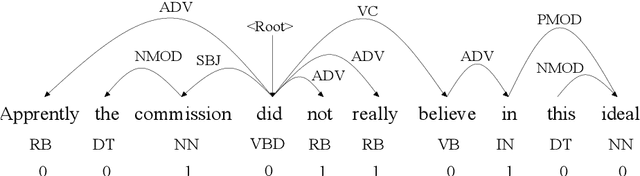

Abstract: The task of semantic role labeling (SRL) is dedicated to finding the predicate-argument structure. Previous works on SRL are mostly supervised and do not consider the difficulty of labeling each example, which can be very expensive and time-consuming. In this paper, we present the first neural unsupervised model for SRL. To decompose the task into two argument-related subtasks, identification and clustering, we propose a pipeline that correspondingly consists of two neural modules. First, we train a neural model on two syntax-aware, statistically developed rules. The neural model gets the relevance signal for each token in a sentence, feeds it into a BiLSTM and then an adversarial layer for noise-adding and classifying simultaneously, thus enabling the model to learn the semantic structure of a sentence. Then we propose another neural model for argument role clustering, which is done by clustering the learned argument embeddings biased towards their dependency relations. Experiments on the CoNLL-2009 English dataset demonstrate that our model outperforms the previous state-of-the-art baseline among non-neural models for argument identification and classification.

Not All Attention Is All You Need

Apr 10, 2021

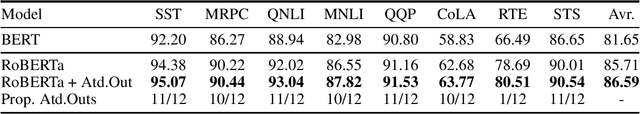

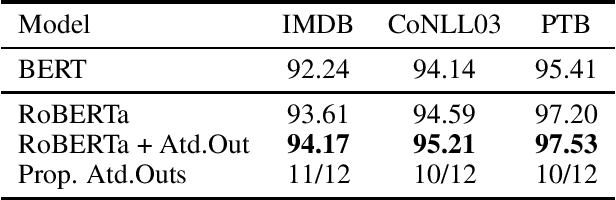

Abstract: Self-attention based models have achieved remarkable success in natural language processing. However, recent studies have questioned the self-attention network design as suboptimal, due to its veiled validity and high redundancy. In this paper, we focus on pre-trained language models with a self-pruning training design on task-specific tuning. We demonstrate that lighter state-of-the-art models with nearly 80% of self-attention layers pruned may achieve even better results on multiple tasks, including natural language understanding, document classification, named entity recognition and POS tagging, with nearly twice as fast inference.

Text Compression-aided Transformer Encoding

Feb 11, 2021

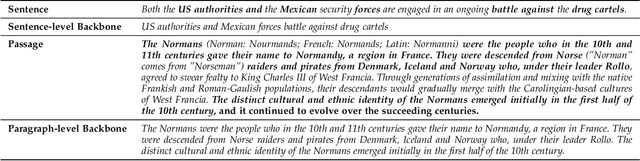

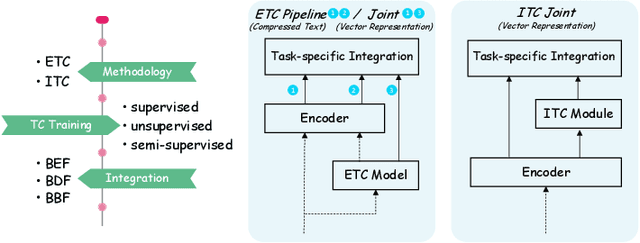

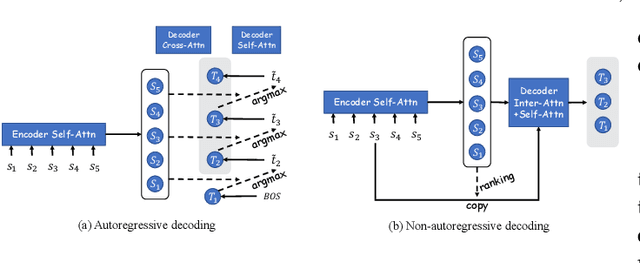

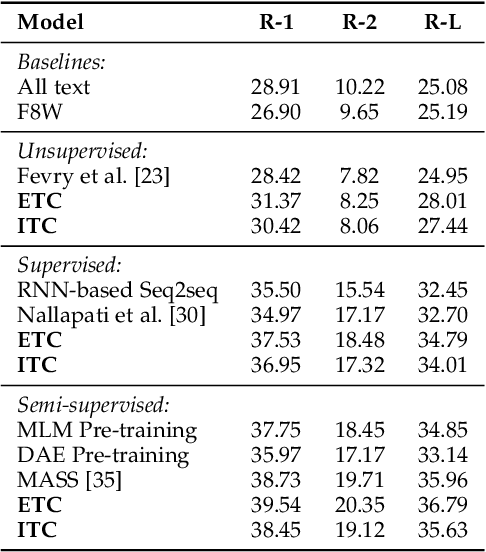

Abstract: Text encoding is one of the most important steps in Natural Language Processing (NLP). It has been done well by the self-attention mechanism in the current state-of-the-art Transformer encoder, which has brought about significant improvements in the performance of many NLP tasks. Though the Transformer encoder may effectively capture general information in its resulting representations, the backbone information, meaning the gist of the input text, is not specifically focused on. In this paper, we propose explicit and implicit text compression approaches to enhance the Transformer encoding and evaluate models using this approach on several typical downstream tasks that rely heavily on the encoding. Our explicit text compression approaches use dedicated models to compress text, while our implicit text compression approach simply adds an additional module to the main model to handle text compression. We propose three ways of integration, namely backbone source-side fusion, target-side fusion, and both-side fusion, to integrate the backbone information into Transformer-based models for various downstream tasks. Our evaluation on benchmark datasets shows that the proposed explicit and implicit text compression approaches improve results in comparison to strong baselines. We therefore conclude that, compared with the encodings of the baseline models, text compression helps the encoders to learn better language representations.

Multi-turn Dialogue Reading Comprehension with Pivot Turns and Knowledge

Feb 10, 2021

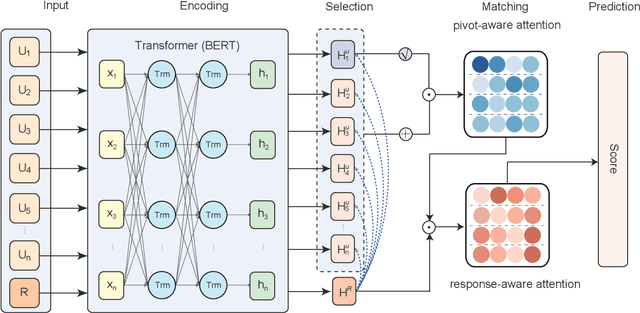

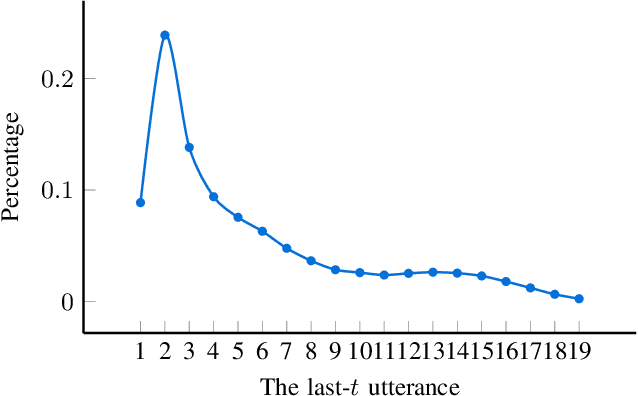

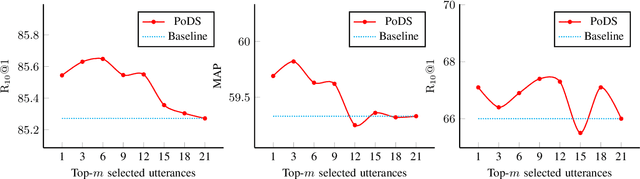

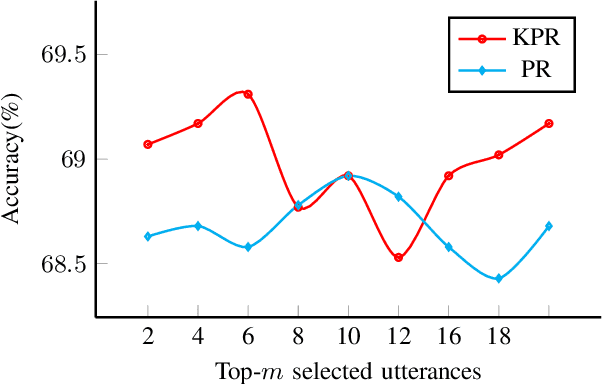

Abstract: Multi-turn dialogue reading comprehension aims to teach machines to read dialogue contexts and solve tasks such as response selection and answering questions. The major challenges involve noisy history contexts and special prerequisites of commonsense knowledge that is unseen in the given material. Existing works mainly focus on context and response matching approaches. This work thus makes the first attempt to tackle the above two challenges by extracting substantially important turns as pivot utterances and utilizing external knowledge to enhance the representation of context. We propose a pivot-oriented deep selection model (PoDS) on top of Transformer-based language models for dialogue comprehension. In detail, our model first picks out the pivot utterances from the conversation history according to their semantic matching with the candidate response or question, if any. In addition, knowledge items related to the dialogue context are extracted from a knowledge graph as external knowledge. Then, the pivot utterances and the external knowledge are combined with a well-designed mechanism for refining predictions. Experimental results on four dialogue comprehension benchmark tasks show that our proposed model achieves great improvements over baselines. A series of empirical comparisons are conducted to show how our selection strategies and the extra knowledge injection influence the results.
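The pivot-utterance selection step described above can be sketched as a top-k similarity search over the conversation history. This is only an illustration under strong assumptions: bag-of-words cosine similarity stands in for the Transformer-based semantic matching that PoDS uses, and `cosine`, `select_pivots`, the value of `k`, and the toy dialogue are all hypothetical.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_pivots(history, candidate, k=2):
    """Return the k history utterances most similar to the candidate,
    kept in their original conversational order."""
    cand = Counter(candidate.lower().split())
    scored = [
        (cosine(Counter(u.lower().split()), cand), i)
        for i, u in enumerate(history)
    ]
    # Highest score first; earlier utterances win ties.
    top = sorted(scored, key=lambda s: (-s[0], s[1]))[:k]
    return [history[i] for i in sorted(i for _, i in top)]


history = [
    "i lost my red umbrella yesterday",
    "the weather is nice today",
    "did you check the lost and found",
    "let us get lunch",
]
candidate = "the lost and found has a red umbrella"
print(select_pivots(history, candidate, k=2))
```

Keeping the selected utterances in their original order preserves the turn structure that a downstream encoder would see.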

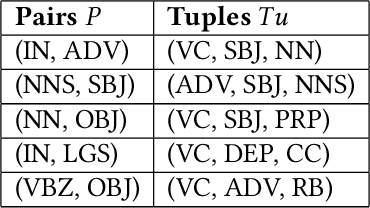

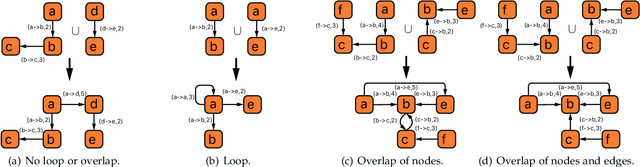

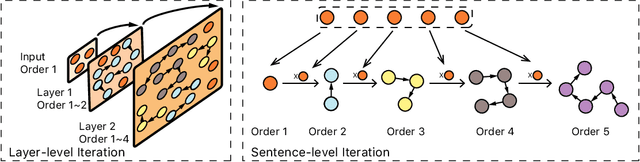

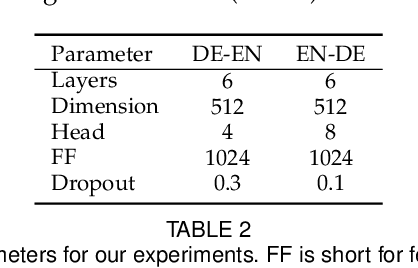

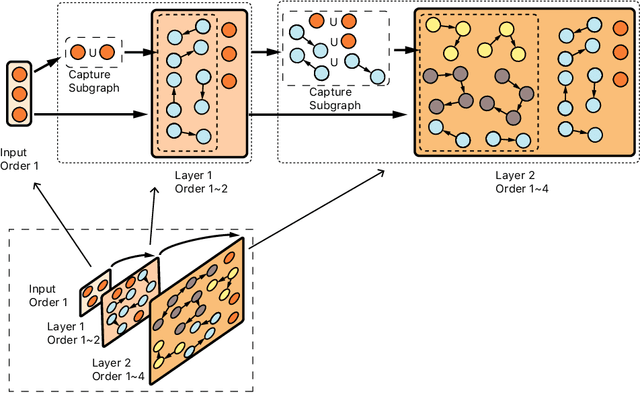

To Understand Representation of Layer-aware Sequence Encoders as Multi-order-graph

Jan 16, 2021

Abstract: In this paper, we propose a unified explanation of representation for layer-aware neural sequence encoders, which regards the representation as a revisited multigraph called multi-order-graph (MoG), so that model encoding can be viewed as a process of capturing all subgraphs in the MoG. The relationship reflected by the multi-order-graph, called $n$-order dependency, can express what the existing simple directed-graph explanation cannot. Our proposed MoG explanation makes it possible to precisely observe every step of the generation of the representation and to put diverse relationships, such as syntax, into a uniformly depicted framework. Based on the proposed MoG explanation, we further propose a graph-based self-attention network, Graph-Transformer, which enhances the ability to capture subgraph information over current models. Graph-Transformer accommodates different subgraphs into different groups, which allows the model to focus on salient subgraphs. Results of experiments on neural machine translation tasks show that the MoG-inspired model can yield effective performance improvement.
