Grzegorz Cielniak

Leaving the Lines Behind: Vision-Based Crop Row Exit for Agricultural Robot Navigation

Jun 09, 2023

Abstract: Purely vision-based solutions for row switching are not well explored in existing vision-based crop row navigation frameworks. The proposed method uses only RGB images, with visual feedback based on local feature matching, to exit the crop row. Depth images are used at the end of the crop row to estimate the navigation distance within the headland. The algorithm was tested on diverse headland areas with soil and vegetation. The proposed method was able to reach the end of the crop row and then navigate into the headland, completely leaving the crop row behind, with an error margin of 50 cm.
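The abstract states that row exit is driven by visual feedback from local feature matching, but it does not name the detector, descriptor or control law. As a minimal sketch under those unknowns, the snippet below assumes binary descriptors (ORB-style bit strings stored as Python integers), matches them by brute-force Hamming distance with Lowe's ratio test, and turns the mean horizontal shift of matched keypoints into a steering signal. `ratio_test_matches` and `lateral_feedback` are hypothetical helpers, not the authors' code.

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def ratio_test_matches(query: List[int], train: List[int],
                       ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Brute-force matching with Lowe's ratio test.

    Returns (query_index, train_index) pairs whose best match is clearly
    better than the second-best one.
    """
    matches: List[Tuple[int, int]] = []
    if len(train) < 2:
        return matches  # the ratio test needs two candidates
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        (d1, best), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


def lateral_feedback(query_x: List[float], train_x: List[float],
                     matches: List[Tuple[int, int]]) -> float:
    """Hypothetical steering error: mean horizontal shift (px) of matched keypoints."""
    if not matches:
        return 0.0
    return sum(query_x[q] - train_x[t] for q, t in matches) / len(matches)
```

A ratio test is used here because repetitive crop textures tend to produce many similar descriptors; rejecting ambiguous matches keeps the feedback signal from being dominated by false correspondences.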

End-to-end Unsupervised Learning of Long-Term 3D Stable objects

Jan 26, 2023

Abstract: 3D point cloud semantic classification is an important task in robotics, as it enables a better understanding of the mapped environment. This work proposes to learn the long-term stability of 3D objects using a neural network based on PointNet++, where a long-term stable object is a static object that cannot move on its own (e.g. a tree, pole or building). The training data is generated in an unsupervised manner by assigning a continuous label to individual points, exploiting multiple time slices of the same environment. Instead of using discrete labels, i.e. static/dynamic, we propose to use a continuous label value indicating point temporal stability to train a regression PointNet++ network. We evaluated our approach on point cloud data of two parking lots from the NCLT dataset. The experiments show that static vs. dynamic object classification is best performed by training a regression model followed by thresholding, rather than by directly training a classification model.
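The abstract does not specify how the continuous stability label is computed from multiple time slices. One plausible rule, assumed here purely for illustration, scores each point of a reference scan by the fraction of other (already registered) sessions that contain a point within a fixed radius, and then thresholds the regressed score to obtain static/dynamic classes. `stability_labels`, `to_static`, the 0.5 m radius and the 0.5 threshold are all hypothetical; a practical version would replace this brute-force search with a KD-tree.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def stability_labels(reference: Sequence[Point],
                     sessions: Sequence[Sequence[Point]],
                     radius: float = 0.5) -> List[float]:
    """Hypothetical continuous label in [0, 1] per reference point: the fraction
    of other (registered) sessions containing at least one point within `radius`."""
    if not sessions:
        return [0.0] * len(reference)
    labels = []
    for p in reference:
        hits = sum(1 for scan in sessions
                   if any(math.dist(p, q) <= radius for q in scan))
        labels.append(hits / len(sessions))
    return labels


def to_static(scores: Sequence[float], threshold: float = 0.5) -> List[bool]:
    """Threshold continuous (e.g. regressed) stability scores: True = static, False = dynamic."""
    return [s >= threshold for s in scores]
```

For example, a pole seen in every session gets a label of 1.0, while a parked car present in only one of three sessions gets 1/3 and is classified as dynamic.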

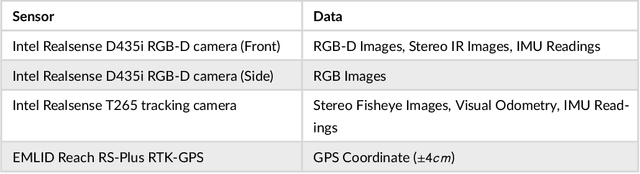

Collection and Evaluation of a Long-Term 4D Agri-Robotic Dataset

Nov 25, 2022

Abstract: Long-term autonomy is one of the most sought-after capabilities in a robot. The possibility of performing the same task over and over on a long temporal horizon, with a high standard of reproducibility and robustness, is appealing. Long-term autonomy can play a crucial role in the adoption of robotic systems for precision agriculture, for example in assisting humans in monitoring and harvesting crops in a large orchard. With this scope in mind, we report an ongoing effort in the long-term deployment of an autonomous mobile robot in a vineyard for data collection across multiple months. The main aim is to collect data from the same area at different points in time so as to analyse the impact of environmental changes on the mapping and localisation tasks. In this work, we present a map-based localisation study using 4 data sessions. We identify expected failures when the pre-built map visually differs from the environment's current appearance, and we anticipate LTS-Net, a solution aimed at extracting stable temporal features for improving long-term 4D localisation results.

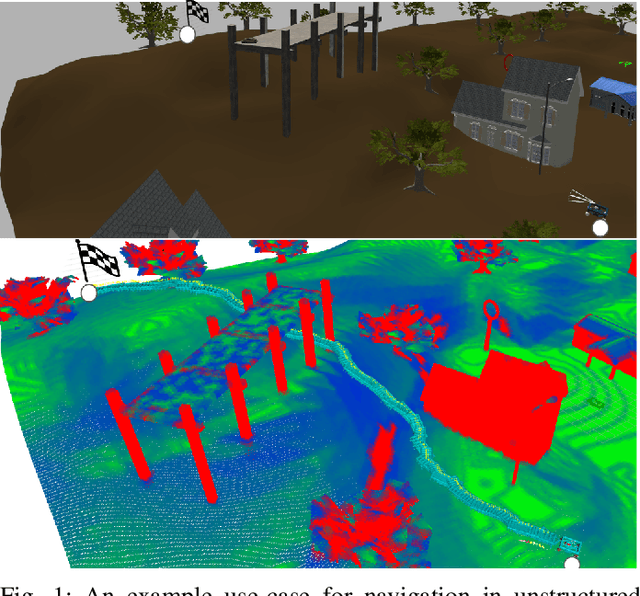

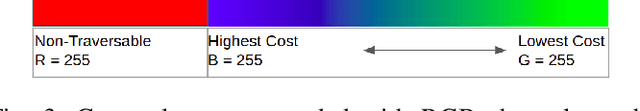

Cloud Hopping; Navigating in 3D Uneven Environments via Supervoxels and Control Lyapunov Function

Oct 06, 2022

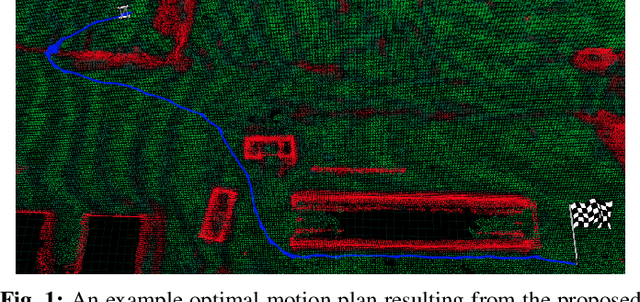

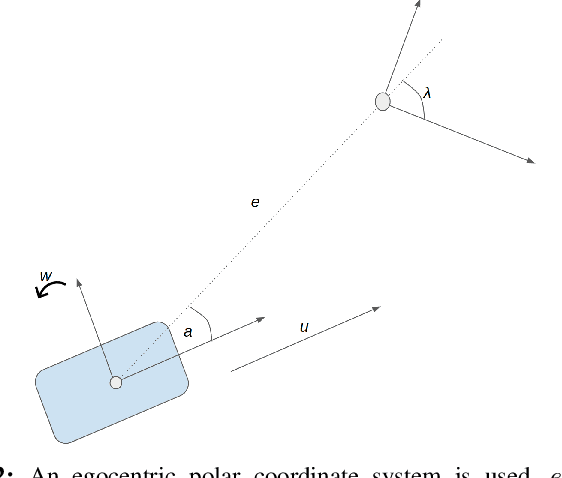

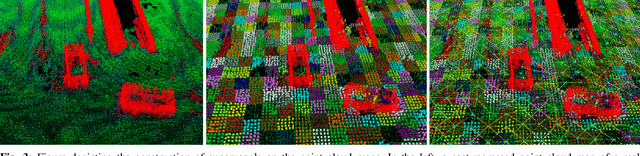

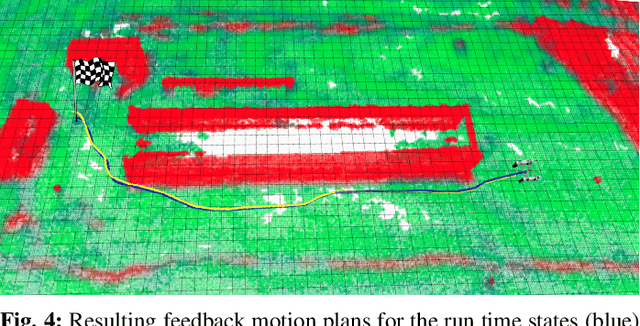

Abstract: This paper presents a novel feedback motion planning method for mobile robot navigation in 3D uneven terrains. We take advantage of the supervoxel representation of point clouds, which enables a compact connectivity graph of traversable regions on the point cloud maps. Given this graph of traversable areas, our approach navigates the robot to any reachable goal pose using a control Lyapunov function (cLf) and a navigation function. The cLf ensures the kinodynamic feasibility and target convergence of the generated motion plans, while the navigation function optimizes the resulting feedback motion plans. We carried out navigation experiments in real and simulated 3D uneven terrains. In all cases, the experimental findings show that our approach outperforms the baselines, demonstrating its efficiency and adaptability in navigating a robot over challenging uneven 3D terrain. The proposed method can also navigate a robot with a particular objective, e.g., a shortest-distance or least-inclined plan. We compared our approach with well-established sampling-based motion planners, and our method outperformed all other planners in terms of execution time and resulting path length. Finally, we provide an open-source implementation of the proposed method to benefit the robotics community.
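The idea of a navigation function over a graph of traversable regions can be illustrated with a generic stand-in: treat each traversable supervoxel as a node, encode the planning objective (for example distance or inclination) as positive edge costs, and use the cost-to-go to the goal, computed with Dijkstra's algorithm, as a navigation function whose greedy descent yields a waypoint sequence. This is a sketch under those assumptions, not the paper's formulation; the cLf-based controller that would steer the robot between waypoints is omitted, and the node names and costs are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple

# Adjacency list: node -> [(neighbour, edge_cost), ...]; costs must be positive.
Graph = Dict[str, List[Tuple[str, float]]]


def navigation_function(graph: Graph, goal: str) -> Dict[str, float]:
    """Cost-to-go from every node that can reach `goal` (Dijkstra on reversed edges)."""
    reverse: Graph = {}
    for u, edges in graph.items():
        for v, w in edges:
            reverse.setdefault(v, []).append((u, w))
    nav = {goal: 0.0}
    heap = [(0.0, goal)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > nav[v]:
            continue  # stale heap entry
        for u, w in reverse.get(v, []):
            if d + w < nav.get(u, float("inf")):
                nav[u] = d + w
                heapq.heappush(heap, (d + w, u))
    return nav


def descend(graph: Graph, nav: Dict[str, float], start: str) -> List[str]:
    """Waypoints obtained by greedily descending the navigation function."""
    if start not in nav:
        return []  # goal is unreachable from start
    path = [start]
    while nav[path[-1]] > 0.0:
        node = path[-1]
        nxt, _ = min(
            (e for e in graph[node] if e[0] in nav),
            key=lambda e: e[1] + nav[e[0]],
        )
        path.append(nxt)
    return path
```

Changing the edge costs (for instance, weighting each edge by the inclination of the supervoxels it connects) changes the objective the descent optimises, which mirrors the shortest-distance versus least-inclined choice mentioned above.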

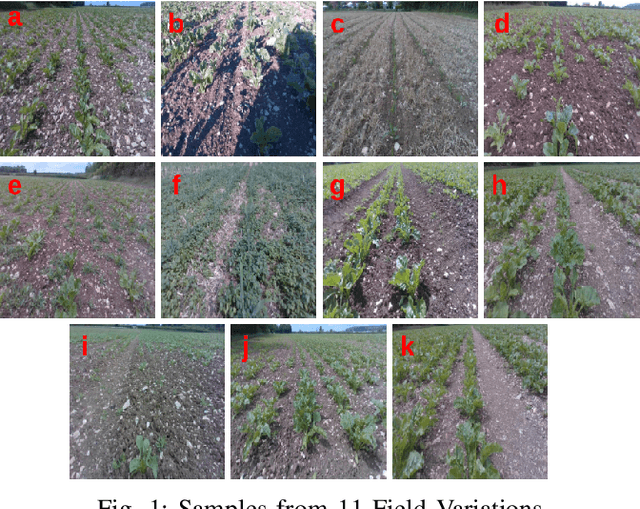

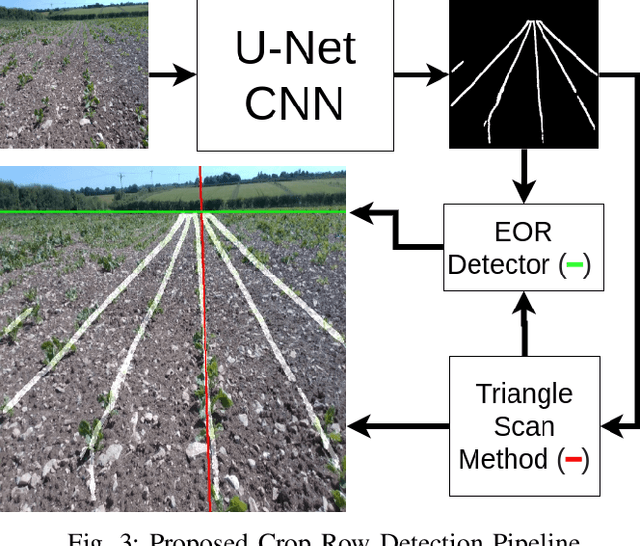

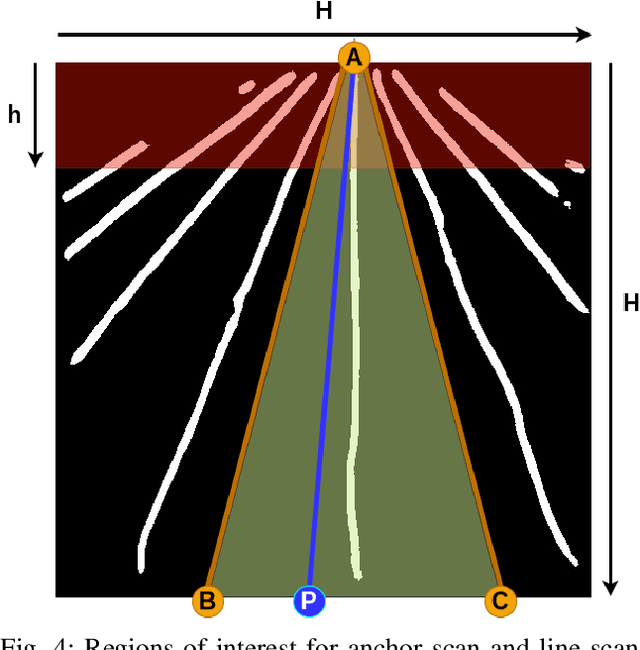

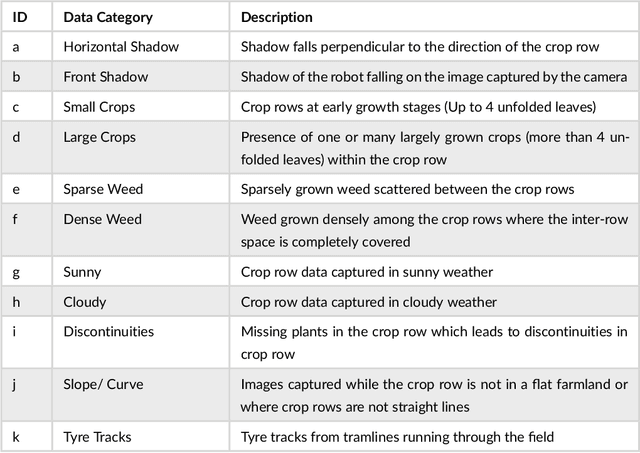

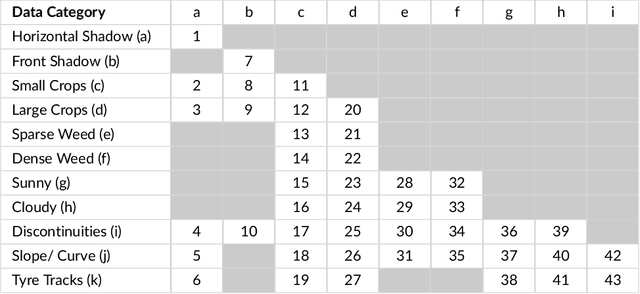

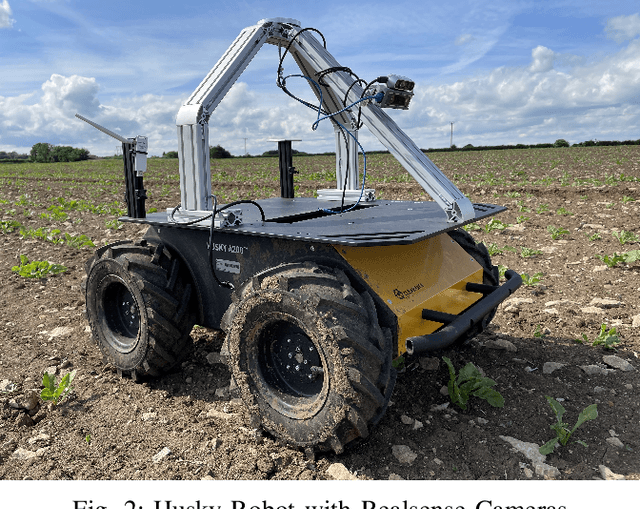

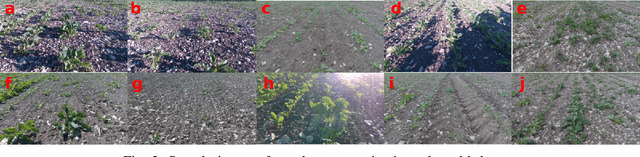

Vision based Crop Row Navigation under Varying Field Conditions in Arable Fields

Sep 28, 2022

Abstract: Accurate crop row detection is often challenged by the varying field conditions present in real-world arable fields. Traditional colour-based segmentation cannot cater for all such variations. The lack of comprehensive datasets in agricultural environments prevents researchers from developing robust segmentation models to detect crop rows. We present a dataset for crop row detection with 11 field variations from sugar beet and maize crops. We also present a novel crop row detection algorithm for visual servoing in crop row fields. Our algorithm can detect crop rows under varying field conditions such as curved crop rows, weed presence, discontinuities, growth stages, tramlines, shadows and light levels. Our method uses only RGB images from a front-mounted camera on a Husky robot to predict crop rows. Our method outperformed the classic colour-based crop row detection baseline. Dense weed presence in the inter-row space and discontinuities in crop rows were the most challenging field conditions for our algorithm. Our method can detect the end of the crop row and navigate the robot towards the headland area when it reaches the end of the crop row.
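The abstract does not describe how a detected crop row is turned into a servoing command. A common approach, assumed here, fits a line to the crop-row pixels of a binary mask and derives a heading error and a lateral offset from it. The sketch below uses an ordinary least-squares fit of image column against image row and assumes the mask contains a single crop row; `fit_row_line` and `servo_errors` are hypothetical names.

```python
import math
from typing import List, Tuple


def fit_row_line(mask: List[List[int]]) -> Tuple[float, float]:
    """Least-squares fit of x = a*y + b through the non-zero pixels of a binary mask."""
    pts = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two crop-row pixels")
    my = sum(y for y, _ in pts) / n
    mx = sum(x for _, x in pts) / n
    syy = sum((y - my) ** 2 for y, _ in pts)
    if syy == 0:
        raise ValueError("crop-row pixels lie on a single image row")
    sxy = sum((y - my) * (x - mx) for y, x in pts)
    a = sxy / syy
    return a, mx - a * my


def servo_errors(mask: List[List[int]]) -> Tuple[float, float]:
    """Heading error (rad, relative to the image vertical) and lateral offset (px)
    of the fitted row at the bottom image row; positive offset = row right of centre."""
    h, w = len(mask), len(mask[0])
    a, b = fit_row_line(mask)
    heading = math.atan(a)
    offset = (a * (h - 1) + b) - (w - 1) / 2
    return heading, offset
```

Fitting x as a function of y (rather than y as a function of x) keeps the fit well conditioned for near-vertical rows, which is how a row usually appears while the robot is following it.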

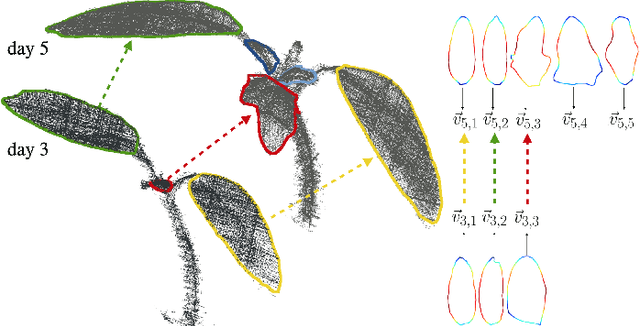

Statistical shape representations for temporal registration of plant components in 3D

Sep 23, 2022

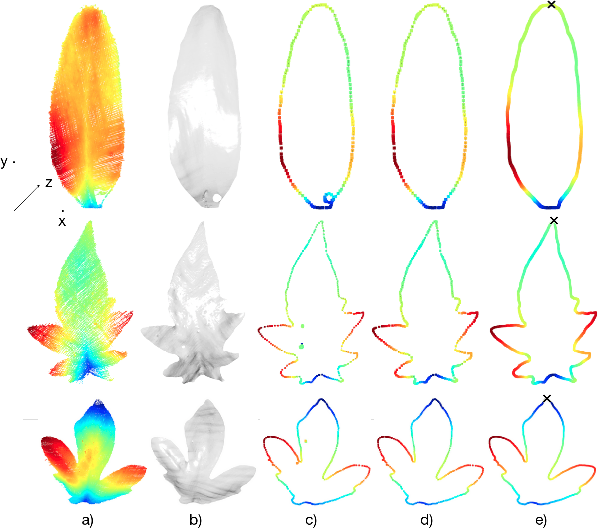

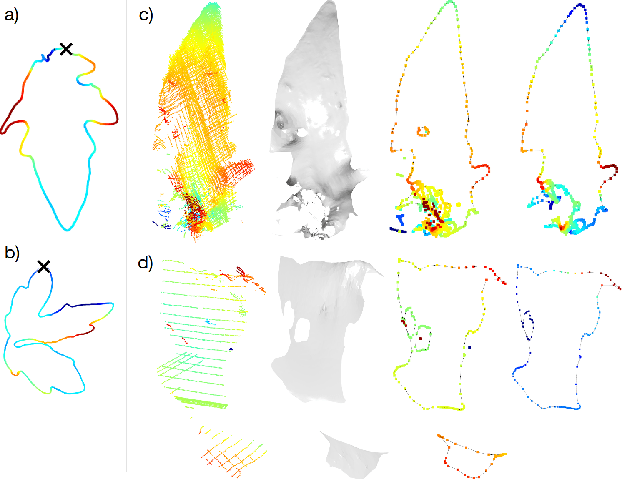

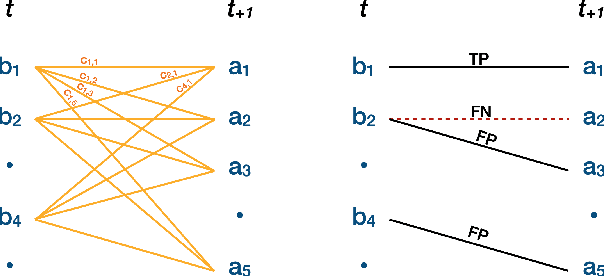

Abstract: Plants are dynamic organisms. Understanding temporal variations in vegetation is an essential problem for all robots in the wild. However, associating repeated 3D scans of plants across time is challenging. A key step in this process is re-identifying and tracking the same individual plant components over time. Previously, this has been achieved by comparing their global spatial or topological location. In this work, we demonstrate how using shape features improves temporal organ matching. We present a landmark-free shape compression algorithm which extracts 3D shape features of leaves, characterises leaf shape and curvature efficiently with few parameters, and makes it possible to associate individual leaves in feature space. The approach combines 3D contour extraction with further compression using Principal Component Analysis (PCA) to produce a shape space encoding that is learned entirely from data and retains information about edge contours and 3D curvature. Our evaluation on temporal scan sequences of tomato plants shows that incorporating shape features improves temporal leaf matching. A combination of shape, location, and rotation information proves most informative for recognising leaves over time and yields a true positive rate of 75%, a 15% improvement on state-of-the-art methods. This is essential for robotic crop monitoring, which enables whole-of-lifecycle phenotyping.
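The PCA step of the shape space encoding can be sketched with NumPy, assuming each leaf contour has already been extracted, aligned and resampled to the same number of ordered 3D points (the landmark-free extraction itself is not reproduced). Leaves are then associated across time by nearest neighbour in the learned shape space. The paper combines shape with location and rotation; this hypothetical sketch (`fit_shape_space`, `encode`, `match_leaves`) uses shape alone and greedy nearest-neighbour matching, which does not enforce a one-to-one assignment.

```python
import numpy as np


def fit_shape_space(contours: np.ndarray, k: int):
    """PCA shape space from aligned contours of shape (n_leaves, n_points, 3).

    Returns the mean shape vector and the top-k principal axes, shape (k, n_points * 3).
    """
    X = contours.reshape(len(contours), -1)  # one flattened contour per row
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:k]


def encode(contours: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project contours onto the shape space: (n_leaves, k) feature vectors."""
    X = contours.reshape(len(contours), -1)
    return (X - mean) @ components.T


def match_leaves(feat_t0: np.ndarray, feat_t1: np.ndarray) -> np.ndarray:
    """For each leaf at t0, the index of the nearest leaf at t1 in shape space."""
    d = np.linalg.norm(feat_t0[:, None, :] - feat_t1[None, :, :], axis=-1)
    return d.argmin(axis=1)
```

Each leaf is compressed to `k` numbers, which is what makes matching in feature space cheap; in practice `k` would be chosen from the explained variance of the training contours.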

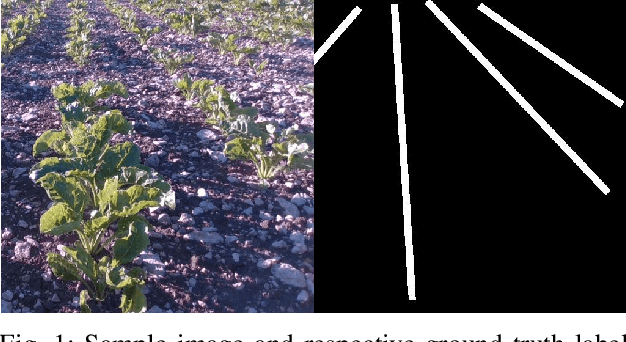

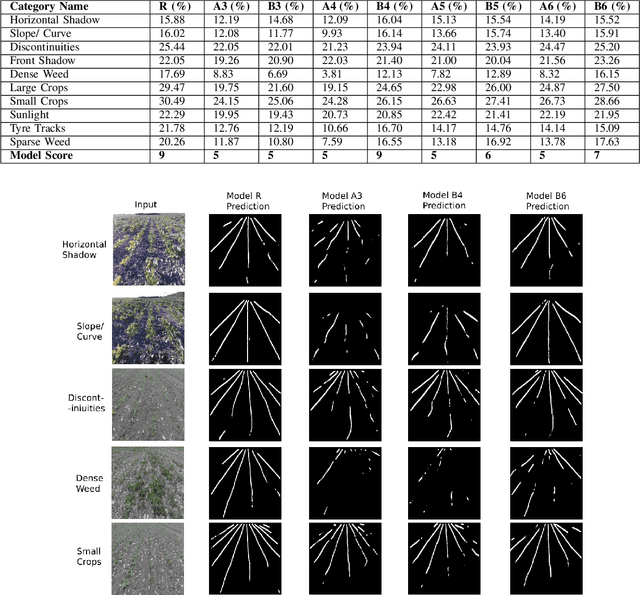

Deep learning-based Crop Row Following for Infield Navigation of Agri-Robots

Sep 09, 2022

Abstract: Autonomous navigation in agricultural environments is often challenged by the varying field conditions that may arise in arable fields. State-of-the-art solutions for autonomous navigation in these environments require expensive hardware such as RTK-GPS. This paper presents a robust crop row detection algorithm that withstands such variations while detecting crop rows for visual servoing. A dataset of sugar beet images was created covering 43 combinations of 11 field variations found in arable fields. The novel algorithm is evaluated both on its crop row detection performance and on its capability for visual servoing along a crop row. The algorithm uses only RGB images as input, and a convolutional neural network predicts the crop row masks. Our algorithm outperformed the colour-based segmentation baseline for all combinations of field variations. We use a combined performance indicator that accounts for the angular and displacement errors of the crop row detection. Our algorithm performed worst during the early growth stages of the crop.
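The abstract mentions a combined performance indicator over angular and displacement errors without giving its formula. Purely to illustrate how two error terms with different units can be merged into one score, the hypothetical function below normalises each absolute error by an assumed tolerance, clips it to 1 and takes a weighted average; the tolerances and the 0.5 weight are illustrative, and this is not the indicator used in the paper.

```python
def combined_indicator(angle_err: float, disp_err: float,
                       max_angle: float, max_disp: float,
                       w_angle: float = 0.5) -> float:
    """Hypothetical detection score in [0, 1] (1 = perfect, 0 = both errors at or beyond tolerance).

    Each absolute error is normalised by its tolerance and clipped to 1, so the
    angular (rad) and displacement (e.g. px) terms become unitless before weighting.
    Not the indicator defined in the paper.
    """
    e_angle = min(abs(angle_err) / max_angle, 1.0)
    e_disp = min(abs(disp_err) / max_disp, 1.0)
    return 1.0 - (w_angle * e_angle + (1.0 - w_angle) * e_disp)
```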

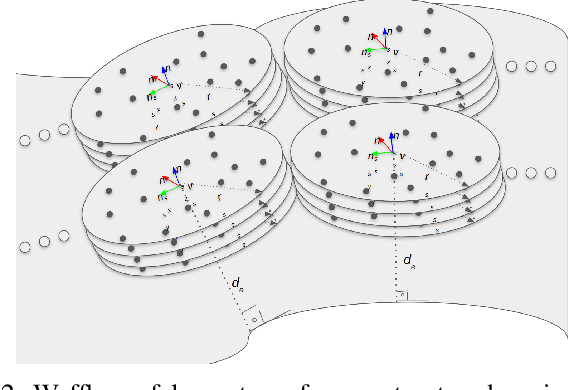

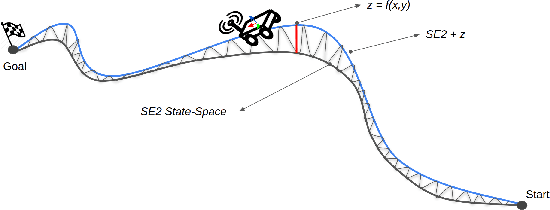

Elevation State-Space: Surfel-Based Navigation in Uneven Environments for Mobile Robots

Aug 17, 2022

Abstract: This paper introduces a new method for robot motion planning and navigation in uneven environments through a surfel representation of the underlying point clouds. The proposed method addresses the shortcomings of state-of-the-art navigation methods by incorporating both the kinematic and physical constraints of a robot into standard motion planning algorithms (e.g., those from the Open Motion Planning Library), thus enabling efficient sampling-based planners for challenging uneven terrain navigation on raw point cloud maps. Unlike techniques based on Digital Elevation Maps (DEMs), our novel surfel-based state-space formulation and implementation operate on raw point cloud maps, allowing the modeling of overlapping surfaces such as bridges, piers, and tunnels. Experimental results demonstrate the robustness of the proposed method for robot navigation in real and simulated unstructured environments. The proposed approach also improves planner performance, boosting success rates by up to 5x for challenging unstructured terrain planning and navigation, thanks to its robot-constraint-aware sampling strategy. Finally, we provide an open-source implementation of the proposed method to benefit the robotics community.
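A standard ingredient of a surfel representation is a local plane fit: the normal of a point neighbourhood is the eigenvector of its covariance matrix with the smallest eigenvalue, and the angle between that normal and the vertical gives the terrain inclination. The sketch below shows only this geometric step, together with a hypothetical inclination limit of the kind a state-validity check could enforce; it does not use OMPL, and `fit_surfel`, `is_traversable` and the 25° limit are assumptions rather than the paper's implementation.

```python
import numpy as np


def fit_surfel(points: np.ndarray):
    """Fit a planar surfel to an (N, 3) neighbourhood; returns (centre, unit normal)."""
    centre = points.mean(axis=0)
    cov = np.cov(points.T)                  # 3x3 covariance (rows of points.T are x, y, z)
    _, eigvecs = np.linalg.eigh(cov)        # eigenvalues in ascending order
    normal = eigvecs[:, 0]                  # direction of least variance = plane normal
    if normal[2] < 0:
        normal = -normal                    # orient the normal upwards
    return centre, normal


def is_traversable(normal: np.ndarray, max_incline_deg: float = 25.0):
    """Hypothetical validity check on the surfel's inclination w.r.t. the vertical axis."""
    incline = float(np.degrees(np.arccos(np.clip(normal[2], -1.0, 1.0))))
    return incline <= max_incline_deg, incline
```

Because the fit works directly on raw points, two surfels can exist at the same (x, y) with different heights, which is what allows overlapping surfaces such as a bridge above a road to be represented.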

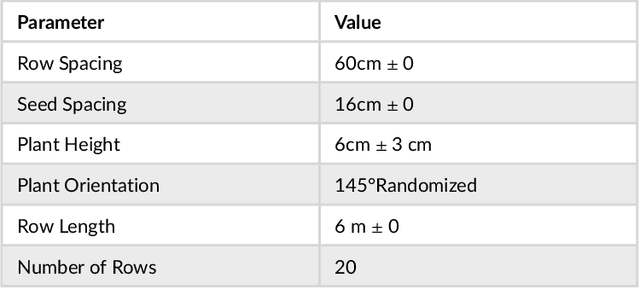

Towards Infield Navigation: leveraging simulated data for crop row detection

Apr 04, 2022

Abstract: Agricultural datasets for crop row detection are often limited in the number of images they contain. This restricts researchers from developing deep learning based models for precision agricultural tasks involving crop row detection. We propose using small real-world datasets together with additional data generated in simulation to achieve crop row detection performance similar to that of a model trained on a large real-world dataset. Our method reached the performance of a deep learning based crop row detection model trained on real-world data while using 60% less labelled real-world data. Our model performed well against field variations such as shadows, sunlight and growth stages. We introduce an automated pipeline to generate labelled images for crop row detection in the simulation domain. An extensive comparison is carried out to analyze the contribution of simulated data towards robust crop row detection in various real-world field scenarios.
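The abstract does not detail the automated labelling pipeline, but the reason simulated labels are cheap can be sketched: when the crop-row geometry is known, per-pixel labels follow from projecting it into the camera. The hypothetical `crop_row_mask` below assumes a level pinhole camera over flat ground and straight rows parallel to the optical axis; these simplifications are for illustration only and are not taken from the paper.

```python
import numpy as np


def crop_row_mask(row_offsets, cam_height, fx, fy, cx, cy, width, height,
                  z_range=(1.0, 20.0), half_width_px=1, samples=400):
    """Render a binary crop-row label mask from known (simulated) row geometry.

    Assumes a level pinhole camera (x right, y down, z forward) at `cam_height`
    metres above flat ground, and straight rows parallel to the optical axis at
    lateral offsets `row_offsets` (metres).
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    z = np.linspace(z_range[0], z_range[1], samples)    # depths sampled along each row
    v = np.round(fy * cam_height / z + cy).astype(int)  # ground points project below the horizon row cy
    for x_off in row_offsets:
        u = np.round(fx * x_off / z + cx).astype(int)
        for du in range(-half_width_px, half_width_px + 1):  # thicken the row horizontally
            uu = u + du
            inside = (uu >= 0) & (uu < width) & (v >= 0) & (v < height)
            mask[v[inside], uu[inside]] = 1
    return mask
```

In a full simulator the same idea applies with the renderer's own camera model and scene geometry, so every rendered frame comes with an exact label at no annotation cost.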

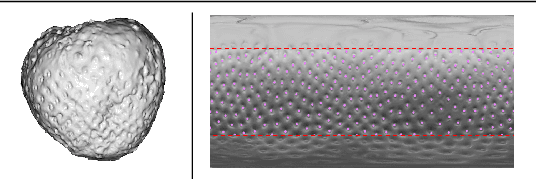

Gaussian map predictions for 3D surface feature localisation and counting

Dec 07, 2021

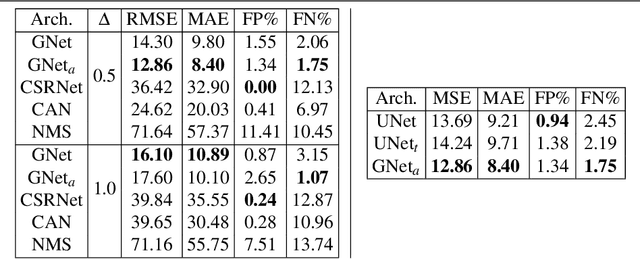

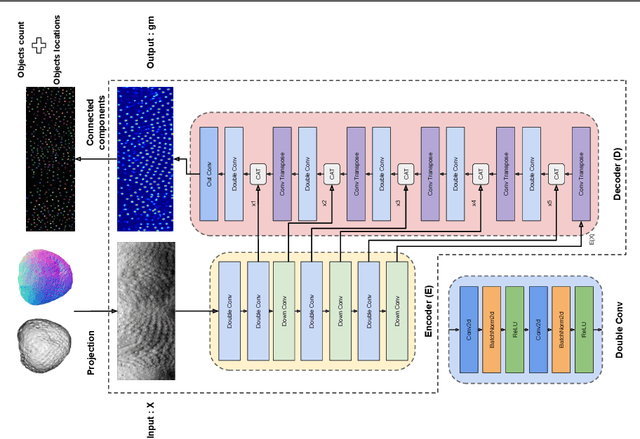

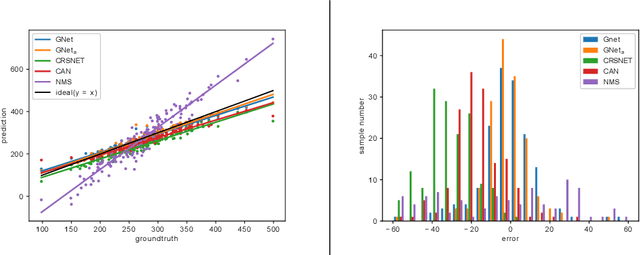

Abstract: In this paper, we propose to employ a Gaussian map representation to estimate the precise location and count of 3D surface features, addressing the limitations of state-of-the-art methods based on density estimation, which struggle in the presence of local disturbances. Gaussian maps indicate probable object locations and can be generated directly from keypoint annotations, avoiding laborious and costly per-pixel annotation. We apply this method to the class of 3D spheroidal objects, which can be projected into a 2D shape representation, enabling efficient processing by a neural network, GNet (an improved UNet architecture), that generates the likely locations of surface features and their precise count. We demonstrate a practical use of this technique for counting strawberry achenes, which serves as a fruit quality measure in phenotyping applications. The results of training the proposed system on several hundred 3D scans of strawberries from a publicly available dataset demonstrate the accuracy and precision of the system, which outperforms state-of-the-art density-based methods for this application.
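A Gaussian map target can be generated directly from keypoint annotations, as the abstract describes; how counts are read out of GNet's predictions is not stated there. The sketch below assumes isotropic Gaussians with a fixed `sigma`, combined with a pixel-wise maximum so that neighbouring peaks keep a height of 1, and counts features as thresholded local maxima. `gaussian_map`, `count_peaks`, `sigma` and `threshold` are hypothetical.

```python
import numpy as np


def gaussian_map(keypoints, shape, sigma=2.0):
    """Target map built from (row, col) keypoints: pixel-wise max of isotropic Gaussians."""
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    g = np.zeros(shape, dtype=float)
    for r, c in keypoints:
        blob = np.exp(-((rows - r) ** 2 + (cols - c) ** 2) / (2.0 * sigma ** 2))
        g = np.maximum(g, blob)
    return g


def count_peaks(g, threshold=0.5):
    """Count pixels above `threshold` that are strictly greater than all 8 neighbours."""
    h, w = g.shape
    padded = np.pad(g, 1, constant_values=-np.inf)
    is_peak = g >= threshold
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr or dc:
                neighbour = padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w]
                is_peak &= g > neighbour
    peaks = np.argwhere(is_peak)
    return len(peaks), peaks
```

Unlike summing a density map, peak detection yields both a count and explicit locations, which is the property the Gaussian map representation is meant to provide.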
