Dieter Schmalstieg

A LoD of Gaussians: Unified Training and Rendering for Ultra-Large Scale Reconstruction with External Memory

Jul 01, 2025

Abstract:Gaussian Splatting has emerged as a high-performance technique for novel view synthesis, enabling real-time rendering and high-quality reconstruction of small scenes. However, scaling to larger environments has so far relied on partitioning the scene into chunks -- a strategy that introduces artifacts at chunk boundaries, complicates training across varying scales, and is poorly suited to unstructured scenarios such as city-scale flyovers combined with street-level views. Moreover, rendering remains fundamentally limited by GPU memory, as all visible chunks must reside in VRAM simultaneously. We introduce A LoD of Gaussians, a framework for training and rendering ultra-large-scale Gaussian scenes on a single consumer-grade GPU -- without partitioning. Our method stores the full scene out-of-core (e.g., in CPU memory) and trains a Level-of-Detail (LoD) representation directly, dynamically streaming only the relevant Gaussians. A hybrid data structure combining Gaussian hierarchies with Sequential Point Trees enables efficient, view-dependent LoD selection, while a lightweight caching and view scheduling system exploits temporal coherence to support real-time streaming and rendering. Together, these innovations enable seamless multi-scale reconstruction and interactive visualization of complex scenes -- from broad aerial views to fine-grained ground-level details.

SOF: Sorted Opacity Fields for Fast Unbounded Surface Reconstruction

Jun 23, 2025

Abstract:Recent advances in 3D Gaussian representations have significantly improved the quality and efficiency of image-based scene reconstruction. Their explicit nature facilitates real-time rendering and fast optimization, yet extracting accurate surfaces - particularly in large-scale, unbounded environments - remains a difficult task. Many existing methods rely on approximate depth estimates and global sorting heuristics, which can introduce artifacts and limit the fidelity of the reconstructed mesh. In this paper, we present Sorted Opacity Fields (SOF), a method designed to recover detailed surfaces from 3D Gaussians with both speed and precision. Our approach improves upon prior work by introducing hierarchical resorting and a robust formulation of Gaussian depth, which better aligns with the level-set. To enhance mesh quality, we incorporate a level-set regularizer operating on the opacity field and introduce losses that encourage geometrically-consistent primitive shapes. In addition, we develop a parallelized Marching Tetrahedra algorithm tailored to our opacity formulation, reducing meshing time by up to an order of magnitude. As demonstrated by our quantitative evaluation, SOF achieves higher reconstruction accuracy while cutting total processing time by more than a factor of three. These results mark a step forward in turning efficient Gaussian-based rendering into equally efficient geometry extraction.

AAA-Gaussians: Anti-Aliased and Artifact-Free 3D Gaussian Rendering

Apr 17, 2025

Abstract:Although 3D Gaussian Splatting (3DGS) has revolutionized 3D reconstruction, it still faces challenges such as aliasing, projection artifacts, and view inconsistencies, primarily due to the simplification of treating splats as 2D entities. We argue that incorporating full 3D evaluation of Gaussians throughout the 3DGS pipeline can effectively address these issues while preserving rasterization efficiency. Specifically, we introduce an adaptive 3D smoothing filter to mitigate aliasing and present a stable view-space bounding method that eliminates popping artifacts when Gaussians extend beyond the view frustum. Furthermore, we promote tile-based culling to 3D with screen-space planes, accelerating rendering and reducing sorting costs for hierarchical rasterization. Our method achieves state-of-the-art quality on in-distribution evaluation sets and significantly outperforms other approaches for out-of-distribution views. Our qualitative evaluations further demonstrate the effective removal of aliasing, distortions, and popping artifacts, ensuring real-time, artifact-free rendering.

Deep Medial Voxels: Learned Medial Axis Approximations for Anatomical Shape Modeling

Mar 18, 2024

Abstract:Shape reconstruction from imaging volumes is a recurring need in medical image analysis. Common workflows start with a segmentation step, followed by careful post-processing and, finally, ad hoc meshing algorithms. As this sequence can be time-consuming, neural networks are trained to reconstruct shapes through template deformation. These networks deliver state-of-the-art results without manual intervention, but, so far, they have primarily been evaluated on anatomical shapes with little topological variety between individuals. In contrast, other works favor learning implicit shape models, which have multiple benefits for meshing and visualization. Our work follows this direction by introducing deep medial voxels, a semi-implicit representation that faithfully approximates the topological skeleton from imaging volumes and eventually leads to shape reconstruction via convolution surfaces. Our reconstruction technique shows potential for both visualization and computer simulations.

DeepDR: Deep Structure-Aware RGB-D Inpainting for Diminished Reality

Dec 01, 2023

Abstract:Diminished reality (DR) refers to the removal of real objects from the environment by virtually replacing them with their background. Modern DR frameworks use inpainting to hallucinate unobserved regions. While recent deep learning-based inpainting is promising, the DR use case is complicated by the need to generate coherent structure and 3D geometry (i.e., depth), in particular for advanced applications, such as 3D scene editing. In this paper, we propose DeepDR, a first RGB-D inpainting framework fulfilling all requirements of DR: Plausible image and geometry inpainting with coherent structure, running at real-time frame rates, with minimal temporal artifacts. Our structure-aware generative network allows us to explicitly condition color and depth outputs on the scene semantics, overcoming the difficulty of reconstructing sharp and consistent boundaries in regions with complex backgrounds. Experimental results show that the proposed framework can outperform related work qualitatively and quantitatively.

The HoloLens in Medicine: A systematic Review and Taxonomy

Sep 06, 2022

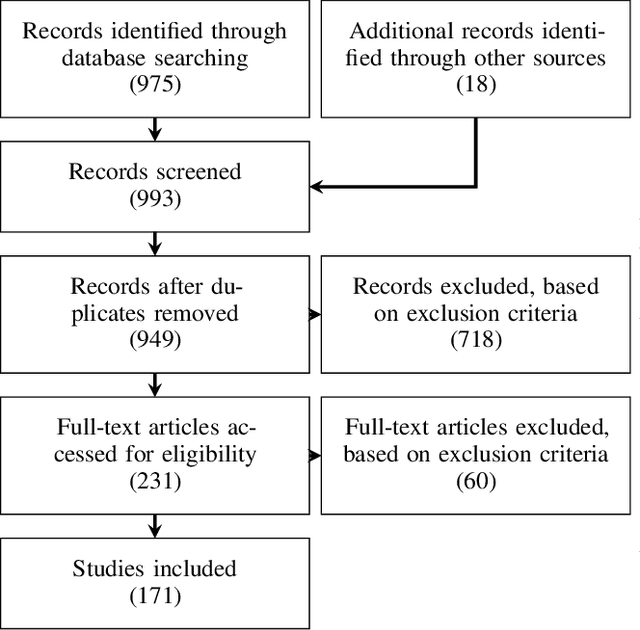

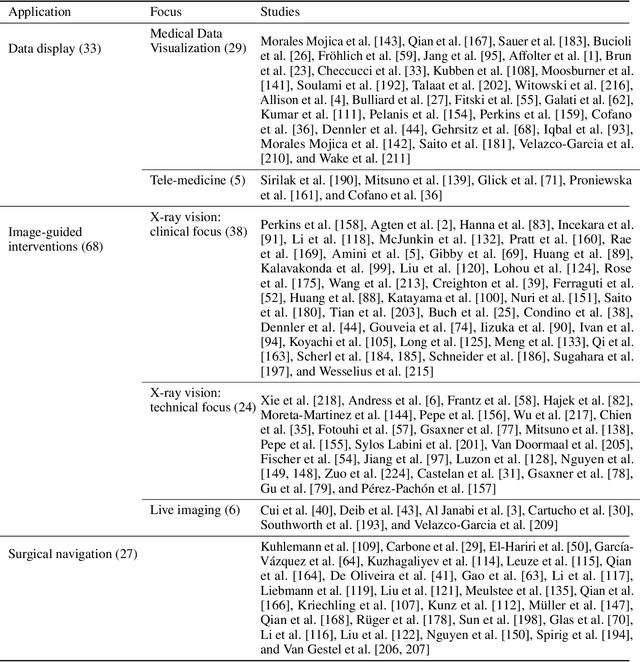

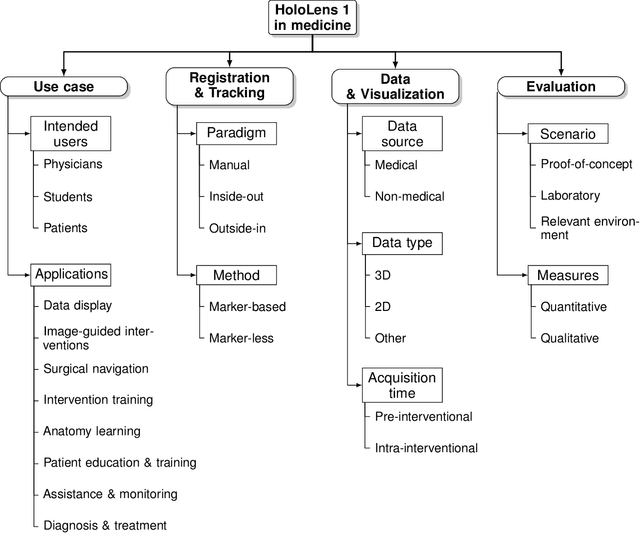

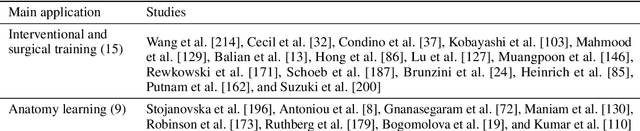

Abstract:The HoloLens (Microsoft Corp., Redmond, WA), a head-worn, optically see-through augmented reality display, is the main player in the recent boost in medical augmented reality research. In medical settings, the HoloLens enables the physician to obtain immediate insight into patient information, directly overlaid with their view of the clinical scenario, the medical student to gain a better understanding of complex anatomies or procedures, and even the patient to execute therapeutic tasks with improved, immersive guidance. In this systematic review, we provide a comprehensive overview of the usage of the first-generation HoloLens within the medical domain, from its release in March 2016 until 2021, when attention is shifting towards its successor, the HoloLens 2. We identified 171 relevant publications through a systematic search of the PubMed and Scopus databases. We analyze these publications with regard to their intended use case, technical methodology for registration and tracking, data sources, visualization, as well as validation and evaluation. We find that, although the feasibility of using the HoloLens in various medical scenarios has been shown, increased efforts in the areas of precision, reliability, usability, workflow and perception are necessary to establish AR in clinical practice.

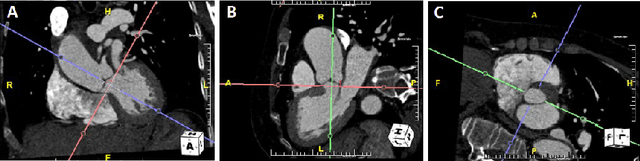

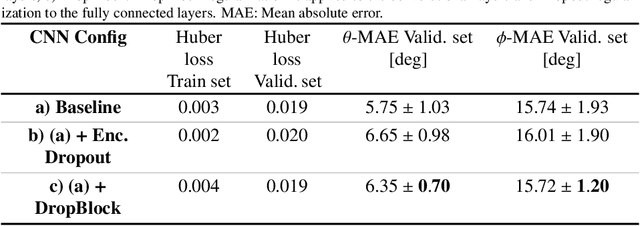

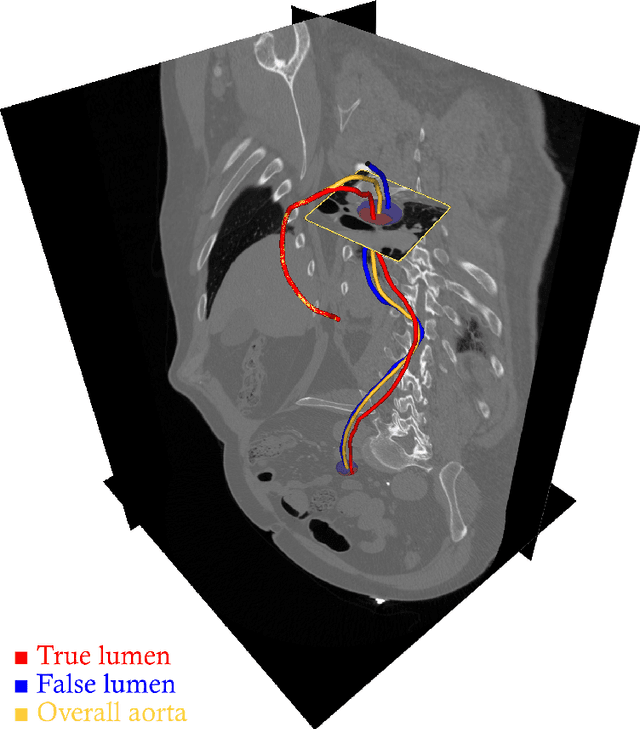

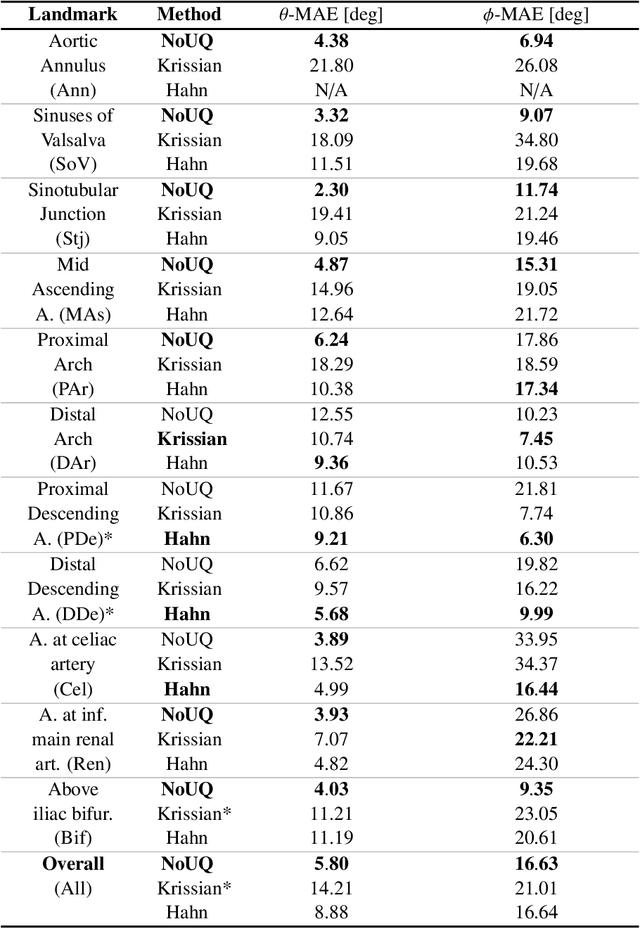

Automated cross-sectional view selection in CT angiography of aortic dissections with uncertainty awareness and retrospective clinical annotations

Nov 22, 2021

Abstract:Objective: Surveillance imaging of chronic aortic diseases, such as dissections, relies on obtaining and comparing cross-sectional diameter measurements at predefined aortic landmarks over time. Due to a lack of robust tools, the orientation of the cross-sectional planes is defined manually by highly trained operators. We show how manual annotations routinely collected in a clinic can be efficiently used to ease this task, despite the presence of a non-negligible interoperator variability in the measurements. Impact: Ill-posed but repetitive imaging tasks can be eased or automated by leveraging imperfect, retrospective clinical annotations. Methodology: In this work, we combine convolutional neural networks and uncertainty quantification methods to predict the orientation of such cross-sectional planes. We use clinical data randomly processed by 11 operators for training, and test on a smaller set processed by 3 independent operators to assess interoperator variability. Results: Our analysis shows that manual selection of cross-sectional planes is characterized by 95% limits of agreement (LOA) of $10.6^\circ$ and $21.4^\circ$ per angle. Our method decreased static error by $3.57^\circ$ ($40.2$%) and $4.11^\circ$ ($32.8$%) against state of the art and LOA by $5.4^\circ$ ($49.0$%) and $16.0^\circ$ ($74.6$%) against manual processing. Conclusion: This suggests that pre-existing annotations can be an inexpensive resource in clinics to ease ill-posed and repetitive tasks like cross-section extraction for surveillance of aortic dissections.
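
For readers unfamiliar with the 95% limits of agreement mentioned in this abstract, the sketch below shows one common way to compute them, the Bland-Altman form (mean difference plus or minus 1.96 standard deviations of the paired differences). The abstract does not state which formula the authors used, so this choice is an assumption, and the function name `limits_of_agreement` and the sample angles are purely illustrative.

```python
import statistics


def limits_of_agreement(measurements_a, measurements_b):
    """Return (lower, upper) 95% limits of agreement for paired measurements.

    Assumption: Bland-Altman definition, bias +/- 1.96 * sample SD of the
    paired differences. Requires at least two pairs.
    """
    if len(measurements_a) != len(measurements_b):
        raise ValueError("inputs must contain the same number of measurements")
    diffs = [a - b for a, b in zip(measurements_a, measurements_b)]
    bias = statistics.mean(diffs)
    half_width = 1.96 * statistics.stdev(diffs)
    return bias - half_width, bias + half_width


# Hypothetical plane angles (degrees) chosen by two operators on the same scans.
operator_1 = [12.0, 15.5, 9.0, 20.0]
operator_2 = [10.0, 16.0, 11.5, 18.0]
lower, upper = limits_of_agreement(operator_1, operator_2)
print(f"95% LOA: {lower:.2f} to {upper:.2f} degrees")
```

A narrower interval means two raters (or a method and a rater) agree more closely, which is the sense in which the abstract reports a reduction in LOA.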

DetectFusion: Detecting and Segmenting Both Known and Unknown Dynamic Objects in Real-time SLAM

Jul 22, 2019

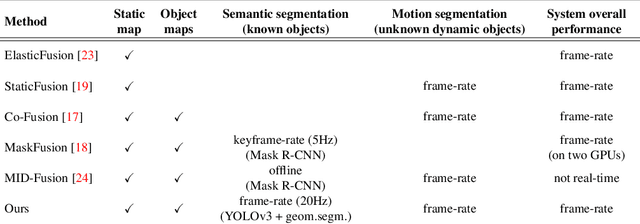

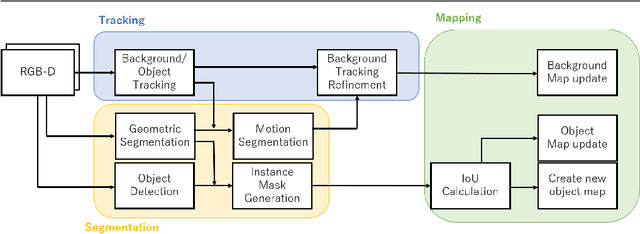

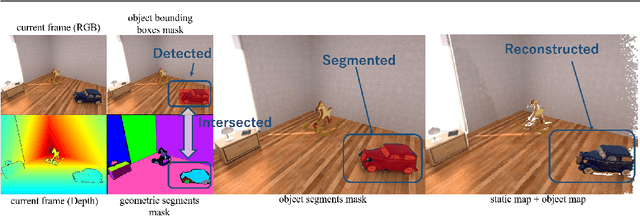

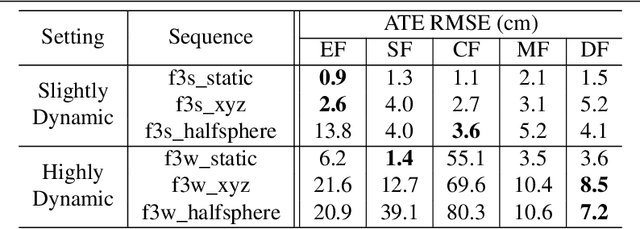

Abstract:We present DetectFusion, an RGB-D SLAM system that runs in real-time and can robustly handle semantically known and unknown objects that can move dynamically in the scene. Our system detects, segments and assigns semantic class labels to known objects in the scene, while tracking and reconstructing them even when they move independently in front of the monocular camera. In contrast to related work, we achieve real-time computational performance on semantic instance segmentation with a novel method combining 2D object detection and 3D geometric segmentation. In addition, we propose a method for detecting and segmenting the motion of semantically unknown objects, thus further improving the accuracy of camera tracking and map reconstruction. We show that our method performs on par with or better than previous work in terms of localization and object reconstruction accuracy, while achieving about 20 FPS even if the objects are segmented in each frame.

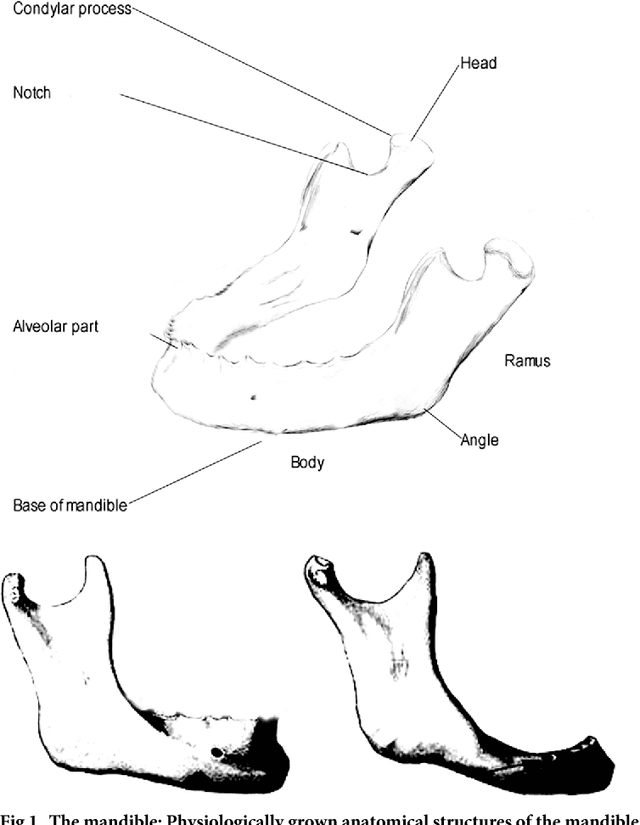

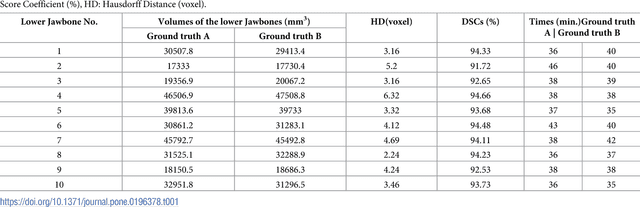

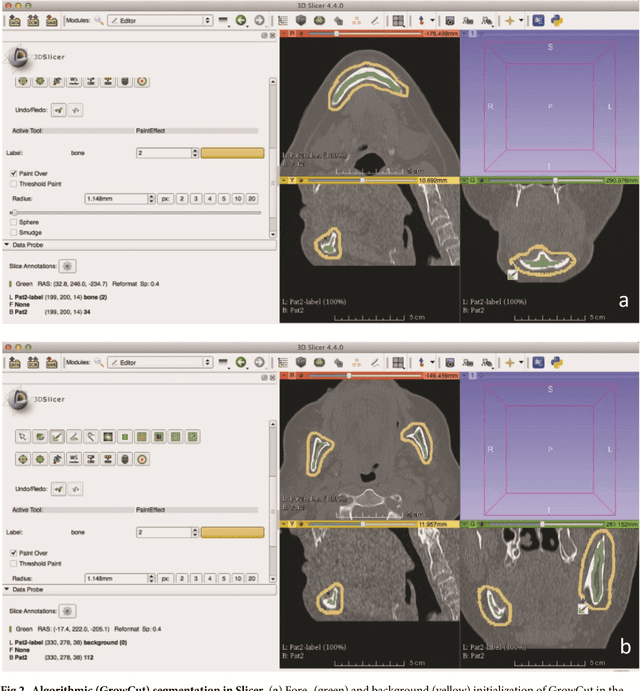

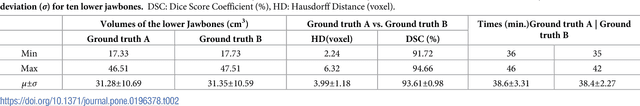

Clinical evaluation of semi-automatic opensource algorithmic software segmentation of the mandibular bone: Practical feasibility and assessment of a new course of action

May 11, 2018

Abstract:Computer-assisted technologies based on algorithmic software segmentation are a topic of increasing interest in complex surgical cases. However, due to functional instability, time-consuming software processes, personnel resources or license-based financial costs, many segmentation processes are often outsourced from clinical centers to third parties and the industry. Therefore, the aim of this trial was to assess the practical feasibility of an easily available, functionally stable and license-free segmentation approach to be used in clinical practice. In this retrospective, randomized, controlled trial, the accuracy and accordance of the open-source based segmentation algorithm GrowCut (GC) was assessed through comparison to the manually generated ground truth of the same anatomy using 10 CT lower jaw data-sets from the clinical routine. Assessment parameters were the segmentation time, the volume, the voxel number, the Dice Score (DSC) and the Hausdorff distance (HD). Overall segmentation times were about one minute. Mean DSC values of over 85% and HD below 33.5 voxels could be achieved. Statistical differences between the assessment parameters were not significant (p<0.05) and correlation coefficients were close to the value one (r > 0.94). Completely functionally stable and time-saving segmentations with high accuracy and high positive correlation could be performed by the presented interactive open-source based approach. In the cranio-maxillofacial complex, the method used could represent an algorithmic alternative for image-based segmentation in clinical practice, e.g. for surgical treatment planning or visualization of postoperative results, and offers several advantages. Systematic comparisons to other segmentation approaches or with a larger amount of data are areas of future work.

* 26 pages
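
The Dice Score and Hausdorff distance named in this abstract have standard textbook definitions, sketched below for illustration. This is not the evaluation code from the paper: the function names, the flat-list mask layout, and the brute-force point-set Hausdorff computation are assumptions made to keep the example short and dependency-free.

```python
import math


def dice_score(pred, truth):
    """Dice similarity coefficient between two flat binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    overlap = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return 2.0 * overlap / total


def hausdorff_distance(points_a, points_b):
    """Symmetric Hausdorff distance between two non-empty point sets.

    Brute force, O(len(a) * len(b)); fine for small illustrative inputs.
    """
    def directed(src, dst):
        # Largest distance from any source point to its nearest target point.
        return max(min(math.dist(p, q) for q in dst) for p in src)

    return max(directed(points_a, points_b), directed(points_b, points_a))


# Hypothetical toy masks and voxel coordinates.
print(dice_score([1, 1, 0, 0], [1, 0, 0, 0]))
print(hausdorff_distance([(0, 0)], [(0, 0), (3, 4)]))
```

DSC rewards volumetric overlap, while HD reports the worst-case boundary deviation, which is why segmentation studies often report both.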

In-depth Assessment of an Interactive Graph-based Approach for the Segmentation for Pancreatic Metastasis in Ultrasound Acquisitions of the Liver with two Specialists in Internal Medicine

Mar 12, 2018

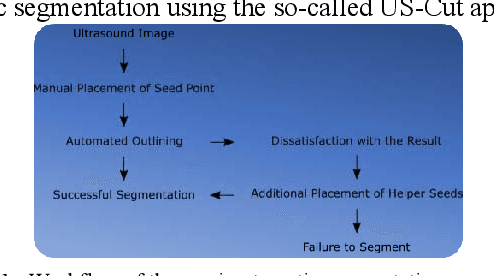

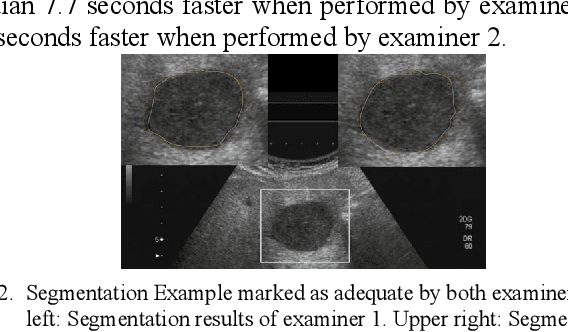

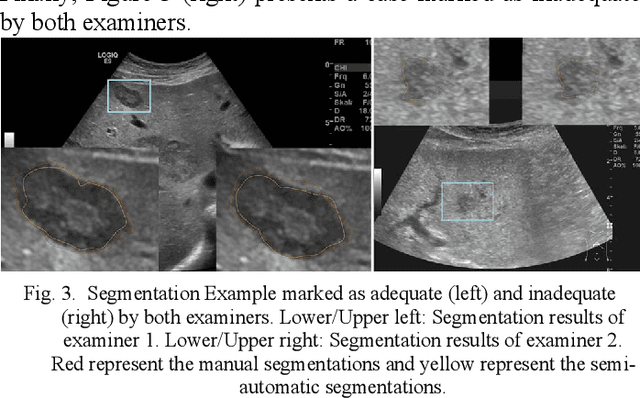

Abstract:The manual outlining of hepatic metastasis in ultrasound (US) acquisitions from patients suffering from pancreatic cancer is common practice. However, such purely manual measurements are often very time-consuming, and the results repeatedly differ between raters. In this contribution, we present an in-depth assessment of an interactive graph-based approach for the segmentation of pancreatic metastasis in US images of the liver with two specialists in Internal Medicine. We evaluate the approach with over one hundred different acquisitions of metastases. Neither the two physicians nor the algorithm had assessed the acquisitions before the evaluation. In summary, the physicians first performed a purely manual outlining, followed by an algorithmic segmentation over one month later. As a result, the experts were satisfied with up to ninety percent of the algorithmic segmentation results. Furthermore, the algorithmic segmentation was much faster than manual outlining and achieved a median Dice Similarity Coefficient (DSC) of over eighty percent. Ultimately, the algorithm enables a fast and accurate segmentation of liver metastasis in clinical US images, which can support the manual outlining in daily practice.
