Who Should Predict? Exact Algorithms For Learning to Defer to Humans

Jan 15, 2023Hussein Mozannar, Hunter Lang, Dennis Wei, Prasanna Sattigeri, Subhro Das, David Sontag

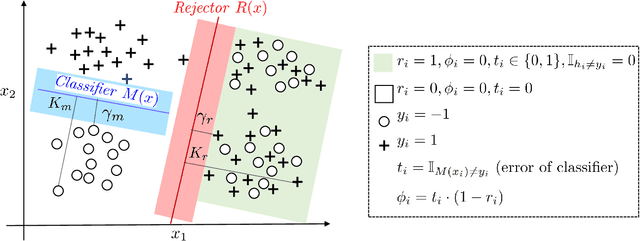

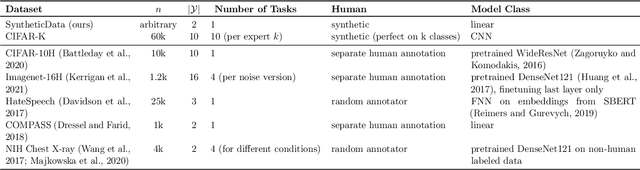

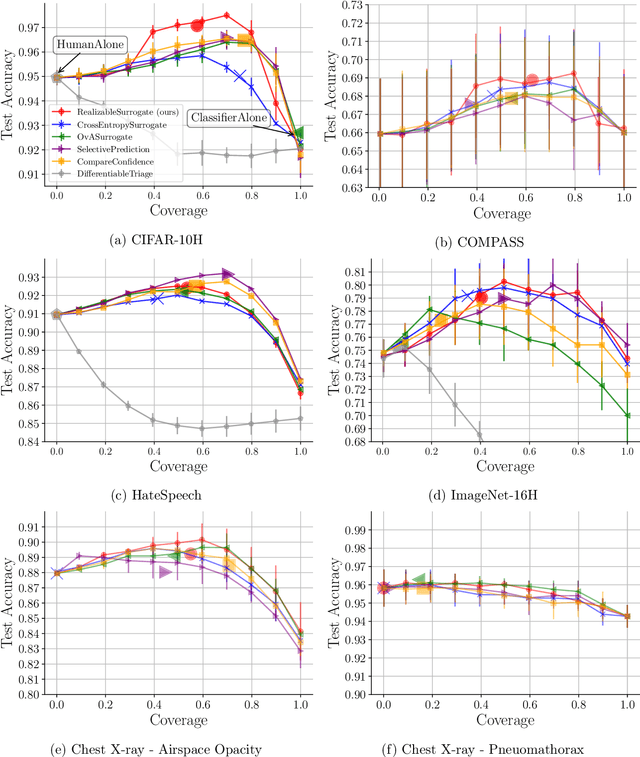

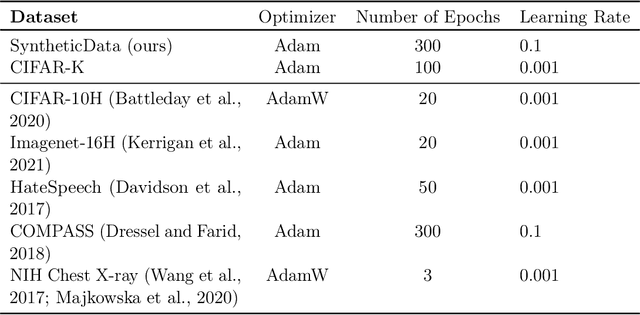

Automated AI classifiers should be able to defer the prediction to a human decision maker to ensure more accurate predictions. In this work, we jointly train a classifier with a rejector, which decides on each data point whether the classifier or the human should predict. We show that prior approaches can fail to find a human-AI system with low misclassification error even when there exists a linear classifier and rejector that have zero error (the realizable setting). We prove that obtaining a linear pair with low error is NP-hard even when the problem is realizable. To complement this negative result, we give a mixed-integer-linear-programming (MILP) formulation that can optimally solve the problem in the linear setting. However, the MILP only scales to moderately-sized problems. Therefore, we provide a novel surrogate loss function that is realizable-consistent and performs well empirically. We test our approaches on a comprehensive set of datasets and compare to a wide range of baselines.

On the Safety of Interpretable Machine Learning: A Maximum Deviation Approach

Nov 02, 2022Dennis Wei, Rahul Nair, Amit Dhurandhar, Kush R. Varshney, Elizabeth M. Daly, Moninder Singh

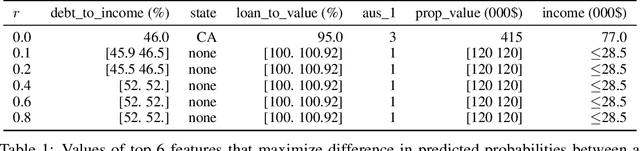

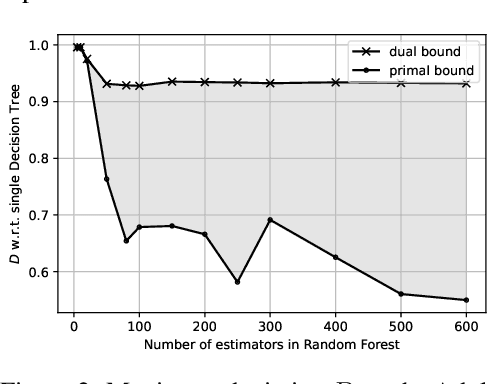

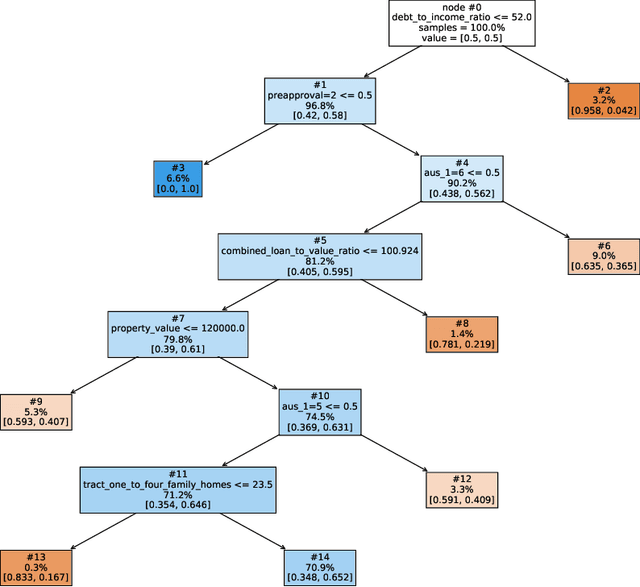

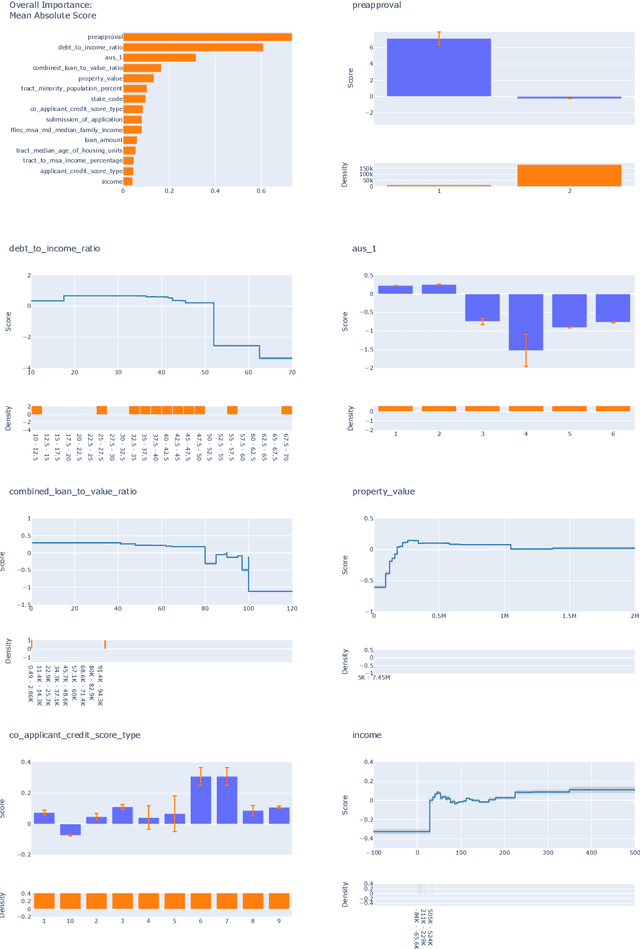

Interpretable and explainable machine learning has seen a recent surge of interest. We focus on safety as a key motivation behind the surge and make the relationship between interpretability and safety more quantitative. Toward assessing safety, we introduce the concept of maximum deviation via an optimization problem to find the largest deviation of a supervised learning model from a reference model regarded as safe. We then show how interpretability facilitates this safety assessment. For models including decision trees, generalized linear and additive models, the maximum deviation can be computed exactly and efficiently. For tree ensembles, which are not regarded as interpretable, discrete optimization techniques can still provide informative bounds. For a broader class of piecewise Lipschitz functions, we leverage the multi-armed bandit literature to show that interpretability produces tighter (regret) bounds on the maximum deviation. We present case studies, including one on mortgage approval, to illustrate our methods and the insights about models that may be obtained from deviation maximization.

Downstream Fairness Caveats with Synthetic Healthcare Data

Mar 09, 2022Karan Bhanot, Ioana Baldini, Dennis Wei, Jiaming Zeng, Kristin P. Bennett

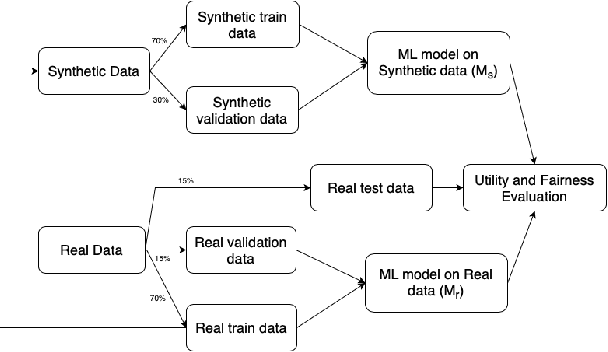

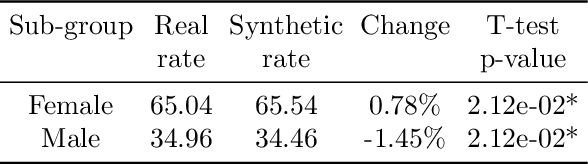

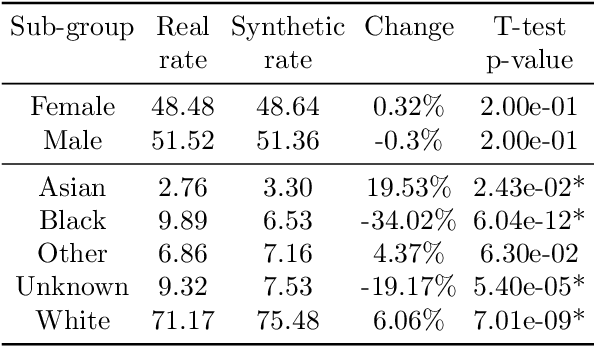

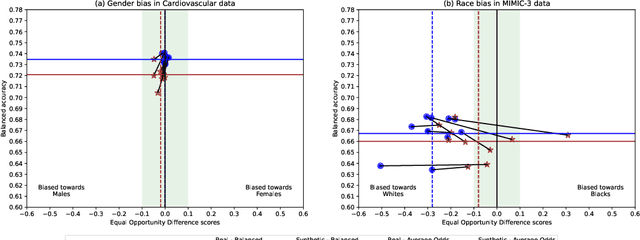

This paper evaluates synthetically generated healthcare data for biases and investigates the effect of fairness mitigation techniques on utility-fairness. Privacy laws limit access to health data such as Electronic Medical Records (EMRs) to preserve patient privacy. Albeit essential, these laws hinder research reproducibility. Synthetic data is a viable solution that can enable access to data similar to real healthcare data without privacy risks. Healthcare datasets may have biases in which certain protected groups might experience worse outcomes than others. With the real data having biases, the fairness of synthetically generated health data comes into question. In this paper, we evaluate the fairness of models generated on two healthcare datasets for gender and race biases. We generate synthetic versions of the dataset using a Generative Adversarial Network called HealthGAN, and compare the real and synthetic model's balanced accuracy and fairness scores. We find that synthetic data has different fairness properties compared to real data and fairness mitigation techniques perform differently, highlighting that synthetic data is not bias free.

Analogies and Feature Attributions for Model Agnostic Explanation of Similarity Learners

Feb 02, 2022Karthikeyan Natesan Ramamurthy, Amit Dhurandhar, Dennis Wei, Zaid Bin Tariq

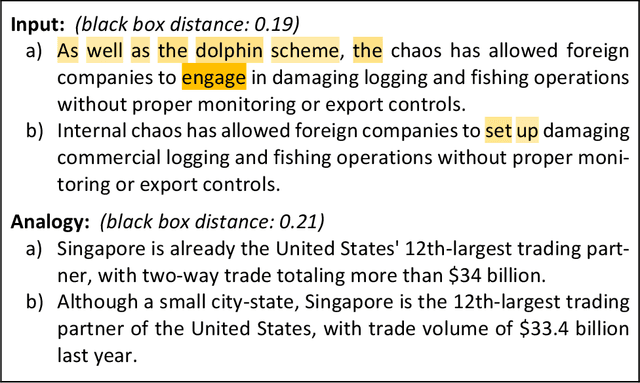

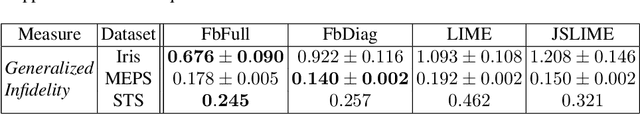

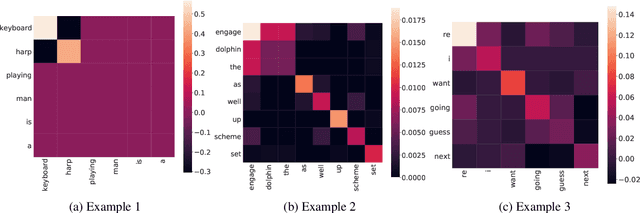

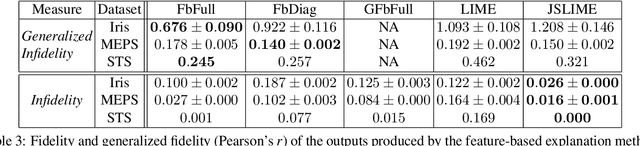

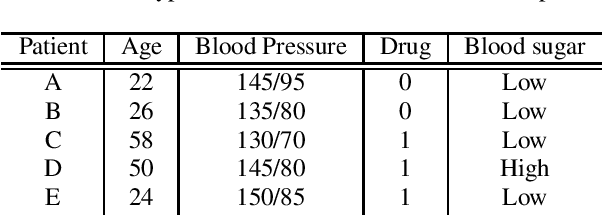

Post-hoc explanations for black box models have been studied extensively in classification and regression settings. However, explanations for models that output similarity between two inputs have received comparatively lesser attention. In this paper, we provide model agnostic local explanations for similarity learners applicable to tabular and text data. We first propose a method that provides feature attributions to explain the similarity between a pair of inputs as determined by a black box similarity learner. We then propose analogies as a new form of explanation in machine learning. Here the goal is to identify diverse analogous pairs of examples that share the same level of similarity as the input pair and provide insight into (latent) factors underlying the model's prediction. The selection of analogies can optionally leverage feature attributions, thus connecting the two forms of explanation while still maintaining complementarity. We prove that our analogy objective function is submodular, making the search for good-quality analogies efficient. We apply the proposed approaches to explain similarities between sentences as predicted by a state-of-the-art sentence encoder, and between patients in a healthcare utilization application. Efficacy is measured through quantitative evaluations, a careful user study, and examples of explanations.

FROTE: Feedback Rule-Driven Oversampling for Editing Models

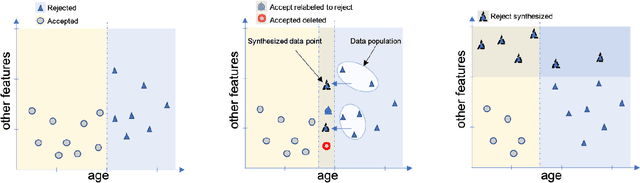

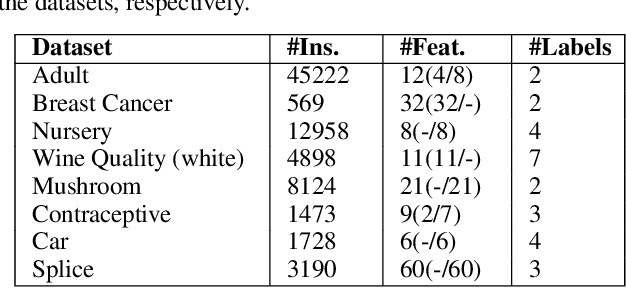

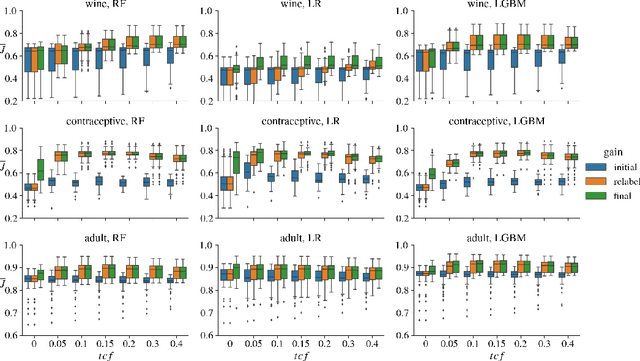

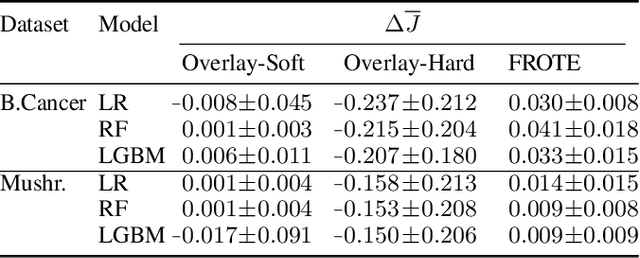

Jan 06, 2022Öznur Alkan, Dennis Wei, Massimiliano Mattetti, Rahul Nair, Elizabeth M. Daly, Diptikalyan Saha

Machine learning models may involve decision boundaries that change over time due to updates to rules and regulations, such as in loan approvals or claims management. However, in such scenarios, it may take time for sufficient training data to accumulate in order to retrain the model to reflect the new decision boundaries. While work has been done to reinforce existing decision boundaries, very little has been done to cover these scenarios where decision boundaries of the ML models should change in order to reflect new rules. In this paper, we focus on user-provided feedback rules as a way to expedite the ML models update process, and we formally introduce the problem of pre-processing training data to edit an ML model in response to feedback rules such that once the model is retrained on the pre-processed data, its decision boundaries align more closely with the rules. To solve this problem, we propose a novel data augmentation method, the Feedback Rule-Based Oversampling Technique. Extensive experiments using different ML models and real world datasets demonstrate the effectiveness of the method, in particular the benefit of augmentation and the ability to handle many feedback rules.

Ground-Truth, Whose Truth? -- Examining the Challenges with Annotating Toxic Text Datasets

Dec 07, 2021Kofi Arhin, Ioana Baldini, Dennis Wei, Karthikeyan Natesan Ramamurthy, Moninder Singh

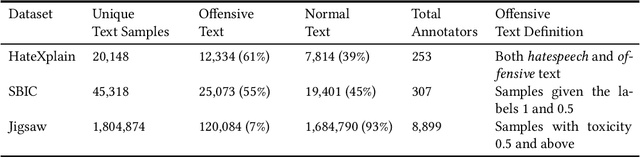

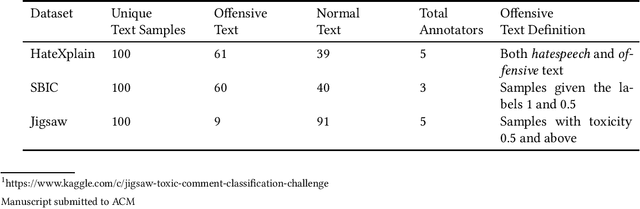

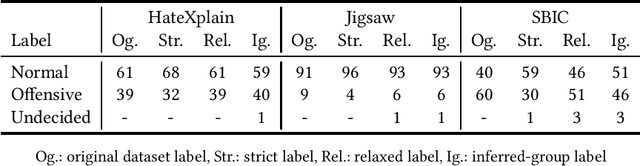

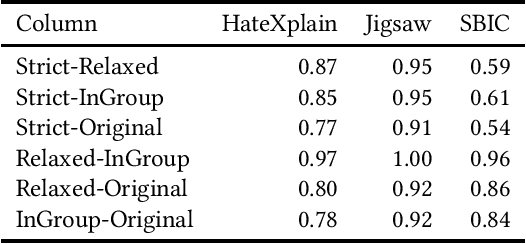

The use of machine learning (ML)-based language models (LMs) to monitor content online is on the rise. For toxic text identification, task-specific fine-tuning of these models are performed using datasets labeled by annotators who provide ground-truth labels in an effort to distinguish between offensive and normal content. These projects have led to the development, improvement, and expansion of large datasets over time, and have contributed immensely to research on natural language. Despite the achievements, existing evidence suggests that ML models built on these datasets do not always result in desirable outcomes. Therefore, using a design science research (DSR) approach, this study examines selected toxic text datasets with the goal of shedding light on some of the inherent issues and contributing to discussions on navigating these challenges for existing and future projects. To achieve the goal of the study, we re-annotate samples from three toxic text datasets and find that a multi-label approach to annotating toxic text samples can help to improve dataset quality. While this approach may not improve the traditional metric of inter-annotator agreement, it may better capture dependence on context and diversity in annotators. We discuss the implications of these results for both theory and practice.

Interpretable and Fair Boolean Rule Sets via Column Generation

Nov 16, 2021Connor Lawless, Sanjeeb Dash, Oktay Gunluk, Dennis Wei

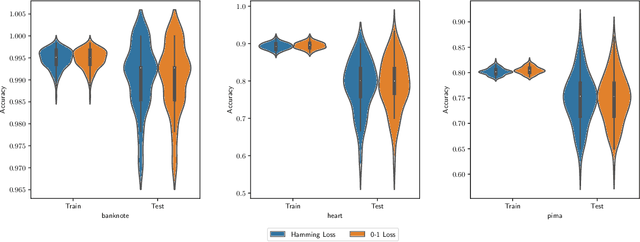

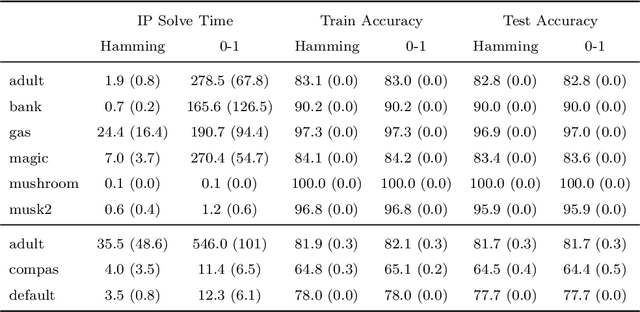

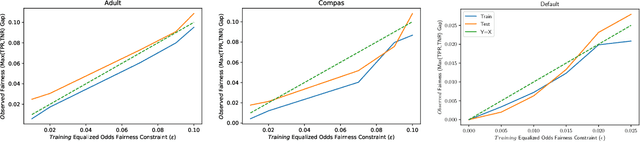

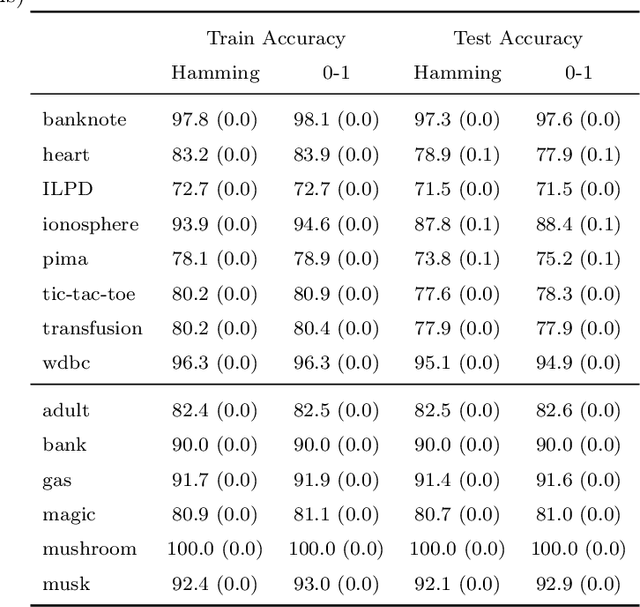

This paper considers the learning of Boolean rules in either disjunctive normal form (DNF, OR-of-ANDs, equivalent to decision rule sets) or conjunctive normal form (CNF, AND-of-ORs) as an interpretable model for classification. An integer program is formulated to optimally trade classification accuracy for rule simplicity. We also consider the fairness setting and extend the formulation to include explicit constraints on two different measures of classification parity: equality of opportunity and equalized odds. Column generation (CG) is used to efficiently search over an exponential number of candidate clauses (conjunctions or disjunctions) without the need for heuristic rule mining. This approach also bounds the gap between the selected rule set and the best possible rule set on the training data. To handle large datasets, we propose an approximate CG algorithm using randomization. Compared to three recently proposed alternatives, the CG algorithm dominates the accuracy-simplicity trade-off in 8 out of 16 datasets. When maximized for accuracy, CG is competitive with rule learners designed for this purpose, sometimes finding significantly simpler solutions that are no less accurate. Compared to other fair and interpretable classifiers, our method is able to find rule sets that meet stricter notions of fairness with a modest trade-off in accuracy.

AI Explainability 360: Impact and Design

Sep 24, 2021Vijay Arya, Rachel K. E. Bellamy, Pin-Yu Chen, Amit Dhurandhar, Michael Hind, Samuel C. Hoffman, Stephanie Houde, Q. Vera Liao, Ronny Luss, Aleksandra Mojsilovic, Sami Mourad, Pablo Pedemonte, Ramya Raghavendra, John Richards, Prasanna Sattigeri, Karthikeyan Shanmugam, Moninder Singh, Kush R. Varshney, Dennis Wei, Yunfeng Zhang

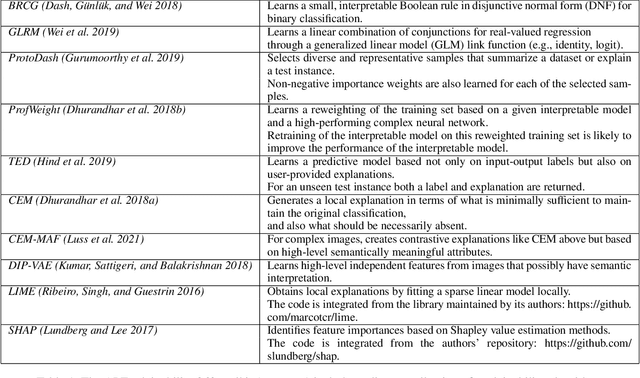

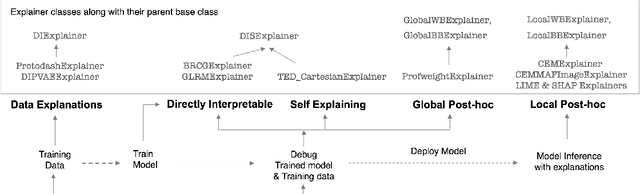

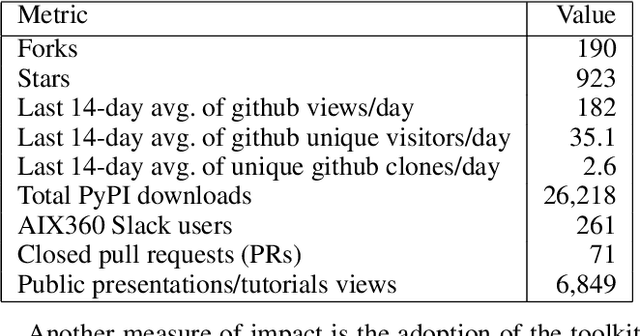

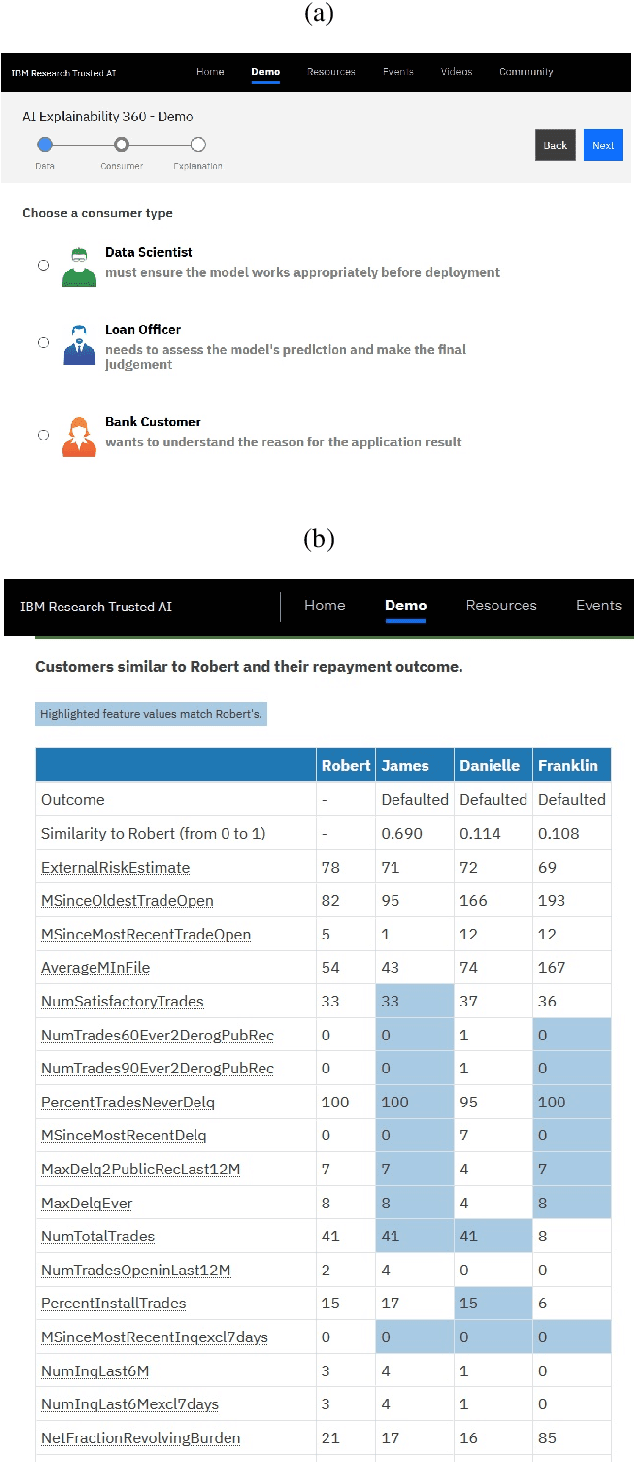

As artificial intelligence and machine learning algorithms become increasingly prevalent in society, multiple stakeholders are calling for these algorithms to provide explanations. At the same time, these stakeholders, whether they be affected citizens, government regulators, domain experts, or system developers, have different explanation needs. To address these needs, in 2019, we created AI Explainability 360 (Arya et al. 2020), an open source software toolkit featuring ten diverse and state-of-the-art explainability methods and two evaluation metrics. This paper examines the impact of the toolkit with several case studies, statistics, and community feedback. The different ways in which users have experienced AI Explainability 360 have resulted in multiple types of impact and improvements in multiple metrics, highlighted by the adoption of the toolkit by the independent LF AI & Data Foundation. The paper also describes the flexible design of the toolkit, examples of its use, and the significant educational material and documentation available to its users.

Your fairness may vary: Group fairness of pretrained language models in toxic text classification

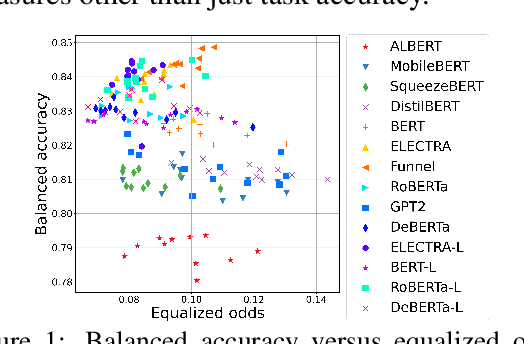

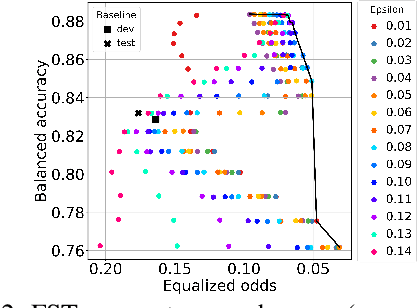

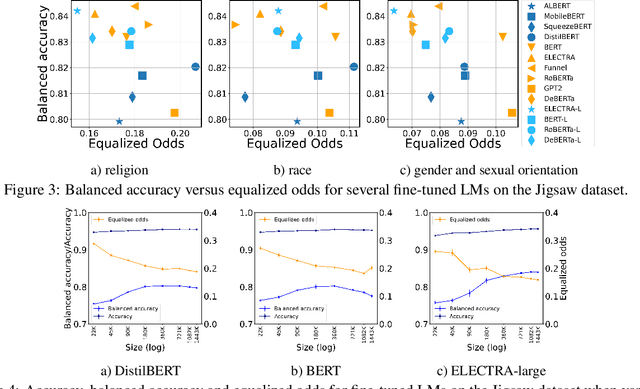

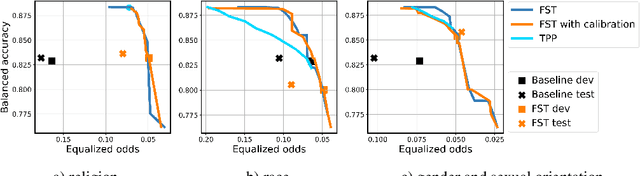

Aug 03, 2021Ioana Baldini, Dennis Wei, Karthikeyan Natesan Ramamurthy, Mikhail Yurochkin, Moninder Singh

We study the performance-fairness trade-off in more than a dozen fine-tuned LMs for toxic text classification. We empirically show that no blanket statement can be made with respect to the bias of large versus regular versus compressed models. Moreover, we find that focusing on fairness-agnostic performance metrics can lead to models with varied fairness characteristics.

Treatment Effect Estimation using Invariant Risk Minimization

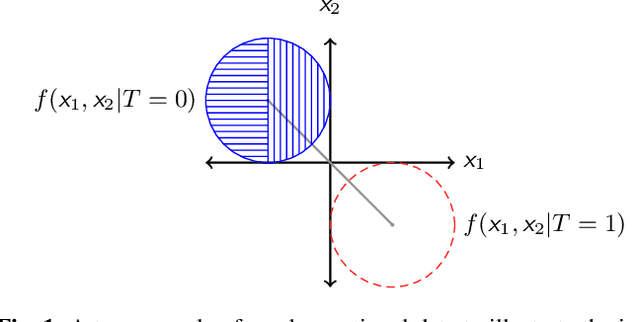

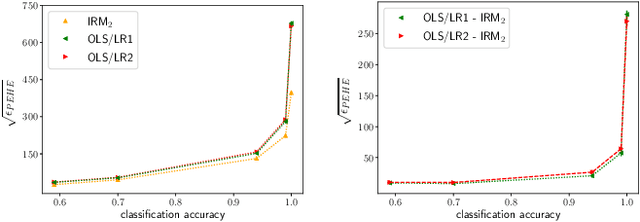

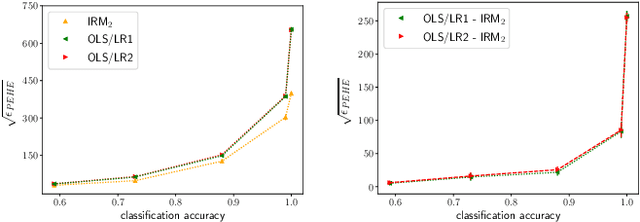

Mar 13, 2021Abhin Shah, Kartik Ahuja, Karthikeyan Shanmugam, Dennis Wei, Kush Varshney, Amit Dhurandhar

Inferring causal individual treatment effect (ITE) from observational data is a challenging problem whose difficulty is exacerbated by the presence of treatment assignment bias. In this work, we propose a new way to estimate the ITE using the domain generalization framework of invariant risk minimization (IRM). IRM uses data from multiple domains, learns predictors that do not exploit spurious domain-dependent factors, and generalizes better to unseen domains. We propose an IRM-based ITE estimator aimed at tackling treatment assignment bias when there is little support overlap between the control group and the treatment group. We accomplish this by creating diversity: given a single dataset, we split the data into multiple domains artificially. These diverse domains are then exploited by IRM to more effectively generalize regression-based models to data regions that lack support overlap. We show gains over classical regression approaches to ITE estimation in settings when support mismatch is more pronounced.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge