Chuanyao Zhang

Machine Unlearning Methodology base on Stochastic Teacher Network

Aug 28, 2023

Abstract: The rise of the phenomenon of the "right to be forgotten" has prompted research on machine unlearning, which grants data owners the right to actively withdraw data that has been used for model training, and requires the elimination of the contribution of that data to the model. A simple method to achieve this is to use the remaining data to retrain the model, but this is not acceptable for other data owners who continue to participate in training. Existing machine unlearning methods have been found to be ineffective in quickly removing knowledge from deep learning models. This paper proposes using a stochastic network as a teacher to expedite the mitigation of the influence caused by forgotten data on the model. We performed experiments on three datasets, and the findings demonstrate that our approach can efficiently mitigate the influence of target data on the model within a single epoch. This allows for one-time erasure and reconstruction of the model, and the reconstruction model achieves the same performance as the retrained model.

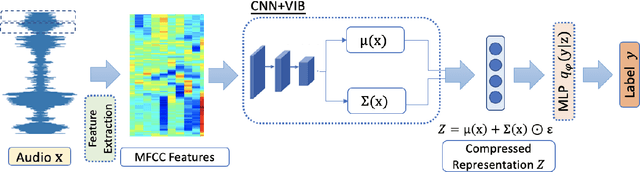

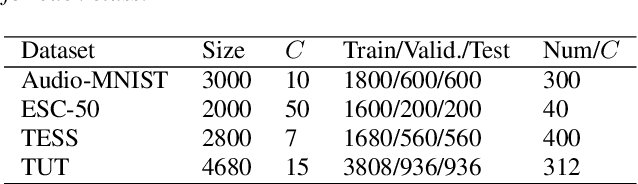

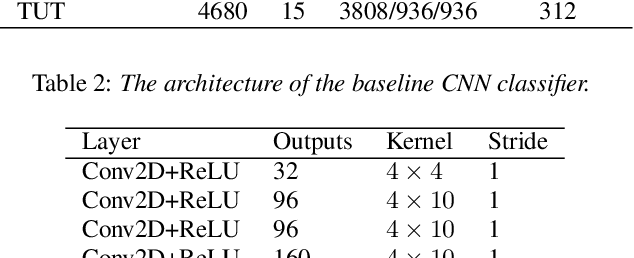

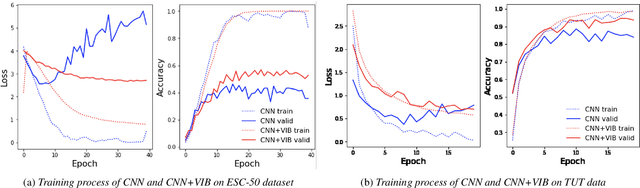

Variational Information Bottleneck for Effective Low-resource Audio Classification

Jul 10, 2021

Abstract: Large-scale deep neural networks (DNNs) such as convolutional neural networks (CNNs) have achieved impressive performance in audio classification for their powerful capacity and strong generalization ability. However, when training a DNN model on low-resource tasks, it is usually prone to overfitting the small data and learning too much redundant information. To address this issue, we propose to use variational information bottleneck (VIB) to mitigate overfitting and suppress irrelevant information. In this work, we conduct experiments on a 4-layer CNN. However, the VIB framework is ready-to-use and could be easily utilized with many other state-of-the-art network architectures. Evaluation on a few audio datasets shows that our approach significantly outperforms baseline methods, yielding more than 5.0% improvement in terms of classification accuracy in some low-resource settings.
