Learning for Multi-robot Cooperation in Partially Observable Stochastic Environments with Macro-actions

Aug 18, 2017 · Miao Liu, Kavinayan Sivakumar, Shayegan Omidshafiei, Christopher Amato, Jonathan P. How

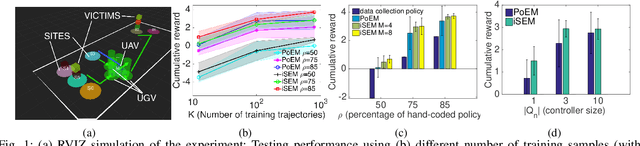

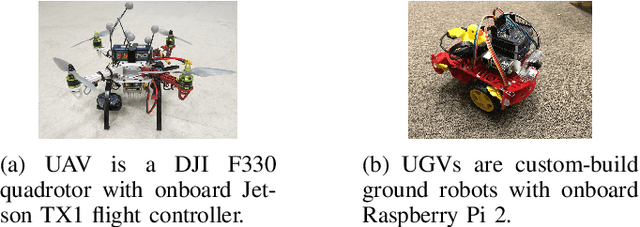

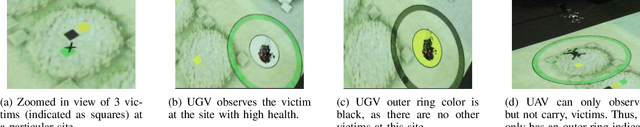

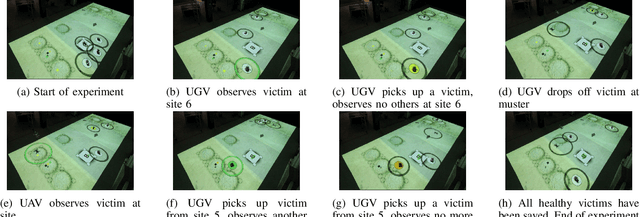

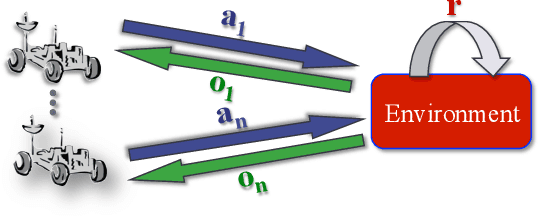

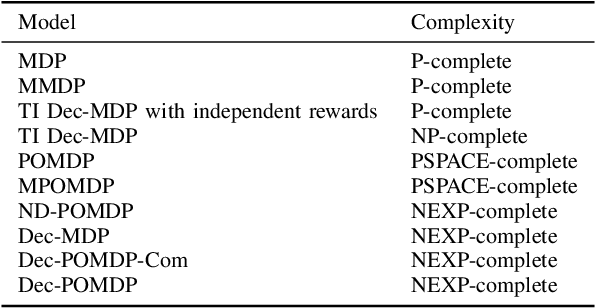

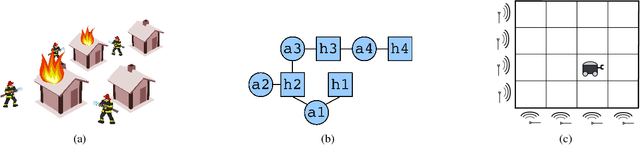

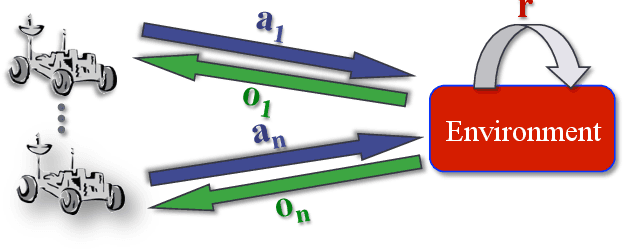

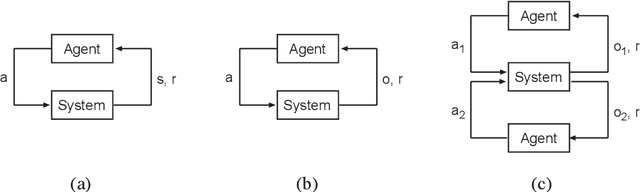

This paper presents a data-driven approach for multi-robot coordination in partially observable domains based on Decentralized Partially Observable Markov Decision Processes (Dec-POMDPs) and macro-actions (MAs). Dec-POMDPs provide a general framework for cooperative sequential decision making under uncertainty, and MAs allow temporally extended and asynchronous action execution. To date, most methods assume the underlying Dec-POMDP model is known a priori or a full simulator is available during planning time. Previous methods that aim to address these issues suffer from local optimality and sensitivity to initial conditions. Additionally, few hardware demonstrations involving a large team of heterogeneous robots and with long planning horizons exist. This work addresses these gaps by proposing an iterative sampling-based Expectation-Maximization algorithm (iSEM) to learn policies using only trajectory data containing observations, MAs, and rewards. Our experiments show the algorithm is able to achieve better solution quality than the state-of-the-art learning-based methods. We implement two variants of multi-robot Search and Rescue (SAR) domains (with and without obstacles) on hardware to demonstrate that the learned policies can effectively control a team of distributed robots to cooperate in a partially observable stochastic environment.

Deep Decentralized Multi-task Multi-Agent Reinforcement Learning under Partial Observability

Jul 13, 2017 · Shayegan Omidshafiei, Jason Pazis, Christopher Amato, Jonathan P. How, John Vian

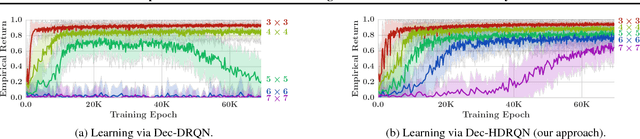

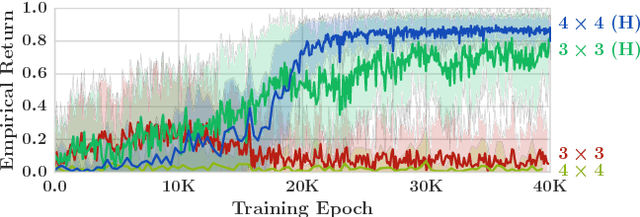

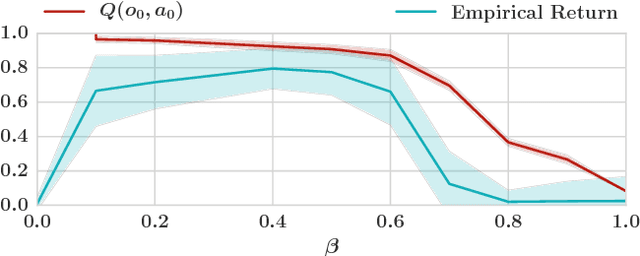

Many real-world tasks involve multiple agents with partial observability and limited communication. Learning is challenging in these settings due to local viewpoints of agents, which perceive the world as non-stationary due to concurrently-exploring teammates. Approaches that learn specialized policies for individual tasks face problems when applied to the real world: not only do agents have to learn and store distinct policies for each task, but in practice identities of tasks are often non-observable, making these approaches inapplicable. This paper formalizes and addresses the problem of multi-task multi-agent reinforcement learning under partial observability. We introduce a decentralized single-task learning approach that is robust to concurrent interactions of teammates, and present an approach for distilling single-task policies into a unified policy that performs well across multiple related tasks, without explicit provision of task identity.

* Accepted to ICML 2017

Scalable Accelerated Decentralized Multi-Robot Policy Search in Continuous Observation Spaces

Mar 16, 2017 · Shayegan Omidshafiei, Christopher Amato, Miao Liu, Michael Everett, Jonathan P. How, John Vian

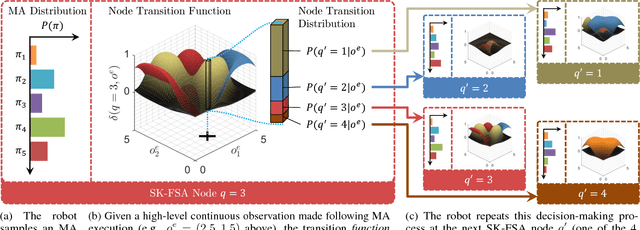

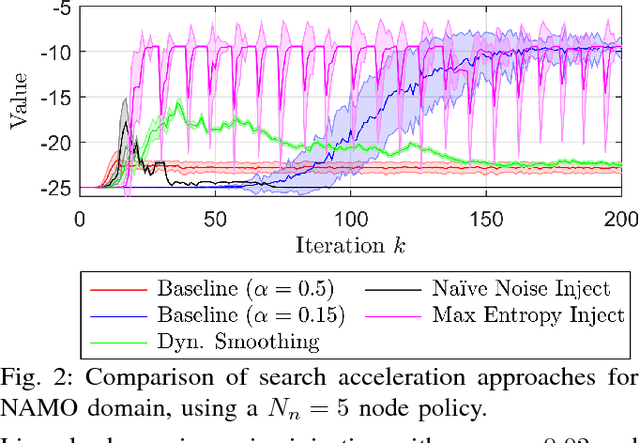

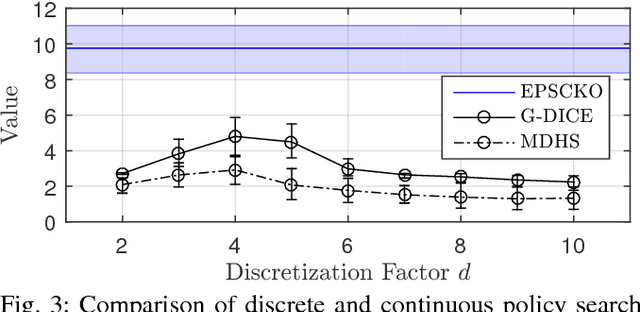

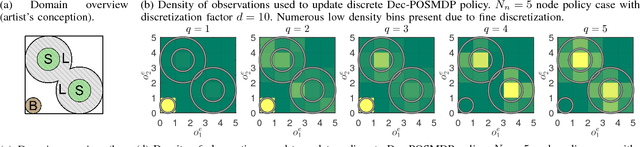

This paper presents the first ever approach for solving *continuous-observation* Decentralized Partially Observable Markov Decision Processes (Dec-POMDPs) and their semi-Markovian counterparts, Dec-POSMDPs. This contribution is especially important in robotics, where a vast number of sensors provide continuous observation data. A continuous-observation policy representation is introduced using Stochastic Kernel-based Finite State Automata (SK-FSAs). An SK-FSA search algorithm titled Entropy-based Policy Search using Continuous Kernel Observations (EPSCKO) is introduced and applied to the first ever continuous-observation Dec-POMDP/Dec-POSMDP domain, where it significantly outperforms state-of-the-art discrete approaches. This methodology is equally applicable to Dec-POMDPs and Dec-POSMDPs, though the empirical analysis presented focuses on Dec-POSMDPs due to their higher scalability. To improve convergence, an entropy injection policy search acceleration approach for both continuous and discrete observation cases is also developed and shown to improve convergence rates without degrading policy quality.

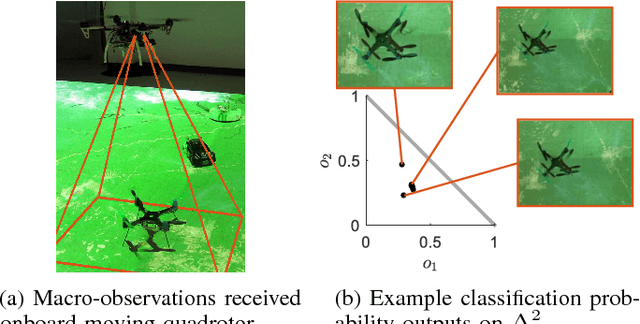

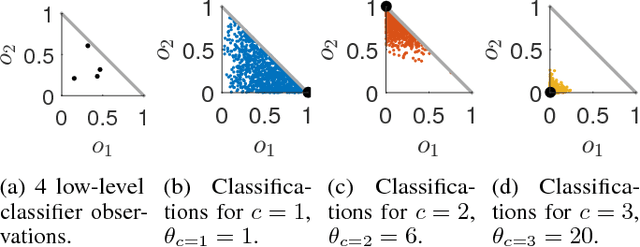

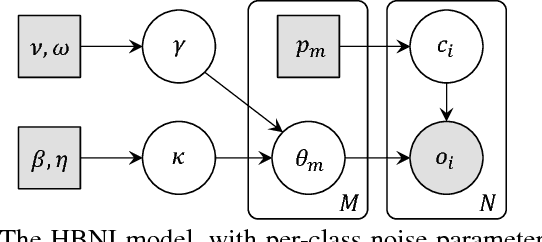

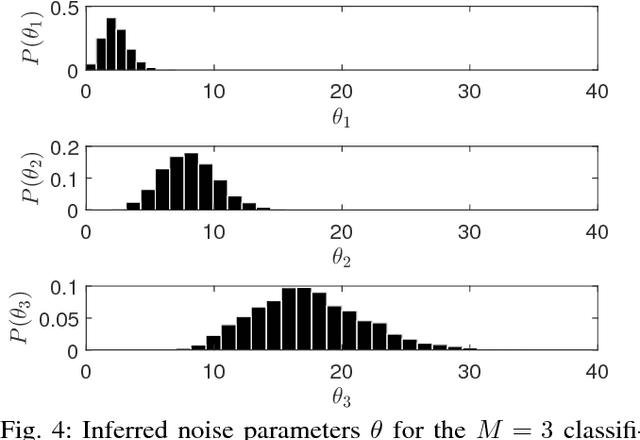

Semantic-level Decentralized Multi-Robot Decision-Making using Probabilistic Macro-Observations

Mar 16, 2017 · Shayegan Omidshafiei, Shih-Yuan Liu, Michael Everett, Brett T. Lopez, Christopher Amato, Miao Liu, Jonathan P. How, John Vian

Robust environment perception is essential for decision-making on robots operating in complex domains. Intelligent task execution requires principled treatment of uncertainty sources in a robot's observation model. This is important not only for low-level observations (e.g., accelerometer data), but also for high-level observations such as semantic object labels. This paper formalizes the concept of macro-observations in Decentralized Partially Observable Semi-Markov Decision Processes (Dec-POSMDPs), allowing scalable semantic-level multi-robot decision making. A hierarchical Bayesian approach is used to model noise statistics of low-level classifier outputs, while simultaneously allowing sharing of domain noise characteristics between classes. Classification accuracy of the proposed macro-observation scheme, called Hierarchical Bayesian Noise Inference (HBNI), is shown to exceed existing methods. The macro-observation scheme is then integrated into a Dec-POSMDP planner, with hardware experiments running onboard a team of dynamic quadrotors in a challenging domain where noise-agnostic filtering fails. To the best of our knowledge, this is the first demonstration of a real-time, convolutional neural net-based classification framework running fully onboard a team of quadrotors in a multi-robot decision-making domain.

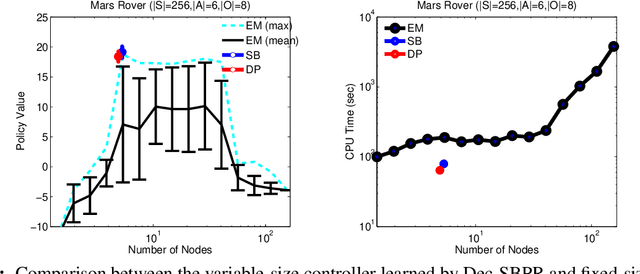

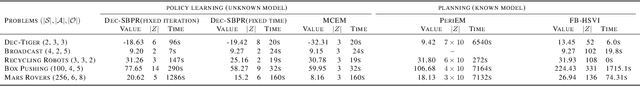

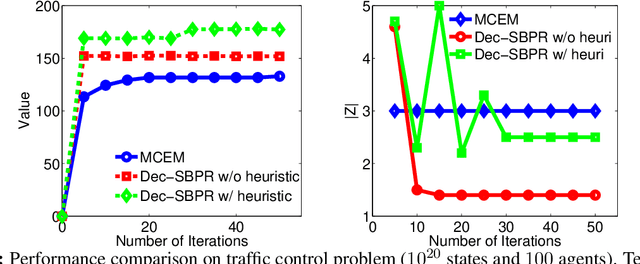

Stick-Breaking Policy Learning in Dec-POMDPs

Nov 23, 2015 · Miao Liu, Christopher Amato, Xuejun Liao, Lawrence Carin, Jonathan P. How

Expectation maximization (EM) has recently been shown to be an efficient algorithm for learning finite-state controllers (FSCs) in large decentralized POMDPs (Dec-POMDPs). However, current methods use fixed-size FSCs and often converge to maxima that are far from optimal. This paper considers a variable-size FSC to represent the local policy of each agent. These variable-size FSCs are constructed using a stick-breaking prior, leading to a new framework called *decentralized stick-breaking policy representation* (Dec-SBPR). This approach learns the controller parameters with a variational Bayesian algorithm without having to assume that the Dec-POMDP model is available. The performance of Dec-SBPR is demonstrated on several benchmark problems, showing that the algorithm scales to large problems while outperforming other state-of-the-art methods.
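As a rough illustration of the stick-breaking idea mentioned in this abstract, the sketch below draws mixture weights by repeatedly breaking off a Beta-distributed fraction of a unit-length stick. It shows only the generic textbook construction, not the Dec-SBPR algorithm or its variational inference; the function name `stick_breaking_weights` and the parameters `alpha` and `num_sticks` are hypothetical names chosen for this example.

```python
import random


def stick_breaking_weights(alpha, num_sticks, rng=None):
    """Draw weights from a truncated stick-breaking construction.

    Hypothetical helper for illustration only; it is not taken from the paper.
    Each step breaks off a Beta(1, alpha) fraction of whatever stick remains.
    Returns the list of weights and the leftover (unassigned) stick length.
    """
    rng = rng or random.Random(0)
    weights = []
    remaining = 1.0  # length of the stick not yet assigned to any weight
    for _ in range(num_sticks):
        fraction = rng.betavariate(1.0, alpha)
        weights.append(remaining * fraction)
        remaining *= 1.0 - fraction
    return weights, remaining


if __name__ == "__main__":
    weights, leftover = stick_breaking_weights(alpha=2.0, num_sticks=5)
    print(weights, leftover)
```

Smaller values of `alpha` tend to concentrate mass on the first few weights, which is one intuition for how such a prior can favor compact controllers while still allowing more nodes when the data call for them.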

Decentralized Control of Partially Observable Markov Decision Processes using Belief Space Macro-actions

Feb 20, 2015 · Shayegan Omidshafiei, Ali-akbar Agha-mohammadi, Christopher Amato, Jonathan P. How

The focus of this paper is on solving multi-robot planning problems in continuous spaces with partial observability. Decentralized partially observable Markov decision processes (Dec-POMDPs) are general models for multi-robot coordination problems, but representing and solving Dec-POMDPs is often intractable for large problems. To allow for a high-level representation that is natural for multi-robot problems and scalable to large discrete and continuous problems, this paper extends the Dec-POMDP model to the decentralized partially observable semi-Markov decision process (Dec-POSMDP). The Dec-POSMDP formulation allows asynchronous decision-making by the robots, which is crucial in multi-robot domains. We also present an algorithm for solving this Dec-POSMDP which is much more scalable than previous methods since it can incorporate closed-loop belief space macro-actions in planning. These macro-actions are automatically constructed to produce robust solutions. The proposed method's performance is evaluated on a complex multi-robot package delivery problem under uncertainty, showing that our approach can naturally represent multi-robot problems and provide high-quality solutions for large-scale problems.

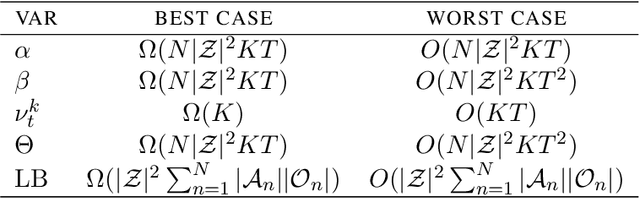

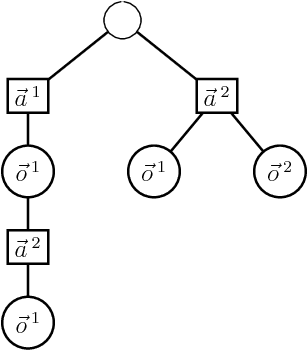

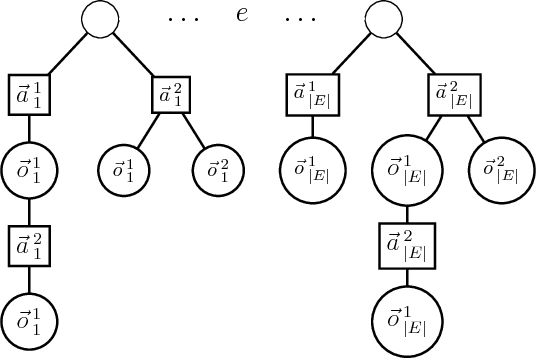

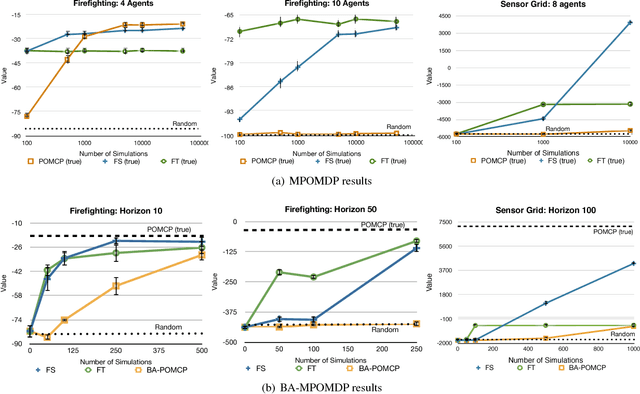

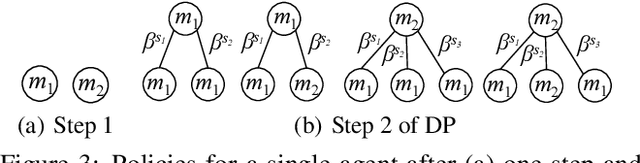

Scalable Planning and Learning for Multiagent POMDPs: Extended Version

Dec 20, 2014 · Christopher Amato, Frans A. Oliehoek

Online, sample-based planning algorithms for POMDPs have shown great promise in scaling to problems with large state spaces, but they become intractable for large action and observation spaces. This is particularly problematic in multiagent POMDPs where the action and observation space grows exponentially with the number of agents. To combat this intractability, we propose a novel scalable approach based on sample-based planning and factored value functions that exploits structure present in many multiagent settings. This approach applies not only in the planning case, but also in the Bayesian reinforcement learning setting. Experimental results show that we are able to provide high quality solutions to large multiagent planning and learning problems.
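To make the exponential growth mentioned in this abstract concrete, the small sketch below counts joint actions when every agent picks from the same individual action set. The function name and the example numbers are assumptions for illustration and do not come from the paper.

```python
from itertools import product


def joint_space_size(per_agent_size, num_agents):
    """Number of joint actions (or observations) for identical per-agent sets."""
    return per_agent_size ** num_agents


def enumerate_joint_actions(actions, num_agents):
    """Explicitly list every joint action; only feasible for tiny problems."""
    return list(product(actions, repeat=num_agents))


if __name__ == "__main__":
    # Hypothetical example: 5 individual actions per agent.
    for n in (1, 2, 4, 8):
        print(n, joint_space_size(5, n))
```

With 5 individual actions, 4 agents already give 625 joint actions and 8 agents give 390,625, which is why a flat enumeration of the joint space quickly becomes impractical.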

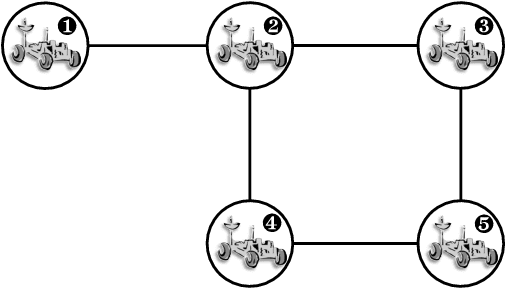

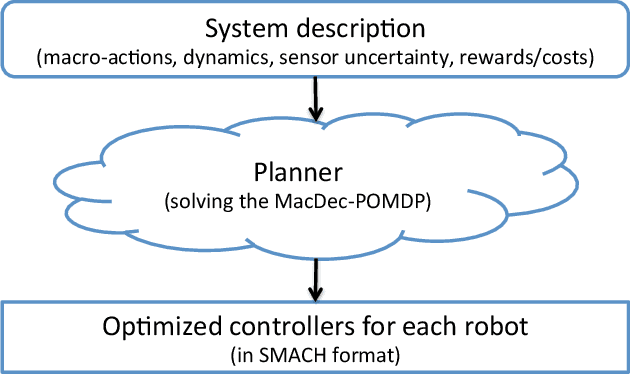

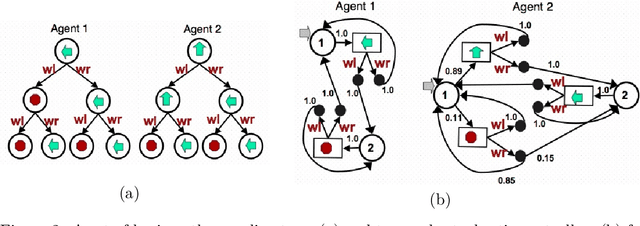

Planning for Decentralized Control of Multiple Robots Under Uncertainty

Feb 12, 2014 · Christopher Amato, George D. Konidaris, Gabriel Cruz, Christopher A. Maynor, Jonathan P. How, Leslie P. Kaelbling

We describe a probabilistic framework for synthesizing control policies for general multi-robot systems, given environment and sensor models and a cost function. Decentralized, partially observable Markov decision processes (Dec-POMDPs) are a general model of decision processes where a team of agents must cooperate to optimize some objective (specified by a shared reward or cost function) in the presence of uncertainty, but where communication limitations mean that the agents cannot share their state, so execution must proceed in a decentralized fashion. While Dec-POMDPs are typically intractable to solve for real-world problems, recent research on the use of macro-actions in Dec-POMDPs has significantly increased the size of problems that can be practically solved as Dec-POMDPs. We describe this general model, and show how, in contrast to most existing methods that are specialized to a particular problem class, it can synthesize control policies that use whatever opportunities for coordination are present in the problem, while balancing uncertainty in outcomes, sensor information, and information about other agents. We use three variations on a warehouse task to show that a single planner of this type can generate cooperative behavior using task allocation, direct communication, and signaling, as appropriate.

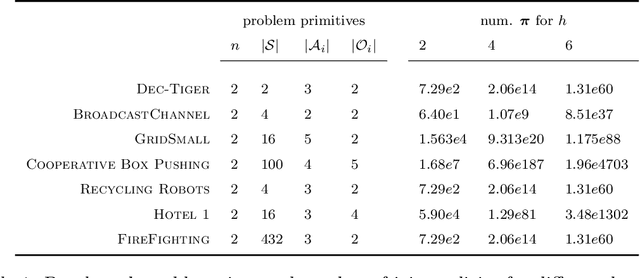

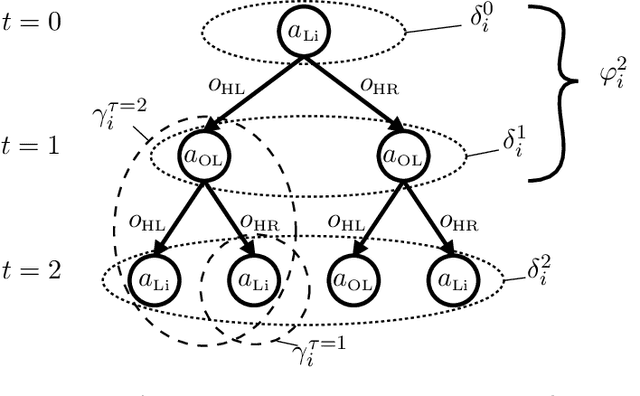

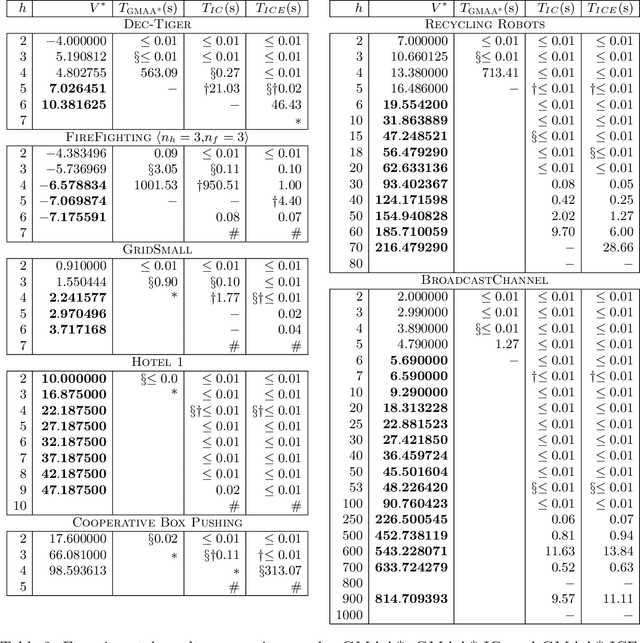

Incremental Clustering and Expansion for Faster Optimal Planning in Dec-POMDPs

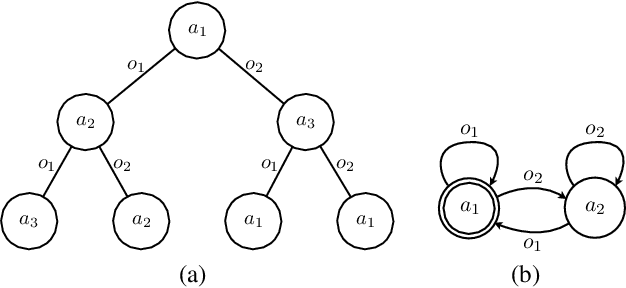

Feb 04, 2014 · Frans Adriaan Oliehoek, Matthijs T. J. Spaan, Christopher Amato, Shimon Whiteson

This article presents the state of the art in optimal solution methods for decentralized partially observable Markov decision processes (Dec-POMDPs), which are general models for collaborative multiagent planning under uncertainty. Building off the generalized multiagent A* (GMAA*) algorithm, which reduces the problem to a tree of one-shot collaborative Bayesian games (CBGs), we describe several advances that greatly expand the range of Dec-POMDPs that can be solved optimally. First, we introduce lossless incremental clustering of the CBGs solved by GMAA*, which achieves exponential speedups without sacrificing optimality. Second, we introduce incremental expansion of nodes in the GMAA* search tree, which avoids the need to expand all children, the number of which is in the worst case doubly exponential in the node's depth. This is particularly beneficial when little clustering is possible. In addition, we introduce new hybrid heuristic representations that are more compact and thereby enable the solution of larger Dec-POMDPs. We provide theoretical guarantees that, when a suitable heuristic is used, both incremental clustering and incremental expansion yield algorithms that are both complete and search equivalent. Finally, we present extensive empirical results demonstrating that GMAA*-ICE, an algorithm that synthesizes these advances, can optimally solve Dec-POMDPs of unprecedented size.

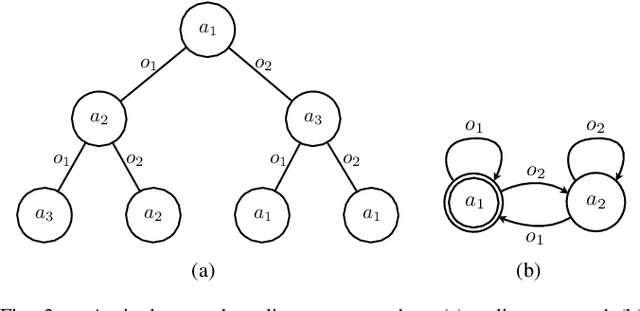

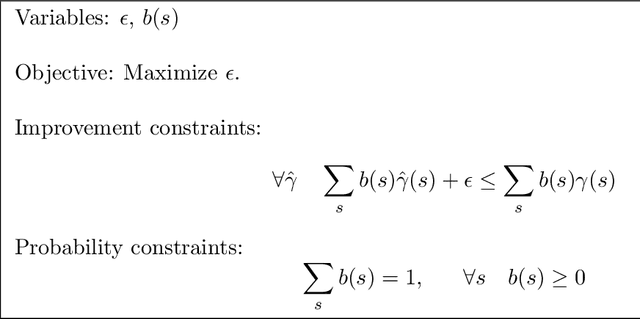

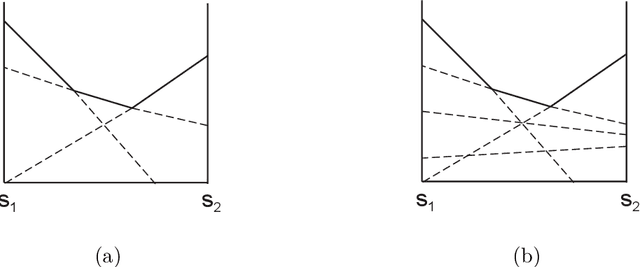

Policy Iteration for Decentralized Control of Markov Decision Processes

Jan 15, 2014 · Daniel S. Bernstein, Christopher Amato, Eric A. Hansen, Shlomo Zilberstein

Coordination of distributed agents is required for problems arising in many areas, including multi-robot systems, networking and e-commerce. As a formal framework for such problems, we use the decentralized partially observable Markov decision process (DEC-POMDP). Though much work has been done on optimal dynamic programming algorithms for the single-agent version of the problem, optimal algorithms for the multiagent case have been elusive. The main contribution of this paper is an optimal policy iteration algorithm for solving DEC-POMDPs. The algorithm uses stochastic finite-state controllers to represent policies. The solution can include a correlation device, which allows agents to correlate their actions without communicating. This approach alternates between expanding the controller and performing value-preserving transformations, which modify the controller without sacrificing value. We present two efficient value-preserving transformations: one can reduce the size of the controller and the other can improve its value while keeping the size fixed. Empirical results demonstrate the usefulness of value-preserving transformations in increasing value while keeping controller size to a minimum. To broaden the applicability of the approach, we also present a heuristic version of the policy iteration algorithm, which sacrifices convergence to optimality. This algorithm further reduces the size of the controllers at each step by assuming that probability distributions over the other agents' actions are known. While this assumption may not hold in general, it helps produce higher quality solutions in our test problems.
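For readers unfamiliar with stochastic finite-state controllers, the following minimal sketch shows how a single agent might execute one: the current node determines a distribution over actions, and the received observation determines a distribution over successor nodes. It is a simplified illustration under stated assumptions, not the policy iteration algorithm from the paper; the class name `StochasticFSC`, its fields, and the choice to condition node transitions only on the node and observation (rather than also on the action taken) are assumptions made here for brevity.

```python
import random


class StochasticFSC:
    """Toy stochastic finite-state controller for one agent (illustrative only)."""

    def __init__(self, action_probs, transition_probs, start_node=0, seed=0):
        # action_probs: node -> {action: probability}
        # transition_probs: (node, observation) -> {next_node: probability}
        self.action_probs = action_probs
        self.transition_probs = transition_probs
        self.node = start_node
        self.rng = random.Random(seed)

    def _sample(self, dist):
        # Draw one outcome from a {outcome: probability} dictionary.
        outcomes = list(dist)
        weights = [dist[o] for o in outcomes]
        return self.rng.choices(outcomes, weights=weights, k=1)[0]

    def act(self):
        # Choose an action using only the current controller node.
        return self._sample(self.action_probs[self.node])

    def observe(self, observation):
        # Move to a successor node based on the local observation.
        self.node = self._sample(self.transition_probs[(self.node, observation)])


if __name__ == "__main__":
    # Hypothetical two-node controller with made-up actions and observations.
    fsc = StochasticFSC(
        action_probs={0: {"listen": 0.9, "open": 0.1}, 1: {"open": 1.0}},
        transition_probs={
            (0, "quiet"): {1: 0.8, 0: 0.2},
            (0, "noise"): {0: 1.0},
            (1, "quiet"): {0: 1.0},
            (1, "noise"): {0: 1.0},
        },
    )
    for obs in ["noise", "quiet", "quiet"]:
        print(fsc.node, fsc.act())
        fsc.observe(obs)
```

Because each agent carries only its own node and reacts to its own observations, execution is decentralized: no agent needs access to the global state or to its teammates' observations at run time.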
