Bo Yue

Toward Humanoid Brain-Body Co-design: Joint Optimization of Control and Morphology for Fall Recovery

Oct 25, 2025

Abstract:Humanoid robots represent a central frontier in embodied intelligence, as their anthropomorphic form enables natural deployment in human workspaces. Brain-body co-design for humanoids presents a promising approach to realizing this potential by jointly optimizing control policies and physical morphology. Within this context, fall recovery emerges as a critical capability. It not only enhances safety and resilience but also integrates naturally with locomotion systems, thereby advancing the autonomy of humanoids. In this paper, we propose RoboCraft, a scalable humanoid co-design framework for fall recovery that iteratively improves performance through coupled updates of control policy and morphology. A shared policy pretrained across multiple designs is progressively finetuned on high-performing morphologies, enabling efficient adaptation without retraining from scratch. Concurrently, morphology search is guided by human-inspired priors and optimization algorithms, supported by a priority buffer that balances reevaluation of promising candidates with the exploration of novel designs. Experiments show that RoboCraft achieves an average performance gain of 44.55% on seven public humanoid robots, with morphology optimization driving at least 40% of the improvement when co-designing four humanoid robots, underscoring the critical role of humanoid co-design.

Real-Time Verification of Embodied Reasoning for Generative Skill Acquisition

May 19, 2025

Abstract:Generative skill acquisition enables embodied agents to actively learn a scalable and evolving repertoire of control skills, crucial for the advancement of large decision models. While prior approaches often rely on supervision signals from generalist agents (e.g., LLMs), their effectiveness in complex 3D environments remains unclear; exhaustive evaluation incurs substantial computational costs, significantly hindering the efficiency of skill learning. Inspired by recent successes in verification models for mathematical reasoning, we propose VERGSA (Verifying Embodied Reasoning in Generative Skill Acquisition), a framework that systematically integrates real-time verification principles into embodied skill learning. VERGSA establishes 1) a seamless extension from verification of mathematical reasoning into embodied learning by dynamically incorporating contextually relevant tasks into prompts and defining success metrics for both subtasks and overall tasks, and 2) an automated, scalable reward labeling scheme that synthesizes dense reward signals by iteratively finalizing the contribution of scene configuration and subtask learning to overall skill acquisition. To the best of our knowledge, this approach constitutes the first comprehensive training dataset for verification-driven generative skill acquisition, eliminating arduous manual reward engineering. Experiments validate the efficacy of our approach: 1) the exemplar task pool improves the average task success rate by 21%, 2) our verification model boosts success rates by 24% for novel tasks and 36% for encountered tasks, and 3) it outperforms LLM-as-a-Judge baselines in verification quality.

Provably Efficient Exploration in Inverse Constrained Reinforcement Learning

Sep 24, 2024

Abstract:To obtain the optimal constraints in complex environments, Inverse Constrained Reinforcement Learning (ICRL) seeks to recover these constraints from expert demonstrations in a data-driven manner. Existing ICRL algorithms collect training samples from an interactive environment. However, the efficacy and efficiency of these sampling strategies remain unknown. To bridge this gap, we introduce a strategic exploration framework with provable efficiency. Specifically, we define a feasible constraint set for ICRL problems and investigate how expert policy and environmental dynamics influence the optimality of constraints. Motivated by our findings, we propose two exploratory algorithms to achieve efficient constraint inference via 1) dynamically reducing the bounded aggregate error of cost estimation and 2) strategically constraining the exploration policy. Both algorithms are theoretically grounded with tractable sample complexity. We empirically demonstrate the performance of our algorithms under various environments.

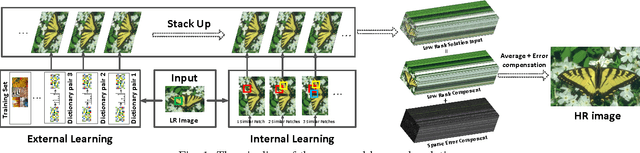

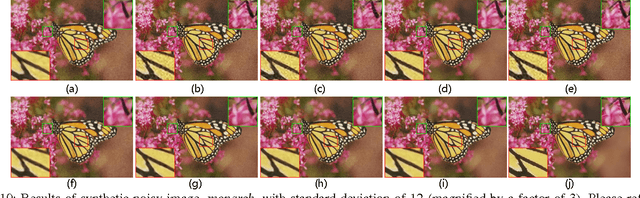

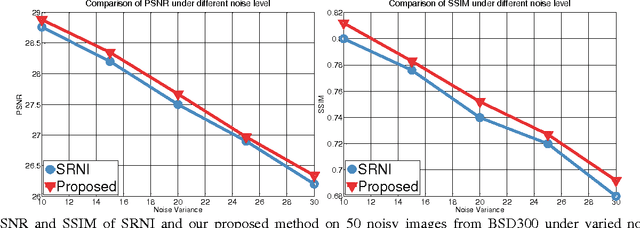

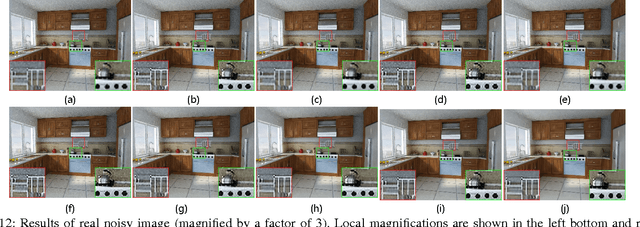

How Does the Low-Rank Matrix Decomposition Help Internal and External Learnings for Super-Resolution

Jun 30, 2017

Abstract:Wisely utilizing internal and external learning methods is a new challenge in the super-resolution problem. To address this issue, we analyze the attributes of the two methodologies and make two observations about their recovered details: 1) they are complementary in both feature space and image plane, and 2) they are sparsely distributed in the spatial domain. These observations inspire us to propose a low-rank solution that effectively integrates the two learning methods and achieves a superior result. To fit this solution, the internal learning method and the external learning method are tailored to produce multiple preliminary results. Our theoretical analysis and experiments show that the proposed low-rank solution does not require massive inputs to guarantee performance, thereby simplifying the design of the two learning methods for the solution. Extensive experiments show that the proposed solution improves on each single learning method in both qualitative and quantitative assessments. Surprisingly, it shows even stronger capability on noisy images and outperforms state-of-the-art methods.
