Arber Zela

NAS-Bench-Suite: NAS Evaluation is (Now) Surprisingly Easy

Feb 11, 2022

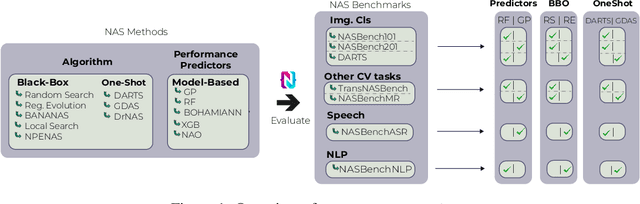

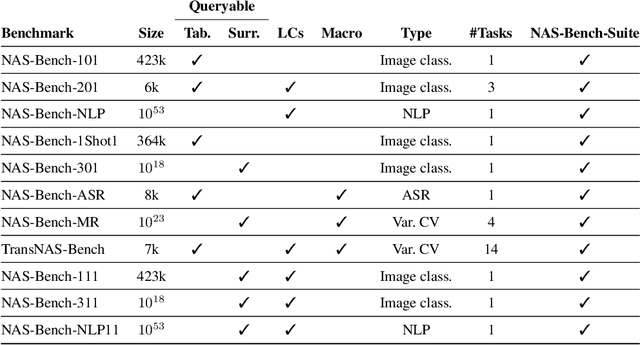

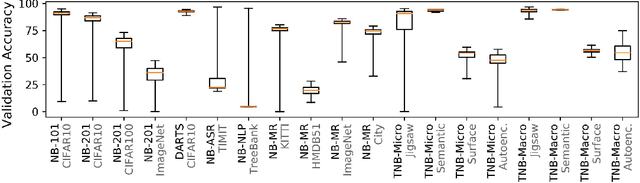

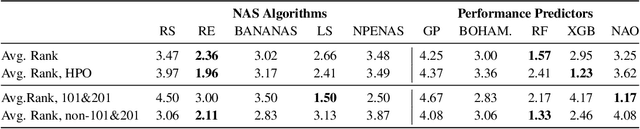

Abstract: The release of tabular benchmarks, such as NAS-Bench-101 and NAS-Bench-201, has significantly lowered the computational overhead for conducting scientific research in neural architecture search (NAS). Although they have been widely adopted and used to tune real-world NAS algorithms, these benchmarks are limited to small search spaces and focus solely on image classification. Recently, several new NAS benchmarks have been introduced that cover significantly larger search spaces over a wide range of tasks, including object detection, speech recognition, and natural language processing. However, substantial differences among these NAS benchmarks have so far prevented their widespread adoption, limiting researchers to using just a few benchmarks. In this work, we present an in-depth analysis of popular NAS algorithms and performance prediction methods across 25 different combinations of search spaces and datasets, finding that many conclusions drawn from a few NAS benchmarks do not generalize to other benchmarks. To help remedy this problem, we introduce NAS-Bench-Suite, a comprehensive and extensible collection of NAS benchmarks, accessible through a unified interface, created with the aim to facilitate reproducible, generalizable, and rapid NAS research. Our code is available at https://github.com/automl/naslib.

Multi-headed Neural Ensemble Search

Jul 09, 2021

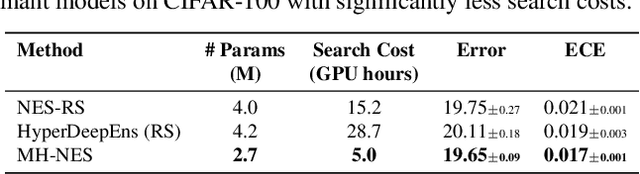

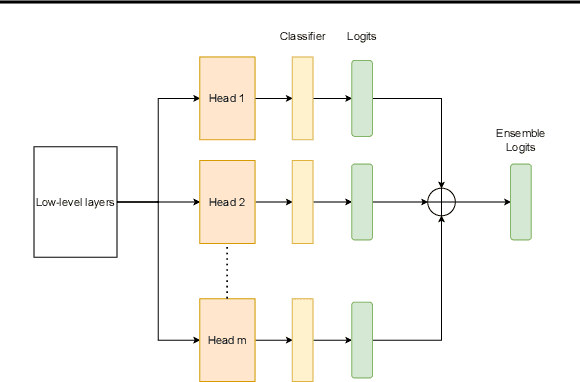

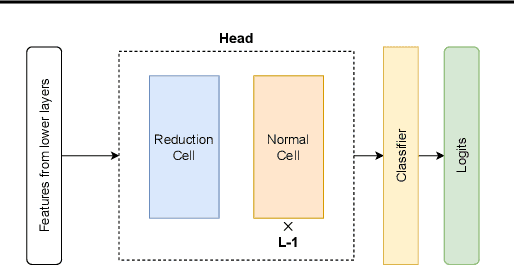

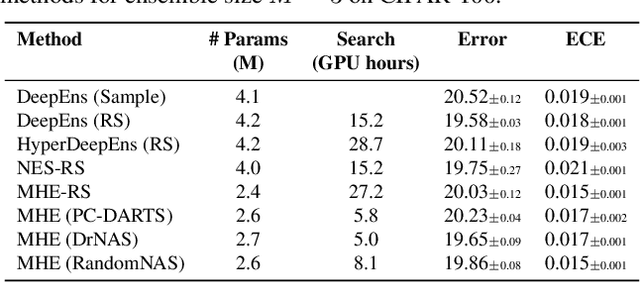

Abstract: Ensembles of CNN models trained with different seeds (also known as Deep Ensembles) are known to achieve superior performance over a single copy of the CNN. Neural Ensemble Search (NES) can further boost performance by adding architectural diversity. However, the scope of NES remains prohibitive under limited computational resources. In this work, we extend NES to multi-headed ensembles, which consist of a shared backbone attached to multiple prediction heads. Unlike Deep Ensembles, these multi-headed ensembles can be trained end to end, which enables us to leverage one-shot NAS methods to optimize an ensemble objective. With extensive empirical evaluations, we demonstrate that multi-headed ensemble search finds robust ensembles 3 times faster, while having comparable performance to other ensemble search methods, in both predictive performance and uncertainty calibration.

Bag of Tricks for Neural Architecture Search

Jul 08, 2021

Abstract: While neural architecture search methods have been successful in previous years and led to new state-of-the-art performance on various problems, they have also been criticized for being unstable, being highly sensitive with respect to their hyperparameters, and often not performing better than random search. To shed some light on this issue, we discuss some practical considerations that help improve the stability, efficiency and overall performance.

How Powerful are Performance Predictors in Neural Architecture Search?

Apr 02, 2021

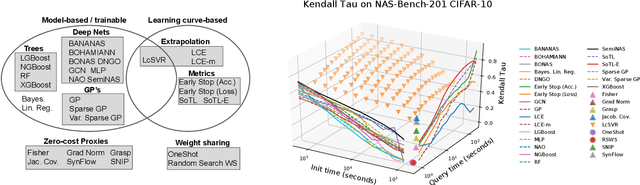

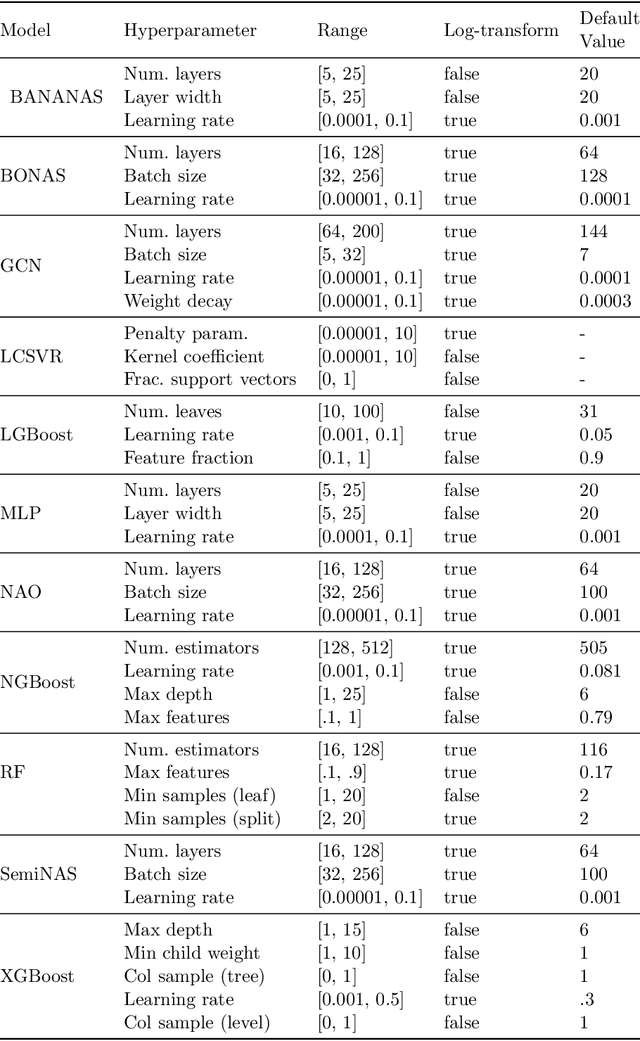

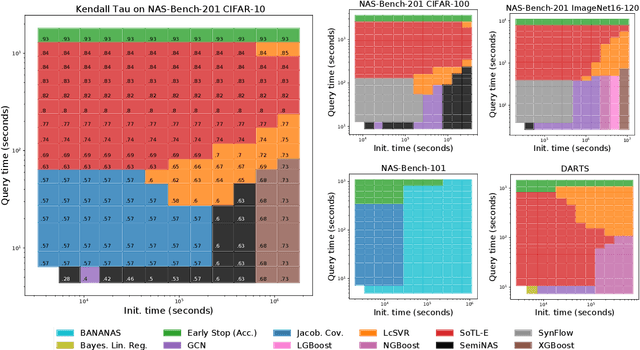

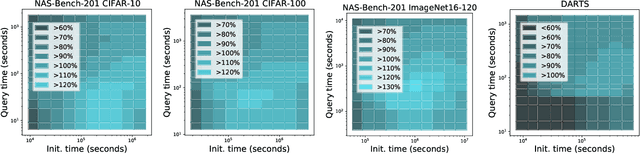

Abstract: Early methods in the rapidly developing field of neural architecture search (NAS) required fully training thousands of neural networks. To reduce this extreme computational cost, dozens of techniques have since been proposed to predict the final performance of neural architectures. Despite the success of such performance prediction methods, it is not well understood how different families of techniques compare to one another, due to the lack of an agreed-upon evaluation metric and optimization for different constraints on the initialization time and query time. In this work, we give the first large-scale study of performance predictors by analyzing 31 techniques ranging from learning curve extrapolation, to weight-sharing, to supervised learning, to "zero-cost" proxies. We test a number of correlation- and rank-based performance measures in a variety of settings, as well as the ability of each technique to speed up predictor-based NAS frameworks. Our results act as recommendations for the best predictors to use in different settings, and we show that certain families of predictors can be combined to achieve even better predictive power, opening up promising research directions. Our code, featuring a library of 31 performance predictors, is available at https://github.com/automl/naslib.
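As an illustration of what a rank-based performance measure can look like, the sketch below computes Kendall's tau between predicted and true accuracies. The abstract does not name the specific measures used, so Kendall's tau is only one common choice; the function name `kendall_tau` and the toy accuracy values are assumptions made for this example, not taken from the paper or from NASLib.

```python
from itertools import combinations


def kendall_tau(predicted, actual):
    """Kendall's tau-a: (concordant - discordant) / total pairs.

    Tied pairs count as neither concordant nor discordant.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    n = len(predicted)
    if n < 2:
        raise ValueError("need at least two architectures to rank")

    concordant = 0
    discordant = 0
    for i, j in combinations(range(n), 2):
        # The sign of the product tells us whether both lists order the pair the same way.
        sign = (predicted[i] - predicted[j]) * (actual[i] - actual[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1

    total_pairs = n * (n - 1) // 2
    return (concordant - discordant) / total_pairs


# Hypothetical validation accuracies for four architectures (made-up numbers).
true_acc = [0.91, 0.93, 0.89, 0.94]
pred_acc = [0.90, 0.92, 0.91, 0.95]

tau = kendall_tau(pred_acc, true_acc)
print(f"Kendall tau: {tau:.3f}")
```

A value near 1 means the predictor ranks architectures almost exactly as the true accuracies do, which matters more for search than matching the accuracy values themselves.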

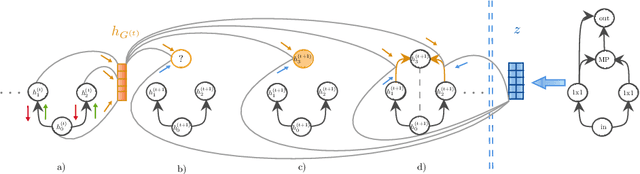

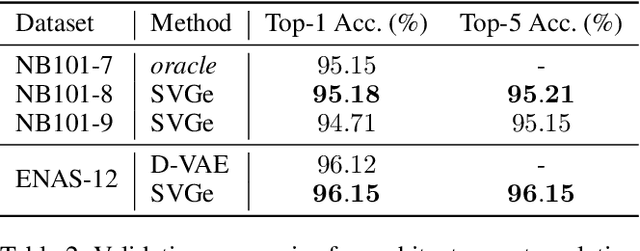

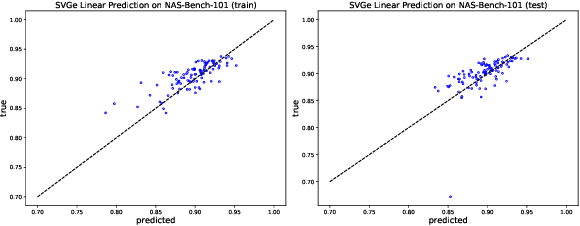

Smooth Variational Graph Embeddings for Efficient Neural Architecture Search

Oct 09, 2020

Abstract: In this paper, we propose an approach to neural architecture search (NAS) based on graph embeddings. NAS has been addressed previously using discrete, sampling-based methods, which are computationally expensive, as well as differentiable approaches, which come at lower cost but enforce stronger constraints on the search space. The proposed approach leverages advantages from both sides by building a smooth variational neural architecture embedding space in which we evaluate a structural subset of architectures at training time using the predicted performance, while allowing extrapolation from this subspace at inference time. We evaluate the proposed approach in the context of two common search spaces, the graph structure defined by the ENAS approach and the NAS-Bench-101 search space, and improve over the state of the art in both.

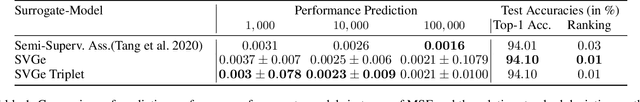

NAS-Bench-301 and the Case for Surrogate Benchmarks for Neural Architecture Search

Aug 22, 2020

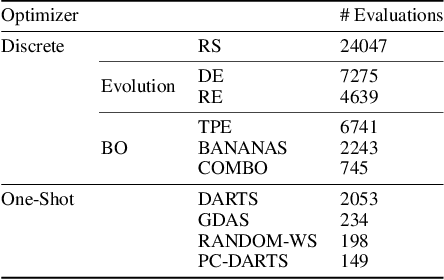

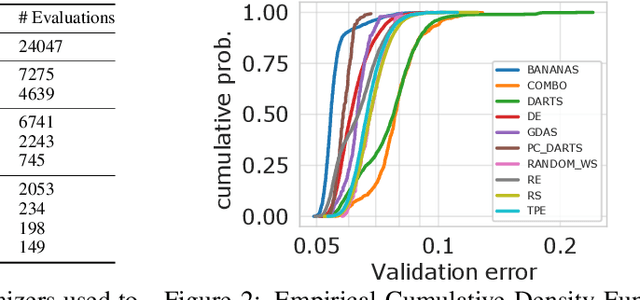

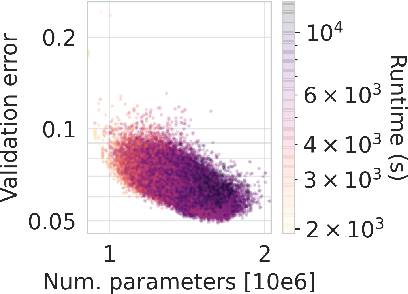

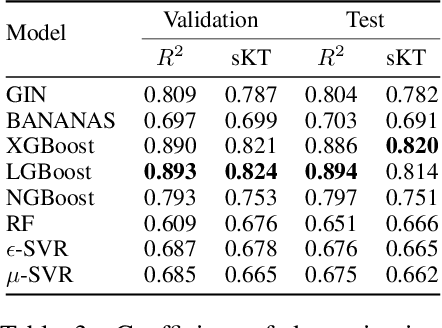

Abstract: Neural Architecture Search (NAS) is a logical next step in the automatic learning of representations, but the development of NAS methods is slowed by high computational demands. As a remedy, several tabular NAS benchmarks were proposed to simulate runs of NAS methods in seconds. However, all existing NAS benchmarks are limited to extremely small architectural spaces since they rely on exhaustive evaluations of the space. This leads to unrealistic results, such as a strong performance of local search and random search, that do not transfer to larger search spaces. To overcome this fundamental limitation, we propose NAS-Bench-301, the first model-based surrogate NAS benchmark, using a search space containing $10^{18}$ architectures, orders of magnitude larger than any previous NAS benchmark. We first motivate the benefits of using such a surrogate benchmark compared to a tabular one by smoothing out the noise stemming from the stochasticity of single SGD runs in a tabular benchmark. Then, we analyze our new dataset consisting of architecture evaluations and comprehensively evaluate various regression models as surrogates to demonstrate their capability to model the architecture space, also using deep ensembles to model uncertainty. Finally, we benchmark a wide range of NAS algorithms using NAS-Bench-301 allowing us to obtain comparable results to the true benchmark at a fraction of the cost.

Neural Ensemble Search for Performant and Calibrated Predictions

Jun 15, 2020

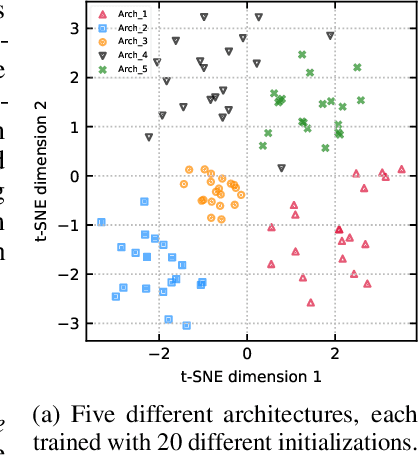

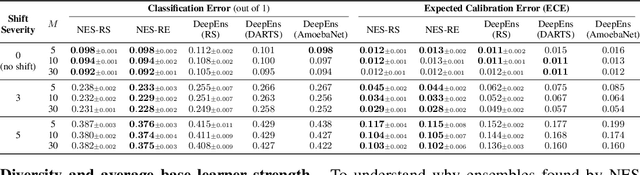

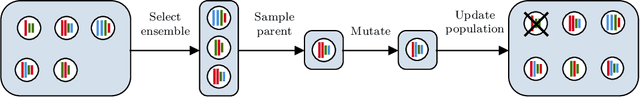

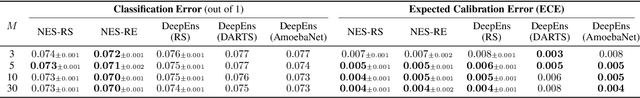

Abstract: Ensembles of neural networks achieve superior performance compared to stand-alone networks not only in terms of accuracy on in-distribution data but also on data with distributional shift alongside improved uncertainty calibration. Diversity among networks in an ensemble is believed to be key for building strong ensembles, but typical approaches only ensemble different weight vectors of a fixed architecture. Instead, we investigate neural architecture search (NAS) for explicitly constructing ensembles to exploit diversity among networks of varying architectures and to achieve robustness against distributional shift. By directly optimizing ensemble performance, our methods implicitly encourage diversity among networks, without the need to explicitly define diversity. We find that the resulting ensembles are more diverse compared to ensembles composed of a fixed architecture and are therefore also more powerful. We show significant improvements in ensemble performance on image classification tasks both for in-distribution data and during distributional shift with better uncertainty calibration.
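To make "ensemble performance" concrete, the following minimal sketch averages the class probabilities of several ensemble members for one input and compares the ensemble's negative log-likelihood with the members' average. This is the standard way of combining classifier outputs, not a reproduction of the paper's search procedure; the helper names and the probability values are hypothetical.

```python
import math


def ensemble_probs(member_probs):
    """Average per-class probabilities over ensemble members for a single input."""
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    return [
        sum(member[c] for member in member_probs) / n_members
        for c in range(n_classes)
    ]


def nll(probs, label):
    """Negative log-likelihood of the true label under a probability vector."""
    return -math.log(probs[label])


# Hypothetical softmax outputs of three networks for one image (true class is 0).
members = [
    [0.7, 0.2, 0.1],
    [0.5, 0.4, 0.1],
    [0.6, 0.1, 0.3],
]
true_label = 0

avg = ensemble_probs(members)
ensemble_nll = nll(avg, true_label)
mean_member_nll = sum(nll(m, true_label) for m in members) / len(members)

print("ensemble probabilities:", avg)
print("ensemble NLL:", ensemble_nll)
print("mean member NLL:", mean_member_nll)
```

Because the negative logarithm is convex, the NLL of the averaged prediction is never worse than the average NLL of the individual members, and the gap grows when the members disagree, which is one way to see why diversity helps.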

NAS-Bench-1Shot1: Benchmarking and Dissecting One-shot Neural Architecture Search

Jan 28, 2020

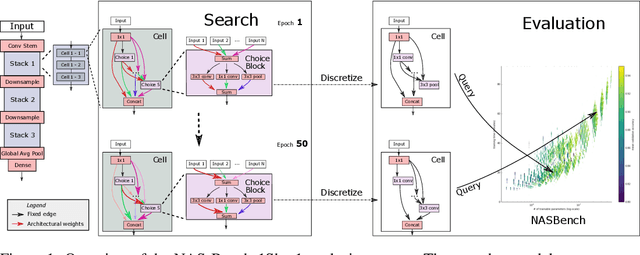

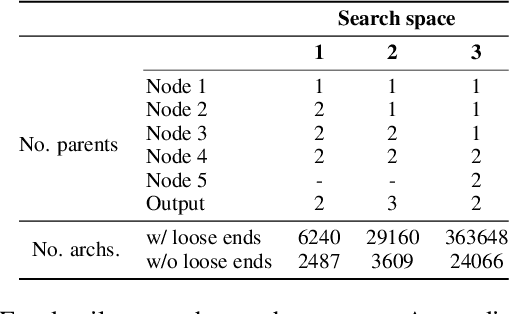

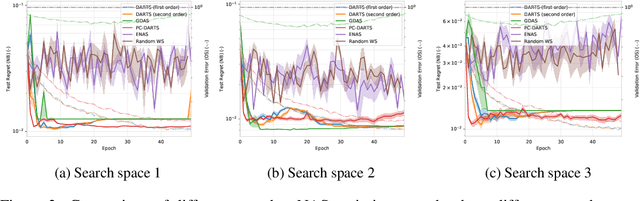

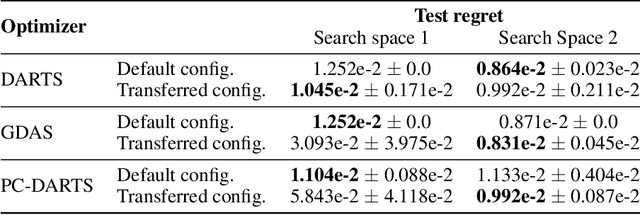

Abstract: One-shot neural architecture search (NAS) has played a crucial role in making NAS methods computationally feasible in practice. Nevertheless, there is still a lack of understanding on how these weight-sharing algorithms exactly work due to the many factors controlling the dynamics of the process. In order to allow a scientific study of these components, we introduce a general framework for one-shot NAS that can be instantiated to many recently-introduced variants and introduce a general benchmarking framework that draws on the recent large-scale tabular benchmark NAS-Bench-101 for cheap anytime evaluations of one-shot NAS methods. To showcase the framework, we compare several state-of-the-art one-shot NAS methods, examine how sensitive they are to their hyperparameters and how they can be improved by tuning their hyperparameters, and compare their performance to that of blackbox optimizers for NAS-Bench-101.

Understanding and Robustifying Differentiable Architecture Search

Sep 20, 2019

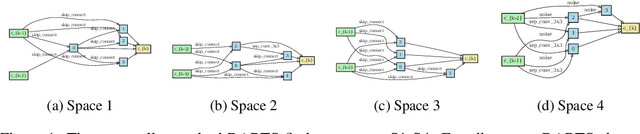

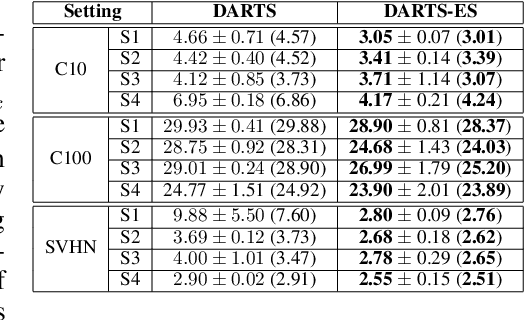

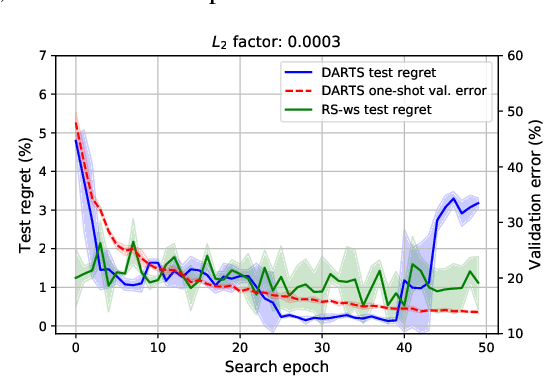

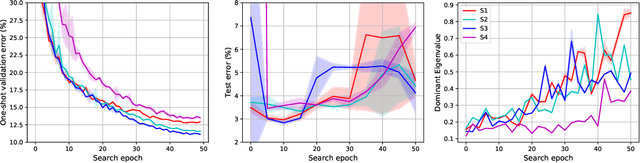

Abstract: Differentiable Architecture Search (DARTS) has attracted a lot of attention due to its simplicity and small search costs achieved by a continuous relaxation and an approximation of the resulting bi-level optimization problem. However, DARTS does not work robustly for new problems: we identify a wide range of search spaces for which DARTS yields degenerate architectures with very poor test performance. We study this failure mode and show that, while DARTS successfully minimizes validation loss, the found solutions generalize poorly when they coincide with high validation loss curvature in the space of architectures. We show that by adding one of various types of regularization we can robustify DARTS to find solutions with smaller Hessian spectrum and with better generalization properties. Based on these observations we propose several simple variations of DARTS that perform substantially more robustly in practice. Our observations are robust across five search spaces on three image classification tasks and also hold for the very different domains of disparity estimation (a dense regression task) and language modelling. We provide our implementation and scripts to facilitate reproducibility.
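The continuous relaxation mentioned in the abstract can be sketched as follows: each edge computes a softmax-weighted mixture of candidate operations, and after search the edge is discretized to the operation with the largest architecture parameter. This is a toy scalar illustration of the general DARTS idea, not the authors' implementation; the operation set, the names `mixed_op` and `discretize`, and all numbers are assumptions for the example.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x, alphas, ops):
    """Continuous relaxation: weight every candidate op by softmax(alpha)."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, ops))


def discretize(alphas, names):
    """After search, keep only the op with the largest architecture parameter."""
    best = max(range(len(alphas)), key=lambda i: alphas[i])
    return names[best]


# Toy scalar stand-ins for candidate operations on one edge (hypothetical).
op_names = ["identity", "zero", "double"]
ops = [lambda x: x, lambda x: 0.0, lambda x: 2.0 * x]

# With equal architecture parameters every op gets weight 1/3.
uniform_alphas = [0.0, 0.0, 0.0]
y = mixed_op(3.0, uniform_alphas, ops)

# Example parameters after a hypothetical search run.
searched_alphas = [0.2, -1.0, 1.5]
chosen = discretize(searched_alphas, op_names)

print("mixed output:", y)
print("chosen op:", chosen)
```

Because the mixture is differentiable in the architecture parameters, they can be updated by gradient descent on the validation loss alongside the network weights, which is what makes the search cheap and, as the abstract argues, also what can go wrong when the found solution sits in a sharp region of the validation loss.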

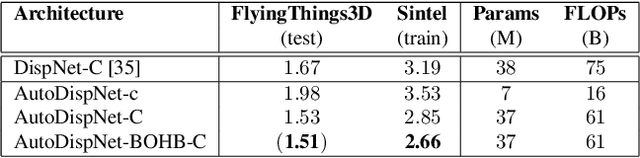

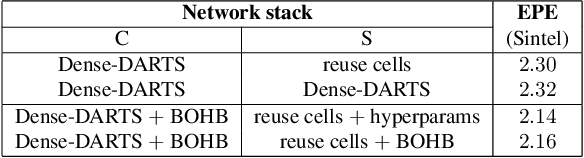

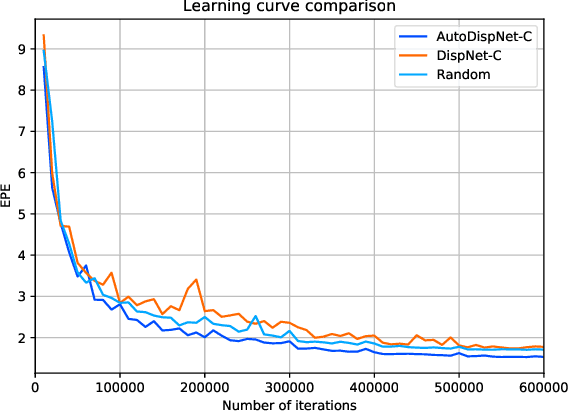

AutoDispNet: Improving Disparity Estimation with AutoML

May 17, 2019

Abstract: Much research work in computer vision is being spent on optimizing existing network architectures to obtain a few more percentage points on benchmarks. Recent AutoML approaches promise to relieve us from this effort. However, they are mainly designed for comparatively small-scale classification tasks. In this work, we show how to use and extend existing AutoML techniques to efficiently optimize large-scale U-Net-like encoder-decoder architectures. In particular, we leverage gradient-based neural architecture search and Bayesian optimization for hyperparameter search. The resulting optimization does not require a large company-scale compute cluster. We show results on disparity estimation that clearly outperform the manually optimized baseline and reach state-of-the-art performance.
