Anand Rangarajan

Domain-guided Machine Learning for Remotely Sensed In-Season Crop Growth Estimation

Jun 24, 2021

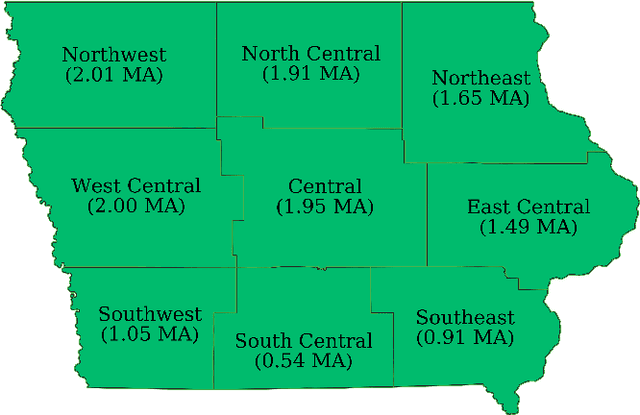

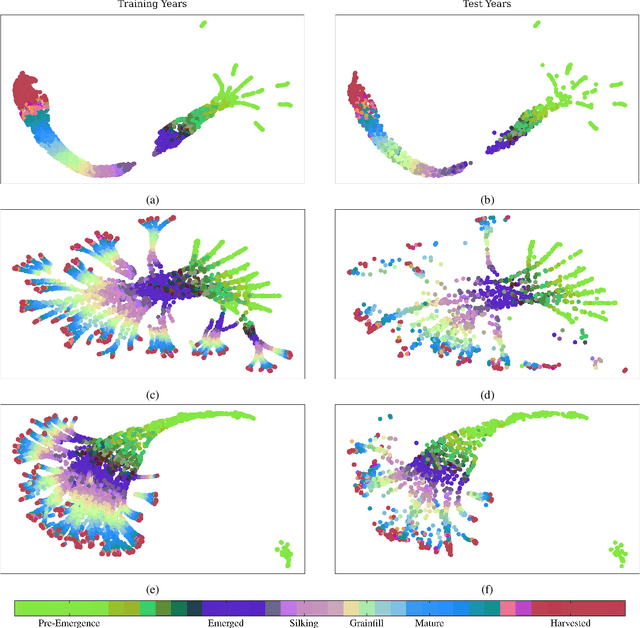

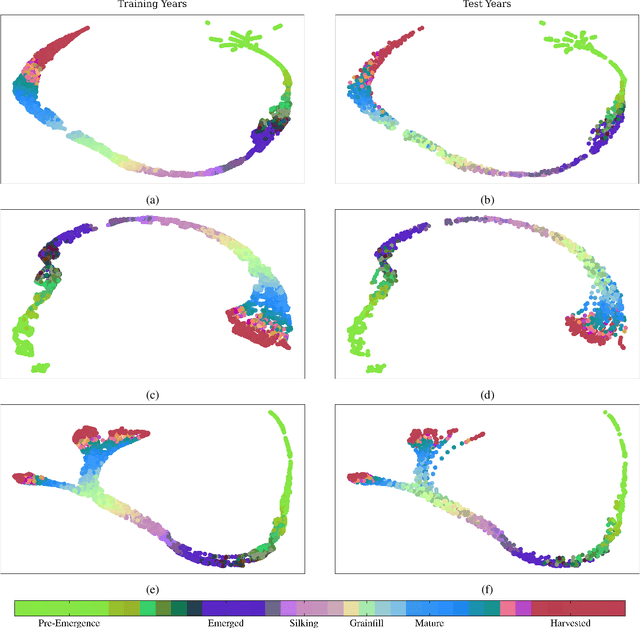

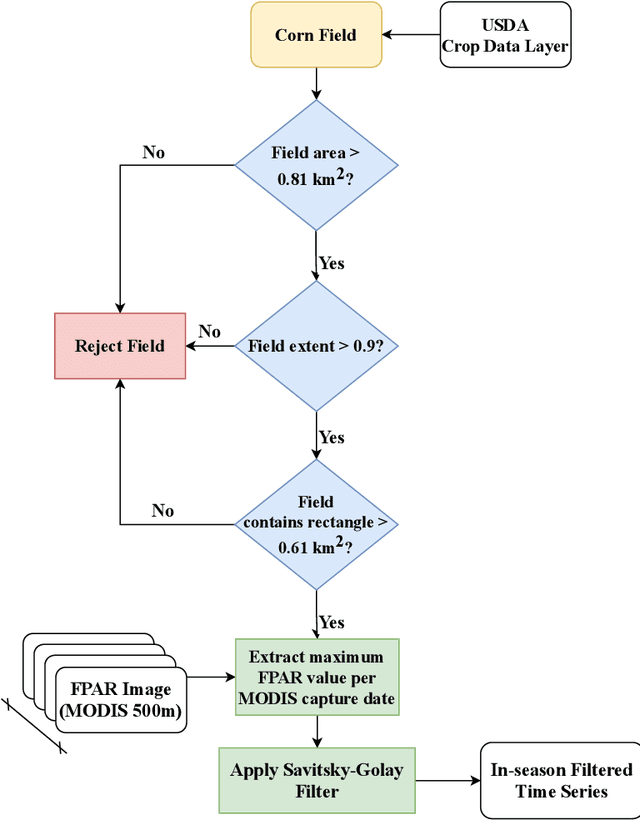

Abstract: Advanced machine learning techniques have been used in remote sensing (RS) applications such as crop mapping and yield prediction, but remain under-utilized for tracking crop progress. In this study, we demonstrate the use of agronomic knowledge of crop growth drivers in a Long Short-Term Memory-based, Domain-guided neural network (DgNN) for in-season crop progress estimation. The DgNN uses a branched structure and attention to separate independent crop growth drivers and capture their varying importance throughout the growing season. The DgNN is implemented for corn, using RS data in Iowa for the period 2003-2019, with USDA crop progress reports used as ground truth. State-wide DgNN performance shows significant improvement over sequential and dense-only NN structures, and a widely-used Hidden Markov Model method. The DgNN had a 3.5% higher Nash-Sutcliffe efficiency over all growth stages and 33% more weeks with highest cosine similarity than the other NNs during test years. The DgNN and Sequential NN were more robust during periods of abnormal crop progress, though estimating the Silking-Grainfill transition was difficult for all methods. Finally, Uniform Manifold Approximation and Projection visualizations of layer activations showed how LSTM-based NNs separate crop growth time-series differently from a dense-only structure. Results from this study exhibit both the viability of NNs in crop growth stage estimation (CGSE) and the benefits of using domain knowledge. The DgNN methodology presented here can be extended to provide near-real time CGSE of other crops.
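The two evaluation metrics named in this abstract have standard definitions, sketched below for illustration. The function names and the choice of NumPy are assumptions made here; the abstract does not say how the metrics were implemented or exactly which vectors were compared, so this is only a minimal sketch of the textbook formulas.

```python
import numpy as np


def nash_sutcliffe(observed, estimated):
    """Nash-Sutcliffe efficiency: 1 - SSE / (variance of observations about their mean).

    1.0 is a perfect match; 0.0 means no better than predicting the observed mean.
    """
    observed = np.asarray(observed, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    residual = np.sum((observed - estimated) ** 2)
    spread = np.sum((observed - observed.mean()) ** 2)
    return 1.0 - residual / spread


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (hypothetical use: comparing
    estimated and reported per-stage crop fractions for one week)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))
```

Both functions assume non-degenerate inputs: the observations must not all be equal for `nash_sutcliffe`, and neither vector may be all zeros for `cosine_similarity`.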

Efficient Iterative Amortized Inference for Learning Symmetric and Disentangled Multi-Object Representations

Jun 07, 2021

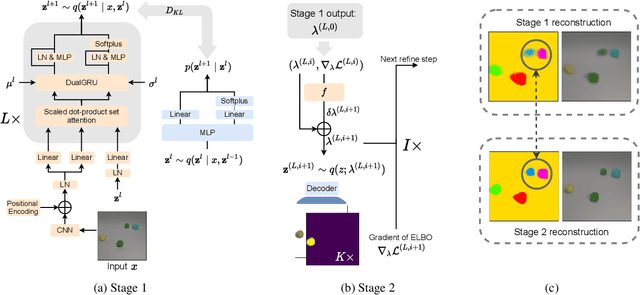

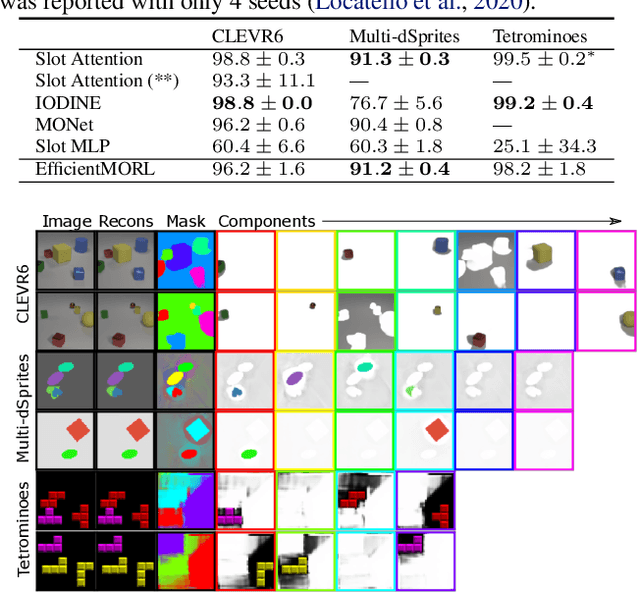

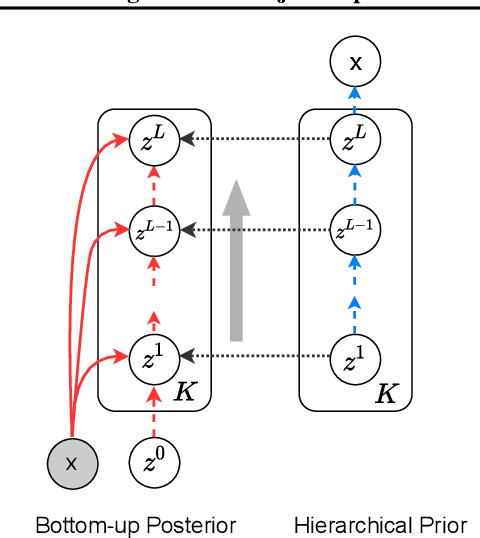

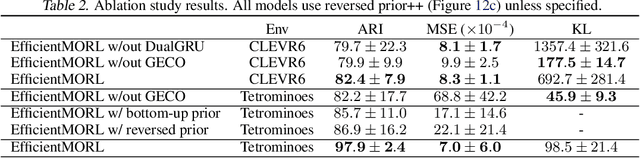

Abstract:Unsupervised multi-object representation learning depends on inductive biases to guide the discovery of object-centric representations that generalize. However, we observe that methods for learning these representations are either impractical due to long training times and large memory consumption or forego key inductive biases. In this work, we introduce EfficientMORL, an efficient framework for the unsupervised learning of object-centric representations. We show that optimization challenges caused by requiring both symmetry and disentanglement can in fact be addressed by high-cost iterative amortized inference by designing the framework to minimize its dependence on it. We take a two-stage approach to inference: first, a hierarchical variational autoencoder extracts symmetric and disentangled representations through bottom-up inference, and second, a lightweight network refines the representations with top-down feedback. The number of refinement steps taken during training is reduced following a curriculum, so that at test time with zero steps the model achieves 99.1% of the refined decomposition performance. We demonstrate strong object decomposition and disentanglement on the standard multi-object benchmark while achieving nearly an order of magnitude faster training and test time inference over the previous state-of-the-art model.

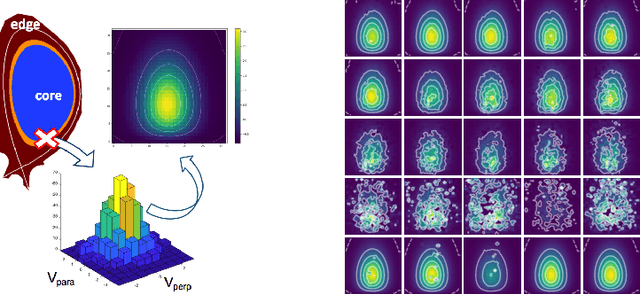

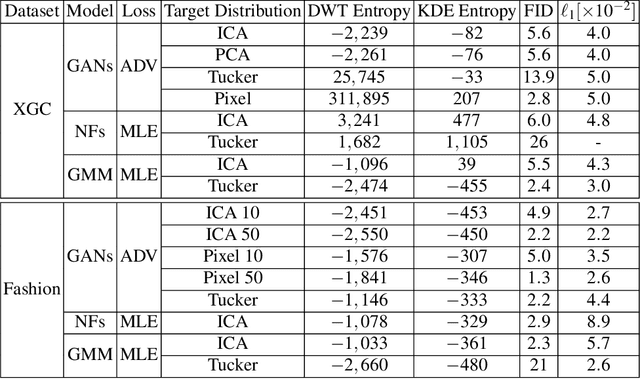

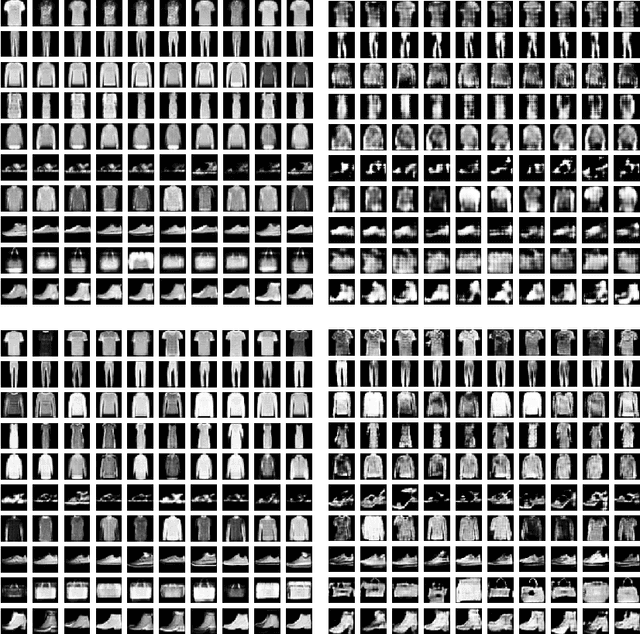

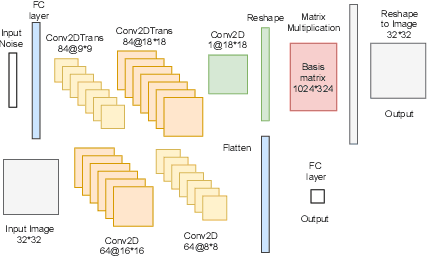

Hybrid Generative Models for Two-Dimensional Datasets

Jun 01, 2021

Abstract: Two-dimensional array-based datasets are pervasive in a variety of domains. Current approaches for generative modeling have typically been limited to conventional image datasets and operate in the pixel domain, which does not explicitly capture the correlation between pixels. Additionally, these approaches do not extend to scientific and other applications where each element value is continuous and is not limited to a fixed range. In this paper, we propose a novel approach for generating two-dimensional datasets by moving the computations to the space of representation bases and show its usefulness for two different datasets, one from imaging and another from scientific computing. The proposed approach is general and can be applied to any dataset, representation basis, or generative model. We provide a comprehensive performance comparison of various combinations of generative models and representation basis spaces. We also propose a new evaluation metric which captures the deficiency of generating images in pixel space.

SparsePipe: Parallel Deep Learning for 3D Point Clouds

Dec 27, 2020

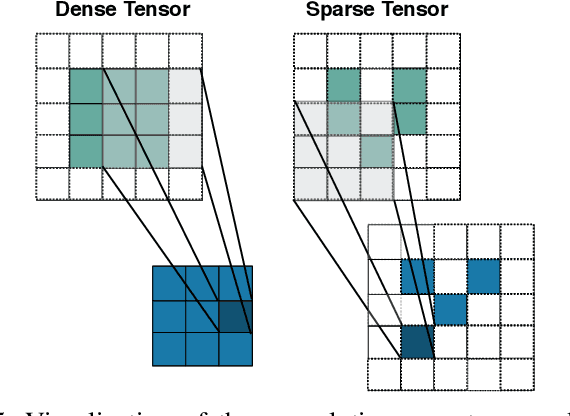

Abstract: We propose SparsePipe, an efficient and asynchronous parallelism approach for handling 3D point clouds with multi-GPU training. SparsePipe is built to support 3D sparse data such as point clouds. It achieves this by adopting generalized convolutions with sparse tensor representation to build expressive high-dimensional convolutional neural networks. Compared to dense solutions, the new models can efficiently process irregular point clouds without densely sliding over the entire space, significantly reducing the memory requirements and allowing higher resolutions of the underlying 3D volumes for better performance. SparsePipe exploits intra-batch parallelism that partitions input data across multiple processors and further improves the training throughput with inter-batch pipelining to overlap communication and computing. In addition, it suitably partitions the model when the GPUs are heterogeneous such that the computing is load-balanced with reduced communication overhead. Using experimental results on an eight-GPU platform, we show that SparsePipe can parallelize effectively and obtain better performance on current point cloud benchmarks for both training and inference, compared to dense solutions.

A Unified Framework for Multiclass and Multilabel Support Vector Machines

Mar 25, 2020

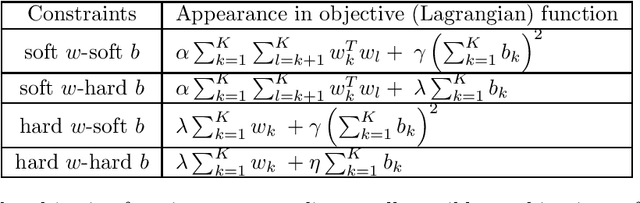

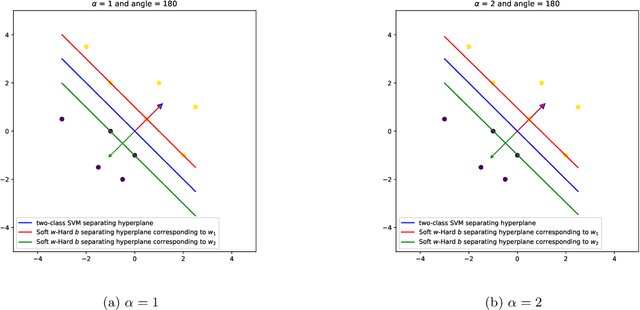

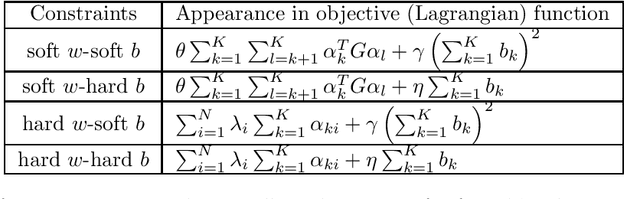

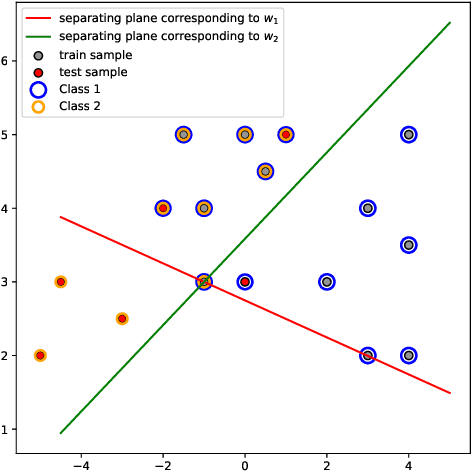

Abstract: We propose a novel integrated formulation for multiclass and multilabel support vector machines (SVMs). A number of approaches have been proposed to extend the original binary SVM to an all-in-one multiclass SVM. However, its direct extension to a unified multilabel SVM has not been widely investigated. We propose a straightforward extension to the SVM to cope with multiclass and multilabel classification problems within a unified framework. Our framework deviates from the conventional soft margin SVM framework with its direct oppositional structure. In our formulation, class-specific weight vectors (normal vectors) are learned by maximizing their margin with respect to an origin and penalizing patterns when they get too close to this origin. As a result, each weight vector chooses an orientation and a magnitude with respect to this origin in such a way that it best represents the patterns belonging to its corresponding class. Opposition between classes is introduced into the formulation via the minimization of pairwise inner products of weight vectors. We also extend our framework to cope with nonlinear separability via standard reproducing kernel Hilbert spaces (RKHS). Biases, which are closely related to the origin, need to be treated properly in both the original feature space and Hilbert space. We have the flexibility to incorporate constraints into the formulation (if they better reflect the underlying geometry) and improve the performance of the classifier. To this end, specifics and technicalities such as the origin in RKHS are addressed. Results demonstrate a competitive classifier for both multiclass and multilabel classification problems.

Intelligent Intersection: Two-Stream Convolutional Networks for Real-time Near Accident Detection in Traffic Video

Jan 04, 2019

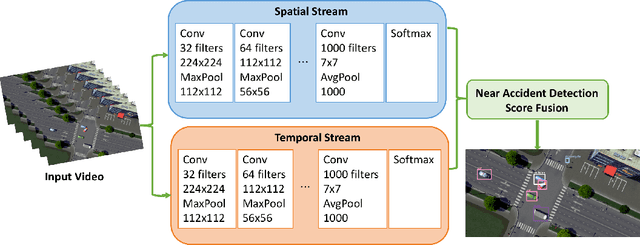

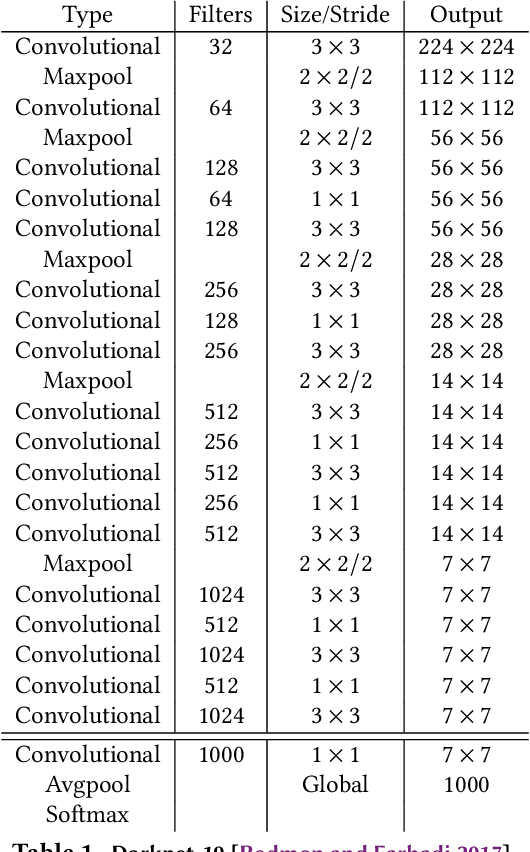

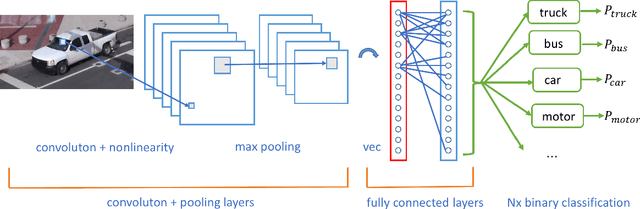

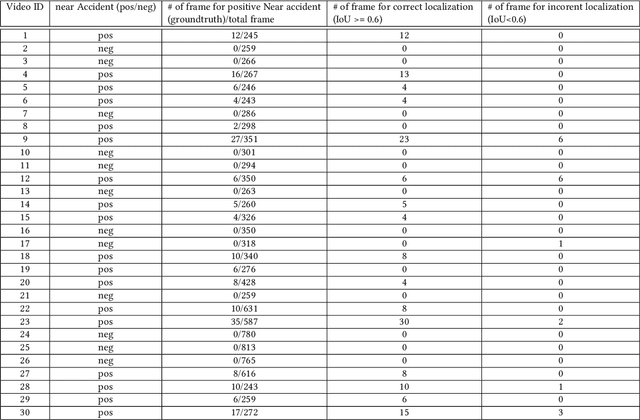

Abstract: In Intelligent Transportation Systems, real-time systems that monitor and analyze road users are becoming increasingly critical as we march toward the smart city era. Vision-based frameworks for Object Detection, Multiple Object Tracking, and Traffic Near Accident Detection are important applications of Intelligent Transportation Systems, particularly in video surveillance. Although deep neural networks have recently achieved great success in many computer vision tasks, a unified framework for all three tasks remains challenging, with difficulties compounded by the demand for real-time performance, complex urban settings, highly dynamic traffic events, and many traffic movements. In this paper, we propose a two-stream Convolutional Network architecture that performs real-time detection, tracking, and near accident detection of road users in traffic video data. The two-stream model consists of a spatial stream network for Object Detection and a temporal stream network to leverage motion features for Multiple Object Tracking. We detect near accidents by incorporating appearance features and motion features from two-stream networks. Using aerial videos, we propose a Traffic Near Accident Dataset (TNAD) covering various types of traffic interactions that is suitable for vision-based traffic analysis tasks. Our experiments demonstrate the advantage of our framework with an overall competitive qualitative and quantitative performance at high frame rates on the TNAD dataset.

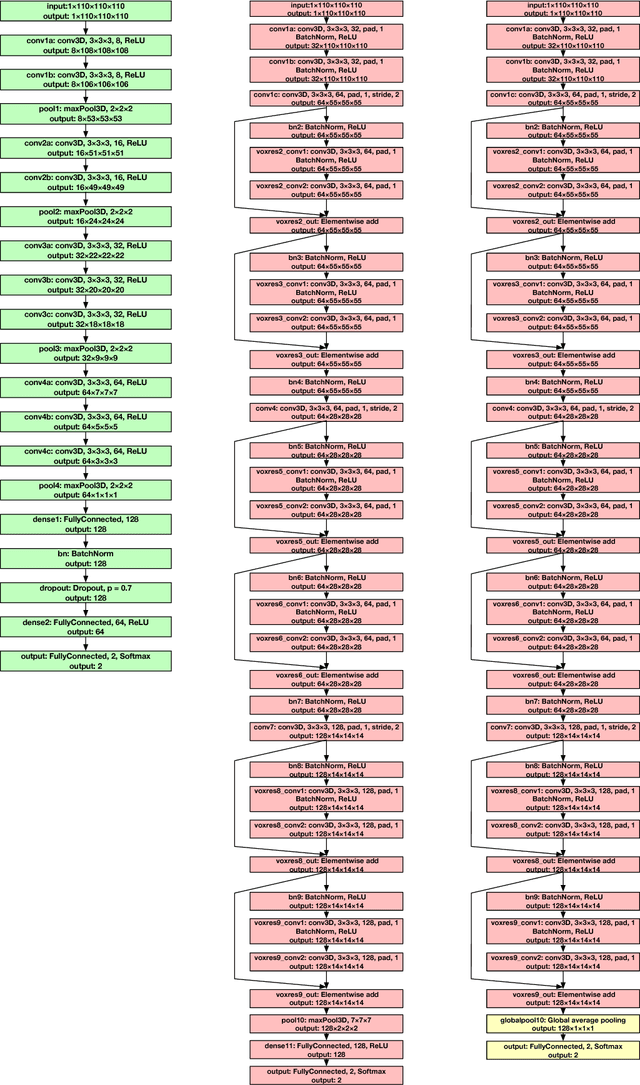

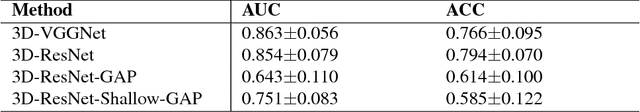

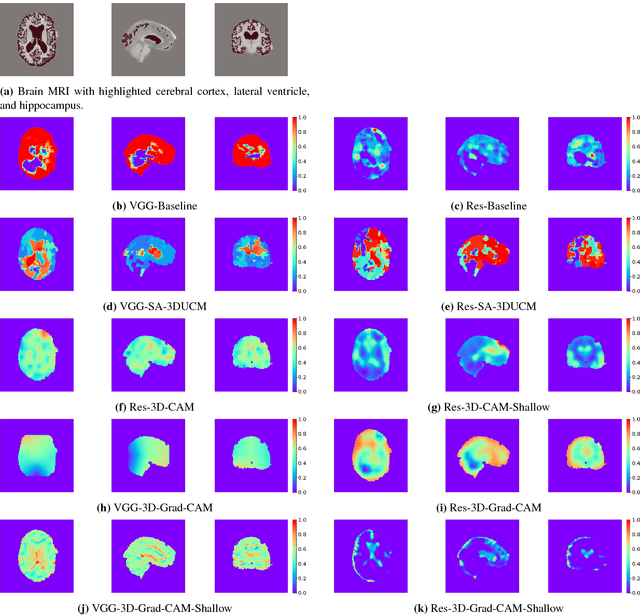

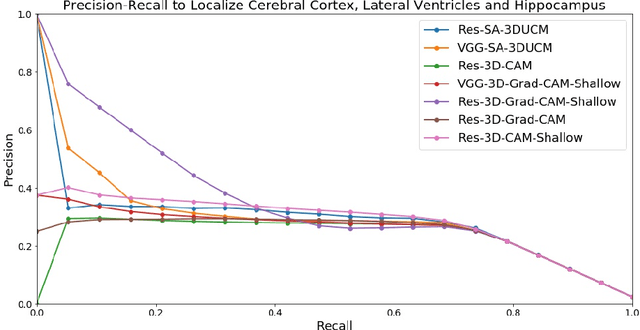

Visual Explanations From Deep 3D Convolutional Neural Networks for Alzheimer's Disease Classification

Jul 06, 2018

Abstract: We develop three efficient approaches for generating visual explanations from 3D convolutional neural networks (3D-CNNs) for Alzheimer's disease classification. One approach conducts sensitivity analysis on hierarchical 3D image segmentation, and the other two visualize network activations on a spatial map. Visual checks and a quantitative localization benchmark indicate that all approaches identify important brain parts for Alzheimer's disease diagnosis. Comparative analysis shows that the sensitivity-analysis-based approach has difficulty handling loosely distributed cerebral cortex, and approaches based on visualization of activations are constrained by the resolution of the convolutional layer. The complementarity of these methods improves the understanding of 3D-CNNs in Alzheimer's disease classification from different perspectives.

Global Model Interpretation via Recursive Partitioning

May 23, 2018

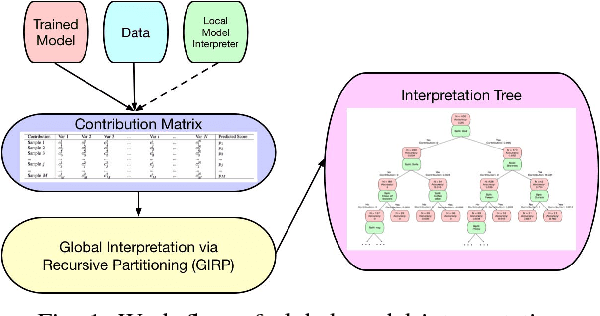

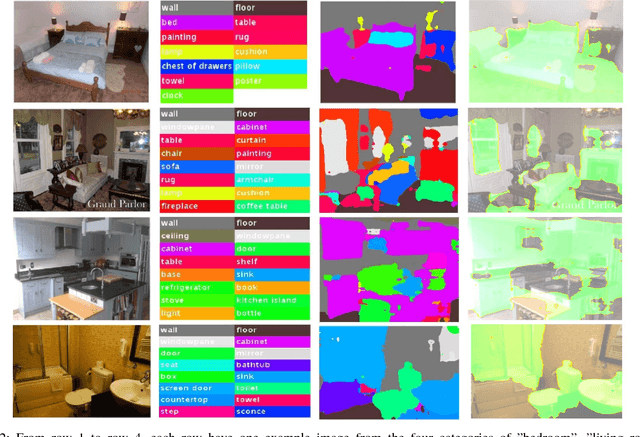

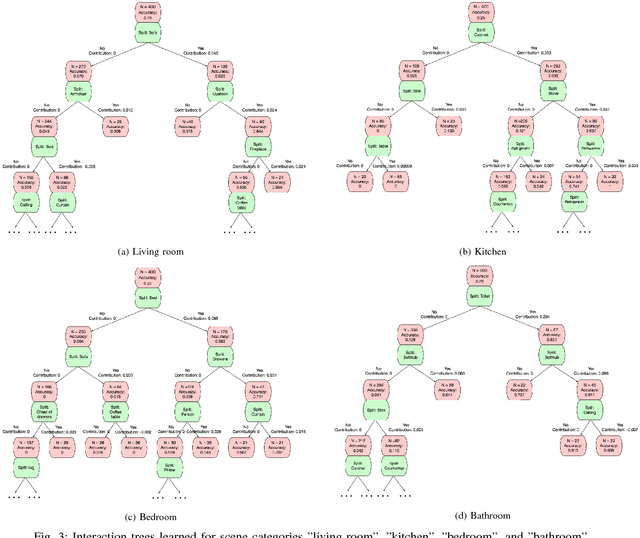

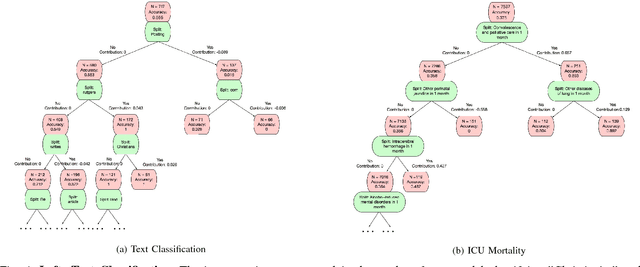

Abstract:In this work, we propose a simple but effective method to interpret black-box machine learning models globally. That is, we use a compact binary tree, the interpretation tree, to explicitly represent the most important decision rules that are implicitly contained in the black-box machine learning models. This tree is learned from the contribution matrix which consists of the contributions of input variables to predicted scores for each single prediction. To generate the interpretation tree, a unified process recursively partitions the input variable space by maximizing the difference in the average contribution of the split variable between the divided spaces. We demonstrate the effectiveness of our method in diagnosing machine learning models on multiple tasks. Also, it is useful for new knowledge discovery as such insights are not easily identifiable when only looking at single predictions. In general, our work makes it easier and more efficient for human beings to understand machine learning models.
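The splitting rule described in this abstract can be illustrated with a short sketch. Everything below is an assumption made for illustration: the names `best_split` and `build_tree`, the exhaustive search over midpoints between observed values, and the depth-based stopping rule are hypothetical choices, not details taken from the paper.

```python
import numpy as np


def best_split(X, C):
    """Find the (feature, threshold) whose two sides differ most in the
    average contribution of that same feature.

    X: (n, d) input variables; C: (n, d) contribution matrix, where C[i, j]
    is the contribution of variable j to the prediction for sample i.
    Returns (gap, feature, threshold); feature is None if no split helps.
    """
    best = (0.0, None, None)
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        # Midpoints between consecutive observed values keep both sides non-empty.
        for t in (values[:-1] + values[1:]) / 2.0:
            left = X[:, j] <= t
            gap = abs(C[left, j].mean() - C[~left, j].mean())
            if gap > best[0]:
                best = (gap, j, t)
    return best


def build_tree(X, C, max_depth=2):
    """Recursively partition the input space; stop at max_depth or when no
    split changes the average contribution."""
    gap, feature, threshold = best_split(X, C)
    if max_depth == 0 or feature is None:
        return {"leaf": True, "n": len(X)}
    left = X[:, feature] <= threshold
    return {
        "leaf": False,
        "feature": feature,
        "threshold": float(threshold),
        "gap": float(gap),
        "left": build_tree(X[left], C[left], max_depth - 1),
        "right": build_tree(X[~left], C[~left], max_depth - 1),
    }
```

The contribution matrix `C` is taken as given here; how it is computed for a particular black-box model is outside the scope of this sketch.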

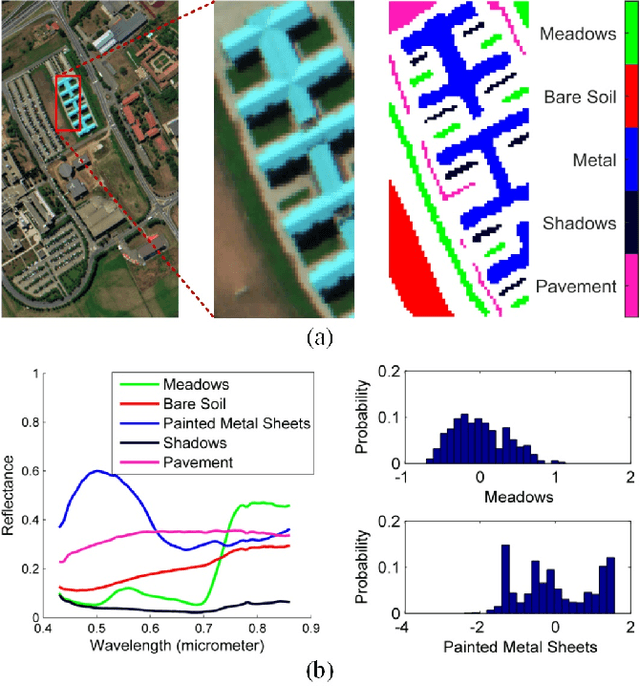

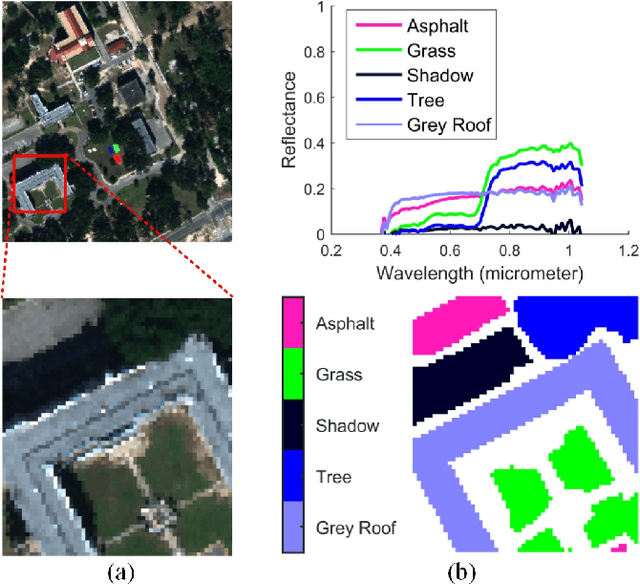

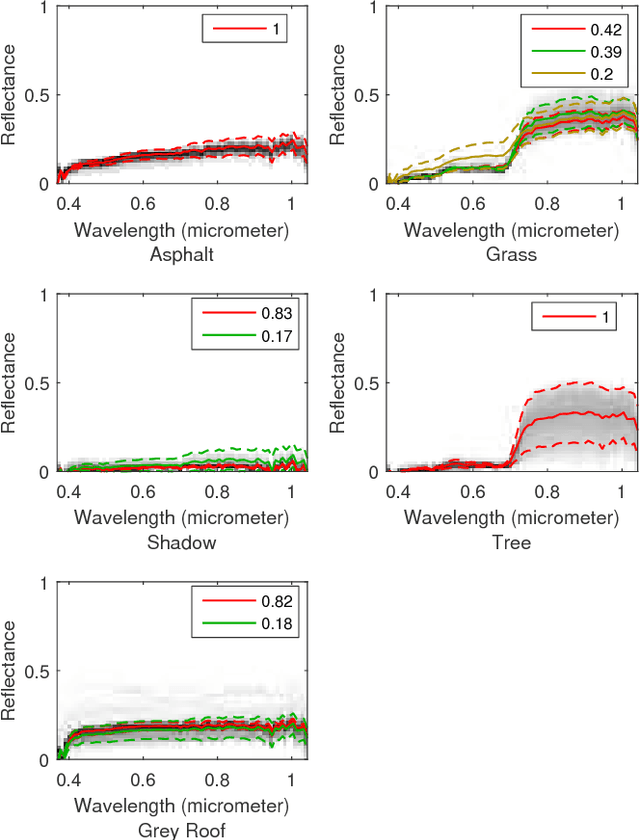

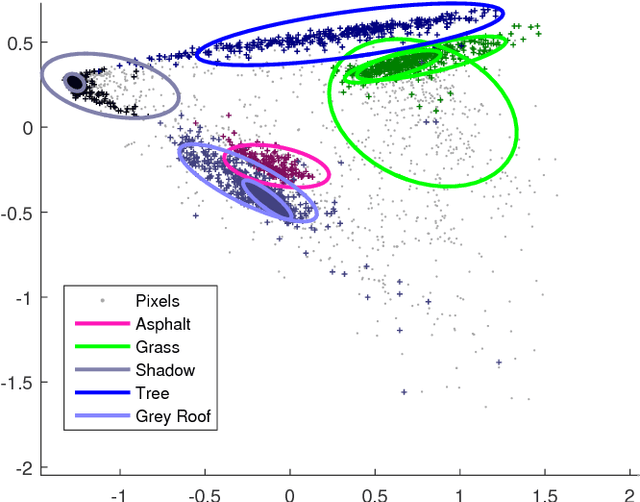

A Gaussian mixture model representation of endmember variability in hyperspectral unmixing

Jan 15, 2018

Abstract:Hyperspectral unmixing while considering endmember variability is usually performed by the normal compositional model (NCM), where the endmembers for each pixel are assumed to be sampled from unimodal Gaussian distributions. However, in real applications, the distribution of a material is often not Gaussian. In this paper, we use Gaussian mixture models (GMM) to represent the endmember variability. We show, given the GMM starting premise, that the distribution of the mixed pixel (under the linear mixing model) is also a GMM (and this is shown from two perspectives). The first perspective originates from the random variable transformation and gives a conditional density function of the pixels given the abundances and GMM parameters. With proper smoothness and sparsity prior constraints on the abundances, the conditional density function leads to a standard maximum a posteriori (MAP) problem which can be solved using generalized expectation maximization. The second perspective originates from marginalizing over the endmembers in the GMM, which provides us with a foundation to solve for the endmembers at each pixel. Hence, our model can not only estimate the abundances and distribution parameters, but also the distinct endmember set for each pixel. We tested the proposed GMM on several synthetic and real datasets, and showed its potential by comparing it to current popular methods.
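The claim that a linear mixture of GMM-distributed endmembers is itself a GMM can be illustrated numerically. The sketch below assumes the endmembers are mutually independent and that any additive noise is isotropic Gaussian; the function name, argument layout, and `noise_var` parameter are hypothetical and not taken from the paper. Each combination of one component per endmember yields one component of the mixed-pixel density, with weight equal to the product of the chosen weights, mean equal to the abundance-weighted sum of the chosen means, and covariance equal to the sum of the chosen covariances scaled by the squared abundances.

```python
import itertools

import numpy as np


def mixed_pixel_gmm(alpha, weights, means, covs, noise_var=0.0):
    """Parameters of the GMM for y = sum_j alpha[j] * m_j + noise.

    alpha:   (M,) abundances.
    weights: list of M arrays; weights[j] has shape (K_j,).
    means:   list of M arrays; means[j] has shape (K_j, B).
    covs:    list of M arrays; covs[j] has shape (K_j, B, B).
    Returns (w, mu, cov) with one row per combination of components.
    """
    n_bands = means[0].shape[1]
    out_w, out_mu, out_cov = [], [], []
    # Enumerate every way of picking one Gaussian component per endmember.
    for combo in itertools.product(*(range(len(w)) for w in weights)):
        w = 1.0
        mu = np.zeros(n_bands)
        cov = noise_var * np.eye(n_bands)
        for j, k in enumerate(combo):
            w *= weights[j][k]
            mu += alpha[j] * means[j][k]
            cov += alpha[j] ** 2 * covs[j][k]
        out_w.append(w)
        out_mu.append(mu)
        out_cov.append(cov)
    return np.array(out_w), np.array(out_mu), np.array(out_cov)
```

The number of mixed components is the product of the per-endmember component counts, so this enumeration is only practical for small mixtures.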

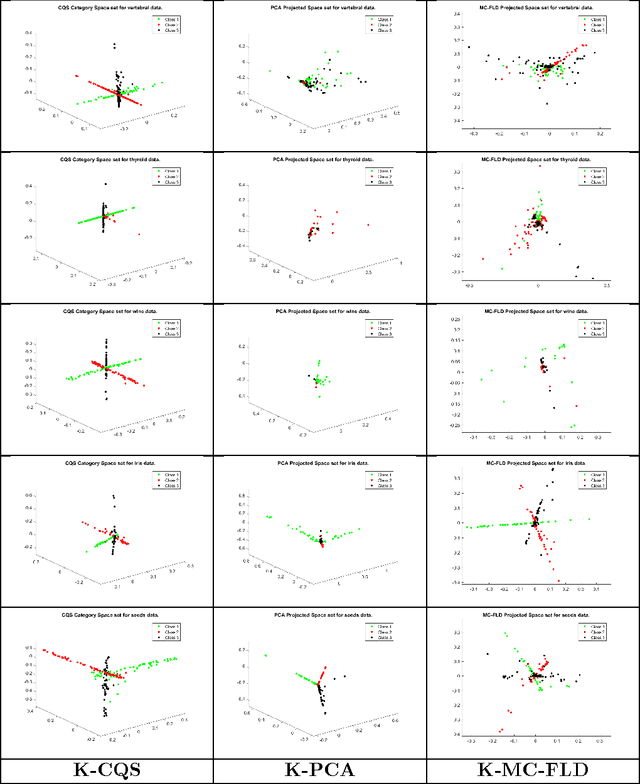

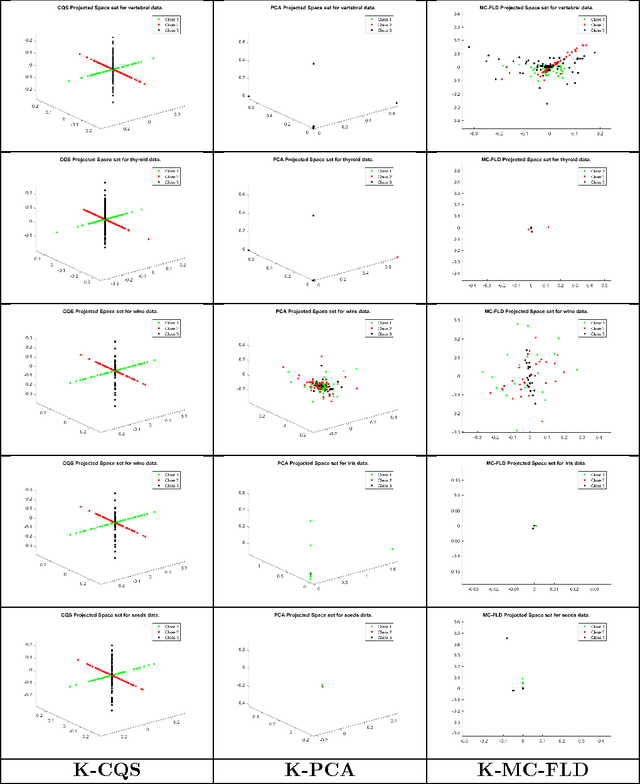

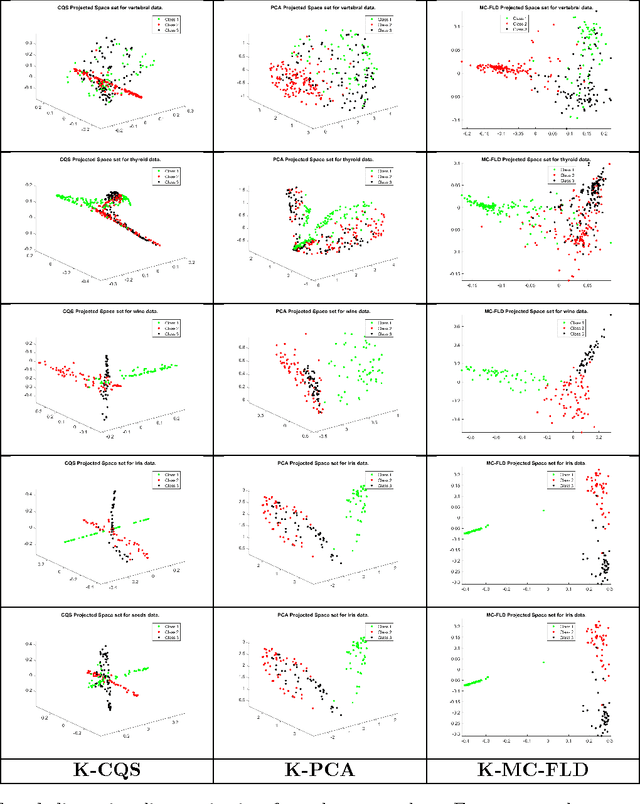

A Category Space Approach to Supervised Dimensionality Reduction

Oct 27, 2016

Abstract: Supervised dimensionality reduction has emerged as an important theme in the last decade. Despite the plethora of models and formulations, there is a lack of a simple model which aims to project the set of patterns into a space defined by the classes (or categories). To this end, we set up a model in which each class is represented as a 1D subspace of the vector space formed by the features. Assuming the number of classes does not exceed the number of features, the model results in multi-class supervised learning in which the features of each class are projected into the class subspace. Class discrimination is automatically guaranteed via the imposition of orthogonality of the 1D class subspaces. The resulting optimization problem - formulated as the minimization of a sum of quadratic functions on a Stiefel manifold - while being non-convex (due to the constraints), nevertheless has a structure for which we can identify when we have reached a global minimum. After formulating a version with standard inner products, we extend the formulation to reproducing kernel Hilbert spaces in a straightforward manner. The optimization approach also extends in a similar fashion to the kernel version. Results and comparisons with the multi-class Fisher linear (and kernel) discriminants and principal component analysis (linear and kernel) showcase the relative merits of this approach to dimensionality reduction.
