Chengliang Yang

Visual Explanations From Deep 3D Convolutional Neural Networks for Alzheimer's Disease Classification

Jul 06, 2018

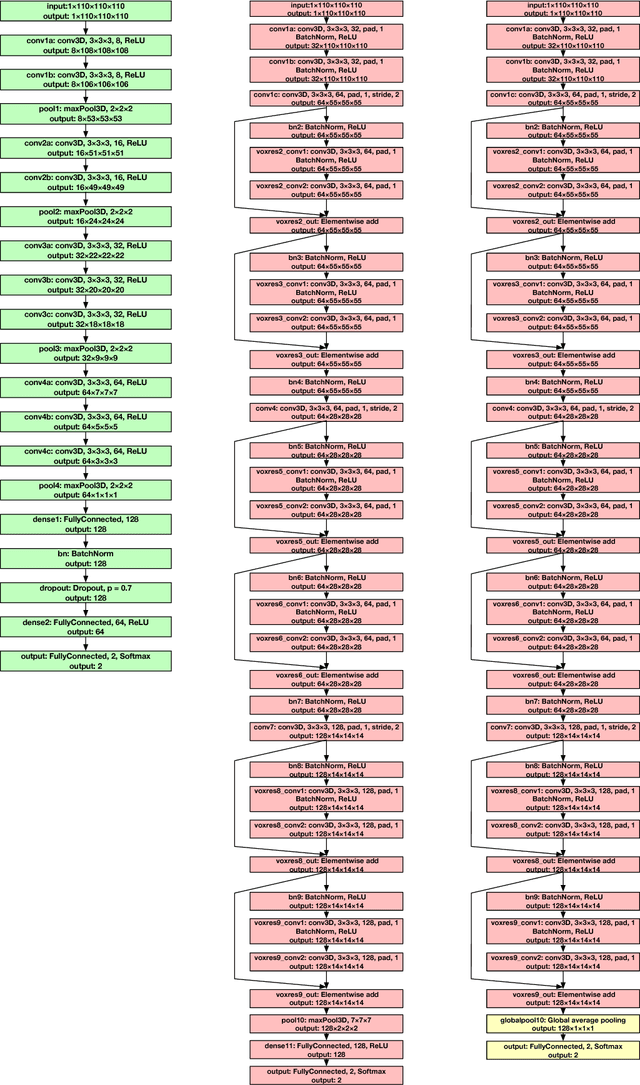

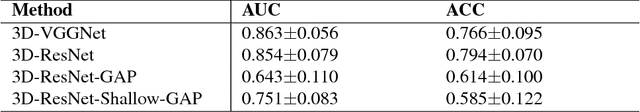

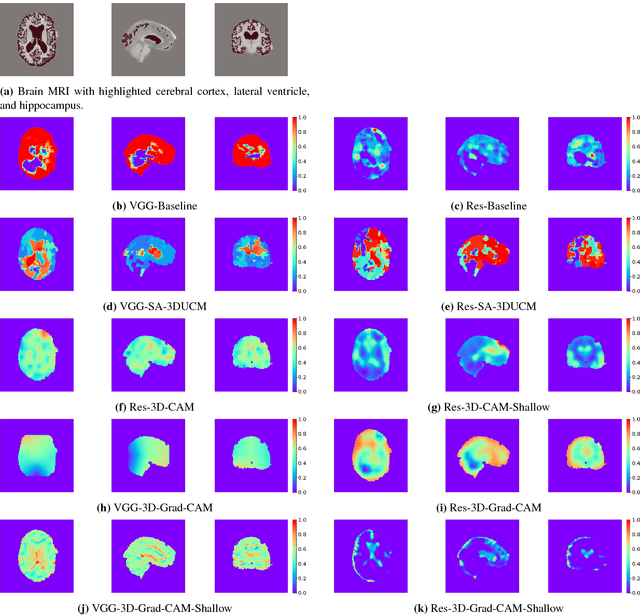

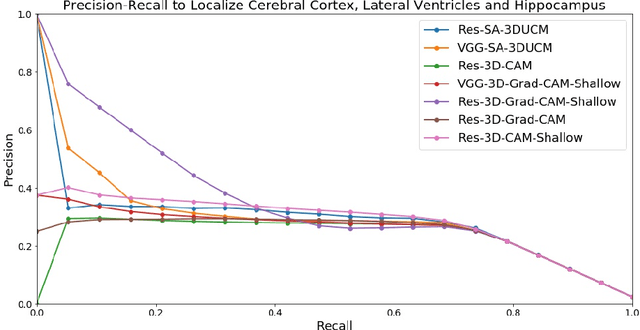

Abstract: We develop three efficient approaches for generating visual explanations from 3D convolutional neural networks (3D-CNNs) for Alzheimer's disease classification. One approach conducts sensitivity analysis on hierarchical 3D image segmentation, and the other two visualize network activations on a spatial map. Visual checks and a quantitative localization benchmark indicate that all approaches identify important brain parts for Alzheimer's disease diagnosis. Comparative analysis shows that the sensitivity-analysis-based approach has difficulty handling the loosely distributed cerebral cortex, and that the approaches based on visualization of activations are constrained by the resolution of the convolutional layer. The complementarity of these methods improves the understanding of 3D-CNNs in Alzheimer's disease classification from different perspectives.
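To make the sensitivity-analysis idea concrete, here is a minimal sketch in Python. It is not the paper's implementation: it uses a single flat segmentation rather than a hierarchical one, and the names `segment_sensitivity`, `predict`, and `fill_value` are hypothetical. The assumption is that `predict` maps a 3D volume to a scalar class score, and that occluding an important segment lowers that score.

```python
import numpy as np

def segment_sensitivity(volume, segments, predict, fill_value=0.0):
    """Occlude one segment at a time and record the drop in the model score.

    volume     : 3D float array (e.g. an MRI scan)
    segments   : 3D integer array of the same shape, one label per voxel
    predict    : hypothetical callable, volume -> scalar score
    fill_value : value written into the occluded voxels (an assumption)
    """
    base_score = predict(volume)
    heatmap = np.zeros(volume.shape, dtype=float)
    for label in np.unique(segments):
        mask = segments == label
        occluded = volume.copy()
        occluded[mask] = fill_value
        # A large positive drop means the segment mattered for the score.
        heatmap[mask] = base_score - predict(occluded)
    return heatmap
```

The returned heatmap has the shape of the input volume, so it can be overlaid on the scan. A hierarchical variant would, under the same assumptions, repeat this procedure inside the segments with the largest drops.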

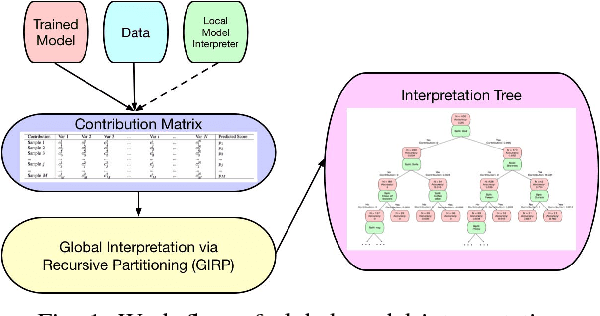

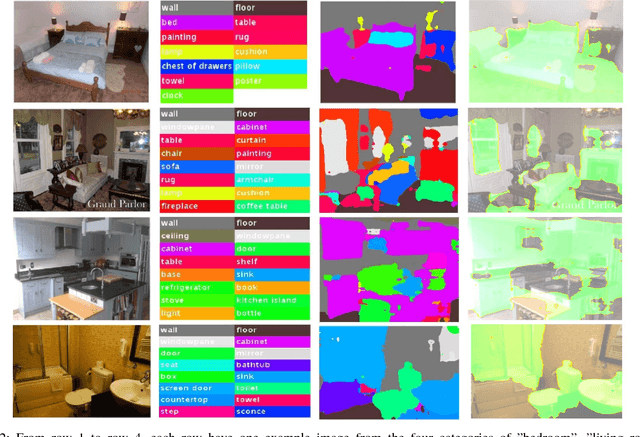

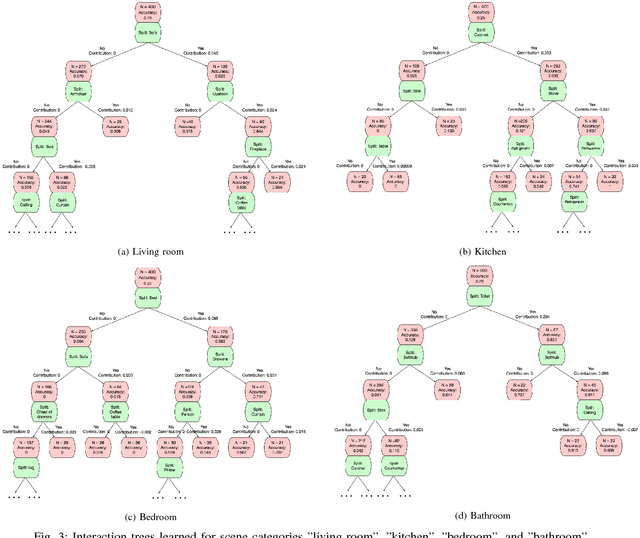

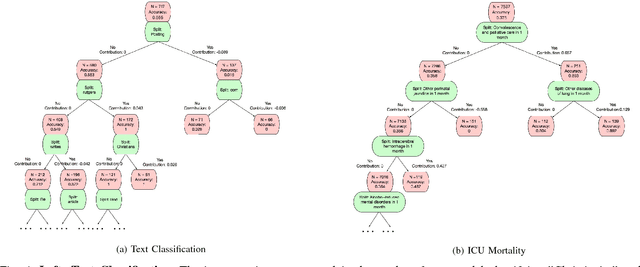

Global Model Interpretation via Recursive Partitioning

May 23, 2018

Abstract: In this work, we propose a simple but effective method to interpret black-box machine learning models globally. That is, we use a compact binary tree, the interpretation tree, to explicitly represent the most important decision rules that are implicitly contained in the black-box machine learning models. This tree is learned from the contribution matrix which consists of the contributions of input variables to predicted scores for each single prediction. To generate the interpretation tree, a unified process recursively partitions the input variable space by maximizing the difference in the average contribution of the split variable between the divided spaces. We demonstrate the effectiveness of our method in diagnosing machine learning models on multiple tasks. Also, it is useful for new knowledge discovery as such insights are not easily identifiable when only looking at single predictions. In general, our work makes it easier and more efficient for human beings to understand machine learning models.
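The recursive partitioning step described in the abstract can be sketched as follows. This is an illustrative reading of the abstract, not the authors' code: the function names, the midpoint thresholds, and the stopping parameters (`max_depth`, `min_leaf`, `min_gain`) are all assumptions. `X` holds the input variables and `C` is the contribution matrix of the same shape, where `C[i, j]` is the contribution of variable `j` to prediction `i`.

```python
import numpy as np

def best_split(X, C, min_leaf=1):
    """Find the (gain, feature, threshold) that maximizes the absolute
    difference in mean contribution of the split variable between the
    two sides of the split."""
    n, d = X.shape
    best = None
    for j in range(d):
        values = np.unique(X[:, j])
        # Candidate thresholds: midpoints between consecutive unique values.
        for t in (values[:-1] + values[1:]) / 2.0:
            left = X[:, j] <= t
            n_left = int(left.sum())
            if n_left < min_leaf or n - n_left < min_leaf:
                continue
            gain = abs(C[left, j].mean() - C[~left, j].mean())
            if best is None or gain > best[0]:
                best = (gain, j, float(t))
    return best

def build_tree(X, C, depth=0, max_depth=2, min_leaf=1, min_gain=1e-12):
    """Recursively partition the input space into a small binary tree."""
    split = best_split(X, C, min_leaf) if depth < max_depth else None
    if split is None or split[0] <= min_gain:
        return {"n_samples": len(X)}  # leaf node
    gain, j, t = split
    left = X[:, j] <= t
    return {
        "feature": j,
        "threshold": t,
        "gain": gain,
        "left": build_tree(X[left], C[left], depth + 1, max_depth, min_leaf, min_gain),
        "right": build_tree(X[~left], C[~left], depth + 1, max_depth, min_leaf, min_gain),
    }
```

Each internal node of the resulting dictionary records one decision rule (`feature <= threshold`) together with the contribution gap it separates. How the contribution matrix itself is computed is outside this sketch and depends on the attribution method used.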