Amr Mohamed

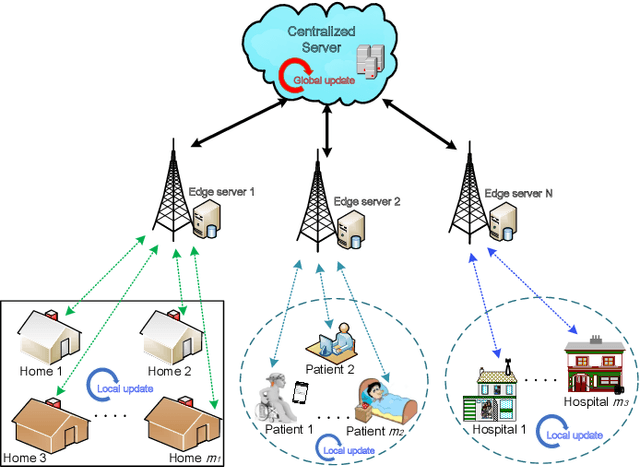

Optimal Resource Management for Hierarchical Federated Learning over HetNets with Wireless Energy Transfer

May 03, 2023

Abstract: Remote monitoring systems analyze the environment dynamics in different smart industrial applications, such as occupational health and safety, and environmental monitoring. Specifically, in industrial Internet of Things (IoT) systems, the huge number of devices and the expected performance put pressure on resources, such as computation, network, and device energy. Distributed training of Machine and Deep Learning (ML/DL) models for intelligent industrial IoT applications is very challenging for resource-limited devices over heterogeneous wireless networks (HetNets). Hierarchical Federated Learning (HFL) performs training at multiple layers, offloading the tasks to nearby Multi-Access Edge Computing (MEC) units. In this paper, we propose a novel energy-efficient HFL framework enabled by Wireless Energy Transfer (WET) and designed for heterogeneous networks with massive Multiple-Input Multiple-Output (MIMO) wireless backhaul. Our energy-efficiency approach is formulated as a Mixed-Integer Non-Linear Programming (MINLP) problem, where we optimize the HFL device association and manage the wireless transmitted energy. However, due to its high complexity, we design a Heuristic Resource Management Algorithm, namely H2RMA, that respects energy, channel quality, and accuracy constraints, while presenting a low computational complexity. We also improve the energy consumption of the network using an efficient device scheduling scheme. Finally, we investigate device mobility and its impact on the HFL performance. Our extensive experiments confirm the high performance of the proposed resource management approach in HFL over HetNets, in terms of training loss and grid energy costs.

RL-DistPrivacy: Privacy-Aware Distributed Deep Inference for low latency IoT systems

Aug 27, 2022

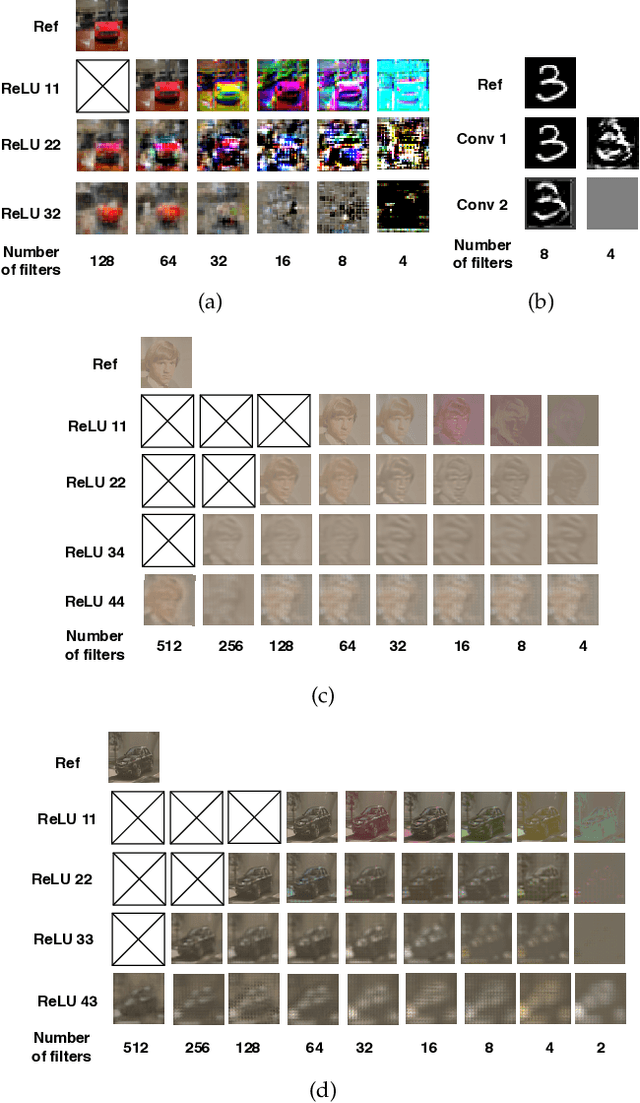

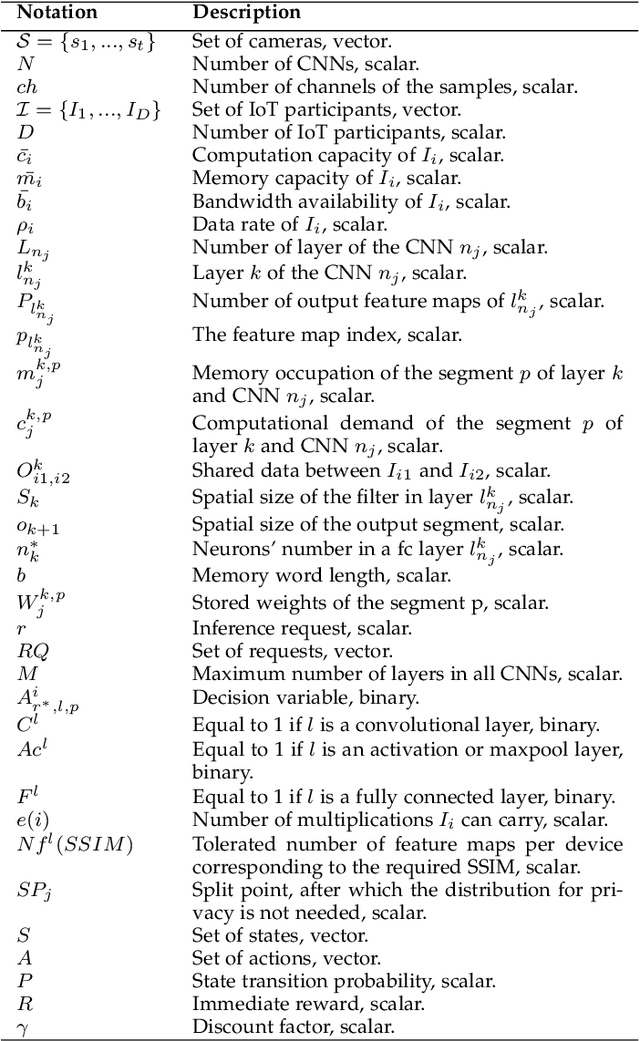

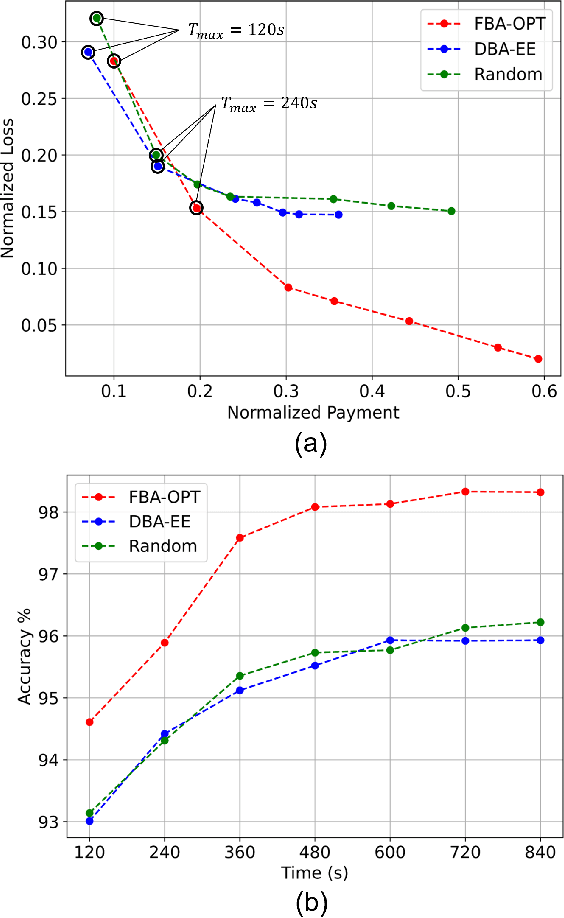

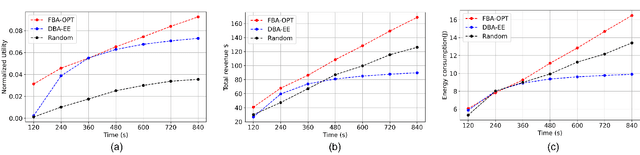

Abstract: Although Deep Neural Networks (DNN) have become the backbone technology of several ubiquitous applications, their deployment in resource-constrained machines, e.g., Internet of Things (IoT) devices, is still challenging. To satisfy the resource requirements of such a paradigm, collaborative deep inference with IoT synergy was introduced. However, the distribution of DNN networks suffers from severe data leakage. Various threats have been presented, including black-box attacks, where malicious participants can recover arbitrary inputs fed into their devices. Although many countermeasures were designed to achieve privacy-preserving DNN, most of them result in additional computation and lower accuracy. In this paper, we present an approach that targets the security of collaborative deep inference via re-thinking the distribution strategy, without sacrificing the model performance. Particularly, we examine different DNN partitions that make the model susceptible to black-box threats and we derive the amount of data that should be allocated per device to hide properties of the original input. We formulate this methodology as an optimization problem, where we establish a trade-off between the latency of co-inference and the privacy level of data. Next, to relax the optimal solution, we shape our approach as a Reinforcement Learning (RL) design that supports heterogeneous devices as well as multiple DNNs/datasets.

* Published in IEEE Transactions on Network Science and Engineering

Motivating Learners in Multi-Orchestrator Mobile Edge Learning: A Stackelberg Game Approach

Sep 25, 2021

Abstract: Mobile Edge Learning (MEL) is a learning paradigm that enables distributed training of Machine Learning models over heterogeneous edge devices (e.g., IoT devices). Multi-orchestrator MEL refers to the coexistence of multiple learning tasks with different datasets, each of which is governed by an orchestrator to facilitate the distributed training process. In MEL, the training performance deteriorates without the availability of sufficient training data or computing resources. Therefore, it is crucial to motivate edge devices to become learners and offer their computing resources, and either offer their private data or receive the needed data from the orchestrator and participate in the training process of a learning task. In this work, we propose an incentive mechanism, where we formulate the orchestrators-learners interactions as a 2-round Stackelberg game to motivate the participation of the learners. In the first round, the learners decide which learning task to engage in; in the second round, they decide the amount of data to train on if they participate, such that their utility is maximized. We then study the game analytically and derive the learners' optimal strategy. Finally, numerical experiments have been conducted to evaluate the performance of the proposed incentive mechanism.

Energy-Efficient Multi-Orchestrator Mobile Edge Learning

Sep 02, 2021

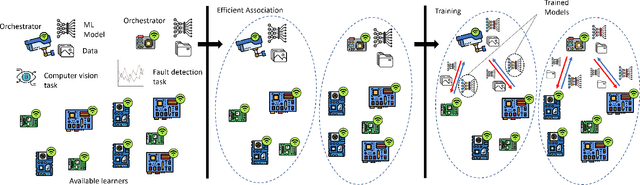

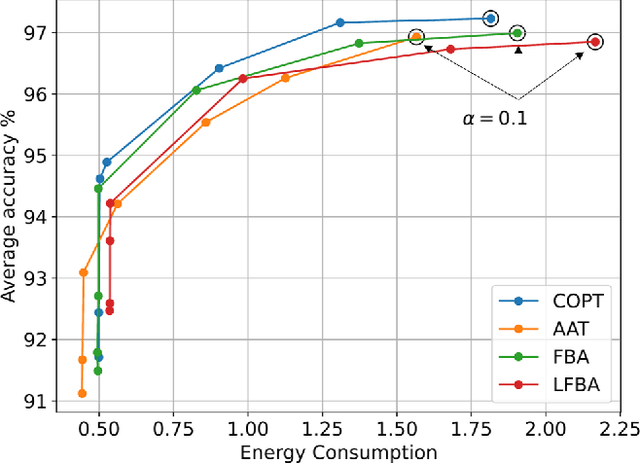

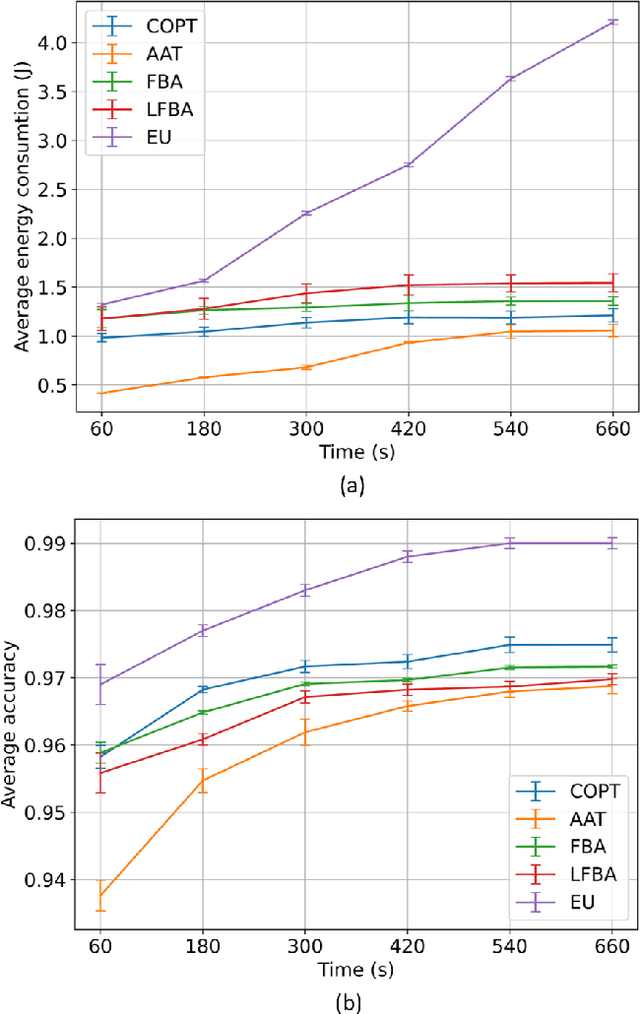

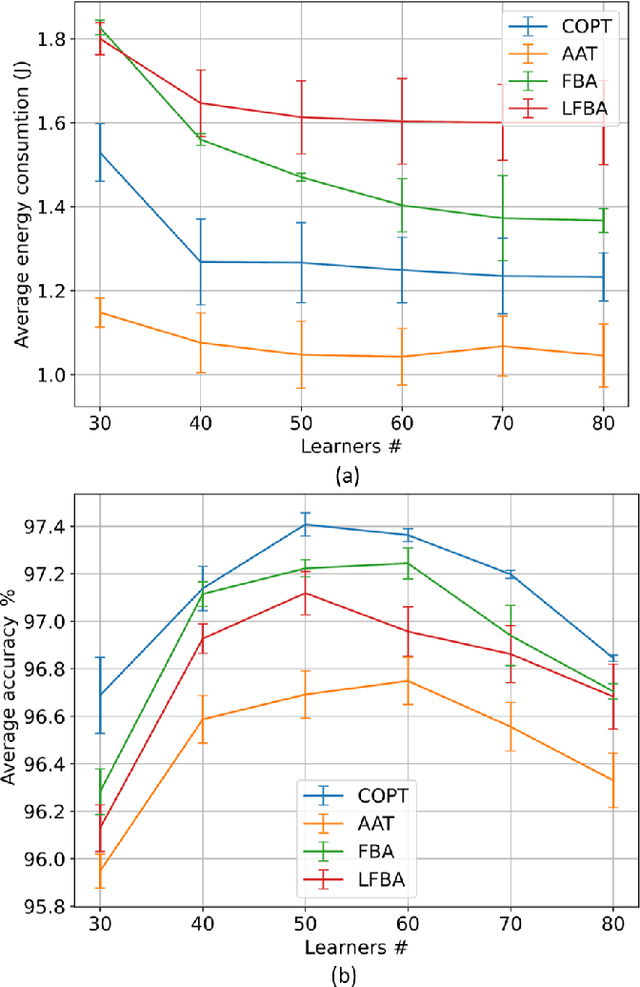

Abstract: Mobile Edge Learning (MEL) is a collaborative learning paradigm that features distributed training of Machine Learning (ML) models over edge devices (e.g., IoT devices). In MEL, possible coexistence of multiple learning tasks with different datasets may arise. The heterogeneity in edge devices' capabilities will require the joint optimization of the learners-orchestrator association and task allocation. To this end, we aim to develop an energy-efficient framework for learners-orchestrator association and learning task allocation, in which each orchestrator gets associated with a group of learners with the same learning task based on their communication channel qualities and computational resources, and allocates the tasks accordingly. Therein, a multi-objective optimization problem is formulated to minimize the total energy consumption and maximize the learning tasks' accuracy. However, solving such an optimization problem requires centralization and the presence of the whole environment information at a single entity, which becomes impractical in large-scale systems. To reduce the solution complexity and to enable solution decentralization, we propose lightweight heuristic algorithms that can achieve near-optimal performance and facilitate the trade-offs between energy consumption, accuracy, and solution complexity. Simulation results show that the proposed approaches reduce the energy consumption significantly while executing multiple learning tasks compared to recent state-of-the-art methods.

Reinforcement Learning for Intelligent Healthcare Systems: A Comprehensive Survey

Aug 05, 2021

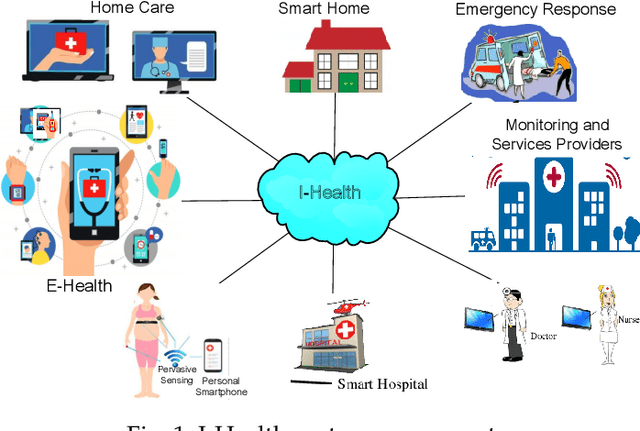

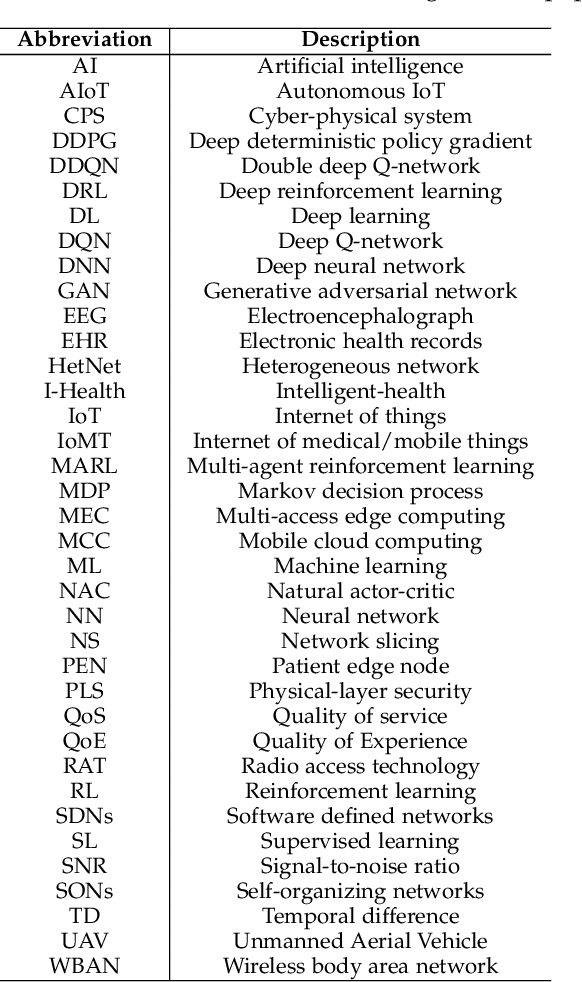

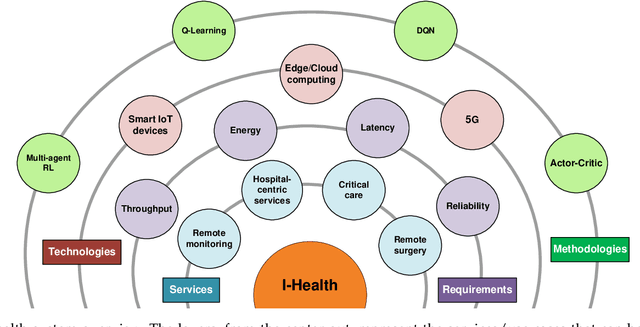

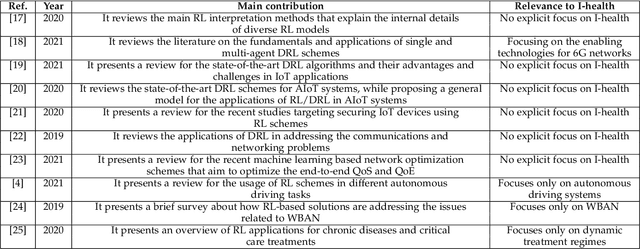

Abstract: The rapid increase in the percentage of chronic disease patients, along with the recent pandemic, poses immediate threats to healthcare expenditure and increases mortality. This calls for transforming healthcare systems away from one-on-one patient treatment into intelligent health systems, to improve services, access and scalability, while reducing costs. Reinforcement Learning (RL) has witnessed an intrinsic breakthrough in solving a variety of complex problems for diverse applications and services. Thus, we conduct in this paper a comprehensive survey of the recent models and techniques of RL that have been developed/used for supporting Intelligent-healthcare (I-health) systems. This paper can guide the readers to deeply understand the state-of-the-art regarding the use of RL in the context of I-health. Specifically, we first present an overview of the challenges and architecture of I-health systems, and of how RL can benefit these systems. We then review the background and mathematical modeling of different RL, Deep RL (DRL), and multi-agent RL models. After that, we provide a deep literature review of the applications of RL in I-health systems. In particular, three main areas have been tackled, i.e., edge intelligence, smart core network, and dynamic treatment regimes. Finally, we highlight emerging challenges and outline future research directions in driving the future success of RL in I-health systems, which opens the door for exploring some interesting and unsolved problems.

VisDrone-CC2020: The Vision Meets Drone Crowd Counting Challenge Results

Jul 19, 2021

Abstract: Crowd counting on the drone platform is an interesting topic in computer vision, which brings new challenges such as small object inference, background clutter and wide viewpoint. However, there are few algorithms focusing on crowd counting on the drone-captured data due to the lack of comprehensive datasets. To this end, we collect a large-scale dataset and organize the Vision Meets Drone Crowd Counting Challenge (VisDrone-CC2020) in conjunction with the 16th European Conference on Computer Vision (ECCV 2020) to promote the developments in the related fields. The collected dataset is formed by 3,360 images, including 2,460 images for training, and 900 images for testing. Specifically, we manually annotate persons with points in each video frame. There are 14 algorithms from 15 institutes submitted to the VisDrone-CC2020 Challenge. We provide a detailed analysis of the evaluation results and conclude the challenge. More information can be found at the website: http://www.aiskyeye.com/.

* The method description of A7 Multi-Scale Aware based SFANet (M-SFANet) is updated and missing references are added

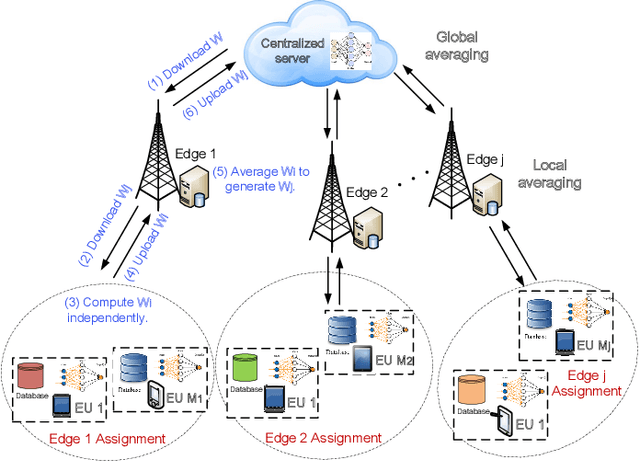

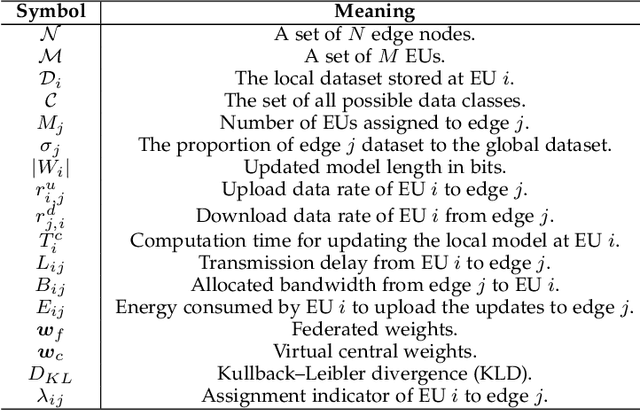

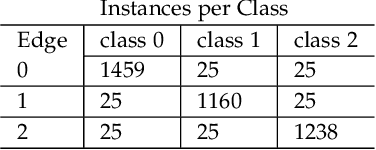

Communication-Efficient Hierarchical Federated Learning for IoT Heterogeneous Systems with Imbalanced Data

Jul 14, 2021

Abstract: Federated learning (FL) is a distributed learning methodology that allows multiple nodes to cooperatively train a deep learning model, without the need to share their local data. It is a promising solution for telemonitoring systems that demand intensive data collection, for detection, classification, and prediction of future events, from different locations while maintaining a strict privacy constraint. Due to privacy concerns and critical communication bottlenecks, it can become impractical to send the FL updated models to a centralized server. Thus, this paper studies the potential of hierarchical FL in IoT heterogeneous systems and proposes an optimized solution for user assignment and resource allocation on multiple edge nodes. In particular, this work focuses on a generic class of machine learning models that are trained using gradient-descent-based schemes while considering the practical constraints of non-uniformly distributed data across different users. We evaluate the proposed system using two real-world datasets, and we show that it outperforms state-of-the-art FL solutions. In particular, our numerical results highlight the effectiveness of our approach and its ability to provide a 4-6% increase in the classification accuracy, with respect to hierarchical FL schemes that consider distance-based user assignment. Furthermore, the proposed approach could significantly accelerate FL training and reduce communication overhead by providing a 75-85% reduction in the communication rounds between edge nodes and the centralized server, for the same model accuracy.
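As a rough illustration of the hierarchical aggregation idea mentioned in this abstract, the sketch below performs sample-weighted averaging first at each edge node and then at the central server. It is not the paper's method: the function names (`weighted_average`, `hierarchical_aggregate`), the flat parameter vectors, and the choice to synchronize with the server after every edge round are all assumptions made for illustration.

```python
import numpy as np

def weighted_average(models, sizes):
    """Average parameter vectors, weighting each by its sample count."""
    sizes = np.asarray(sizes, dtype=float)
    stacked = np.stack(models)
    return (stacked * sizes[:, None]).sum(axis=0) / sizes.sum()

def hierarchical_aggregate(edge_groups):
    """Two-level aggregation (hypothetical helper).

    edge_groups: one list per edge node, each holding
    (parameter_vector, n_samples) tuples for the users assigned to it.
    """
    edge_models, edge_sizes = [], []
    for users in edge_groups:
        params = [p for p, _ in users]
        counts = [n for _, n in users]
        # Edge-level aggregation over the users assigned to this edge node.
        edge_models.append(weighted_average(params, counts))
        edge_sizes.append(sum(counts))
    # Server-level aggregation over the edge models.
    return weighted_average(edge_models, edge_sizes)

# Toy example: two edge nodes, three users in total.
edges = [
    [(np.array([1.0, 1.0]), 1), (np.array([3.0, 3.0]), 3)],
    [(np.array([5.0, 5.0]), 4)],
]
global_model = hierarchical_aggregate(edges)
```

In a practical hierarchical scheme the edge nodes would typically run several aggregation rounds with their users before contacting the server, which is where a reduction in edge-to-server communication rounds would come from; this sketch omits that loop and the user-assignment optimization entirely.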

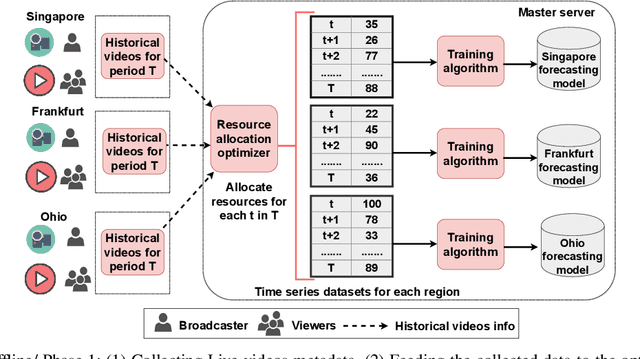

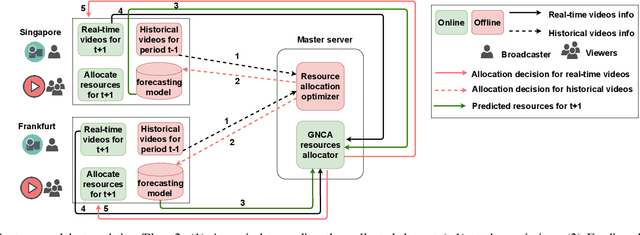

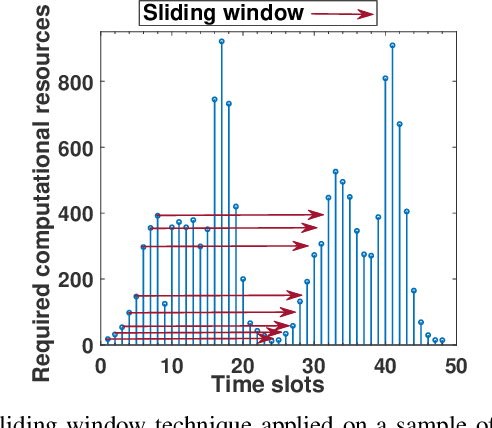

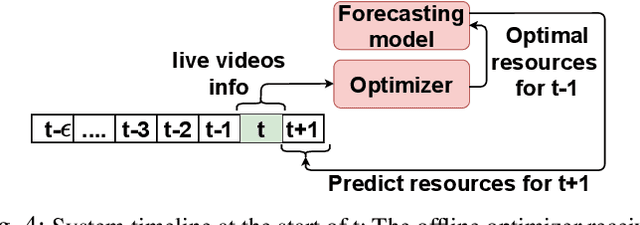

An Intelligent Resource Reservation for Crowdsourced Live Video Streaming Applications in Geo-Distributed Cloud Environment

Jun 04, 2021

Abstract: Crowdsourced live video streaming (livecast) services such as Facebook Live, YouNow, Douyu and Twitch are gaining more momentum recently. Allocating the limited resources in a cost-effective manner while maximizing the Quality of Service (QoS) through real-time delivery and the provision of the appropriate representations for all viewers is a challenging problem. In our paper, we introduce a machine-learning-based predictive resource allocation framework for geo-distributed cloud sites, considering the delay and quality constraints to guarantee the maximum QoS for viewers and the minimum cost for content providers. First, we present an offline optimization that decides the required transcoding resources in distributed regions near the viewers with a trade-off between the QoS and the overall cost. Second, we use machine learning to build forecasting models that proactively predict the approximate transcoding resources to be reserved at each cloud site ahead of time. Finally, we develop a Greedy Nearest and Cheapest algorithm (GNCA) to perform the resource allocation of real-time broadcasted videos on the rented resources. Extensive simulations have shown that GNCA outperforms the state-of-the-art resource allocation approaches for crowdsourced live streaming by achieving more than 20% gain in terms of system cost while serving the viewers with relatively lower latency.

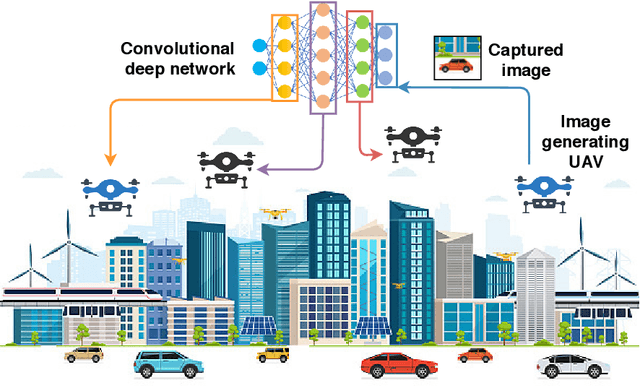

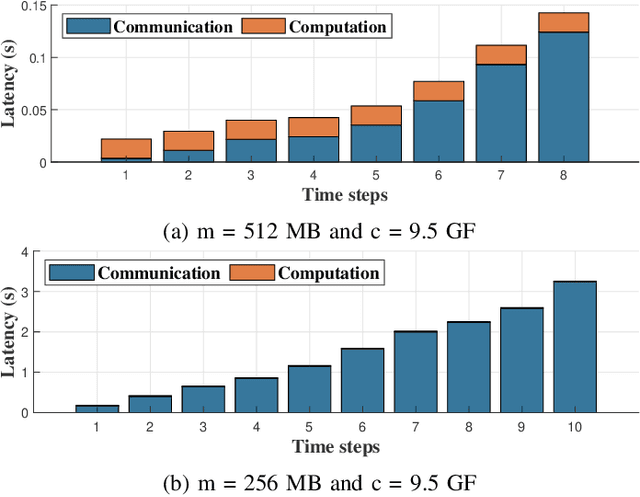

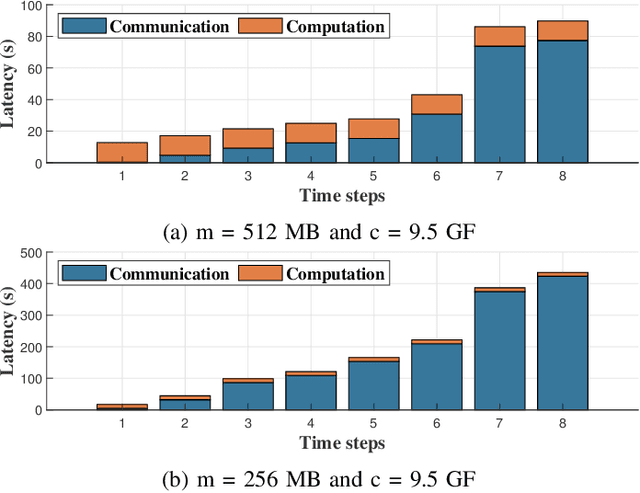

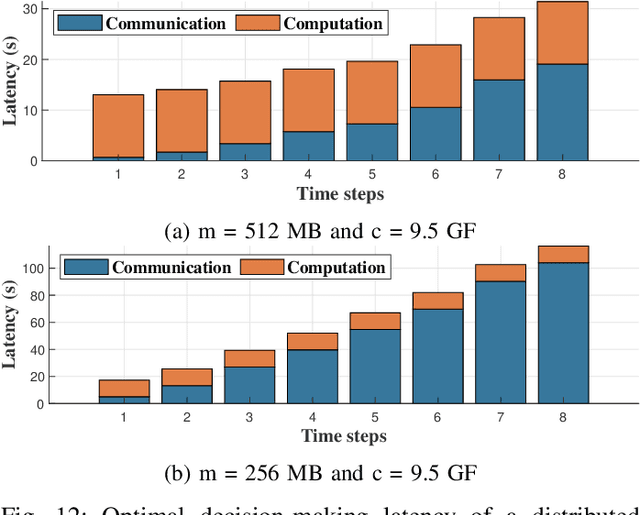

Distributed CNN Inference on Resource-Constrained UAVs for Surveillance Systems: Design and Optimization

May 23, 2021

Abstract: Unmanned Aerial Vehicles (UAVs) have attracted great interest in the last few years owing to their ability to cover large areas and access difficult and hazardous target zones, which is not the case for traditional systems relying on direct observations obtained from fixed cameras and sensors. Furthermore, thanks to the advancements in computer vision and machine learning, UAVs are being adopted for a broad range of solutions and applications. However, Deep Neural Networks (DNNs) are progressing toward deeper and more complex models that prevent them from being executed on-board. In this paper, we propose a DNN distribution methodology within UAVs to enable data classification in resource-constrained devices and avoid extra delays introduced by the server-based solutions due to data communication over air-to-ground links. The proposed method is formulated as an optimization problem that aims to minimize the latency between data collection and decision-making while considering the mobility model and the resource constraints of the UAVs as part of the air-to-air communication. We also introduce the mobility prediction to adapt our system to the dynamics of UAVs and the network variation. The simulations conducted to evaluate the performance and benchmark the proposed methods, namely Optimal UAV-based Layer Distribution (OULD) and OULD with Mobility Prediction (OULD-MP), were run on an HPC cluster. The obtained results show that our optimization solution outperforms the existing and heuristic-based approaches.

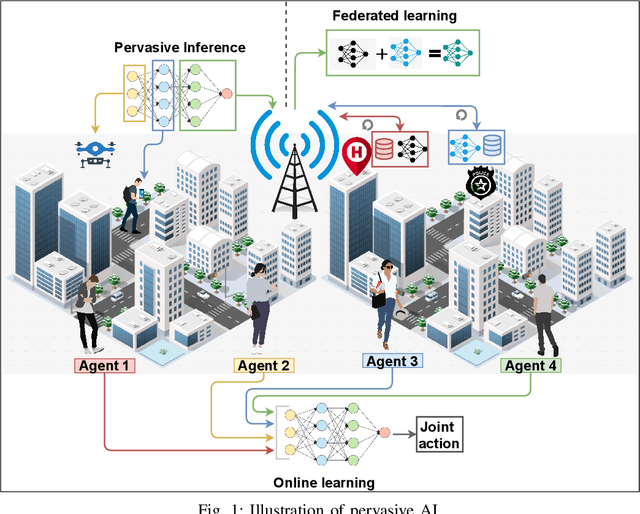

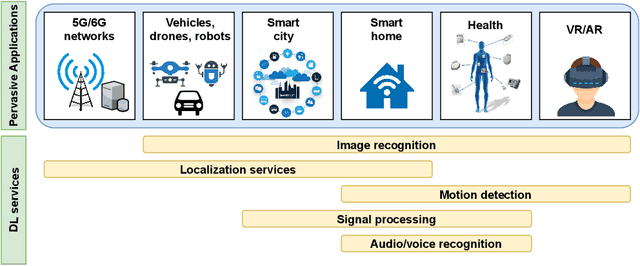

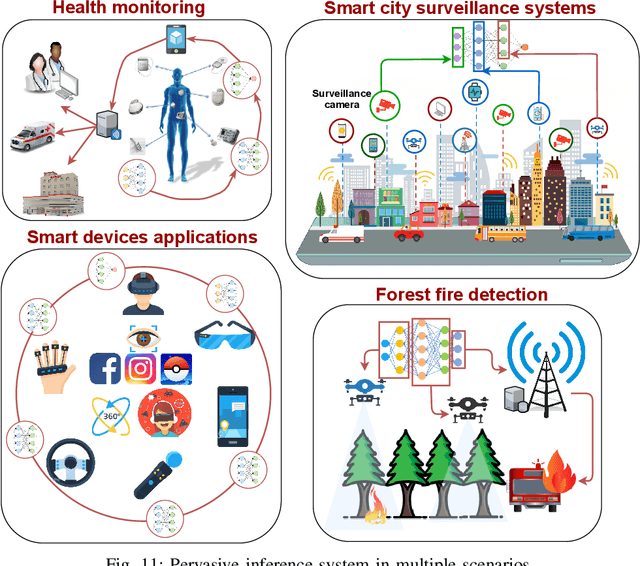

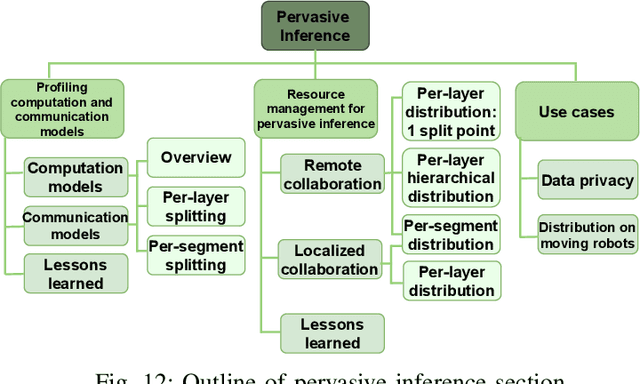

Pervasive AI for IoT Applications: Resource-efficient Distributed Artificial Intelligence

May 04, 2021

Abstract: Artificial intelligence (AI) has witnessed a substantial breakthrough in a variety of Internet of Things (IoT) applications and services, spanning from recommendation systems to robotics control and military surveillance. This is driven by the easier access to sensory data and the enormous scale of pervasive/ubiquitous devices that generate zettabytes (ZB) of real-time data streams. Designing accurate models using such data streams, to predict future insights and revolutionize the decision-taking process, inaugurates pervasive systems as a worthy paradigm for a better quality-of-life. The confluence of pervasive computing and artificial intelligence, Pervasive AI, expanded the role of ubiquitous IoT systems from mainly data collection to executing distributed computations with a promising alternative to centralized learning, presenting various challenges. In this context, a wise cooperation and resource scheduling should be envisaged among IoT devices (e.g., smartphones, smart vehicles) and infrastructure (e.g., edge nodes and base stations) to avoid communication and computation overheads and ensure maximum performance. In this paper, we conduct a comprehensive survey of the recent techniques developed to overcome these resource challenges in pervasive AI systems. Specifically, we first present an overview of pervasive computing, its architecture, and its intersection with artificial intelligence. We then review the background, applications and performance metrics of AI, particularly Deep Learning (DL) and online learning, running in a ubiquitous system. Next, we provide a deep literature review of communication-efficient techniques, from both algorithmic and system perspectives, of distributed inference, training and online learning tasks across the combination of IoT devices, edge devices and cloud servers. Finally, we discuss our future vision and research challenges.
