Alberto Sangiovanni-Vincentelli

Domain Randomization and Pyramid Consistency: Simulation-to-Real Generalization without Accessing Target Domain Data

Sep 02, 2019

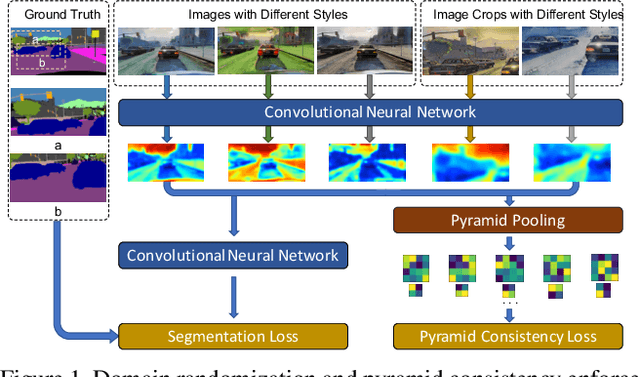

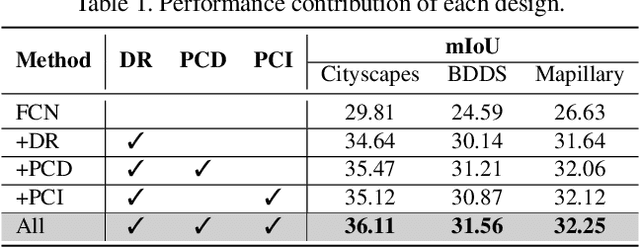

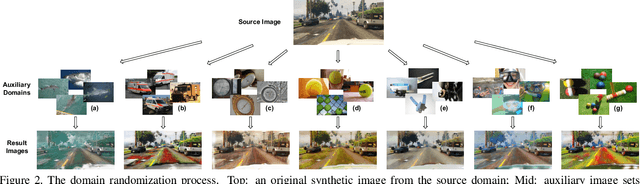

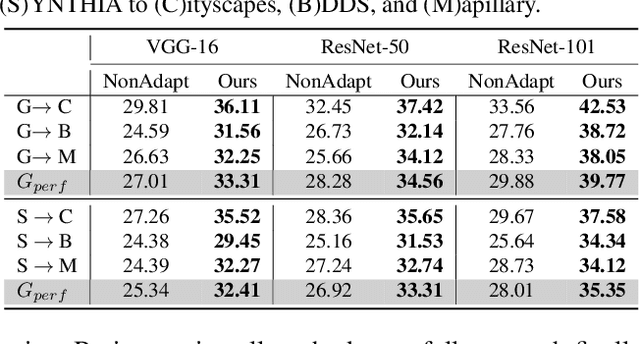

Abstract: We propose to harness the potential of simulation for the semantic segmentation of real-world self-driving scenes in a domain generalization fashion. The segmentation network is trained without any data from the target domains and tested on the unseen target domains. To this end, we propose a new approach of domain randomization and pyramid consistency to learn a model with high generalizability. First, we propose to randomize the synthetic images with the styles of real images in terms of visual appearances using auxiliary datasets, in order to effectively learn domain-invariant representations. Second, we further enforce pyramid consistency across different "stylized" images and within an image, in order to learn domain-invariant and scale-invariant features, respectively. Extensive experiments are conducted on the generalization from GTA and SYNTHIA to Cityscapes, BDDS, and Mapillary, and our method achieves superior results over the state-of-the-art techniques. Remarkably, our generalization results are on par with or even better than those obtained by state-of-the-art simulation-to-real domain adaptation methods, which access the target domain data at training time.

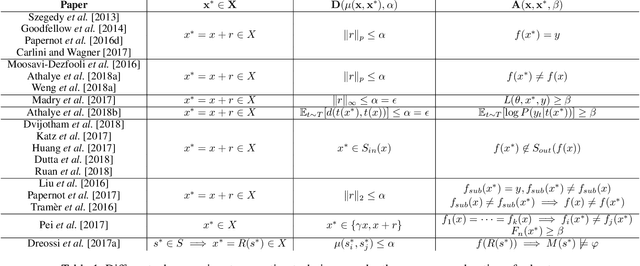

A Formalization of Robustness for Deep Neural Networks

Mar 24, 2019

Abstract: Deep neural networks have been shown to lack robustness to small input perturbations. The process of generating the perturbations that expose the lack of robustness of neural networks is known as adversarial input generation. This process depends on the goals and capabilities of the adversary. In this paper, we propose a unifying formalization of the adversarial input generation process from a formal methods perspective. We provide a definition of robustness that is general enough to capture different formulations. The expressiveness of our formalization is shown by modeling and comparing a variety of adversarial attack techniques.

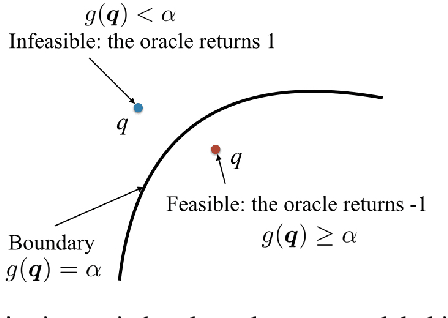

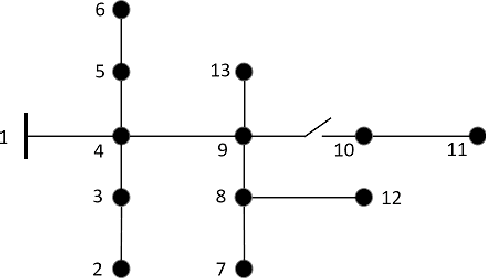

A tractable ellipsoidal approximation for voltage regulation problems

Mar 09, 2019

Abstract: We present a machine learning approach to the solution of chance-constrained optimization problems in the context of voltage regulation in power system operation. The novelty of our approach resides in approximating the feasible region of uncertainty with an ellipsoid. We formulate this problem using a learning model similar to Support Vector Machines (SVM) and propose a sampling algorithm that efficiently trains the model. We demonstrate our approach on a voltage regulation problem using standard IEEE distribution test feeders.
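As a rough illustration of what an ellipsoidal region looks like in code, the sketch below tests whether a point lies inside an ellipsoid written in the standard form (x - c)^T P (x - c) <= 1. The function name `in_ellipsoid`, the center, and the shape matrix are hypothetical values chosen for illustration. They are not taken from the paper, and the paper's learning and sampling procedure is not reproduced here.

```python
def in_ellipsoid(x, center, shape):
    """Return True if (x - c)^T P (x - c) <= 1.

    `shape` is assumed to be a symmetric positive-definite matrix P,
    given as a list of rows. All names here are illustrative.
    """
    d = [xi - ci for xi, ci in zip(x, center)]
    n = len(d)
    quad = sum(d[i] * shape[i][j] * d[j] for i in range(n) for j in range(n))
    return quad <= 1.0


# Hypothetical 2-D example: semi-axes 0.5 (first coordinate) and 1.0 (second).
center = (1.0, 1.0)
shape = [[4.0, 0.0],
         [0.0, 1.0]]

inside = in_ellipsoid((1.25, 1.5), center, shape)
outside = in_ellipsoid((2.0, 1.0), center, shape)
```

A single quadratic inequality like this is cheap to evaluate, which is one plausible reason an ellipsoid is a convenient stand-in for a more complicated feasible region.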

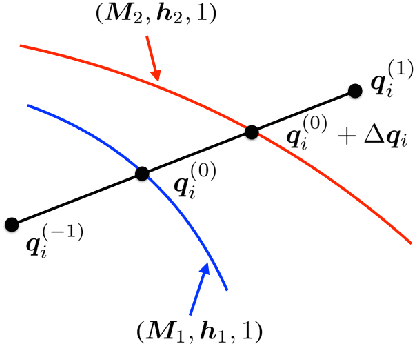

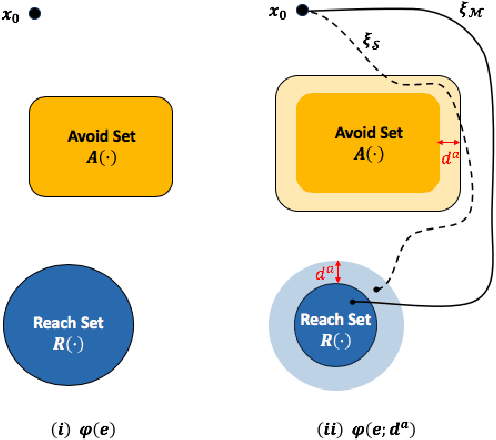

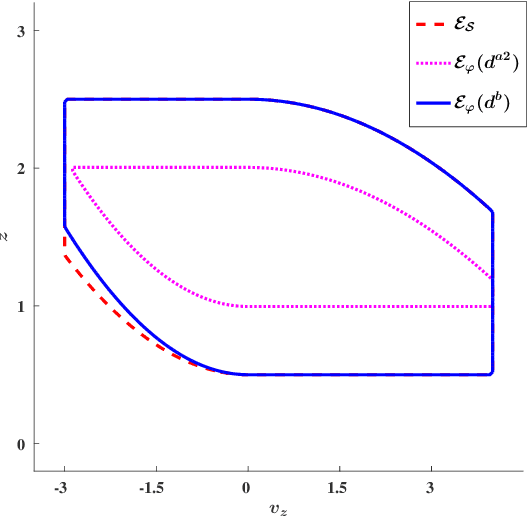

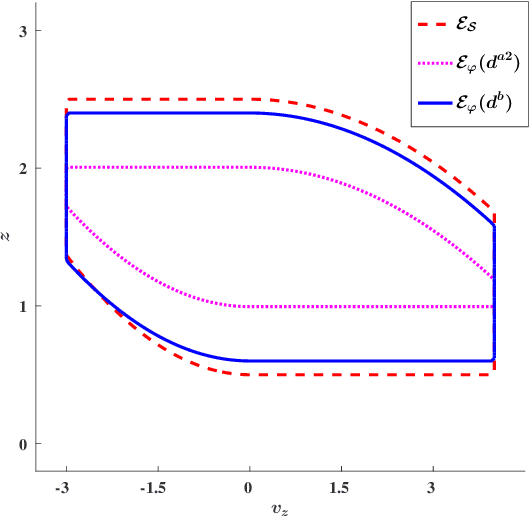

A New Simulation Metric to Determine Safe Environments and Controllers for Systems with Unknown Dynamics

Feb 27, 2019

Abstract: We consider the problem of extracting safe environments and controllers for reach-avoid objectives for systems with known state and control spaces, but unknown dynamics. In a given environment, a common approach is to synthesize a controller from an abstraction or a model of the system (potentially learned from data). However, in many situations, the relationship between the dynamics of the model and the *actual system* is not known, and hence it is difficult to provide safety guarantees for the system. In such cases, the Standard Simulation Metric (SSM), defined as the worst-case norm distance between the model and the system output trajectories, can be used to modify a reach-avoid specification for the system into a more stringent specification for the abstraction. Nevertheless, the obtained distance, and hence the modified specification, can be quite conservative. This limits the set of environments for which a safe controller can be obtained. We propose SPEC, a specification-centric simulation metric, which overcomes these limitations by computing the distance using only the trajectories that violate the specification for the system. We show that modifying a reach-avoid specification with SPEC allows us to synthesize a safe controller for a larger set of environments compared to SSM. We also propose a probabilistic method to compute SPEC for a general class of systems. Case studies using simulators for quadrotors and autonomous cars illustrate the advantages of the proposed metric for determining safe environment sets and controllers.
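The difference between the two metrics can be sketched on toy data. The code below assumes time-aligned, finite trajectories stored as lists of output tuples and uses the Euclidean norm. The function names, the sample trajectories, and the `violates` predicate are all hypothetical. The restricted metric follows only the one-sentence description in the abstract, not the paper's formal definition of SPEC.

```python
import math


def trajectory_distance(model_traj, system_traj):
    """Largest Euclidean distance between time-aligned outputs."""
    return max(math.dist(m, s) for m, s in zip(model_traj, system_traj))


def worst_case_distance(pairs):
    """SSM-style value: worst case over all (model, system) trajectory pairs."""
    return max(trajectory_distance(m, s) for m, s in pairs)


def violating_only_distance(pairs, system_violates):
    """Worst case restricted to pairs whose system trajectory violates the spec.

    An illustrative reading of the abstract's description of SPEC.
    """
    distances = [trajectory_distance(m, s) for m, s in pairs if system_violates(s)]
    return max(distances, default=0.0)


# Hypothetical 1-D outputs; the spec is violated when the output exceeds 1.0.
def violates(traj):
    return any(point[0] > 1.0 for point in traj)


pairs = [
    ([(0.0,), (0.5,)], [(0.0,), (1.0,)]),   # system stays safe, gap 0.5
    ([(0.0,), (1.0,)], [(0.0,), (1.25,)]),  # system violates, gap 0.25
]

ssm_value = worst_case_distance(pairs)
restricted_value = violating_only_distance(pairs, violates)
```

In this toy case the largest model-system gap occurs on a trajectory that never violates the specification, so the restricted value is smaller than the worst case over all pairs. That is the sense in which a specification-centric distance can be less conservative.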

A One-Class Support Vector Machine Calibration Method for Time Series Change Point Detection

Feb 18, 2019

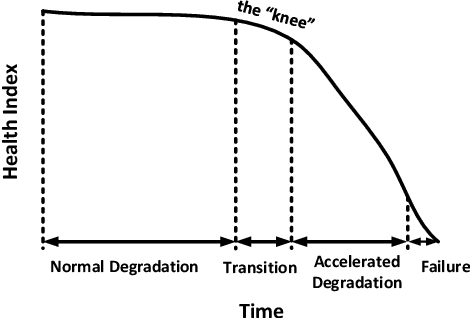

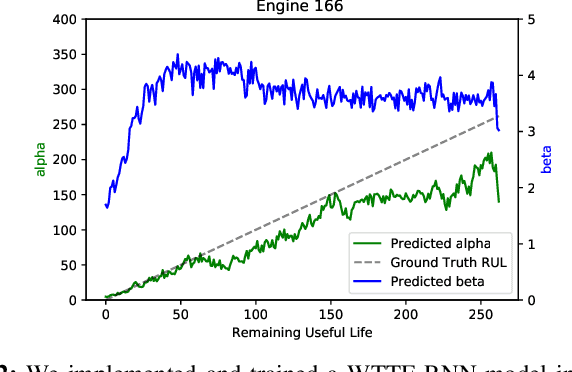

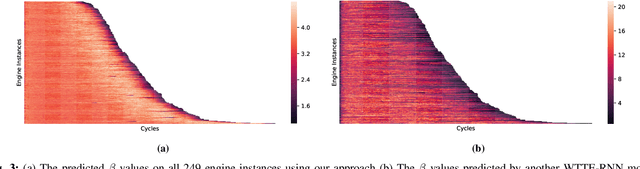

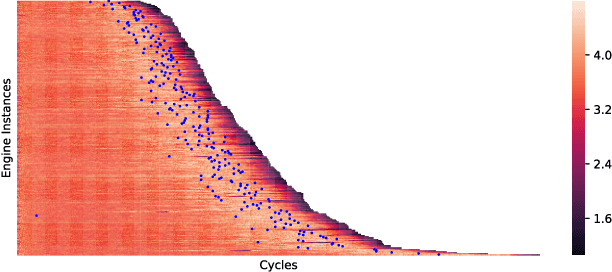

Abstract: It is important to identify the change point of a system's health status, which usually signifies an incipient fault under development. The One-Class Support Vector Machine (OC-SVM) is a popular machine learning model for anomaly detection and hence could be used for identifying change points; however, it is sometimes difficult to obtain a good OC-SVM model that can be used on sensor measurement time series to identify the change points in system health status. In this paper, we propose a novel approach for calibrating OC-SVM models. The approach uses a heuristic search method to find a good set of input data and hyperparameters that yield a well-performing model. Our results on the C-MAPSS dataset demonstrate that OC-SVM can also achieve satisfactory accuracy in detecting change points in time series with less training data, compared to state-of-the-art deep learning approaches. In our case study, the OC-SVM calibrated by the proposed method is shown to be especially useful in scenarios with a limited amount of training data.

Time Series Learning using Monotonic Logical Properties

Aug 01, 2018

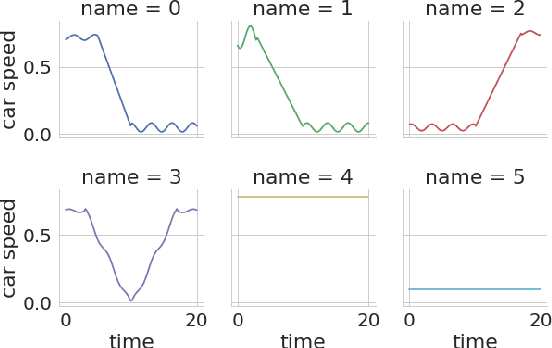

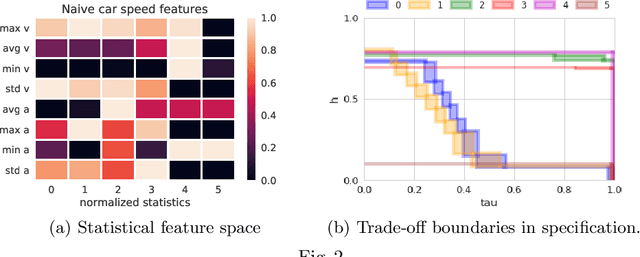

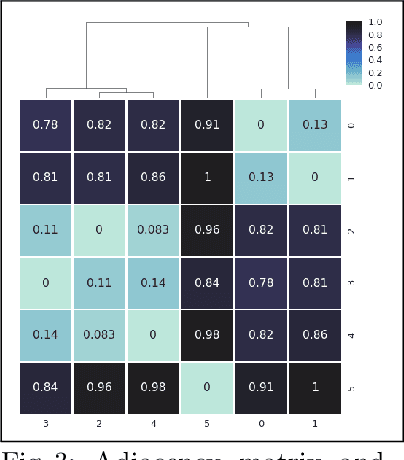

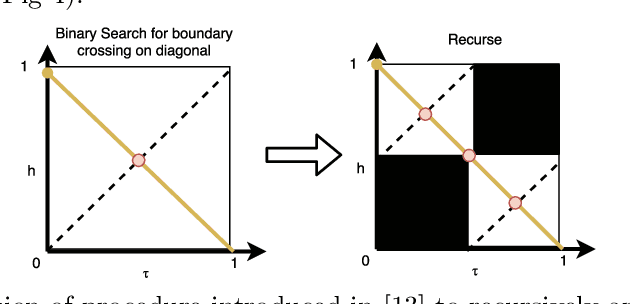

Abstract: Cyber-physical systems of today are generating large volumes of time-series data. As manual inspection of such data is not tractable, the need for learning methods to help discover logical structure in the data has increased. We propose a logic-based framework that allows domain-specific knowledge to be embedded into formulas in a parametric logical specification over time-series data. The key idea is to then map a time series to a surface in the parameter space of the formula. Given this mapping, we identify the Hausdorff distance between boundaries as a natural distance metric between two time-series data under the lens of the parametric specification. This enables embedding non-trivial domain-specific knowledge into the distance metric and then using off-the-shelf machine learning tools to label the data. After labeling the data, we demonstrate how to extract a logical specification for each label. Finally, we showcase our technique on real-world traffic data to learn classifiers/monitors for slow-downs and traffic jams.
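The Hausdorff distance mentioned above can be sketched for finite point sets. The code assumes each boundary has already been sampled into a list of points in parameter space. The sample points and function names are hypothetical, and how the paper actually computes or approximates the boundaries is not shown here.

```python
import math


def directed_hausdorff(a, b):
    """For each point in a, find its nearest point in b; return the largest such gap."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two finite point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Hypothetical sampled boundaries for two time series in a 2-D parameter space.
boundary_a = [(0.0, 2.0), (1.0, 1.0), (2.0, 0.0)]
boundary_b = [(0.0, 3.0), (1.0, 1.0), (3.0, 0.0)]

distance = hausdorff(boundary_a, boundary_b)
```

A matrix of such pairwise distances is the kind of input that off-the-shelf clustering tools can accept in place of raw time series.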

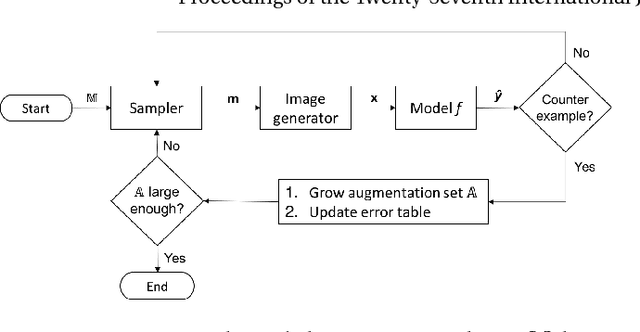

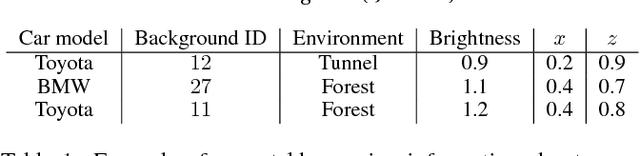

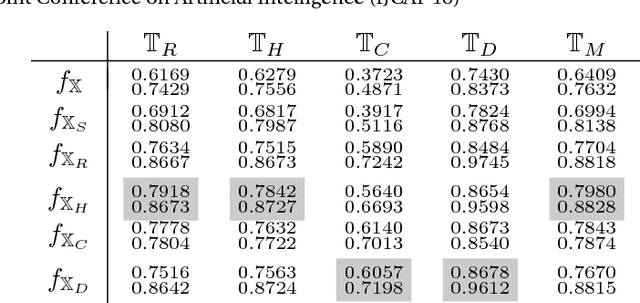

Counterexample-Guided Data Augmentation

May 17, 2018

Abstract: We present a novel framework for augmenting data sets for machine learning based on counterexamples. Counterexamples are misclassified examples that have important properties for retraining and improving the model. Key components of our framework include a counterexample generator, which produces data items that are misclassified by the model, and error tables, a novel data structure that stores information pertaining to misclassifications. Error tables can be used to explain the model's vulnerabilities and to efficiently generate counterexamples for augmentation. We show the efficacy of the proposed framework by comparing it to classical augmentation techniques on a case study of object detection in autonomous driving based on deep neural networks.

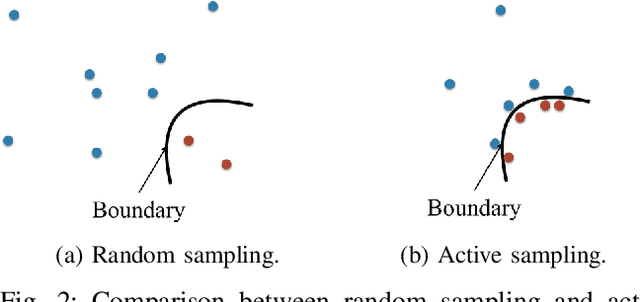

Context-Specific Validation of Data-Driven Models

Mar 26, 2018

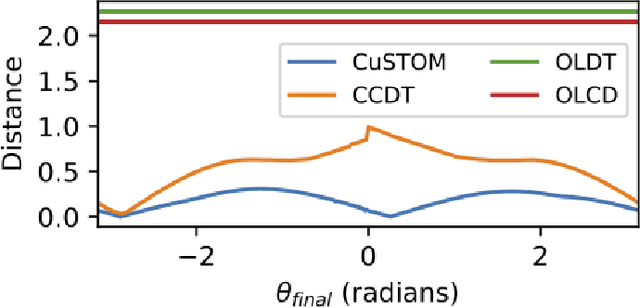

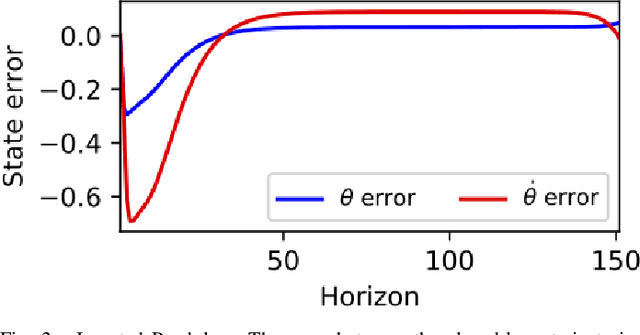

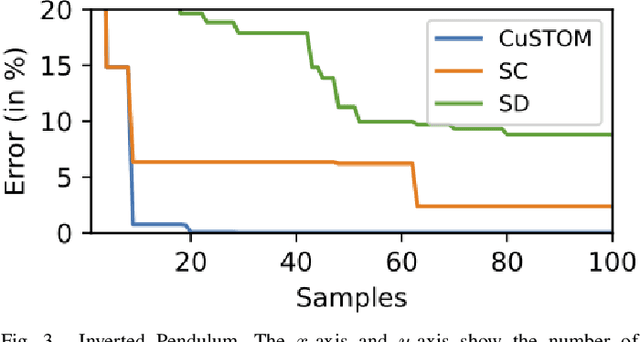

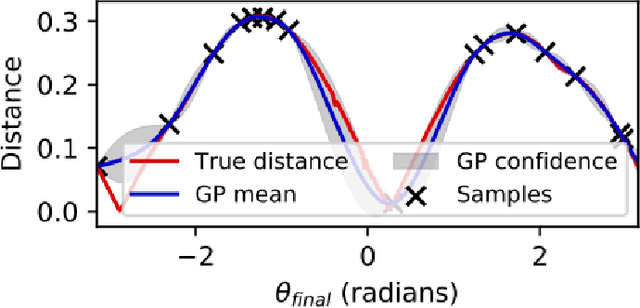

Abstract: With an increasing use of data-driven models to control robotic systems, it has become important to develop a methodology for validating such models before they can be deployed to design a controller for the actual system. Specifically, it must be ensured that the controller designed for a learned model would perform as expected on the actual physical system. We propose a context-specific validation framework to quantify the quality of a learned model based on a distance measure between the closed-loop actual system and the learned model. We then propose an active sampling scheme to compute a probabilistic upper bound on this distance in a sample-efficient manner. The proposed framework validates the learned model against only those behaviors of the system that are relevant for the purpose for which we intend to use this model, and does not require any a priori knowledge of the system dynamics. Several simulations illustrate the practicality of the proposed framework for validating the models of real-world systems, and consequently, for controller synthesis.

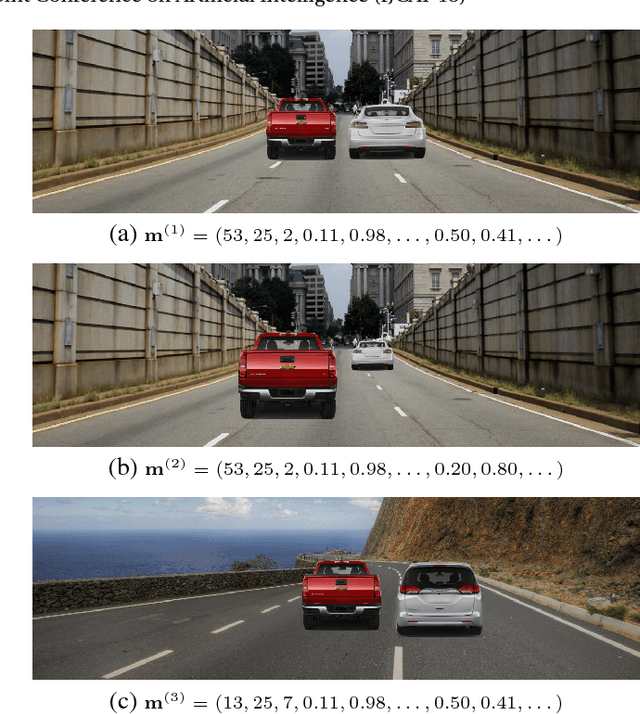

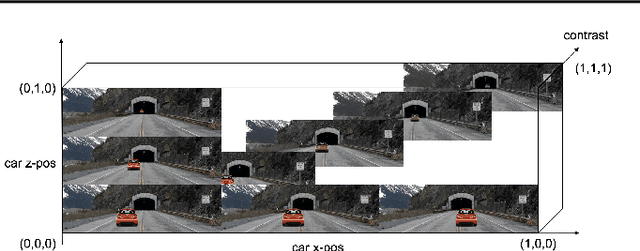

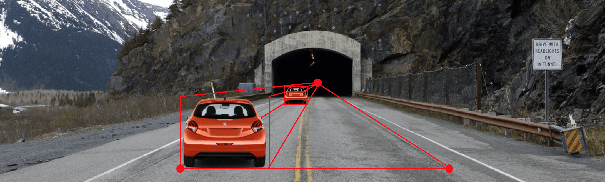

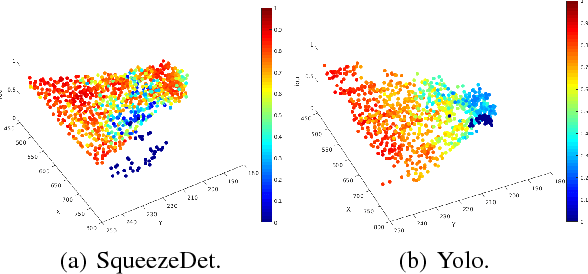

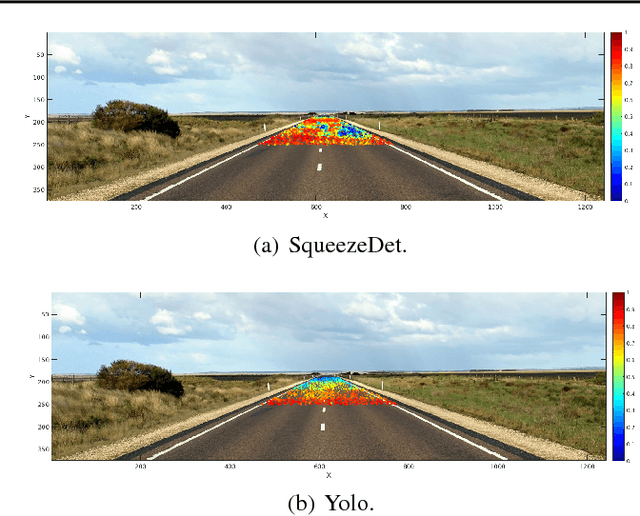

Systematic Testing of Convolutional Neural Networks for Autonomous Driving

Aug 11, 2017

Abstract: We present a framework to systematically analyze convolutional neural networks (CNNs) used in classification of cars in autonomous vehicles. Our analysis procedure comprises an image generator that produces synthetic pictures by sampling in a lower-dimensional image modification subspace and a suite of visualization tools. The image generator produces images which can be used to test the CNN and hence expose its vulnerabilities. The presented framework can be used to extract insights into the CNN classifier, compare across classification models, or generate training and validation datasets.
