cancer detection

Cancer detection using Artificial Intelligence (AI) involves leveraging advanced machine learning algorithms and techniques to identify and diagnose cancer from various medical data sources. The goal is to enhance early detection, improve diagnostic accuracy, and potentially reduce the need for invasive procedures.

Papers and Code

Deep Learning Enabled Segmentation, Classification and Risk Assessment of Cervical Cancer

May 21, 2025

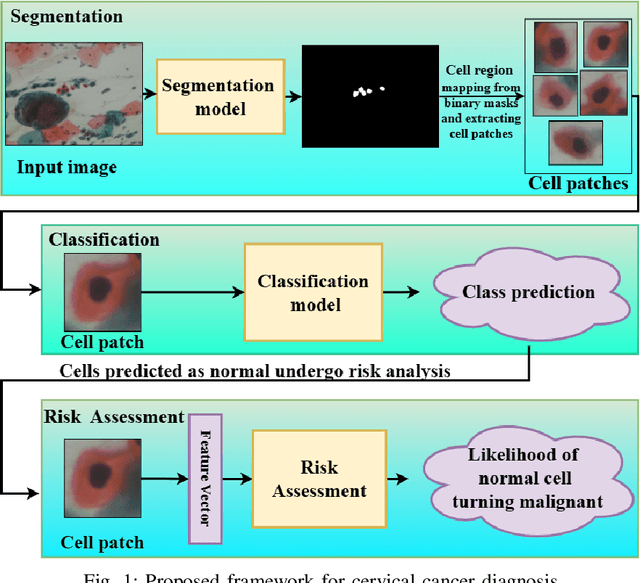

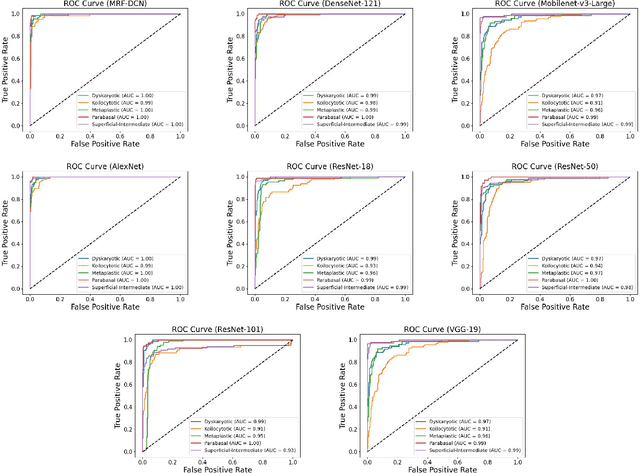

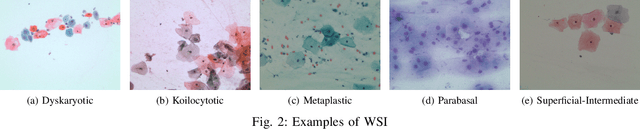

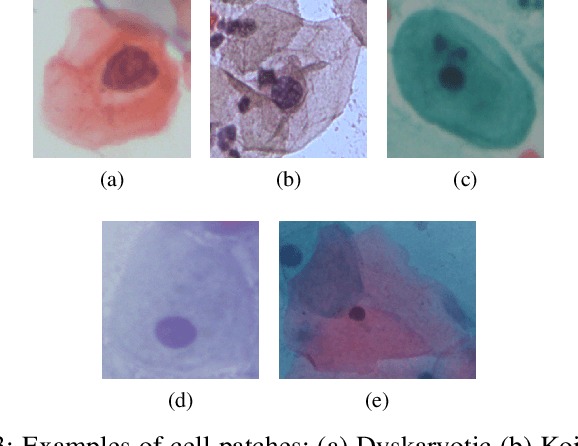

Cervical cancer, the fourth leading cause of cancer in women globally, requires early detection through Pap smear tests to identify precancerous changes and prevent disease progression. In this study, we performed a focused analysis by segmenting the cellular boundaries and drawing bounding boxes to isolate the cancer cells. A novel Deep Learning (DL) architecture, the "Multi-Resolution Fusion Deep Convolutional Network", was proposed to effectively handle images with varying resolutions and aspect ratios, with its efficacy showcased using the SIPaKMeD dataset. The performance of this DL model was observed to be similar to the state-of-the-art models, with accuracy variations of a mere 2% to 3%, achieved using just 1.7 million learnable parameters, which is approximately 85 times fewer than the VGG-19 model. Furthermore, we introduced a multi-task learning technique that simultaneously performs segmentation and classification tasks and achieves an Intersection over Union score of 0.83 and a classification accuracy of 90%. The final stage of the workflow employs a probabilistic approach for risk assessment, extracting feature vectors to predict the likelihood of normal cells progressing to malignant states, which can be utilized for the prognosis of cervical cancer.
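
As a small illustration of the Intersection over Union score mentioned in this abstract, the sketch below computes IoU for two binary segmentation masks stored as nested lists. The function name `mask_iou` and the toy masks are assumptions made for illustration; they are not taken from the paper.

```python
def mask_iou(pred, target):
    """Intersection over Union for two equally sized binary masks (nested lists of 0/1)."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # By convention, two empty masks are treated as a perfect match.
    return 1.0 if union == 0 else intersection / union


# Hypothetical 3x3 masks: 3 pixels overlap, 5 pixels are covered by either mask.
pred = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]
target = [[0, 1, 1],
          [0, 1, 0],
          [0, 1, 0]]

print(mask_iou(pred, target))  # 3 / 5
```

A score of 0.83 therefore means that the predicted and reference regions share 83% of the area they jointly cover.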

A Multi-Modal AI System for Screening Mammography: Integrating 2D and 3D Imaging to Improve Breast Cancer Detection in a Prospective Clinical Study

Apr 08, 2025

Although digital breast tomosynthesis (DBT) improves diagnostic performance over full-field digital mammography (FFDM), false-positive recalls remain a concern in breast cancer screening. We developed a multi-modal artificial intelligence system integrating FFDM, synthetic mammography, and DBT to provide breast-level predictions and bounding-box localizations of suspicious findings. Our AI system, trained on approximately 500,000 mammography exams, achieved 0.945 AUROC on an internal test set. It demonstrated capacity to reduce recalls by 31.7% and radiologist workload by 43.8% while maintaining 100% sensitivity, underscoring its potential to improve clinical workflows. External validation confirmed strong generalizability, reducing the gap to a perfect AUROC by 35.31%-69.14% relative to strong baselines. In prospective deployment across 18 sites, the system reduced recall rates for low-risk cases. An improved version, trained on over 750,000 exams with additional labels, further reduced the gap by 18.86%-56.62% across large external datasets. Overall, these results underscore the importance of utilizing all available imaging modalities, demonstrate the potential for clinical impact, and indicate feasibility of further reduction of the test error with increased training set when using large-capacity neural networks.
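
The phrase "reducing the gap to a perfect AUROC" can be read as a relative reduction in the quantity 1 - AUROC. The sketch below works through that arithmetic under this reading; the function name `gap_reduction` and the two AUROC values are hypothetical and do not come from the study.

```python
def gap_reduction(baseline_auroc, model_auroc):
    """Relative reduction of the gap (1 - AUROC) when moving from a baseline to a new model."""
    baseline_gap = 1.0 - baseline_auroc
    model_gap = 1.0 - model_auroc
    if baseline_gap <= 0:
        raise ValueError("baseline AUROC must be below 1.0")
    return (baseline_gap - model_gap) / baseline_gap


# Hypothetical values: a baseline at 0.90 and a new model at 0.95.
# The gap shrinks from 0.10 to 0.05, i.e. it is roughly halved.
print(f"{gap_reduction(0.90, 0.95):.1%}")
```

Under this reading, the same absolute AUROC gain counts for more when the baseline is already close to 1.0.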

An Integrated AI-Enabled System Using One Class Twin Cross Learning (OCT-X) for Early Gastric Cancer Detection

Mar 31, 2025

Early detection of gastric cancer, a leading cause of cancer-related mortality worldwide, remains hampered by the limitations of current diagnostic technologies, leading to high rates of misdiagnosis and missed diagnoses. To address these challenges, we propose an integrated system that synergizes advanced hardware and software technologies to balance speed and accuracy. Our study introduces the One Class Twin Cross Learning (OCT-X) algorithm. Leveraging a novel fast double-threshold grid search strategy (FDT-GS) and a patch-based deep fully convolutional network, OCT-X maximizes diagnostic accuracy through real-time data processing and seamless lesion surveillance. The hardware component includes an all-in-one point-of-care testing (POCT) device with high-resolution imaging sensors, real-time data processing, and wireless connectivity, facilitated by the NI CompactDAQ and LabVIEW software. Our integrated system achieved an unprecedented diagnostic accuracy of 99.70%, significantly outperforming existing models by up to 4.47%, and demonstrated a 10% improvement in multirate adaptability. These findings underscore the potential of OCT-X as well as the integrated system in clinical diagnostics, offering a path toward more accurate, efficient, and less invasive early gastric cancer detection. Future research will explore broader applications, further advancing oncological diagnostics. Code is available at https://github.com/liu37972/Multirate-Location-on-OCT-X-Learning.git.

CLIP-IT: CLIP-based Pairing for Histology Images Classification

Apr 22, 2025

Multimodal learning has shown significant promise for improving medical image analysis by integrating information from complementary data sources. This is widely employed for training vision-language models (VLMs) for cancer detection based on histology images and text reports. However, one of the main limitations in training these VLMs is the requirement for large paired datasets, raising concerns over privacy, and data collection, annotation, and maintenance costs. To address this challenge, we introduce the CLIP-IT method to train a vision backbone model to classify histology images by pairing them with privileged textual information from an external source. First, the modality pairing step relies on a CLIP-based model to match histology images with semantically relevant textual report data from external sources, creating an augmented multimodal dataset without the need for manually paired samples. Then, we propose a multimodal training procedure that distills the knowledge from the paired text modality to the unimodal image classifier for enhanced performance without the need for the textual data during inference. A parameter-efficient fine-tuning method is used to efficiently address the misalignment between the main (image) and paired (text) modalities. During inference, the improved unimodal histology classifier is used, with only minimal additional computational complexity. Our experiments on challenging PCAM, CRC, and BACH histology image datasets show that CLIP-IT can provide a cost-effective approach to leverage privileged textual information and outperform unimodal classifiers for histology.
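
The modality pairing step described in this abstract can be pictured as a nearest-neighbour match in a shared embedding space. The sketch below assigns each image embedding to the text embedding with the highest cosine similarity. It is a simplified stand-in, not the authors' implementation: the function names and the toy three-dimensional vectors are assumptions, and real embeddings would come from a CLIP-style image and text encoder.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def pair_images_with_texts(image_embeddings, text_embeddings):
    """For each image embedding, return the index of the most similar text embedding."""
    pairs = []
    for image_vec in image_embeddings:
        sims = [cosine_similarity(image_vec, text_vec) for text_vec in text_embeddings]
        pairs.append(max(range(len(sims)), key=sims.__getitem__))
    return pairs


# Toy embeddings standing in for encoder outputs (hypothetical values).
image_embeddings = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.8]]
text_embeddings = [[0.1, 0.0, 1.0], [1.0, 0.0, 0.1], [0.0, 1.0, 0.0]]

print(pair_images_with_texts(image_embeddings, text_embeddings))  # [1, 0]
```

The resulting index pairs would form the augmented image-text dataset that the later distillation stage trains on.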

Improving Oral Cancer Outcomes Through Machine Learning and Dimensionality Reduction

Jun 11, 2025

Oral cancer presents a formidable challenge in oncology, necessitating early diagnosis and accurate prognosis to enhance patient survival rates. Recent advancements in machine learning and data mining have revolutionized traditional diagnostic methodologies, providing sophisticated and automated tools for differentiating between benign and malignant oral lesions. This study presents a comprehensive review of cutting-edge data mining methodologies, including Neural Networks, K-Nearest Neighbors (KNN), Support Vector Machines (SVM), and ensemble learning techniques, specifically applied to the diagnosis and prognosis of oral cancer. Through a rigorous comparative analysis, our findings reveal that Neural Networks surpass other models, achieving a classification accuracy of 93.6% in predicting oral cancer. Furthermore, we underscore the potential benefits of integrating feature selection and dimensionality reduction techniques to enhance model performance. These insights underscore the significant promise of advanced data mining techniques in bolstering early detection, optimizing treatment strategies, and ultimately improving patient outcomes in the realm of oral oncology.

MSAD-Net: Multiscale and Spatial Attention-based Dense Network for Lung Cancer Classification

Apr 20, 2025

Lung cancer, a severe form of malignant tumor that originates in the tissues of the lungs, can be fatal if not detected in its early stages. It ranks among the top causes of cancer-related mortality worldwide. Detecting lung cancer manually from chest X-ray images or Computed Tomography (CT) scans poses significant challenges for radiologists. Hence, there is a need for an automatic system that diagnoses lung cancer from radiology images. With the recent emergence of deep learning, particularly through Convolutional Neural Networks (CNNs), the automated detection of lung cancer has become a much simpler task. Nevertheless, numerous researchers have noted that the performance of conventional CNNs may be hindered by class imbalance, which is prevalent in medical images. In this research work, we propose a novel CNN architecture, "Multi-Scale Dense Network (MSD-Net)", trained from scratch. The novelties of the proposed model are: (I) We introduce novel dense modules in the 4th and 5th blocks of the CNN model. Each dense module uses three depthwise separable convolutional (DWSC) layers and one 1x1 convolutional layer in order to reduce the complexity of the model considerably. (II) Additionally, we incorporate one skip connection from the 3rd block to the 5th block and one parallel branch connection from the 4th block to the Global Average Pooling (GAP) layer. We use a dilated convolutional layer (with dilation rate 2) in the last parallel branch in order to extract multi-scale features. Extensive experiments reveal that our proposed model outperforms the recent CNN model ConvNext-Tiny, the Vision Transformer (ViT), the Pooling-based ViT (PiT), and other existing models by significant margins.
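
To see why depthwise separable convolutions reduce model complexity, the sketch below compares weight counts for a standard convolution and a depthwise separable one, ignoring biases. The kernel size and channel counts are hypothetical; the abstract does not state the layer widths used in the model.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a standard 2D convolution (biases ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(kernel_size, in_channels, out_channels):
    """Weights in a depthwise convolution followed by a 1x1 pointwise convolution (biases ignored)."""
    depthwise = kernel_size * kernel_size * in_channels  # one k x k filter per input channel
    pointwise = in_channels * out_channels               # 1x1 convolution mixing channels
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 128 input channels, 128 output channels.
std = standard_conv_params(3, 128, 128)
dws = depthwise_separable_params(3, 128, 128)

print(std, dws, round(std / dws, 2))  # 147456 17536 8.41
```

For this assumed layer size the separable form needs roughly one eighth of the weights, and the saving grows with the number of output channels.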

Cohort-attention Evaluation Metric against Tied Data: Studying Performance of Classification Models in Cancer Detection

Mar 17, 2025

Artificial intelligence (AI) has significantly improved medical screening accuracy, particularly in cancer detection and risk assessment. However, traditional classification metrics often fail to account for imbalanced data, varying performance across cohorts, and patient-level inconsistencies, leading to biased evaluations. We propose the Cohort-Attention Evaluation Metrics (CAT) framework to address these challenges. CAT introduces patient-level assessment, entropy-based distribution weighting, and cohort-weighted sensitivity and specificity. Key metrics like CATSensitivity (CATSen), CATSpecificity (CATSpe), and CATMean ensure balanced and fair evaluation across diverse populations. This approach enhances predictive reliability, fairness, and interpretability, providing a robust evaluation method for AI-driven medical screening models.
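
The abstract does not give the CAT formulas, so the sketch below is not the CAT metric. It only illustrates the motivation: sensitivity pooled over all patients can hide poor performance in a small cohort, whereas an equally weighted mean of per-cohort sensitivities exposes it. The function names, the equal weighting, and the records are all assumptions for illustration.

```python
from collections import defaultdict


def pooled_sensitivity(records):
    """Sensitivity over all positive cases, ignoring cohort membership."""
    positives = [pred for _cohort, label, pred in records if label == 1]
    return sum(positives) / len(positives)


def cohort_mean_sensitivity(records):
    """Unweighted mean of per-cohort sensitivities (an illustrative stand-in, not CATSen)."""
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for cohort, label, pred in records:
        if label == 1:
            positives[cohort] += 1
            true_positives[cohort] += pred  # pred is 0 or 1
    per_cohort = [true_positives[c] / positives[c] for c in positives]
    return sum(per_cohort) / len(per_cohort)


# Hypothetical records: (cohort, true label, predicted label).
# Cohort A: 9 of 10 cancers detected. Cohort B: 1 of 2 cancers detected.
records = ([("A", 1, 1)] * 9 + [("A", 1, 0)]
           + [("B", 1, 1)] + [("B", 1, 0)])

print(round(pooled_sensitivity(records), 3))       # 0.833
print(round(cohort_mean_sensitivity(records), 3))  # 0.7
```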

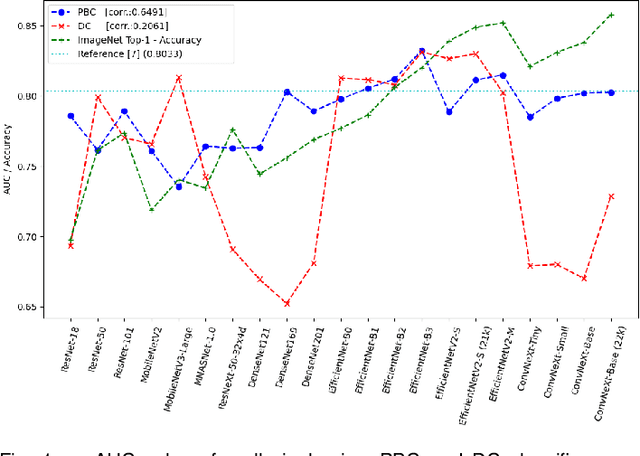

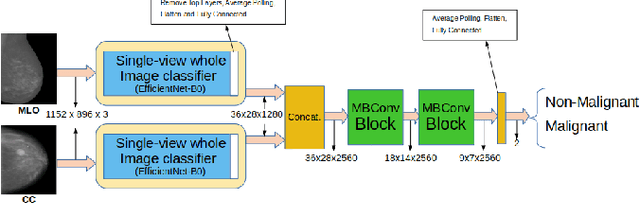

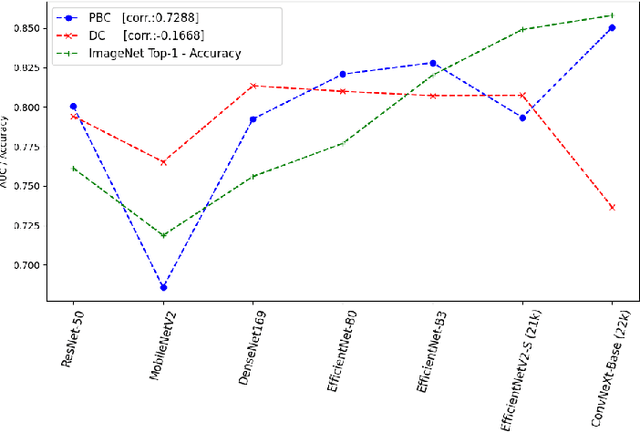

Optimizing Breast Cancer Detection in Mammograms: A Comprehensive Study of Transfer Learning, Resolution Reduction, and Multi-View Classification

Mar 25, 2025

This study explores open questions in the application of machine learning for breast cancer detection in mammograms. Current approaches often employ a two-stage transfer learning process: first, adapting a backbone model trained on natural images to develop a patch classifier, which is then used to create a single-view whole-image classifier. Additionally, many studies leverage both mammographic views to enhance model performance. In this work, we systematically investigate five key questions: (1) Is the intermediate patch classifier essential for optimal performance? (2) Do backbone models that excel in natural image classification consistently outperform others on mammograms? (3) When reducing mammogram resolution for GPU processing, does the learn-to-resize technique outperform conventional methods? (4) Does incorporating both mammographic views in a two-view classifier significantly improve detection accuracy? (5) How do these findings vary when analyzing low-quality versus high-quality mammograms? By addressing these questions, we developed models that outperform previous results for both single-view and two-view classifiers. Our findings provide insights into model architecture and transfer learning strategies contributing to more accurate and efficient mammogram analysis.

Anomaly Detection and Improvement of Clusters using Enhanced K-Means Algorithm

May 30, 2025

This paper introduces a unified approach to cluster refinement and anomaly detection in datasets. We propose a novel algorithm that iteratively reduces the intra-cluster variance of N clusters until a global minimum is reached, yielding tighter clusters than the standard k-means algorithm. We evaluate the method using intrinsic measures for unsupervised learning, including the silhouette coefficient, Calinski-Harabasz index, and Davies-Bouldin index, and extend it to anomaly detection by identifying points whose assignment causes a significant variance increase. External validation on synthetic data and the UCI Breast Cancer and UCI Wine Quality datasets employs the Jaccard similarity score, V-measure, and F1 score. Results show variance reductions of 18.7% and 88.1% on the synthetic and Wine Quality datasets, respectively, along with accuracy and F1 score improvements of 22.5% and 20.8% on the Wine Quality dataset.
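
The anomaly criterion described in this abstract, flagging points whose assignment causes a significant variance increase, can be sketched as follows. This is a simplified reading rather than the paper's algorithm: the function names, the use of mean squared distance to the centroid as the variance, and the threshold of 1.0 are assumptions.

```python
def cluster_variance(points):
    """Mean squared Euclidean distance of the points to their centroid."""
    n = len(points)
    dim = len(points[0])
    centroid = [sum(p[d] for p in points) / n for d in range(dim)]
    return sum(
        sum((p[d] - centroid[d]) ** 2 for d in range(dim)) for p in points
    ) / n


def variance_increase(cluster, candidate):
    """Change in intra-cluster variance if the candidate point joins the cluster."""
    return cluster_variance(cluster + [candidate]) - cluster_variance(cluster)


def is_anomaly(cluster, candidate, threshold=1.0):
    """Flag the candidate when adding it raises the variance by more than the threshold."""
    return variance_increase(cluster, candidate) > threshold


# Hypothetical cluster: the corners of a unit square.
cluster = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]

print(is_anomaly(cluster, (0.5, 0.5)))  # False: the point sits at the centroid
print(is_anomaly(cluster, (5.0, 5.0)))  # True: the point inflates the variance
```

In practice the threshold would need tuning per dataset, since the scale of the variance depends on the features.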

The Application of Deep Learning for Lymph Node Segmentation: A Systematic Review

May 09, 2025

Automatic lymph node segmentation is the cornerstone for advances in computer vision tasks for early detection and staging of cancer. Traditional segmentation methods are constrained by manual delineation and variability in operator proficiency, limiting their ability to achieve high accuracy. The introduction of deep learning technologies offers new possibilities for improving the accuracy of lymph node image analysis. This study evaluates the application of deep learning in lymph node segmentation and discusses the methodologies of various deep learning architectures such as convolutional neural networks, encoder-decoder networks, and transformers in analyzing medical imaging data across different modalities. Despite these advancements, the field still confronts challenges such as the shape diversity of lymph nodes, the scarcity of accurately labeled datasets, and the inadequate development of methods that are robust and generalizable across different imaging modalities. To the best of our knowledge, this is the first study that provides a comprehensive overview of the application of deep learning techniques to lymph node segmentation. Furthermore, this study also explores potential future research directions, including multimodal fusion techniques, transfer learning, and the use of large-scale pre-trained models to overcome current limitations while enhancing cancer diagnosis and treatment planning strategies.
