Filter Sketch for Network Pruning


Jan 23, 2020

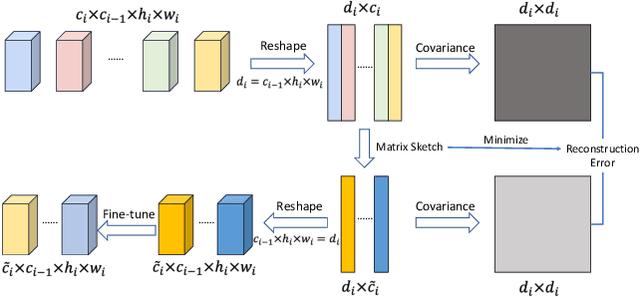

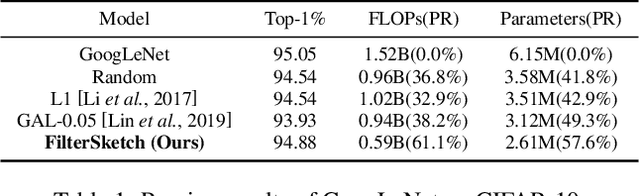

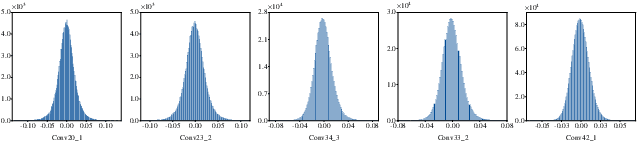

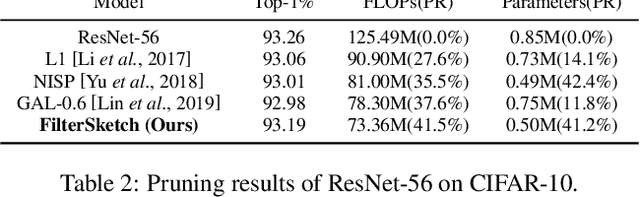

In this paper, we propose a novel network pruning approach that preserves the information of pre-trained network weights (filters). Our approach, referred to as FilterSketch, encodes the second-order information of the pre-trained weights, which allows the model performance to be recovered by fine-tuning the pruned network in an end-to-end manner. Network pruning with information preservation can be approximated as a matrix sketch problem, which is solved efficiently by the off-the-shelf Frequent Direction method. FilterSketch therefore requires neither training from scratch nor data-driven iterative optimization, leading to an order-of-magnitude reduction in the time spent on the pruning optimization. Experiments on CIFAR-10 show that FilterSketch reduces FLOPs by 63.3% and prunes 59.9% of the network parameters with negligible accuracy cost for ResNet-110. On ILSVRC-2012, it reduces FLOPs by 45.5% and removes 43.0% of the parameters with only a small top-5 accuracy drop of 0.69% for ResNet-50. The source code of the proposed FilterSketch is available at https://github.com/lmbxmu/FilterSketch.
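To make the matrix sketch idea concrete, here is a minimal NumPy sketch of the basic Frequent Directions procedure. It is an illustration under stated assumptions, not the code from the FilterSketch repository: the function name `frequent_directions`, the layout (one flattened filter per row of `A`), and the toy layer shape are all hypothetical choices. The routine compresses an `n x d` matrix `A` into an `ell x d` matrix `B` whose second-order statistics `B.T @ B` approximate `A.T @ A`.

```python
import numpy as np


def frequent_directions(A, ell):
    """Sketch the n x d matrix A into an ell x d matrix B (basic variant).

    Assumes ell <= d. After every update the last row of B is zero,
    so it is free to receive the next incoming row.
    """
    n, d = A.shape
    if not 0 < ell <= d:
        raise ValueError("this minimal version requires 0 < ell <= d")

    B = np.zeros((ell, d))
    for row in A:
        # Place the incoming row in the (always empty) last slot.
        B[-1] = row
        # Rotate B onto its singular directions.
        _, s, Vt = np.linalg.svd(B, full_matrices=False)
        # Shrink every squared singular value by the smallest one,
        # which zeroes out the last direction.
        delta = s[-1] ** 2
        s_shrunk = np.sqrt(np.maximum(s ** 2 - delta, 0.0))
        B = s_shrunk[:, None] * Vt
    return B


# Hypothetical layer: 64 filters, each flattened from 3 x 3 x 3 weights.
rng = np.random.default_rng(0)
A = rng.standard_normal((64, 27))
B = frequent_directions(A, ell=16)

# Spectral-norm gap between the second-order statistics.
err = np.linalg.norm(A.T @ A - B.T @ B, 2)
bound = np.linalg.norm(A, "fro") ** 2 / 16
print(f"sketch error {err:.3f} vs. bound {bound:.3f}")
```

For this basic variant, the spectral-norm error of `B.T @ B` is known to be at most the squared Frobenius norm of `A` divided by `ell`, which is what the final comparison prints. How the sketched matrix is mapped back to a pruned layer and then fine-tuned is described in the paper and is not reproduced here.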
