Yucheng Lin

VAE-REPA: Variational Autoencoder Representation Alignment for Efficient Diffusion Training

Jan 25, 2026

Abstract:Denoising-based diffusion transformers, despite their strong generation performance, suffer from inefficient training convergence. Existing methods addressing this issue, such as REPA (relying on external representation encoders) or SRA (requiring dual-model setups), incur heavy computational overhead during training due to these external dependencies. To tackle these challenges, this paper proposes VAE-REPA, a lightweight intrinsic guidance framework for efficient diffusion training. VAE-REPA leverages off-the-shelf pre-trained Variational Autoencoder (VAE) features: their reconstruction property ensures inherent encoding of visual priors such as rich texture details, structural patterns, and basic semantic information. Specifically, VAE-REPA aligns the intermediate latent features of diffusion transformers with VAE features via a lightweight projection layer, supervised by a feature alignment loss. This design accelerates training without extra representation encoders or dual-model maintenance, resulting in a simple yet effective pipeline. Extensive experiments demonstrate that VAE-REPA improves both generation quality and training convergence speed compared to vanilla diffusion transformers, matches or outperforms state-of-the-art acceleration methods, and incurs merely 4% extra GFLOPs with zero additional cost for external guidance models.
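The alignment step described in this abstract can be illustrated with a minimal sketch. The abstract does not state the form of the feature alignment loss, so the negative cosine similarity used here, the plain linear projection, the weighting factor `lam`, and all function names are assumptions made for illustration only, not the paper's implementation.

```python
import math


def project(features, weight):
    """Linear projection of each token vector; weight has shape d_in x d_out."""
    columns = list(zip(*weight))  # each column has length d_in
    return [
        [sum(x * w for x, w in zip(token, col)) for col in columns]
        for token in features
    ]


def cosine(u, v):
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + 1e-8)


def alignment_loss(hidden, vae_feats, weight):
    """Assumed loss: mean negative cosine similarity between projected
    intermediate features and the (fixed) VAE features, token by token."""
    projected = project(hidden, weight)
    sims = [cosine(p, z) for p, z in zip(projected, vae_feats)]
    return -sum(sims) / len(sims)


def total_loss(denoise_loss, align_loss, lam=0.5):
    """Hypothetical combined objective: denoising loss plus weighted alignment."""
    return denoise_loss + lam * align_loss
```

Under these assumptions, the only trainable addition is the projection weight, which is consistent with the abstract's point that no external encoder or second model is needed.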

Augmenting emotion features in irony detection with Large language modeling

Apr 18, 2024

Abstract:This study introduces a novel method for irony detection, applying Large Language Models (LLMs) with prompt-based learning to facilitate emotion-centric text augmentation. Traditional irony detection techniques typically fall short due to their reliance on static linguistic features and predefined knowledge bases, often overlooking the nuanced emotional dimensions integral to irony. In contrast, our methodology augments the detection process by integrating subtle emotional cues, augmented through LLMs, into three benchmark pre-trained NLP models - BERT, T5, and GPT-2 - which are widely recognized as foundational in irony detection. We assessed our method using the SemEval-2018 Task 3 dataset and observed substantial enhancements in irony detection capabilities.

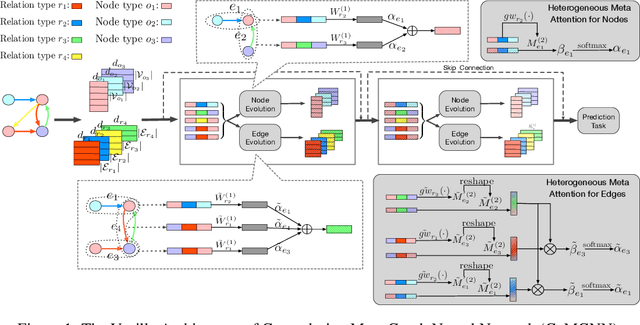

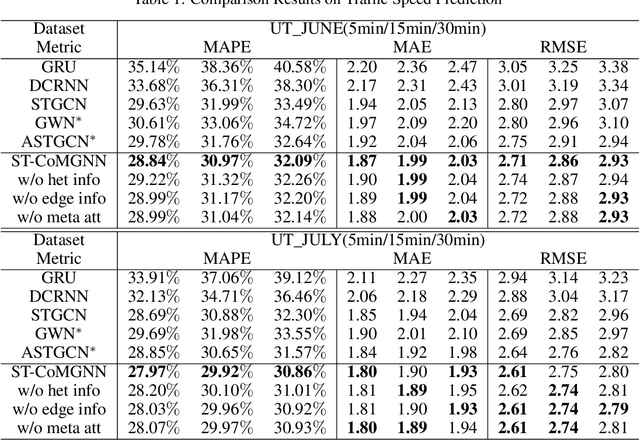

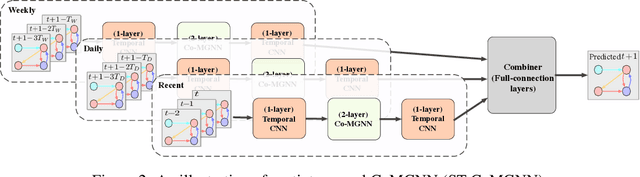

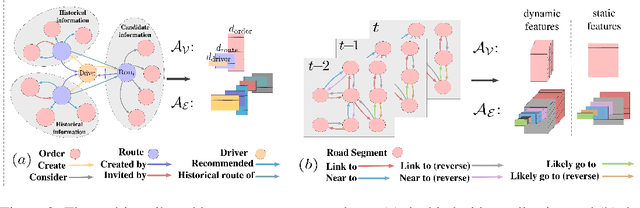

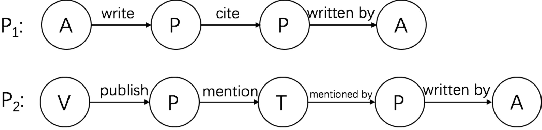

Meta Graph Attention on Heterogeneous Graph with Node-Edge Co-evolution

Oct 09, 2020

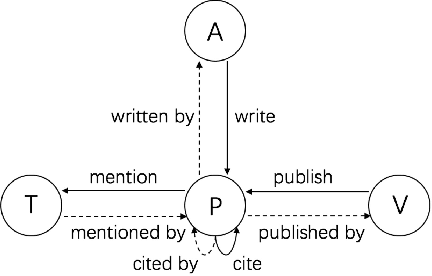

Abstract:Graph neural networks have become an important tool for modeling structured data. In many real-world systems, intricate hidden information may exist, e.g., heterogeneity in nodes/edges, static node/edge attributes, and spatiotemporal node/edge features. However, most existing methods only take part of the information into consideration. In this paper, we present the Co-evolved Meta Graph Neural Network (CoMGNN), which applies meta graph attention to heterogeneous graphs with co-evolution of node and edge states. We further propose a spatiotemporal adaption of CoMGNN (ST-CoMGNN) for modeling spatiotemporal patterns on nodes and edges. We conduct experiments on two large-scale real-world datasets. Experimental results show that our models significantly outperform the state-of-the-art methods, demonstrating the effectiveness of encoding diverse information from different aspects.

An Attention-based Graph Neural Network for Heterogeneous Structural Learning

Dec 19, 2019

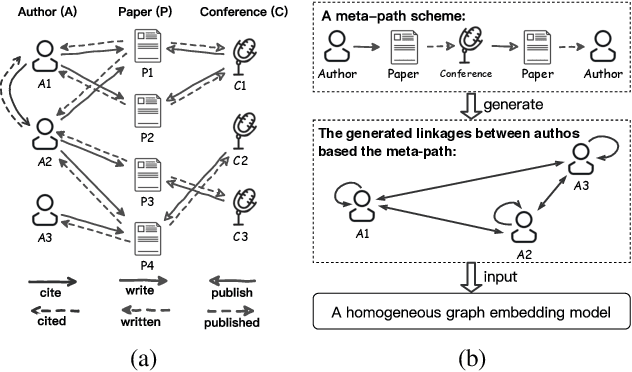

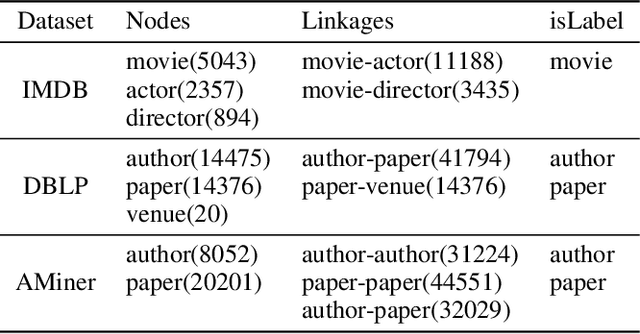

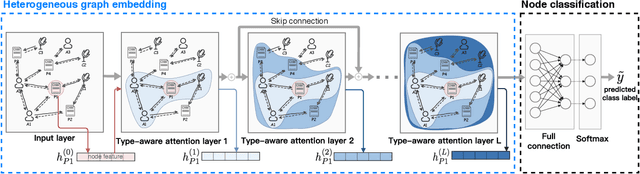

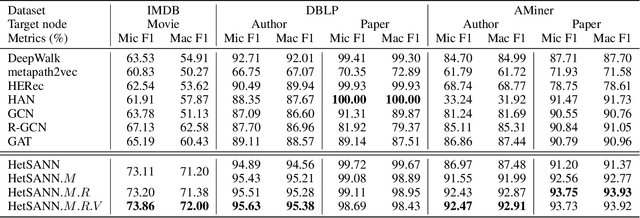

Abstract:In this paper, we focus on graph representation learning of heterogeneous information network (HIN), in which various types of vertices are connected by various types of relations. Most of the existing methods conducted on HIN revise homogeneous graph embedding models via meta-paths to learn low-dimensional vector space of HIN. In this paper, we propose a novel Heterogeneous Graph Structural Attention Neural Network (HetSANN) to directly encode structural information of HIN without meta-path and achieve more informative representations. With this method, domain experts will not be needed to design meta-path schemes and the heterogeneous information can be processed automatically by our proposed model. Specifically, we implicitly represent heterogeneous information using the following two methods: 1) we model the transformation between heterogeneous vertices through a projection in low-dimensional entity spaces; 2) afterwards, we apply the graph neural network to aggregate multi-relational information of projected neighborhood by means of attention mechanism. We also present three extensions of HetSANN, i.e., voices-sharing product attention for the pairwise relationships in HIN, cycle-consistency loss to retain the transformation between heterogeneous entity spaces, and multi-task learning with full use of information. The experiments conducted on three public datasets demonstrate that our proposed models achieve significant and consistent improvements compared to state-of-the-art solutions.
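The two steps named in this abstract (projecting heterogeneous neighbors into the target vertex's space, then aggregating them with attention) can be sketched as follows. This is an illustrative simplification: the dot-product scoring, the per-edge-type projection table, and every name below are assumptions, and the sketch does not reproduce the paper's actual attention variants or its extensions.

```python
import math


def matvec(matrix, vec):
    """Multiply a (d_out x d_in) matrix by a vector of length d_in."""
    return [sum(m * x for m, x in zip(row, vec)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(target, neighbors, projections):
    """Aggregate typed neighbors into the target vertex's space.

    neighbors:   list of (edge_type, vector) pairs
    projections: dict mapping edge_type -> projection matrix (hypothetical)
    """
    # Step 1: project each neighbor into the target's entity space.
    projected = [matvec(projections[etype], vec) for etype, vec in neighbors]
    # Step 2: score each projected neighbor (assumed dot-product attention).
    scores = [sum(t * p for t, p in zip(target, h)) for h in projected]
    weights = softmax(scores)
    # Step 3: attention-weighted sum of the projected neighbors.
    dim = len(target)
    output = [sum(w * h[i] for w, h in zip(weights, projected)) for i in range(dim)]
    return output, weights
```

Because each edge type carries its own projection, neighbors of different dimensionality can be mixed in one neighborhood without a hand-designed meta-path, which is the property the abstract emphasizes.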

Interpretable Text Classification Using CNN and Max-pooling

Oct 14, 2019

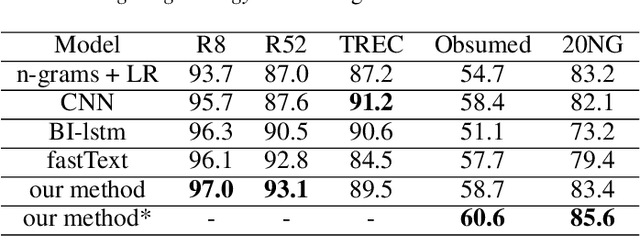

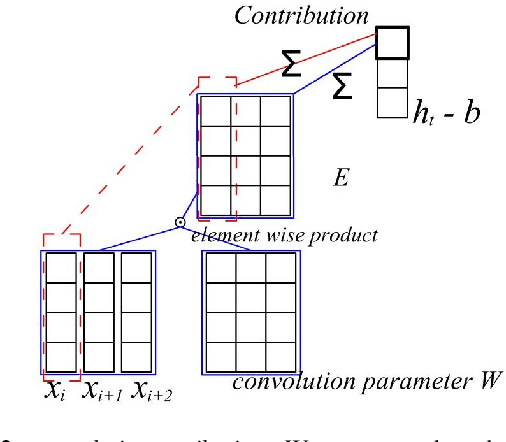

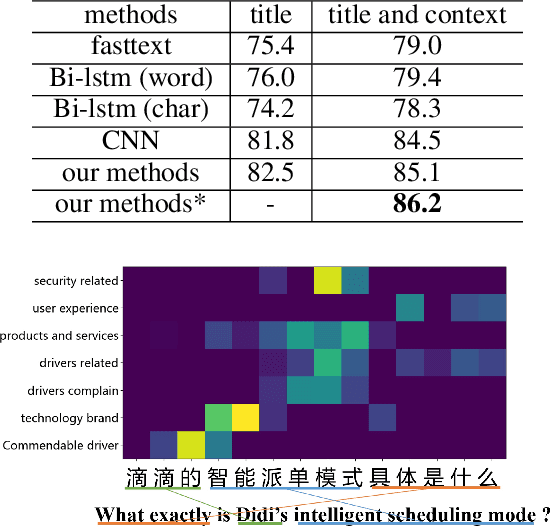

Abstract:Deep neural networks have been widely used in text classification. However, it is hard to interpret the neural models due to their complicated mechanisms. In this work, we study the interpretability of a variant of the typical text classification model, which is based on the convolution operation and a max-pooling layer. Two mechanisms, convolution attribution and n-gram feature analysis, are proposed to analyse the processing procedure of the CNN model. The interpretability of the model is reflected by providing posterior interpretation for neural network predictions. In addition, a multi-sentence strategy is proposed to enable the model to be used in multi-sentence settings without loss of performance or interpretability. We evaluate the performance of the model on several classification tasks and justify its interpretability with some case studies.
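One way to see why a convolution plus max-pooling model lends itself to n-gram analysis is that max-pooling keeps exactly one window per filter, so the selected n-gram can be read back directly. The sketch below illustrates that general idea only; the toy embeddings, the single filter, and the function name are hypothetical, and it is not the paper's attribution method.

```python
def conv_maxpool_ngram(tokens, embeddings, kernel):
    """Slide one convolution filter over the token sequence and return the
    max-pooled score together with the n-gram that produced it.

    kernel: list of n weight rows, one per position in the window.
    Assumes len(tokens) >= len(kernel).
    """
    n = len(kernel)
    best_score, best_start = float("-inf"), 0
    for start in range(len(tokens) - n + 1):
        window = tokens[start:start + n]
        # Filter response: sum of weight * embedding value over the window.
        score = sum(
            w * x
            for row, tok in zip(kernel, window)
            for w, x in zip(row, embeddings[tok])
        )
        if score > best_score:
            best_score, best_start = score, start
    return best_score, tokens[best_start:best_start + n]


# Toy example: a bigram filter that responds to "not" followed by "good".
embeddings = {
    "the": [0.0, 0.0], "movie": [0.0, 0.0], "is": [0.0, 0.0],
    "not": [1.0, 0.0], "good": [0.0, 1.0],
}
kernel = [[1.0, 0.0], [0.0, 1.0]]
score, ngram = conv_maxpool_ngram(
    ["the", "movie", "is", "not", "good"], embeddings, kernel
)
```

In this toy setting the pooled feature traces back to a single bigram, which is the kind of posterior explanation the abstract refers to.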

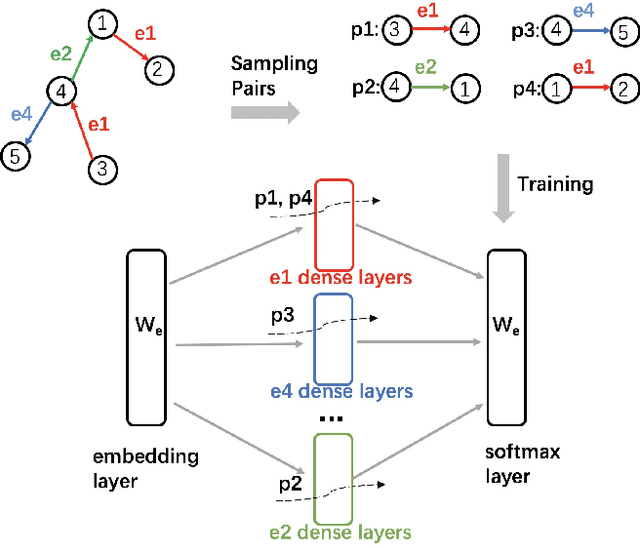

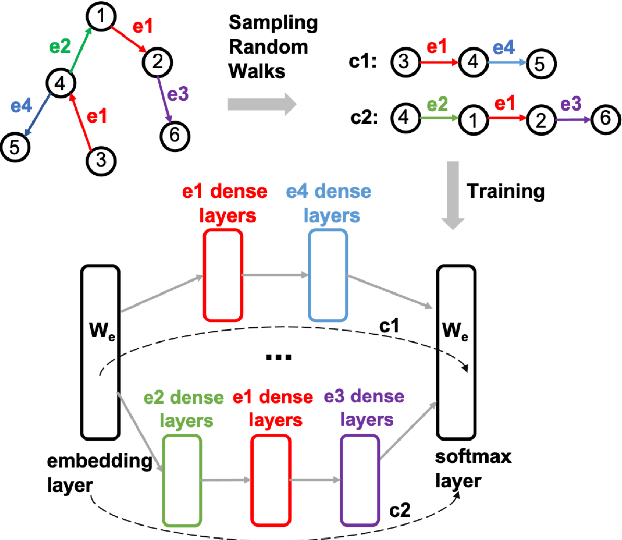

AHINE: Adaptive Heterogeneous Information Network Embedding

Aug 20, 2019

Abstract:Network embedding is an effective way to solve network analytics problems such as node classification and link prediction. It represents network elements using low-dimensional vectors such that the graph structural information and properties are maximally preserved. Many prior works focused on embeddings for networks with the same type of edges or vertices, while some works tried to generate embeddings for heterogeneous networks using mechanisms like specially designed meta paths. In this paper, we propose two novel algorithms, GHINE (General Heterogeneous Information Network Embedding) and AHINE (Adaptive Heterogeneous Information Network Embedding), to compute distributed representations for elements in heterogeneous networks. Specifically, AHINE uses an adaptive deep model to learn network embeddings that maximize the likelihood of preserving the relationship chains between non-adjacent nodes. We apply our embeddings to a large network of points of interest (POIs) and achieve superior accuracy on some prediction problems on a ride-hailing platform. In addition, we show that AHINE outperforms state-of-the-art methods on a set of learning tasks on public datasets, including node labelling and similarity ranking in bibliographic networks.
