Xiaoyun Zhao

Generalizable Memory-driven Transformer for Multivariate Long Sequence Time-series Forecasting

Jul 16, 2022

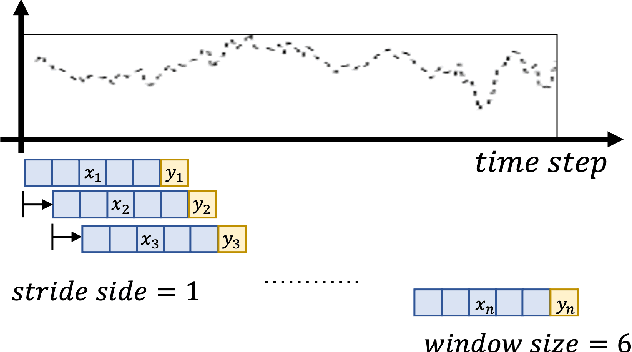

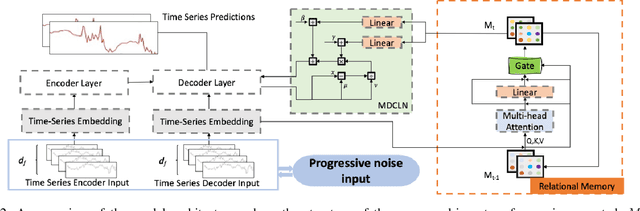

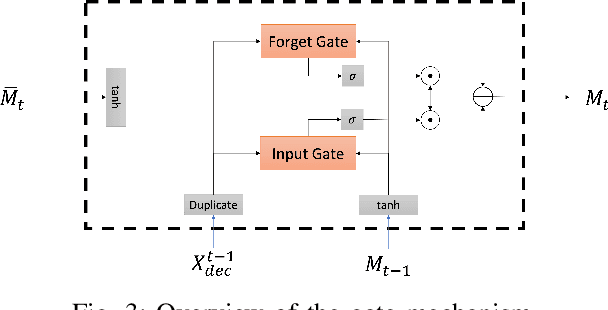

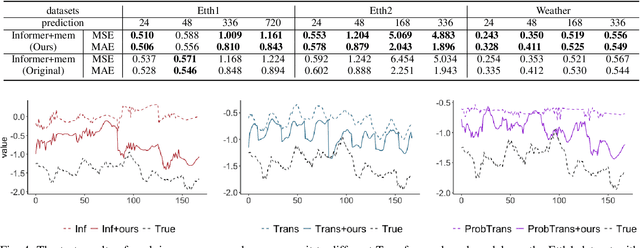

Abstract: Multivariate long sequence time-series forecasting (M-LSTF) is a practical but challenging problem. Unlike traditional time-series forecasting tasks, M-LSTF tasks are more challenging in two respects: 1) M-LSTF models need to learn time-series patterns both within and between multiple time features; 2) under the rolling forecasting setting, the similarity between two consecutive training samples increases as the prediction length grows, which makes models more prone to overfitting. In this paper, we propose a generalizable memory-driven Transformer to target M-LSTF problems. Specifically, we first propose a global-level memory component that drives the forecasting procedure by integrating multiple time-series features. In addition, we train our model progressively to increase its generalizability, gradually introducing Bernoulli noise into the training samples. Extensive experiments have been performed on five datasets across multiple fields. Experimental results demonstrate that our approach can be seamlessly plugged into various Transformer-based models, improving their performance by up to roughly 30%. To the best of our knowledge, this is the first work to focus specifically on M-LSTF tasks.
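The overfitting argument in point 2 can be made concrete with a little arithmetic, and the progressive noise idea can be sketched alongside it. The Python below is a minimal sketch under stated assumptions, not the authors' implementation: it assumes a stride-1 sliding window, interprets "Bernoulli noise" as independently zeroing input values (a dropout-style mask), and uses a hypothetical linear schedule (`noise_rate`, `max_rate`) that the abstract does not specify. The input length of 96 and the horizons in the usage loop are illustrative values only.

```python
from __future__ import annotations

import random


def consecutive_overlap(input_len: int, pred_len: int, stride: int = 1) -> float:
    """Fraction of time steps shared by two consecutive rolling-window samples.

    Each sample covers input_len + pred_len steps; shifting the window by
    `stride` steps leaves (window - stride) steps in common.
    """
    window = input_len + pred_len
    return max(window - stride, 0) / window


def noise_rate(epoch: int, total_epochs: int, max_rate: float = 0.1) -> float:
    """Hypothetical linear schedule: noise probability grows from 0 to max_rate."""
    progress = epoch / max(total_epochs - 1, 1)
    return max_rate * min(progress, 1.0)


def bernoulli_mask(sample: list[float], rate: float, rng: random.Random) -> list[float]:
    """Zero each value independently with probability `rate` (assumed noise form)."""
    return [0.0 if rng.random() < rate else x for x in sample]


if __name__ == "__main__":
    # Overlap approaches 1 as the prediction horizon grows (input length fixed at 96).
    for pred_len in (24, 96, 336, 720):
        print(pred_len, round(consecutive_overlap(96, pred_len), 4))

    # Noise is introduced gradually: early epochs see clean inputs.
    rng = random.Random(0)
    series = [1.0, 2.0, 3.0, 4.0, 5.0]
    for epoch in range(0, 10, 3):
        rate = noise_rate(epoch, total_epochs=10)
        print(epoch, round(rate, 3), bernoulli_mask(series, rate, rng))
```

With a stride of 1, consecutive samples share (L + H - 1)/(L + H) of their time steps, so a longer horizon H makes neighbouring samples nearly identical; randomly masking inputs is one generic way to discourage a model from memorizing them.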

Multi-Sparse-Domain Collaborative Recommendation via Enhanced Comprehensive Aspect Preference Learning

Jan 16, 2022

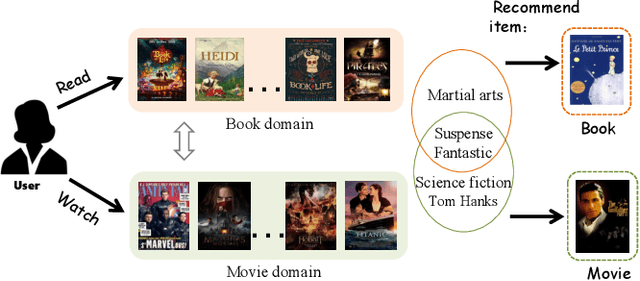

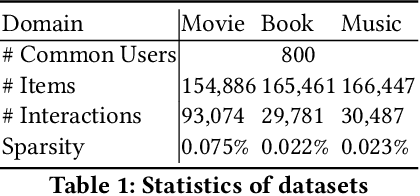

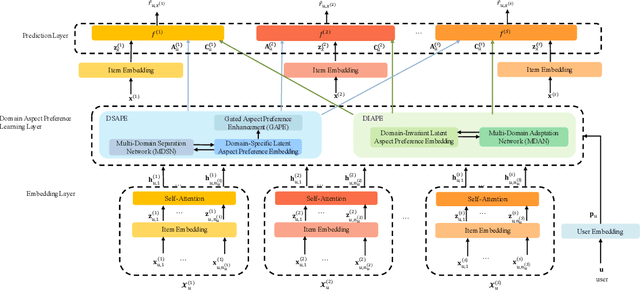

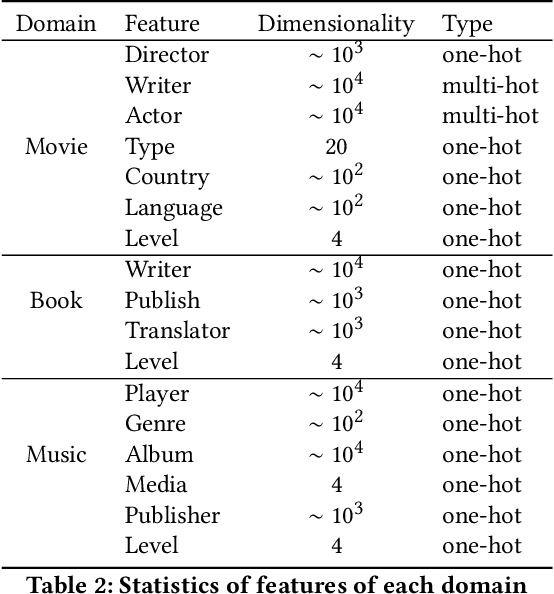

Abstract: Cross-domain recommendation (CDR) has been attracting increasing attention from researchers for its ability to alleviate the data sparsity problem in recommender systems. However, existing single-target and dual-target CDR methods often suffer from two drawbacks: the assumption of at least one rich domain, and a heavy dependence on domain-invariant preference. Both are impractical in the real world, where sparsity is ubiquitous, and both can degrade user preference learning. To overcome these issues, we propose a Multi-Sparse-Domain Collaborative Recommendation (MSDCR) model for multi-target cross-domain recommendation. Unlike traditional CDR methods, MSDCR treats all of the relevant domains as sparse and can simultaneously improve recommendation performance in each of them. We propose a Multi-Domain Separation Network (MDSN) and a Gated Aspect Preference Enhancement (GAPE) module for MSDCR, which enhance a user's domain-specific aspect preferences in one domain by transferring complementary aspect preferences from the other domains. During this transfer, the uniqueness of the domain-specific preference is preserved through the adversarial training offered by MDSN, and the degree of complementarity is adaptively determined by GAPE. Meanwhile, we propose a Multi-Domain Adaptation Network (MDAN) for MSDCR to capture a user's domain-invariant aspect preference. By integrating the enhanced domain-specific aspect preference with the domain-invariant aspect preference, MSDCR reaches a comprehensive understanding of a user's preference in each sparse domain. Finally, extensive experiments on real datasets demonstrate the remarkable superiority of MSDCR over state-of-the-art single-domain recommendation models and CDR models.
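The abstract says GAPE adaptively decides how much complementary preference to transfer into a domain, but it does not give the module's form. A sigmoid gate is one common, generic way to express that kind of adaptive weighting, and the Python below assumes that form purely for illustration. The function names, the scalar gate, and the residual combination `specific + g * complementary` are hypothetical and are not taken from the paper.

```python
from __future__ import annotations

import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def gated_enhance(
    specific: list[float],
    complementary: list[float],
    weights: list[float],
    bias: float,
) -> list[float]:
    """Hypothetical gated transfer of a complementary preference vector.

    A scalar gate g = sigmoid(w . [specific; complementary] + b) decides how
    much of the complementary vector is added to the domain-specific one.
    """
    if len(specific) != len(complementary):
        raise ValueError("preference vectors must have the same length")
    if len(weights) != 2 * len(specific):
        raise ValueError("weights must cover the concatenated vectors")
    z = sum(w * x for w, x in zip(weights, specific + complementary)) + bias
    g = sigmoid(z)
    return [s + g * c for s, c in zip(specific, complementary)]


# With zero weights and zero bias the gate is 0.5, so half of the
# complementary vector is transferred.
print(gated_enhance([1.0, 2.0], [2.0, 4.0], [0.0] * 4, 0.0))  # [2.0, 4.0]
```

In a trained model the weights would be learned, and the gate could equally be applied per dimension; the scalar version simply keeps the arithmetic easy to check by hand.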
