Woody Bayliss

Towards Reliable Identification of Diffusion-based Image Manipulations

Jun 05, 2025

Abstract:Changing facial expressions, gestures, or background details may dramatically alter the meaning conveyed by an image. Notably, recent advances in diffusion models greatly improve the quality of image manipulation while also opening the door to misuse. Identifying changes made to authentic images, thus, becomes an important task, constantly challenged by new diffusion-based editing tools. To this end, we propose a novel approach for ReliAble iDentification of inpainted AReas (RADAR). RADAR builds on existing foundation models and combines features from different image modalities. It also incorporates an auxiliary contrastive loss that helps to isolate manipulated image patches. We demonstrate these techniques to significantly improve both the accuracy of our method and its generalisation to a large number of diffusion models. To support realistic evaluation, we further introduce BBC-PAIR, a new comprehensive benchmark, with images tampered by 28 diffusion models. Our experiments show that RADAR achieves excellent results, outperforming the state-of-the-art in detecting and localising image edits made by both seen and unseen diffusion models. Our code, data and models will be publicly available at alex-costanzino.github.io/radar.

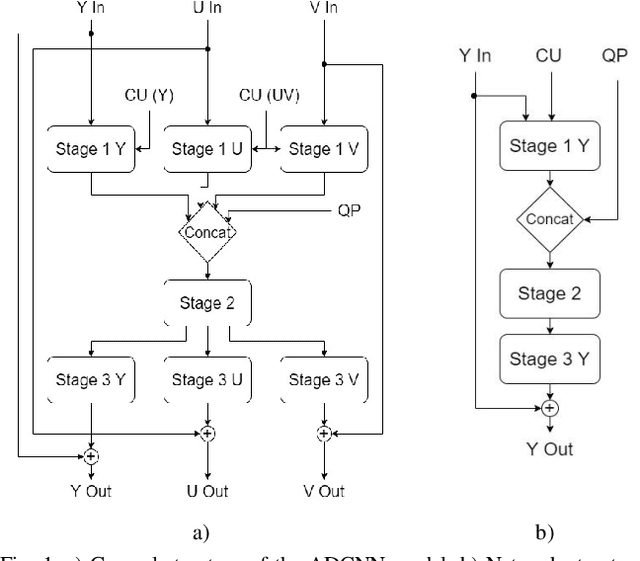

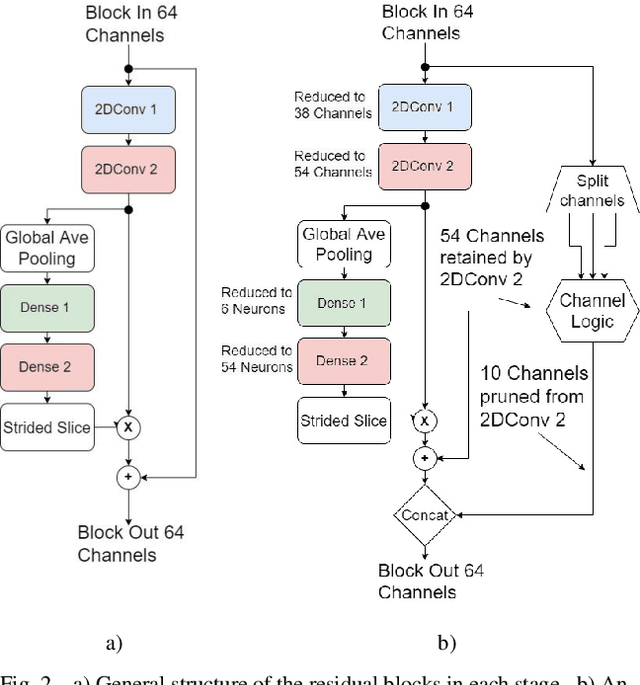

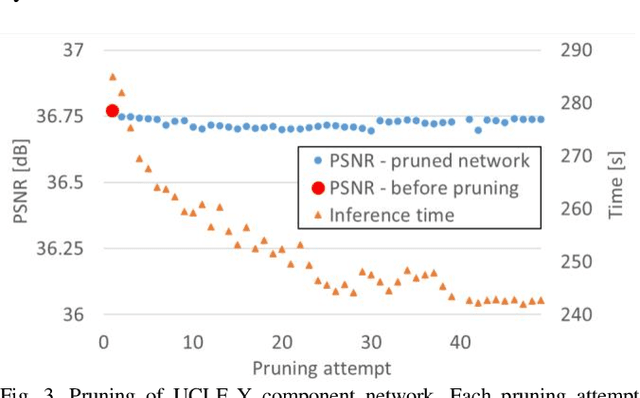

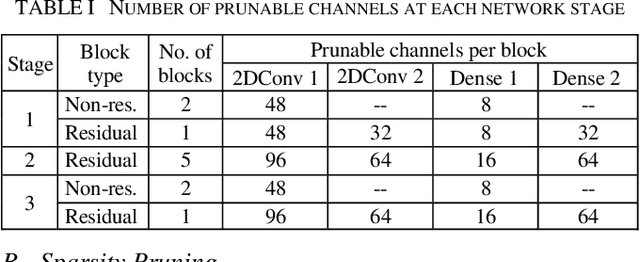

Complexity Reduction of Learned In-Loop Filtering in Video Coding

Mar 17, 2022

Abstract:In video coding, in-loop filters are applied on reconstructed video frames to enhance their perceptual quality, before storing the frames for output. Conventional in-loop filters are obtained by hand-crafted methods. Recently, learned filters based on convolutional neural networks that utilize attention mechanisms have been shown to improve upon traditional techniques. However, these solutions are typically significantly more computationally expensive, limiting their potential for practical applications. The proposed method uses a novel combination of sparsity and structured pruning for complexity reduction of learned in-loop filters. This is done through a three-step training process of magnitude-guided weight pruning, insignificant neuron identification and removal, and fine-tuning. Through initial tests we find that network parameters can be significantly reduced with a minimal impact on network performance.
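The first two steps named in the abstract can be illustrated with a small sketch. This is not the authors' implementation: the function names, the toy weight matrix, the global magnitude threshold, and the rule that a neuron is "insignificant" when too few of its weights survive pruning are all assumptions made for illustration. The fine-tuning step is omitted because it requires a training loop and data.

```python
# Illustrative sketch only; all names, thresholds, and the neuron-removal
# criterion are assumptions, not the method described in the paper.

def magnitude_prune(weights, sparsity):
    """Zero roughly the `sparsity` fraction of smallest-magnitude weights.

    `weights` is a list of rows, one row per output neuron.
    Ties at the threshold are pruned too, so slightly more may be removed.
    """
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(len(flat) * sparsity)
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def remove_insignificant_neurons(weights, min_nonzero_fraction):
    """Drop neurons (rows) whose share of non-zero weights is below the cutoff.

    Returns the compacted matrix and the indices of the rows that were kept.
    """
    kept = [
        i for i, row in enumerate(weights)
        if sum(1 for w in row if w != 0.0) / len(row) >= min_nonzero_fraction
    ]
    return [weights[i] for i in kept], kept


# Toy layer with three output neurons and four inputs each (made-up values).
layer = [
    [0.9, -0.8, 0.7, 0.6],
    [0.05, -0.02, 0.5, 0.01],
    [0.03, 0.04, -0.01, 0.02],
]

pruned = magnitude_prune(layer, sparsity=0.5)          # step 1: unstructured sparsity
compact, kept = remove_insignificant_neurons(pruned, 0.5)  # step 2: structured removal
print(kept, compact)
```

In this toy case the third neuron loses all of its weights in the first step and is removed in the second, which is how unstructured sparsity can translate into a genuinely smaller layer. A real pipeline would then fine-tune the compacted network to recover any lost quality.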
