Tomohiro Tanaka

All-in-One ASR: Unifying Encoder-Decoder Models of CTC, Attention, and Transducer in Dual-Mode ASR

Dec 12, 2025

Abstract:This paper proposes a unified framework, All-in-One ASR, that allows a single model to support multiple automatic speech recognition (ASR) paradigms, including connectionist temporal classification (CTC), attention-based encoder-decoder (AED), and Transducer, in both offline and streaming modes. While each ASR architecture offers distinct advantages and trade-offs depending on the application, maintaining separate models for each scenario incurs substantial development and deployment costs. To address this issue, we introduce a multi-mode joiner that enables seamless integration of various ASR modes within a single unified model. Experiments show that All-in-One ASR significantly reduces the total model footprint while matching or even surpassing the recognition performance of individually optimized ASR models. Furthermore, joint decoding leverages the complementary strengths of different ASR modes, yielding additional improvements in recognition accuracy.

Difference Vector Equalization for Robust Fine-tuning of Vision-Language Models

Nov 13, 2025

Abstract:Contrastive pre-trained vision-language models, such as CLIP, demonstrate strong generalization abilities in zero-shot classification by leveraging embeddings extracted from image and text encoders. This paper aims to robustly fine-tune these vision-language models on in-distribution (ID) data without compromising their generalization abilities in out-of-distribution (OOD) and zero-shot settings. Current robust fine-tuning methods tackle this challenge by reusing contrastive learning, which was used in pre-training, for fine-tuning. However, we found that these methods distort the geometric structure of the embeddings, which plays a crucial role in the generalization of vision-language models, resulting in limited OOD and zero-shot performance. To address this, we propose Difference Vector Equalization (DiVE), which preserves the geometric structure during fine-tuning. The idea behind DiVE is to constrain difference vectors, each of which is obtained by subtracting the embeddings extracted from the pre-trained and fine-tuning models for the same data sample. By constraining the difference vectors to be equal across various data samples, we effectively preserve the geometric structure. Therefore, we introduce two losses: average vector loss (AVL) and pairwise vector loss (PVL). AVL preserves the geometric structure globally by constraining difference vectors to be equal to their weighted average. PVL preserves the geometric structure locally by ensuring a consistent multimodal alignment. Our experiments demonstrate that DiVE effectively preserves the geometric structure, achieving strong results across ID, OOD, and zero-shot metrics.
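The difference-vector idea in the abstract above can be illustrated with a small sketch. This is not the authors' implementation: the function names are hypothetical, the subtraction direction (fine-tuned minus pre-trained) is an assumption, and a uniform average stands in for the weighted average, whose weights the abstract does not specify.

```python
def difference_vectors(pretrained, finetuned):
    """Per-sample difference between fine-tuned and pre-trained embeddings.

    Assumption: direction is fine-tuned minus pre-trained.
    """
    return [
        [f - p for p, f in zip(pre_vec, ft_vec)]
        for pre_vec, ft_vec in zip(pretrained, finetuned)
    ]


def average_vector_penalty(diffs):
    """Mean squared distance of each difference vector from their average.

    Assumption: a uniform average replaces the paper's weighted average.
    The penalty is zero exactly when all difference vectors are equal,
    i.e. when fine-tuning only translated the embedding space.
    """
    n = len(diffs)
    dim = len(diffs[0])
    mean = [sum(d[k] for d in diffs) / n for k in range(dim)]
    return sum(
        sum((d[k] - mean[k]) ** 2 for k in range(dim)) for d in diffs
    ) / n


# Toy embeddings: the fine-tuned set is the pre-trained set shifted by (1, 1),
# so the geometry between samples is unchanged.
pre = [[0.0, 0.0], [1.0, 2.0]]
shifted = [[1.0, 1.0], [2.0, 3.0]]
print(average_vector_penalty(difference_vectors(pre, shifted)))
```

A uniform shift of all embeddings leaves relative positions intact, which is why equal difference vectors correspond to a preserved geometric structure.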

Joint Modeling of Big Five and HEXACO for Multimodal Apparent Personality-trait Recognition

Oct 16, 2025

Abstract:This paper proposes a joint modeling method of the Big Five, which has long been studied, and HEXACO, which has recently attracted attention in psychology, for automatically recognizing apparent personality traits from multimodal human behavior. Most previous studies have used the Big Five for multimodal apparent personality-trait recognition. However, no study has focused on apparent HEXACO, which can evaluate an Honesty-Humility trait related to displaced aggression and vengefulness, social-dominance orientation, etc. In addition, the relationships between the Big Five and HEXACO when modeled by machine learning have not been clarified. We expect awareness of multimodal human behavior to improve by considering these relationships. The key advance of our proposed method is to jointly optimize the recognition of the Big Five and HEXACO. Experiments using a self-introduction video dataset demonstrate that the proposed method can effectively recognize the Big Five and HEXACO.

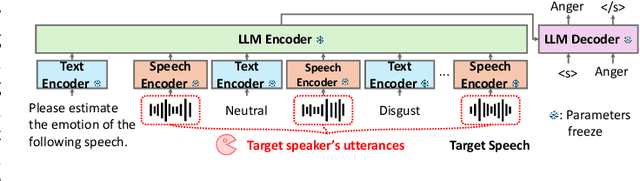

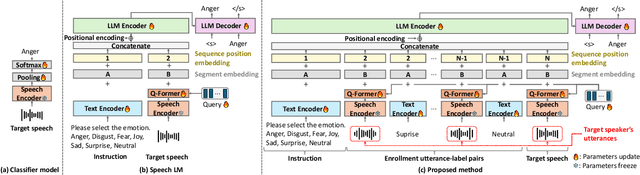

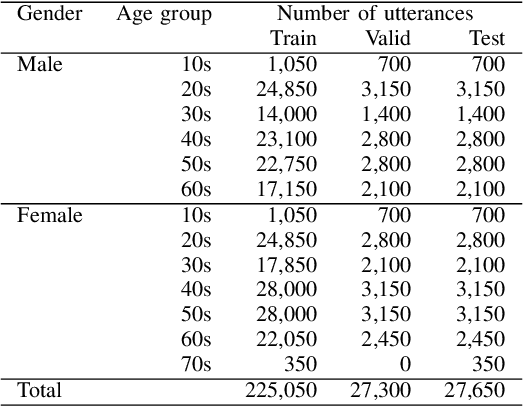

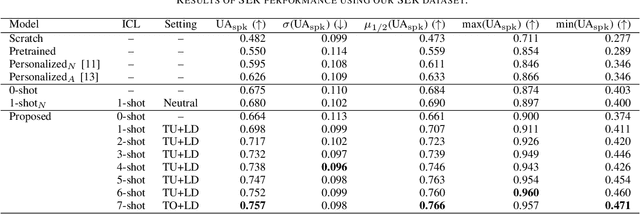

Few-shot Personalization via In-Context Learning for Speech Emotion Recognition based on Speech-Language Model

Sep 10, 2025

Abstract:This paper proposes a personalization method for speech emotion recognition (SER) through in-context learning (ICL). Since the expression of emotions varies from person to person, speaker-specific adaptation is crucial for improving SER performance. Conventional SER methods have been personalized using emotional utterances of a target speaker, but it is often difficult to prepare utterances corresponding to all emotion labels in advance. Our idea to overcome this difficulty is to obtain speaker characteristics by conditioning on a few emotional utterances of the target speaker in ICL-based inference. ICL is a method to perform unseen tasks by conditioning on a few input-output examples during inference in large language models (LLMs). We meta-train a speech-language model extended from the LLM to learn how to perform personalized SER via ICL. Experimental results using our newly collected SER dataset demonstrate that the proposed method outperforms conventional methods.

Constant Rate Schedule: Constant-Rate Distributional Change for Efficient Training and Sampling in Diffusion Models

Nov 19, 2024

Abstract:We propose a noise schedule that ensures a constant rate of change in the probability distribution of diffused data throughout the diffusion process. To obtain this noise schedule, we measure the rate of change in the probability distribution of the forward process and use it to determine the noise schedule before training diffusion models. The functional form of the noise schedule is automatically determined and tailored to each dataset and type of diffusion model. We evaluate the effectiveness of our noise schedule on unconditional and class-conditional image generation tasks using the LSUN (bedroom/church/cat/horse), ImageNet, and FFHQ datasets. Through extensive experiments, we confirmed that our noise schedule broadly improves the performance of the diffusion models regardless of the dataset, sampler, number of function evaluations, or type of diffusion model.

Attention as Annotation: Generating Images and Pseudo-masks for Weakly Supervised Semantic Segmentation with Diffusion

Sep 04, 2023

Abstract:Although recent advancements in diffusion models have enabled high-fidelity and diverse image generation, training of discriminative models still largely depends on massive collections of real images and their manual annotation. Here, we present a training method for semantic segmentation that relies on neither real images nor manual annotation. The proposed method, attn2mask, utilizes images generated by a text-to-image diffusion model in combination with its internal text-to-image cross-attention as supervisory pseudo-masks. Since the text-to-image generator is trained with image-caption pairs but without pixel-wise labels, attn2mask can be regarded as a weakly supervised segmentation method overall. Experiments show that attn2mask achieves promising results on PASCAL VOC despite not using any real training data for segmentation, and that it is also useful for scaling up segmentation to a scenario with more classes, i.e., ImageNet segmentation. It also shows adaptation ability with LoRA-based fine-tuning, which enables transfer to a distant domain, i.e., Cityscapes.

SpeechGLUE: How Well Can Self-Supervised Speech Models Capture Linguistic Knowledge?

Jun 14, 2023

Abstract:Self-supervised learning (SSL) for speech representation has been successfully applied in various downstream tasks, such as speech and speaker recognition. More recently, speech SSL models have also been shown to be beneficial in advancing spoken language understanding tasks, implying that the SSL models have the potential to learn not only acoustic but also linguistic information. In this paper, we aim to clarify whether speech SSL techniques can capture linguistic knowledge well. For this purpose, we introduce SpeechGLUE, a speech version of the General Language Understanding Evaluation (GLUE) benchmark. Since GLUE comprises a variety of natural language understanding tasks, SpeechGLUE can elucidate the degree of linguistic ability of speech SSL models. Experiments demonstrate that speech SSL models, although inferior to text-based SSL models, perform better than baselines, suggesting that they can acquire a certain amount of general linguistic knowledge from just unlabeled speech data.

Transfer Learning from Pre-trained Language Models Improves End-to-End Speech Summarization

Jun 07, 2023

Abstract:End-to-end speech summarization (E2E SSum) directly summarizes input speech into easy-to-read short sentences with a single model. This approach is promising because, in contrast to the conventional cascade approach, it can utilize full acoustical information and mitigate the propagation of transcription errors. However, due to the high cost of collecting speech-summary pairs, an E2E SSum model tends to suffer from training data scarcity and output unnatural sentences. To overcome this drawback, we propose for the first time to integrate a pre-trained language model (LM), which is highly capable of generating natural sentences, into the E2E SSum decoder via transfer learning. In addition, to reduce the gap between the independently pre-trained encoder and decoder, we also propose to transfer the baseline E2E SSum encoder instead of the commonly used automatic speech recognition encoder. Experimental results show that the proposed model outperforms baseline and data-augmented models.

End-to-End Joint Target and Non-Target Speakers ASR

Jun 04, 2023

Abstract:This paper proposes a novel automatic speech recognition (ASR) system that can transcribe each individual speaker's speech from multi-talker overlapped speech while identifying whether the speaker is a target or non-target speaker. Target-speaker ASR systems are a promising way to transcribe only a target speaker's speech by enrolling the target speaker's information. However, in conversational ASR applications, transcribing both the target speaker's speech and that of non-target speakers is often required to understand interactive information. To naturally consider both target and non-target speakers in a single ASR model, our idea is to extend autoregressive modeling-based multi-talker ASR systems to utilize the enrollment speech of the target speaker. Our proposed ASR is performed by recursively generating both textual tokens and tokens that represent target or non-target speakers. Our experiments demonstrate the effectiveness of our proposed method.

Improving Scheduled Sampling for Neural Transducer-based ASR

May 25, 2023

Abstract:The recurrent neural network-transducer (RNNT) is a promising approach for automatic speech recognition (ASR) with the introduction of a prediction network that autoregressively considers linguistic aspects. To train the autoregressive part, the ground-truth tokens are used as substitutions for the previous output token, which leads to insufficient robustness to incorrect past tokens; a recognition error in the decoding leads to further errors. Scheduled sampling (SS) is a technique to train an autoregressive model to be robust to past errors by randomly replacing some ground-truth tokens with actual outputs generated from a model. SS mitigates the gaps between training and decoding steps, known as exposure bias, and it is often used for attentional encoder-decoder training. However, SS has not been fully examined for RNNT because the complicated RNNT output form makes it difficult to apply. In this paper, we propose SS approaches suited for RNNT. Our SS approaches sample the tokens generated from the distribution of RNNT itself, i.e., the internal language model or RNNT outputs. Experiments on three datasets confirm that RNNT trained with our SS approach achieves the best ASR performance. In particular, on a Japanese ASR task, our best system outperforms the previous state-of-the-art alternative.
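The token-replacement step of generic scheduled sampling, as described in the abstract above, might look like the following minimal sketch. It is not the RNNT-specific approach the paper proposes: the function name, the `sampling_prob` parameter, and the assumption that model predictions are already available as a token list are all hypothetical.

```python
import random


def scheduled_sampling_inputs(ground_truth, model_predictions, sampling_prob, rng=None):
    """Build the 'previous token' sequence fed to an autoregressive model.

    Each ground-truth token is independently replaced by the model's own
    prediction at that position with probability `sampling_prob`
    (hypothetical parameter name). With sampling_prob=0.0 this reduces to
    plain teacher forcing.
    """
    if len(ground_truth) != len(model_predictions):
        raise ValueError("sequences must have the same length")
    if not 0.0 <= sampling_prob <= 1.0:
        raise ValueError("sampling_prob must be in [0, 1]")
    rng = rng or random.Random()
    return [
        pred if rng.random() < sampling_prob else gt
        for gt, pred in zip(ground_truth, model_predictions)
    ]


# Toy token sequences; the prediction contains one recognition error ("kat").
reference = ["the", "cat", "sat"]
hypothesis = ["the", "kat", "sat"]
mixed = scheduled_sampling_inputs(reference, hypothesis, 0.3, random.Random(0))
print(mixed)
```

In practice the replacement probability is often increased gradually during training, so the model first learns from clean histories and is later exposed to its own errors.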
