Emergent Communication through Metropolis-Hastings Naming Game with Deep Generative Models

May 24, 2022 · Tadahiro Taniguchi, Yuto Yoshida, Akira Taniguchi, Yoshinobu Hagiwara

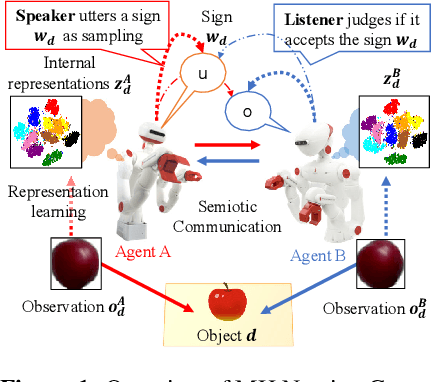

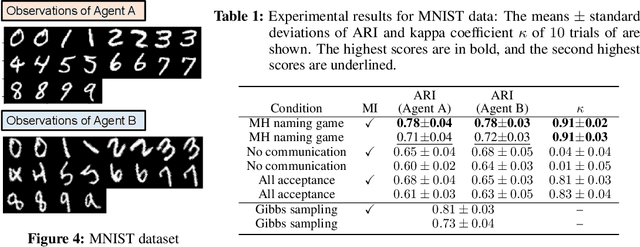

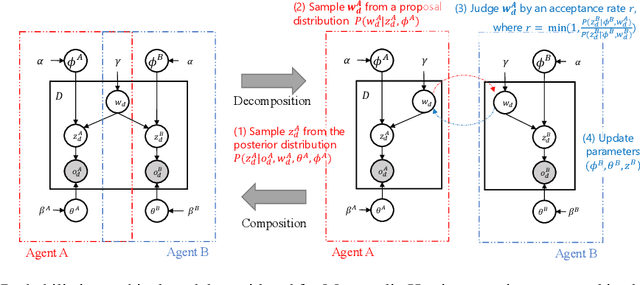

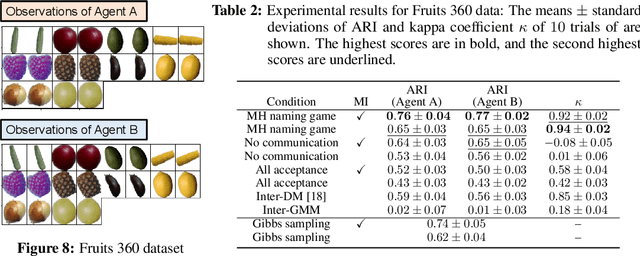

Emergent communication, also known as symbol emergence, seeks to investigate computational models that can better explain human language evolution and the creation of symbol systems. This study aims to provide a new model for emergent communication, which is based on a probabilistic generative model. We define the Metropolis-Hastings (MH) naming game by generalizing a model proposed by Hagiwara et al. \cite{hagiwara2019symbol}. The MH naming game is a type of MH algorithm for an integrative probabilistic generative model that combines two agents playing the naming game. From this viewpoint, symbol emergence is regarded as decentralized Bayesian inference, and semiotic communication is regarded as inter-personal cross-modal inference. We also propose Inter-GMM+VAE, a deep generative model for simulating emergent communication, in which two agents create internal representations and categories and share signs (i.e., names of objects) from raw visual images observed from different viewpoints. The model has been validated on the MNIST and Fruits 360 datasets. Experimental results show that categories are formed from real images observed by the agents, and that signs are correctly shared across agents by successfully utilizing both agents' views via the MH naming game. Furthermore, it has been verified that the visual images were recalled from the signs uttered by the agents. Notably, emergent communication without supervision and reward feedback improved the performance of unsupervised representation learning.
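The acceptance step of an MH-style naming game can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the speaker's utterance acts as the MH proposal, so the listener's acceptance probability reduces to a ratio of how well each sign explains the listener's own internal state. The names `mh_accept_prob`, `mh_naming_step`, and `listener_lik` are hypothetical.

```python
import random


def mh_accept_prob(listener_lik, proposed_sign, current_sign):
    """Acceptance probability min(1, p_new / p_old).

    listener_lik maps each sign to the likelihood of the listener's own
    internal state under that sign (an assumed, illustrative quantity).
    """
    p_new = listener_lik[proposed_sign]
    p_old = listener_lik[current_sign]
    if p_old <= 0.0:
        # The current sign explains nothing, so any proposal is accepted.
        return 1.0
    return min(1.0, p_new / p_old)


def mh_naming_step(listener_lik, proposed_sign, current_sign, rng=random):
    """Return the sign the listener holds after one accept/reject decision."""
    r = mh_accept_prob(listener_lik, proposed_sign, current_sign)
    return proposed_sign if rng.random() < r else current_sign


# Toy example: sign "a" explains the listener's state twice as well as "b".
listener_lik = {"a": 0.2, "b": 0.1}
print(mh_accept_prob(listener_lik, "a", "b"))
print(mh_accept_prob(listener_lik, "b", "a"))
```

Note that the listener decides using only its own beliefs; in this sketch no reward or label is exchanged, only the proposed sign.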

Self-Supervised Representation Learning as Multimodal Variational Inference

Mar 22, 2022 · Hiroki Nakamura, Masashi Okada, Tadahiro Taniguchi

This paper proposes a probabilistic extension of SimSiam, a recent self-supervised learning (SSL) method. SimSiam trains a model by maximizing the similarity between image representations of different augmented views of the same image. Although uncertainty-aware machine learning, such as deep variational inference, has become common, SimSiam and other SSL methods are insufficiently uncertainty-aware, which could limit their potential. The proposed extension makes SimSiam uncertainty-aware based on variational inference. Our main contributions are twofold: First, we clarify the theoretical relationship between non-contrastive SSL and multimodal variational inference. Second, we introduce a novel SSL method called variational inference SimSiam (VI-SimSiam), which incorporates uncertainty by using spherical posterior distributions. Our experiments show that VI-SimSiam outperforms SimSiam in classification tasks on ImageNette and ImageWoof by successfully estimating the representation uncertainty.
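For reference, the similarity objective of the original (deterministic) SimSiam can be sketched in a few lines. This illustrates only the baseline being extended, not VI-SimSiam itself; it is a plain-Python sketch with hypothetical names (`cosine_similarity`, `simsiam_loss`), and the stop-gradient that SimSiam applies to the target representations is noted in a comment because it has no effect without automatic differentiation.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def simsiam_loss(p1, z2, p2, z1):
    """Symmetric negative cosine similarity between two augmented views.

    p1, p2: predictor outputs for views 1 and 2.
    z1, z2: encoder outputs, treated as constants (stop-gradient) when a
            framework with automatic differentiation is used.
    """
    return -0.5 * (cosine_similarity(p1, z2) + cosine_similarity(p2, z1))


# Perfectly aligned views reach the minimum value of the loss.
print(simsiam_loss([1.0, 0.0], [2.0, 0.0], [0.0, 3.0], [0.0, 1.0]))
```

Because the loss depends only on direction, representations effectively live on a hypersphere, which is consistent with the abstract's use of spherical posterior distributions.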

Spatial Concept-based Topometric Semantic Mapping for Hierarchical Path-planning from Speech Instructions

Mar 21, 2022 · Akira Taniguchi, Shuya Ito, Tadahiro Taniguchi

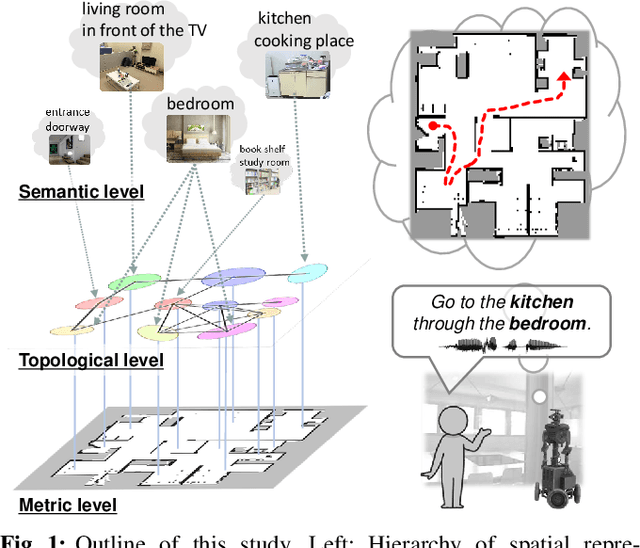

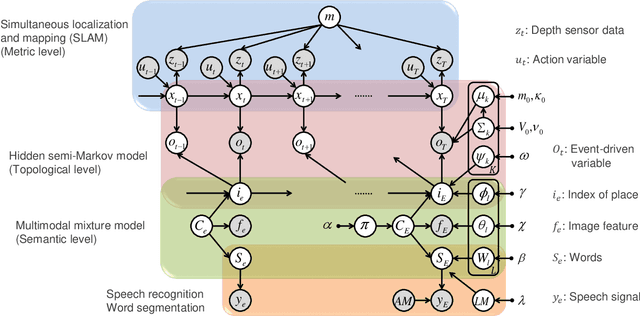

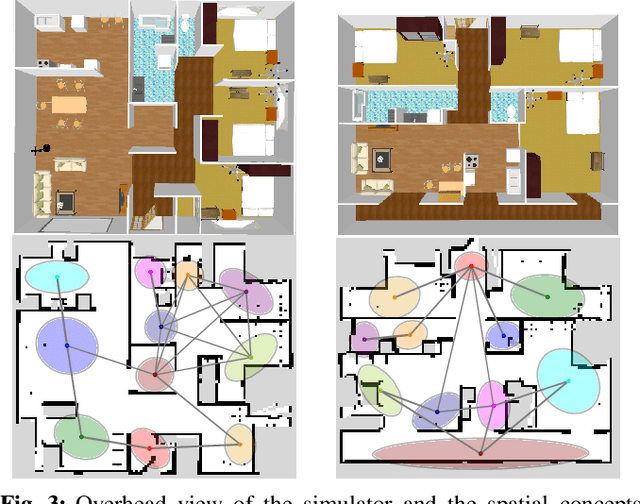

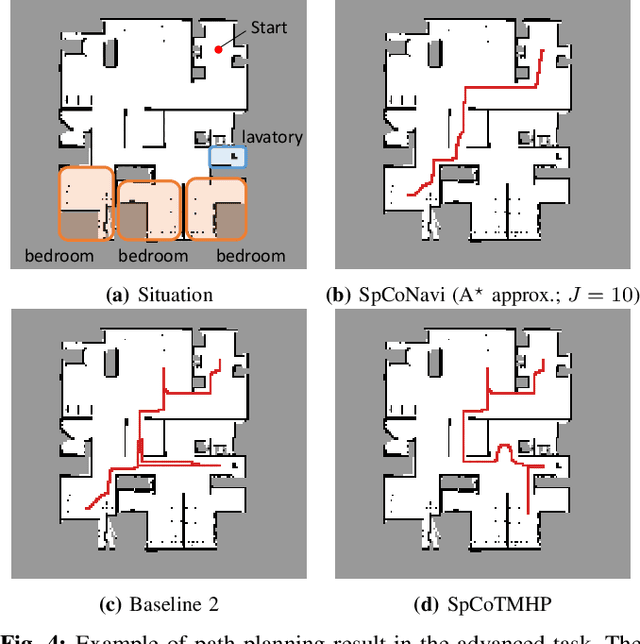

Navigating to destinations using human speech instructions is an important task for autonomous mobile robots that operate in the real world. Spatial representations include a semantic level that represents an abstracted location category, a topological level that represents their connectivity, and a metric level that depends on the structure of the environment. The purpose of this study is to realize a hierarchical spatial representation using a topometric semantic map and to plan efficient paths through human-robot interactions. We propose a novel probabilistic generative model, SpCoTMHP, that forms a topometric semantic map that adapts to the environment and enables hierarchical path planning. We also developed approximate inference methods for path planning, in which the levels of the hierarchy can influence each other. The proposed path planning method is theoretically supported by deriving a formulation based on control as probabilistic inference. A navigation experiment using human speech instructions shows that the proposed spatial concept-based hierarchical path planning improves performance and reduces computational cost compared with conventional methods. Hierarchical spatial representation provides a mutually understandable form for humans and robots, making language-based navigation tasks feasible.

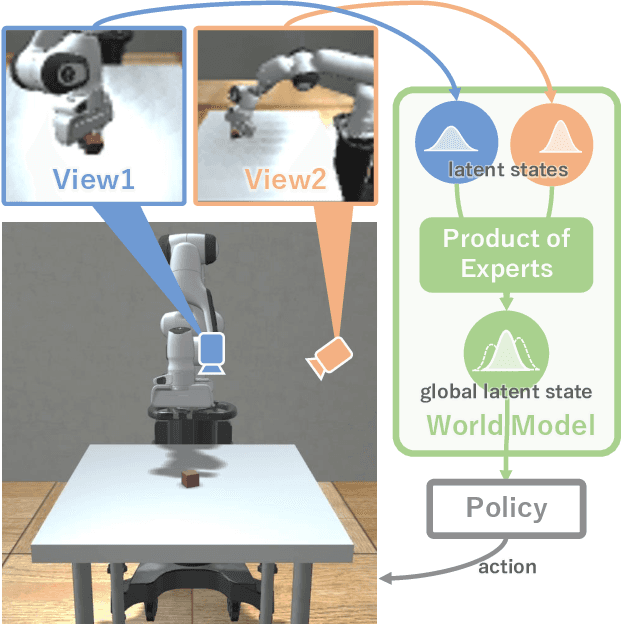

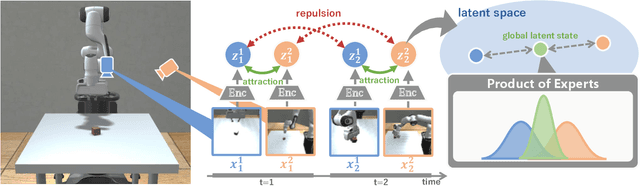

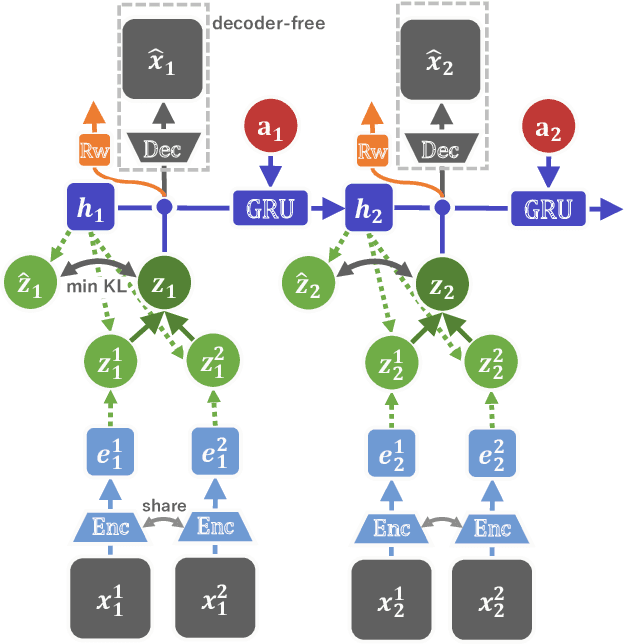

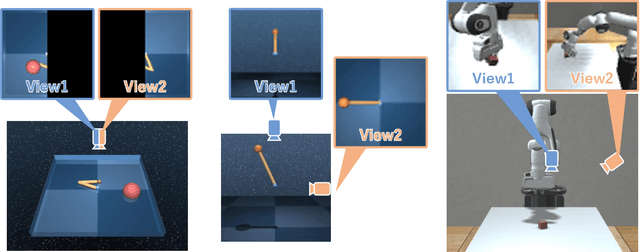

Multi-View Dreaming: Multi-View World Model with Contrastive Learning

Mar 15, 2022 · Akira Kinose, Masashi Okada, Ryo Okumura, Tadahiro Taniguchi

In this paper, we propose Multi-View Dreaming, a novel reinforcement learning agent for integrated recognition and control from multi-view observations, obtained by extending Dreaming. Most current reinforcement learning methods assume a single-view observation space, which imposes limitations on the observed data, such as a lack of spatial information and occlusions. This makes it difficult to obtain ideal observational information from the environment and is a bottleneck for real-world robotics applications. We use contrastive learning to train a shared latent space between different viewpoints, and show how the Product of Experts approach can be used to integrate and control the probability distributions of latent states for multiple viewpoints. We also propose Multi-View DreamingV2, a variant of Multi-View Dreaming that uses a categorical distribution instead of a Gaussian distribution to model the latent state. Experiments show that the proposed method outperforms simple extensions of existing methods in a realistic robot control task.
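As an illustration of how a Product of Experts can fuse per-view beliefs, the sketch below combines one-dimensional Gaussian latent estimates by precision weighting. This is the standard closed form for a product of Gaussians and is only an assumption about how the fusion might look; the function name `product_of_gaussian_experts` is hypothetical and the paper's exact formulation may differ.

```python
def product_of_gaussian_experts(means, variances):
    """Fuse 1-D Gaussian experts N(mean_i, var_i) into a single Gaussian.

    The product of Gaussian densities is proportional to a Gaussian whose
    precision is the sum of the experts' precisions and whose mean is the
    precision-weighted average of the experts' means.
    """
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    fused_var = 1.0 / total_precision
    fused_mean = fused_var * sum(m * p for m, p in zip(means, precisions))
    return fused_mean, fused_var


# Two views: a confident one (variance 1) and an uncertain one (variance 9).
mean, var = product_of_gaussian_experts([0.0, 10.0], [1.0, 9.0])
print(mean, var)
```

The fused estimate stays close to the confident view, which is the behaviour one would want when another viewpoint is occluded and therefore reports high uncertainty.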

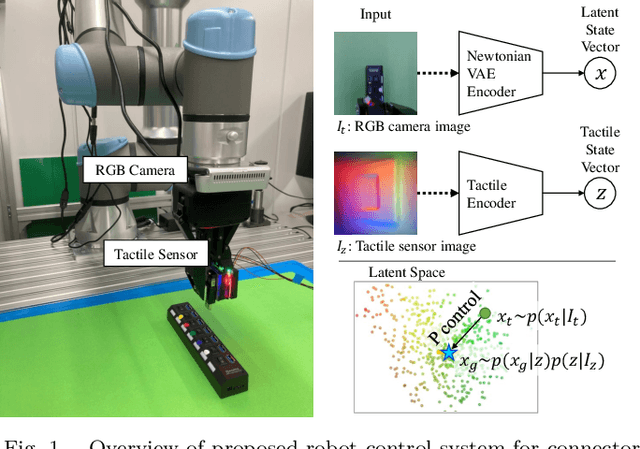

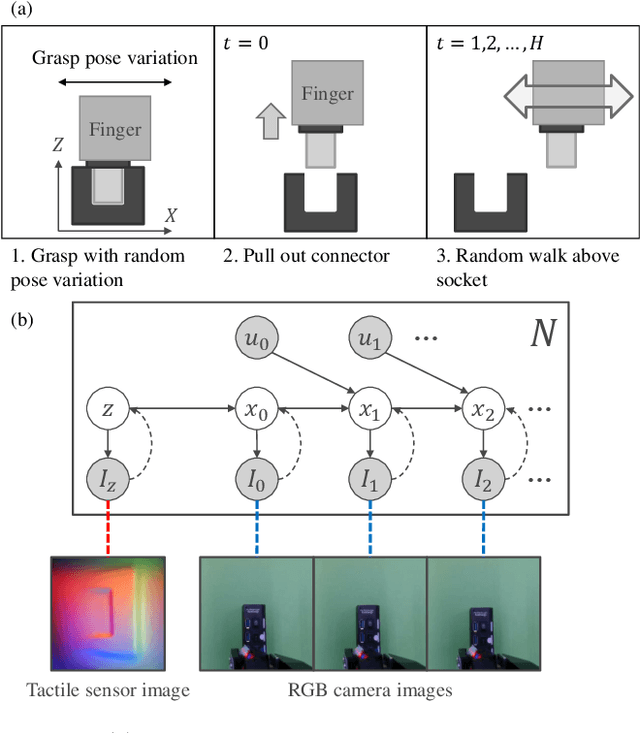

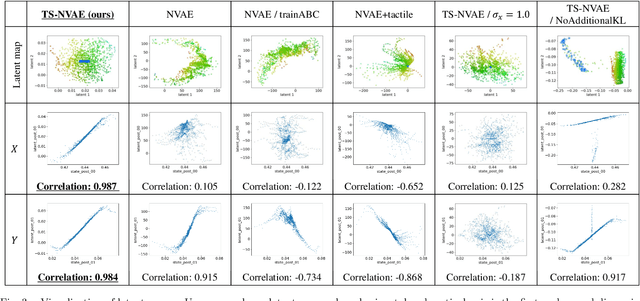

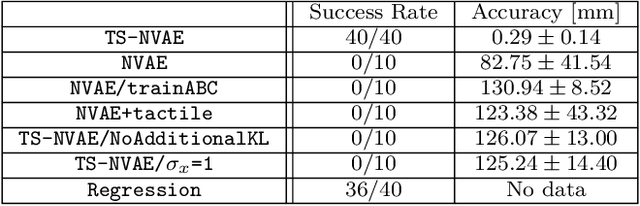

Tactile-Sensitive NewtonianVAE for High-Accuracy Industrial Connector-Socket Insertion

Mar 10, 2022 · Ryo Okumura, Nobuki Nishio, Tadahiro Taniguchi

An industrial connector-socket insertion task requires sub-millimeter positioning and compensation for the grasp pose of the connector. Thus, highly accurate estimation of the relative pose between the socket and the connector is a key factor in achieving the task. World models are a promising technology for visuo-motor control. They obtain state representations appropriate for control by jointly optimizing feature extraction and a latent dynamics model. A recent study shows that NewtonianVAE, a kind of world model, acquires a latent space that is equivalent to a mapping from images to physical coordinates. Proportional control can be achieved in the latent space of NewtonianVAE. However, applying NewtonianVAE to high-accuracy industrial tasks in physical environments is an open problem. Moreover, there is no general framework for compensating the goal position in the obtained latent space according to the grasp pose. In this work, we apply NewtonianVAE to USB connector insertion with grasp pose variation in a physical environment. We adopt a GelSight-type tactile sensor and estimate the insertion position compensated by the grasp pose of the connector. Our method trains the latent space in an end-to-end manner, and simple proportional control is available. Therefore, it requires no additional engineering or annotation. Experimental results show that the proposed method, Tactile-Sensitive NewtonianVAE, outperforms a naive combination of a regression-based grasp pose estimator and coordinate transformation. Moreover, we reveal that the original NewtonianVAE does not work in some situations, and demonstrate that introducing domain knowledge improves model accuracy. This domain knowledge is easy to obtain from robot specifications or measurements.
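Proportional control in a latent space that behaves like physical coordinates can be sketched as below. This is a toy illustration only: the names `proportional_control` and `simulate` are hypothetical, the gain value is arbitrary, and the simulation assumes idealized dynamics in which the latent state moves by exactly the commanded amount, which is not something the abstract claims.

```python
def proportional_control(z, z_goal, gain):
    """Command proportional to the latent-space error: u = gain * (z_goal - z)."""
    return [gain * (g - c) for c, g in zip(z, z_goal)]


def simulate(z, z_goal, gain, steps):
    """Roll out the controller under assumed idealized dynamics z <- z + u."""
    for _ in range(steps):
        u = proportional_control(z, z_goal, gain)
        z = [c + du for c, du in zip(z, u)]
    return z


# Starting at the origin, the latent state converges toward the goal.
final_z = simulate([0.0, 0.0], [1.0, -2.0], gain=0.5, steps=20)
print(final_z)
```

With a gain between 0 and 1 the error in this toy model shrinks geometrically at each step, which is why such a simple controller suffices once the latent space is well structured.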

DreamingV2: Reinforcement Learning with Discrete World Models without Reconstruction

Mar 01, 2022 · Masashi Okada, Tadahiro Taniguchi

The present paper proposes a novel reinforcement learning method with world models, DreamingV2, a collaborative extension of DreamerV2 and Dreaming. DreamerV2 is a cutting-edge model-based reinforcement learning method from pixels that uses discrete world models to represent latent states with categorical variables. Dreaming is also a reinforcement learning method from pixels; it avoids the autoencoding process of general world model training by using a reconstruction-free contrastive learning objective. The proposed DreamingV2 adopts both the discrete representation of DreamerV2 and the reconstruction-free objective of Dreaming. Compared to DreamerV2 and other recent model-based methods without reconstruction, DreamingV2 achieves the best scores on five challenging simulated 3D robot arm tasks. We believe that DreamingV2 will be a reliable solution for robot learning, since its discrete representation is suitable for describing discontinuous environments and its reconstruction-free objective handles complex visual observations well.

Unsupervised Multimodal Word Discovery based on Double Articulation Analysis with Co-occurrence cues

Jan 18, 2022 · Akira Taniguchi, Hiroaki Murakami, Ryo Ozaki, Tadahiro Taniguchi

Human infants acquire their verbal lexicon from minimal prior knowledge of language, based on the statistical properties of phonological distributions and the co-occurrence of other sensory stimuli. In this study, we propose a novel, fully unsupervised learning method that discovers speech units by utilizing phonological information as a distributional cue and object information as a co-occurrence cue. The proposed method can not only (1) acquire words and phonemes from speech signals using unsupervised learning, but also (2) utilize object information based on multiple modalities (i.e., visual, tactile, and auditory) simultaneously. The proposed method is based on the Nonparametric Bayesian Double Articulation Analyzer (NPB-DAA), which discovers phonemes and words from phonological features, and Multimodal Latent Dirichlet Allocation (MLDA), which categorizes multimodal information obtained from objects. In the experiment, the proposed method showed higher word discovery performance than the baseline methods. In particular, words that expressed the characteristics of the object (i.e., words corresponding to nouns and adjectives) were segmented accurately. Furthermore, we examined how learning performance is affected by differences in the importance of linguistic information. When the weight of the word modality was increased, the performance was further improved compared to the fixed condition.

Multiagent Multimodal Categorization for Symbol Emergence: Emergent Communication via Interpersonal Cross-modal Inference

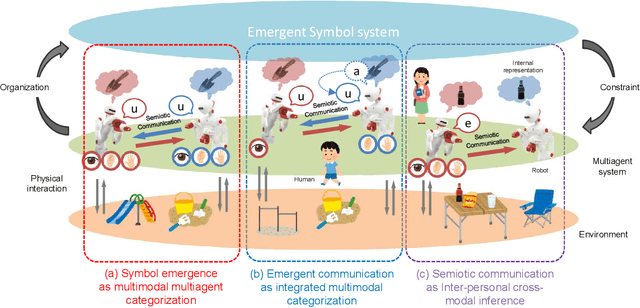

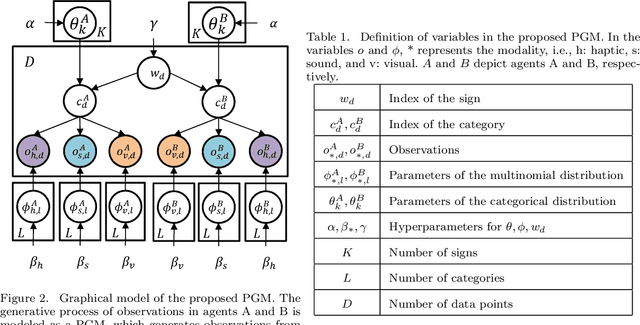

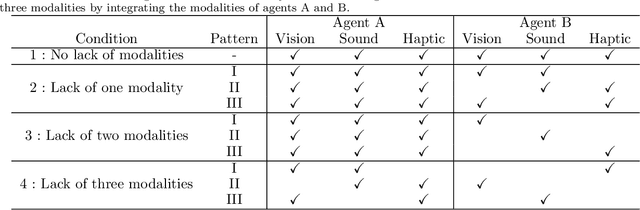

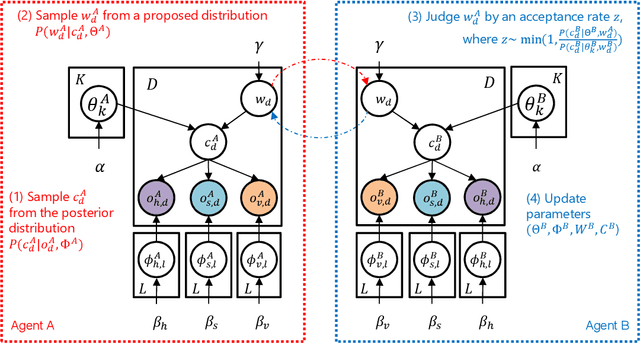

Sep 15, 2021 · Yoshinobu Hagiwara, Kazuma Furukawa, Akira Taniguchi, Tadahiro Taniguchi

This paper describes a computational model of multiagent multimodal categorization that realizes emergent communication. We clarify whether the computational model can reproduce the following functions in a symbol emergence system comprising two agents with different sensory modalities playing a naming game: (1) forming a shared lexical system that comprises perceptual categories and corresponding signs, formed by agents through individual learning and semiotic communication between agents; (2) improving the categorization accuracy of an agent via semiotic communication with another agent, even when some sensory modalities of each agent are missing; and (3) inferring unobserved sensory information based on a sign sampled from another agent, in the same manner as cross-modal inference. We propose an interpersonal multimodal Dirichlet mixture (Inter-MDM), which is derived by dividing an integrative probabilistic generative model obtained by integrating two Dirichlet mixtures (DMs). A Markov chain Monte Carlo algorithm realizes emergent communication. The experimental results demonstrated that Inter-MDM enables agents to form multimodal categories and appropriately share signs between agents. They also show that emergent communication improves categorization accuracy, even when some sensory modalities are missing. Inter-MDM enables an agent to predict unobserved information based on a shared sign.

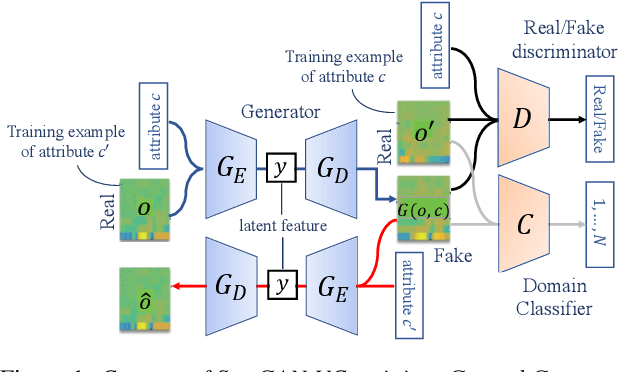

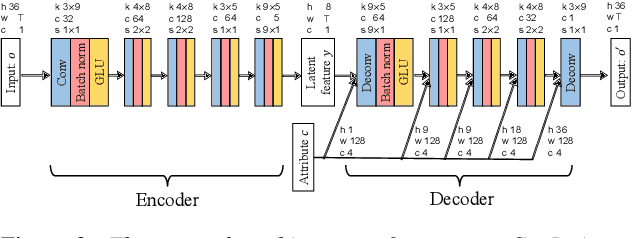

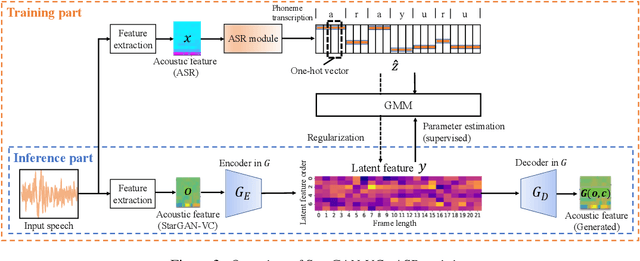

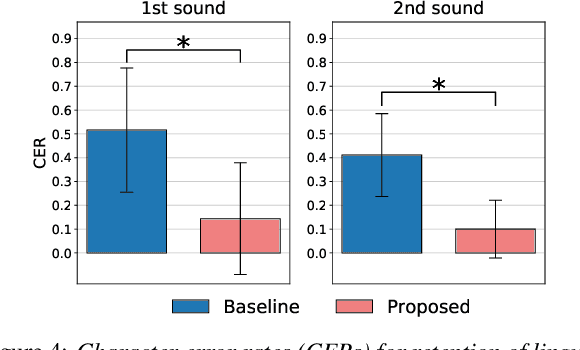

StarGAN-VC+ASR: StarGAN-based Non-Parallel Voice Conversion Regularized by Automatic Speech Recognition

Aug 10, 2021 · Shoki Sakamoto, Akira Taniguchi, Tadahiro Taniguchi, Hirokazu Kameoka

Preserving the linguistic content of input speech is essential during voice conversion (VC). The star generative adversarial network-based VC method (StarGAN-VC) is a recently developed method that allows non-parallel many-to-many VC. Although this method is powerful, it can fail to preserve the linguistic content of input speech when the number of available training samples is extremely small. To overcome this problem, we propose using automatic speech recognition to assist model training and thereby improve StarGAN-VC, especially in low-resource scenarios. Experimental results show that, with our proposed method, StarGAN-VC can retain more linguistic information than vanilla StarGAN-VC.

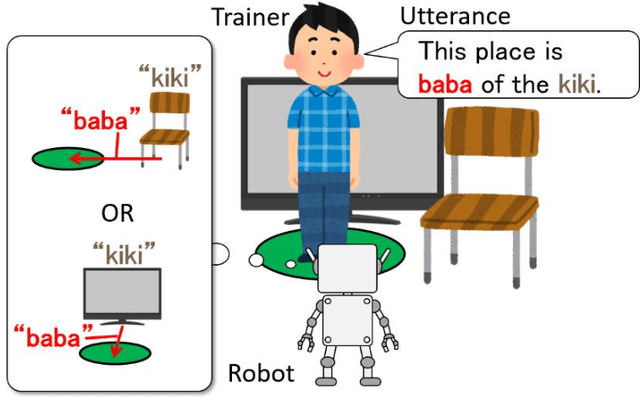

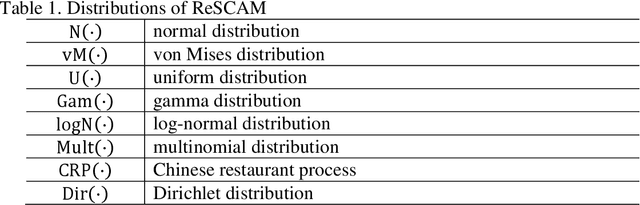

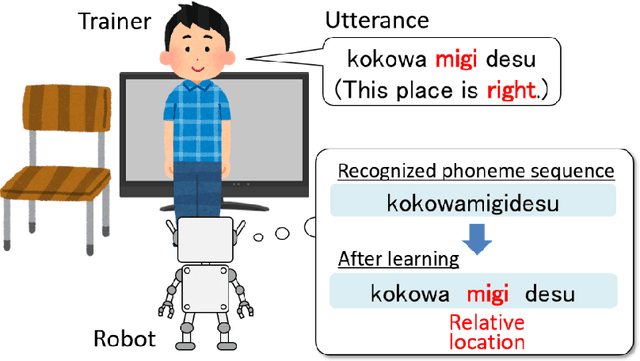

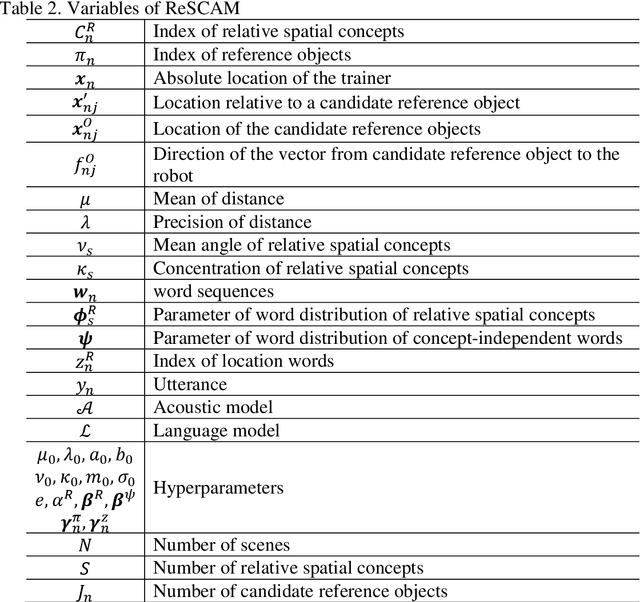

Unsupervised Lexical Acquisition of Relative Spatial Concepts Using Spoken User Utterances

Jun 16, 2021 · Rikunari Sagara, Ryo Taguchi, Akira Taniguchi, Tadahiro Taniguchi, Koosuke Hattori, Masahiro Hoguro, Taizo Umezaki

This paper proposes methods for unsupervised lexical acquisition of relative spatial concepts using spoken user utterances. A robot with a flexible spoken dialog system must be able to acquire linguistic representations and their meanings specific to an environment through interactions with humans, as children do. Specifically, relative spatial concepts (e.g., front and right) are widely used in our daily lives; however, it is not obvious which object is the reference object when a robot learns relative spatial concepts. Therefore, we propose methods by which a robot without prior knowledge of words can learn relative spatial concepts. The methods are formulated using a probabilistic model to simultaneously estimate the proper reference objects and the distributions representing concepts. The experimental results show that relative spatial concepts and a phoneme sequence representing each concept can be learned even when the robot does not know which located object is the reference object. Additionally, we show that two processes in the proposed method improve the estimation accuracy of the concepts: generating candidate word sequences by class n-gram and selecting word sequences using location information. Furthermore, we show that clues to reference objects improve accuracy even when the number of candidate reference objects increases.
