Sonali Singh

GPU Cluster Scheduling for Network-Sensitive Deep Learning

Jan 29, 2024

Abstract: We propose a novel GPU-cluster scheduler for distributed DL (DDL) workloads that enables proximity-based consolidation of GPU resources based on the DDL jobs' sensitivities to the anticipated communication-network delays. Our scheduler consists of three major components: (i) a classical delay scheduling algorithm to facilitate job placement and consolidation; (ii) a network-sensitive job preemption strategy; and (iii) an "auto-tuner" mechanism to optimize delay timers for effective delay scheduling. Additionally, to enable a cost-effective methodology for large-scale experiments, we develop a data-driven DDL cluster simulation platform. Employing the simulation platform, we compare against several state-of-the-art alternatives on real-world workload traces to demonstrate the benefits of our design. Our scheduler can provide an improvement of up to 69% in end-to-end makespan for training all jobs compared to the prevailing consolidation-based scheduling methods, while reducing the average job completion time by up to 83% and minimizing the communication overheads by up to 98% under congested networking conditions.
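As a rough illustration of the delay-scheduling idea named in component (i), the sketch below lets a job wait for a consolidated (single-node) placement until a delay timer expires, and only then accepts a placement spread across nodes. The function `place_job`, its parameters, and the best-fit and largest-first tie-breaking rules are all hypothetical choices made for this example; the abstract does not specify the paper's actual placement, preemption, or auto-tuning logic.

```python
def place_job(gpus_needed, free_gpus_per_node, waited, delay_limit):
    """Return a {node: gpu_count} placement, or None if the job should keep waiting.

    Hypothetical sketch: prefer a single node; fall back to spreading
    across nodes only once the job has waited at least `delay_limit`.
    """
    # Preferred case: some node can host the whole job (best fit = least free GPUs).
    candidates = [(free, node) for node, free in free_gpus_per_node.items()
                  if free >= gpus_needed]
    if candidates:
        _, node = min(candidates)
        return {node: gpus_needed}

    # Delay timer still running: skip this scheduling round.
    if waited < delay_limit:
        return None

    # Timer expired: accept a scattered placement if the cluster has capacity.
    if sum(free_gpus_per_node.values()) < gpus_needed:
        return None
    placement, remaining = {}, gpus_needed
    for node, free in sorted(free_gpus_per_node.items(), key=lambda kv: -kv[1]):
        if free == 0:
            continue
        take = min(free, remaining)
        placement[node] = take
        remaining -= take
        if remaining == 0:
            break
    return placement
```

In this sketch `delay_limit` plays the role of the delay timer that the abstract's "auto-tuner" would optimize; a larger value trades queueing time for a better chance of consolidation.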

Analysis of Distributed Deep Learning in the Cloud

Aug 30, 2022

Abstract: We aim to resolve this problem by introducing a comprehensive distributed deep learning (DDL) profiler, which can determine the various execution "stalls" that DDL suffers from while running on a public cloud. We have implemented the profiler by extending prior work to additionally estimate two types of communication stalls - interconnect and network stalls. We train popular DNN models using the profiler to characterize various AWS GPU instances and list their advantages and shortcomings for users to make an informed decision. We observe that the more expensive GPU instances may not be the most performant for all DNN models and AWS may sub-optimally allocate hardware interconnect resources. Specifically, the intra-machine interconnect can introduce communication overheads up to 90% of DNN training time and network-connected instances can suffer from up to 5x slowdown compared to training on a single instance. Further, we model the impact of DNN macroscopic features such as the number of layers and the number of gradients on communication stalls. Finally, we propose a measurement-based recommendation model for users to lower their public cloud monetary costs for DDL, given a time budget.

Machine Learning: Algorithms, Models, and Applications

Jan 06, 2022

Abstract: Recent times are witnessing rapid development in machine learning algorithm systems, especially in reinforcement learning, natural language processing, computer and robot vision, image processing, speech, and emotional processing and understanding. In tune with the increasing importance and relevance of machine learning models, algorithms, and their applications, and with the emergence of more innovative use cases of deep learning and artificial intelligence, the current volume presents a few innovative research works and their applications in the real world, such as stock trading, medical and healthcare systems, and software automation. The chapters in the book illustrate how machine learning and deep learning algorithms and models are designed, optimized, and deployed. The volume will be useful for advanced graduate and doctoral students, researchers, faculty members of universities, practicing data scientists and data engineers, professionals, and consultants working on the broad areas of machine learning, deep learning, and artificial intelligence.

Exploiting Activation based Gradient Output Sparsity to Accelerate Backpropagation in CNNs

Sep 16, 2021

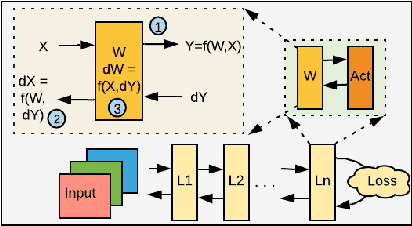

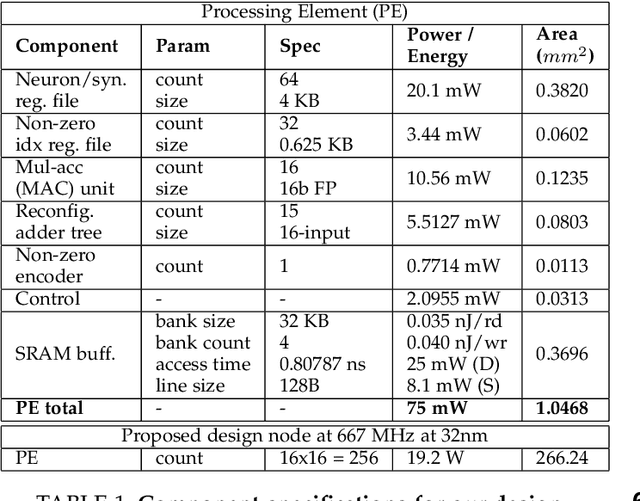

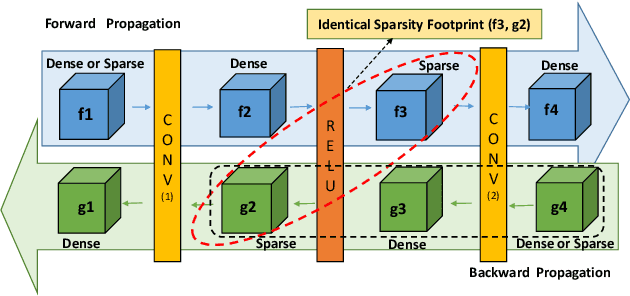

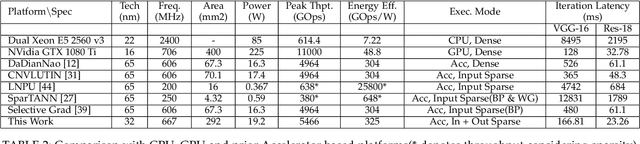

Abstract: Machine/deep-learning (ML/DL) based techniques are emerging as a driving force behind many cutting-edge technologies, achieving high accuracy on computer vision workloads such as image classification and object detection. However, training these models, which involve a large number of parameters, is both time-consuming and energy-intensive. In this regard, several prior works have advocated for sparsity to speed up DL training and, even more so, the inference phase. This work begins with the observation that during training, sparsity in the forward and backward passes is correlated. In that context, we investigate two types of sparsity (input and output type) inherent in gradient descent-based optimization algorithms and propose a hardware micro-architecture to leverage the same. Our experimental results use five state-of-the-art CNN models on the ImageNet dataset, and show backpropagation speedups in the range of 1.69$\times$ to 5.43$\times$, compared to the dense baseline execution. By exploiting sparsity in both the forward and backward passes, speedup improvements range from 1.68$\times$ to 3.30$\times$ over the sparsity-agnostic baseline execution. Our work also achieves significant reduction in training iteration time over several previously proposed dense as well as sparse accelerator-based platforms, in addition to achieving order-of-magnitude energy efficiency improvements over GPU-based execution.
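One well-known source of correlated forward and backward sparsity is the ReLU activation: wherever the forward output is zero, the gradient passed back through that unit is also zero. The sketch below shows only this general property, under the assumption that it is the kind of correlation the abstract refers to; it is not the paper's micro-architecture, and the function names are hypothetical.

```python
def relu_forward(x):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, v) for v in x]

def relu_backward(y, grad_out):
    """Gradient w.r.t. the ReLU input: passes through only where the output was positive."""
    return [g if a > 0.0 else 0.0 for a, g in zip(y, grad_out)]

def zero_positions(values):
    """Indices holding exact zeros, i.e. work a sparsity-aware engine could skip."""
    return [i for i, v in enumerate(values) if v == 0.0]

x = [-1.0, 2.0, 0.0, 3.0]
y = relu_forward(x)                           # zeros where x <= 0
grad_in = relu_backward(y, [0.5, 0.5, 0.5, 0.5])
# Every zero in the forward activation is also a zero in the backward gradient.
assert set(zero_positions(y)) <= set(zero_positions(grad_in))
```

Because the zero pattern of `grad_in` is known from the forward pass, the positions that can be skipped during backpropagation are available before the gradient is computed.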

Structured in Space, Randomized in Time: Leveraging Dropout in RNNs for Efficient Training

Jun 22, 2021

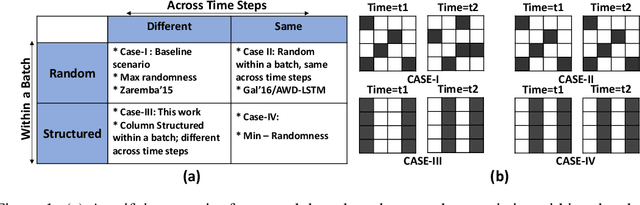

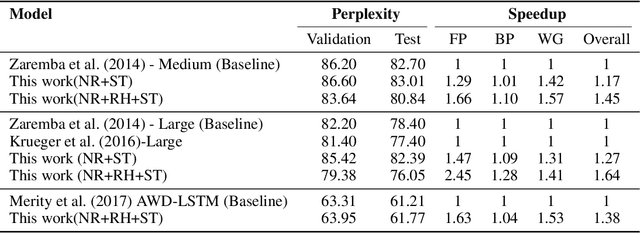

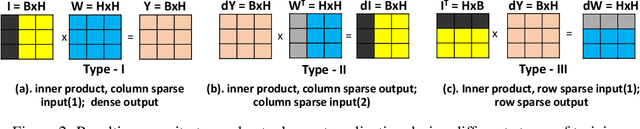

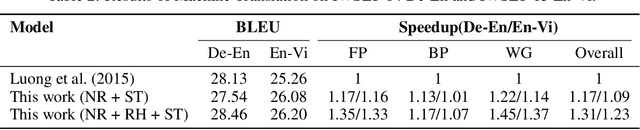

Abstract: Recurrent Neural Networks (RNNs), more specifically their Long Short-Term Memory (LSTM) variants, have been widely used as a deep learning tool for tackling sequence-based learning tasks in text and speech. Training of such LSTM applications is computationally intensive due to the recurrent nature of hidden state computation that repeats for each time step. While sparsity in Deep Neural Nets has been widely seen as an opportunity for reducing computation time in both training and inference phases, the usage of non-ReLU activation in LSTM RNNs renders the opportunities for such dynamic sparsity associated with neuron activation and gradient values to be limited or non-existent. In this work, we identify dropout-induced sparsity for LSTMs as a suitable mode of computation reduction. Dropout is a widely used regularization mechanism, which randomly drops computed neuron values during each iteration of training. We propose to structure dropout patterns, by dropping out the same set of physical neurons within a batch, resulting in column (row) level hidden state sparsity, which is well amenable to computation reduction at run-time in general-purpose SIMD hardware as well as systolic arrays. We conduct our experiments for three representative NLP tasks: language modelling on the PTB dataset, OpenNMT-based machine translation using the IWSLT De-En and En-Vi datasets, and named entity recognition sequence labelling using the CoNLL-2003 shared task. We demonstrate that our proposed approach can be used to translate dropout-based computation reduction into reduced training time, with improvement ranging from 1.23x to 1.64x, without sacrificing the target metric.
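The structured dropout idea can be sketched as follows: a single keep/drop decision is drawn per hidden unit and shared by every example in the batch, so whole columns of the hidden-state matrix become zero and the corresponding multiply-accumulate work can be skipped. This is a minimal pure-Python illustration with hypothetical function names; it omits the usual rescaling of kept activations and does not reproduce the paper's LSTM integration or hardware mapping.

```python
import random

def structured_dropout_mask(hidden_size, drop_prob, rng):
    """One keep/drop decision per hidden unit, shared across the whole batch."""
    return [rng.random() >= drop_prob for _ in range(hidden_size)]

def masked_matmul(h, w, keep):
    """Compute (h with dropped columns zeroed) @ w, touching only kept columns.

    h: batch x hidden, w: hidden x out, keep: list of bool of length hidden.
    Dropped columns of h and the matching rows of w are never read.
    """
    kept = [j for j, k in enumerate(keep) if k]
    out_dim = len(w[0])
    return [[sum(row[j] * w[j][o] for j in kept) for o in range(out_dim)]
            for row in h]

rng = random.Random(0)
keep = structured_dropout_mask(4, 0.5, rng)   # same mask for every batch row
h = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0]]
out = masked_matmul(h, w, keep)
```

With conventional per-element dropout the zeros would fall at different positions in each row, so the inner loop could not be shortened uniformly; sharing the mask across the batch is what makes the reduction regular.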
