Sinan Kalkan

KOVAN Research Lab, Dept. of Computer Engineering, Middle East Technical University, Ankara, Turkey

XoFTR: Cross-modal Feature Matching Transformer

Apr 15, 2024

Abstract:We introduce XoFTR, a cross-modal cross-view method for local feature matching between thermal infrared (TIR) and visible images. Unlike visible images, TIR images are less susceptible to adverse lighting and weather conditions but present difficulties in matching due to significant texture and intensity differences. Current hand-crafted and learning-based methods for visible-TIR matching fall short in handling viewpoint, scale, and texture diversities. To address this, XoFTR incorporates masked image modeling pre-training and fine-tuning with pseudo-thermal image augmentation to handle the modality differences. Additionally, we introduce a refined matching pipeline that adjusts for scale discrepancies and enhances match reliability through sub-pixel level refinement. To validate our approach, we collect a comprehensive visible-thermal dataset, and show that our method outperforms existing methods on many benchmarks.

RankED: Addressing Imbalance and Uncertainty in Edge Detection Using Ranking-based Losses

Mar 07, 2024

Abstract:Detecting edges in images suffers from the problems of (P1) heavy imbalance between positive and negative classes as well as (P2) label uncertainty owing to disagreement between different annotators. Existing solutions address P1 using class-balanced cross-entropy loss and dice loss and P2 by only predicting edges agreed upon by most annotators. In this paper, we propose RankED, a unified ranking-based approach that addresses both the imbalance problem (P1) and the uncertainty problem (P2). RankED tackles these two problems with two components: one ranks positive pixels over negative pixels, and the other promotes high-confidence edge pixels to have more label certainty. We show that RankED outperforms previous studies and sets a new state-of-the-art on NYUD-v2, BSDS500 and Multi-cue datasets. Code is available at https://ranked-cvpr24.github.io.
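For readers unfamiliar with the class-balanced cross-entropy baseline mentioned above, the following is a minimal sketch of one common formulation, in which each class is weighted by the frequency of the other class. It is not RankED itself. The function name `class_balanced_bce`, the flat-list input format, and the normalisation by the number of pixels are assumptions made for illustration.

```python
import math


def class_balanced_bce(probs, labels, eps=1e-7):
    """Class-balanced binary cross-entropy over a flat list of pixels.

    probs  : predicted edge probabilities in [0, 1]
    labels : 1 for edge (positive) pixels, 0 for non-edge (negative) pixels
    """
    n = len(labels)
    n_neg = n - sum(labels)
    # Weight positives by the fraction of negatives (and vice versa), so the
    # rare edge class is not swamped by the abundant background class.
    beta = n_neg / n
    loss = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        if y == 1:
            loss -= beta * math.log(p)
        else:
            loss -= (1.0 - beta) * math.log(1.0 - p)
    # Averaging over pixels is an illustrative choice, not a requirement.
    return loss / n
```

With one edge pixel among four and uniform predictions of 0.5, the single positive contributes as much to the loss as the three negatives combined, which is the balancing effect the weighting is meant to produce.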

Generalized Mask-aware IoU for Anchor Assignment for Real-time Instance Segmentation

Dec 28, 2023

Abstract:This paper introduces Generalized Mask-aware Intersection-over-Union (GmaIoU) as a new measure for positive-negative assignment of anchor boxes during training of instance segmentation methods. Unlike the conventional IoU measure or its variants, which only consider the proximity of anchor and ground-truth boxes, GmaIoU additionally takes into account the segmentation mask. This enables GmaIoU to provide more accurate supervision during training. We demonstrate the effectiveness of GmaIoU by replacing IoU with our GmaIoU in ATSS, a state-of-the-art (SOTA) assigner. Then, we train YOLACT, a real-time instance segmentation method, using our GmaIoU-based ATSS assigner. The resulting YOLACT based on the GmaIoU assigner outperforms (i) ATSS with IoU by $\sim 1.0-1.5$ mask AP and (ii) YOLACT with a fixed IoU threshold assigner by $\sim 1.5-2$ mask AP over different image sizes, and (iii) decreases the inference time by $25 \%$ owing to using fewer anchors. Taking advantage of this efficiency, we further devise GmaYOLACT, a detector that is faster than YOLACT and $+7$ mask AP points more accurate. Our best model achieves $38.7$ mask AP at $26$ fps on COCO test-dev, establishing a new state-of-the-art for real-time instance segmentation.
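As a point of reference, the conventional box IoU that the abstract contrasts GmaIoU with can be sketched as follows. This is the standard box-only measure, not GmaIoU. The `(x1, y1, x2, y2)` box format and the function name `box_iou` are assumptions for illustration.

```python
def box_iou(a, b):
    """Intersection-over-Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Clamp at zero so disjoint boxes yield an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

Note that this measure depends only on box coordinates, so it cannot distinguish an anchor that covers the object from one that covers empty background inside the same ground-truth box.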

Uncertainty-based Fairness Measures

Dec 18, 2023

Abstract:Unfair predictions of machine learning (ML) models impede their broad acceptance in real-world settings. Tackling this arduous challenge first necessitates defining what it means for an ML model to be fair. This has been addressed by the ML community with various measures of fairness that depend on the prediction outcomes of the ML models, either at the group level or the individual level. These fairness measures are limited in that they utilize point predictions, neglecting their variances, or uncertainties, making them susceptible to noise, missingness and shifts in data. In this paper, we first show that an ML model may appear to be fair with existing point-based fairness measures but biased against a demographic group in terms of prediction uncertainties. Then, we introduce new fairness measures based on different types of uncertainties, namely, aleatoric uncertainty and epistemic uncertainty. We demonstrate on many datasets that (i) our uncertainty-based measures are complementary to existing measures of fairness, and (ii) they provide more insights about the underlying issues leading to bias.

Correlation Loss: Enforcing Correlation between Classification and Localization

Jan 03, 2023

Abstract:Object detectors are conventionally trained by a weighted sum of classification and localization losses. Recent studies (e.g., predicting IoU with an auxiliary head, Generalized Focal Loss, Rank & Sort Loss) have shown that forcing these two loss terms to interact with each other in non-conventional ways creates a useful inductive bias and improves performance. Inspired by these works, we focus on the correlation between classification and localization and make two main contributions: (i) We provide an analysis about the effects of correlation between classification and localization tasks in object detectors. We identify why correlation affects the performance of various NMS-based and NMS-free detectors, and we devise measures to evaluate the effect of correlation and use them to analyze common detectors. (ii) Motivated by our observations, e.g., that NMS-free detectors can also benefit from correlation, we propose Correlation Loss, a novel plug-in loss function that improves the performance of various object detectors by directly optimizing correlation coefficients: E.g., Correlation Loss on Sparse R-CNN, an NMS-free method, yields 1.6 AP gain on COCO and 1.8 AP gain on Cityscapes dataset. Our best model on Sparse R-CNN reaches 51.0 AP without test-time augmentation on COCO test-dev, reaching state-of-the-art. Code is available at https://github.com/fehmikahraman/CorrLoss
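To make the idea of correlation between classification and localization concrete, here is a minimal sketch that measures the Pearson correlation between per-detection classification scores and their IoUs, and turns it into a penalty. The abstract only says that correlation coefficients are optimized directly. The choice of Pearson correlation, the `1 - rho` form, and the names `pearson` and `correlation_penalty` are assumptions for illustration and may differ from the released code.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # correlation is undefined for constant inputs
    return cov / math.sqrt(vx * vy)


def correlation_penalty(scores, ious):
    """Hypothetical penalty: small when scores and IoUs move together."""
    return 1.0 - pearson(scores, ious)
```

Under this sketch, detections whose confidence rises with localization quality incur a penalty near 0, while detections whose confidence falls as localization improves incur a penalty near 2.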

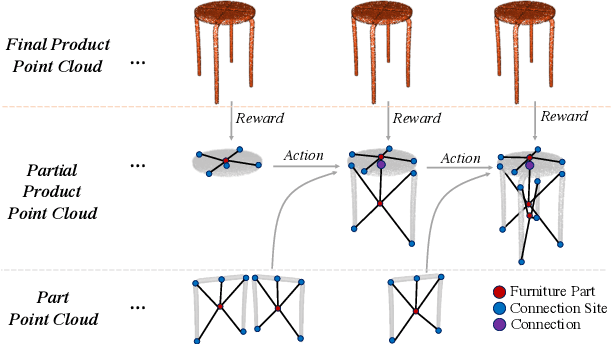

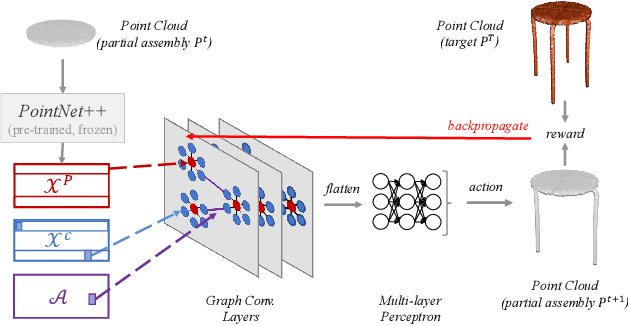

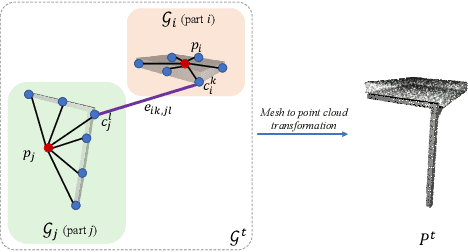

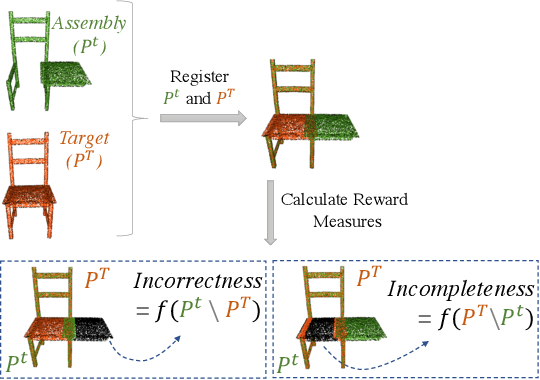

AssembleRL: Learning to Assemble Furniture from Their Point Clouds

Sep 15, 2022

Abstract:The rise of simulation environments has enabled learning-based approaches for assembly planning, which is otherwise a labor-intensive and daunting task. Assembling furniture is especially interesting since furniture is intricate and poses challenges for learning-based approaches. Surprisingly, humans can solve furniture assembly mostly given a 2D snapshot of the assembled product. Although recent years have witnessed promising learning-based approaches for furniture assembly, they assume the availability of correct connection labels for each assembly step, which are expensive to obtain in practice. In this paper, we relax this assumption and aim to solve furniture assembly with as little human expertise and supervision as possible. To be specific, we assume the availability of the assembled point cloud and, by comparing the point cloud of the current assembly with that of the target product, obtain a novel reward signal based on two measures: incorrectness and incompleteness. We show that our novel reward signal can train a deep network to successfully assemble different types of furniture. Code and networks are available here: https://github.com/METU-KALFA/AssembleRL

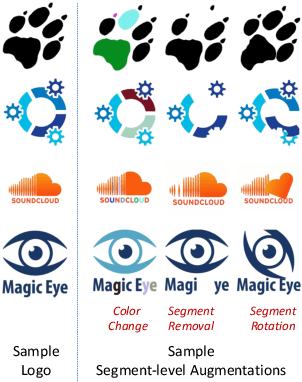

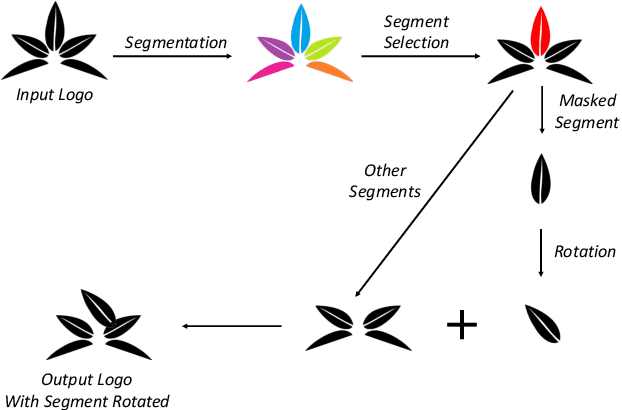

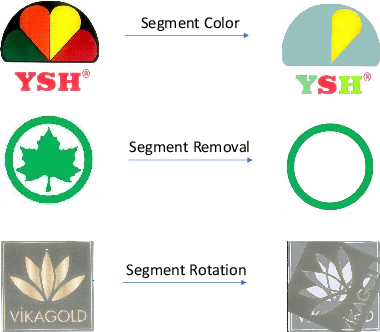

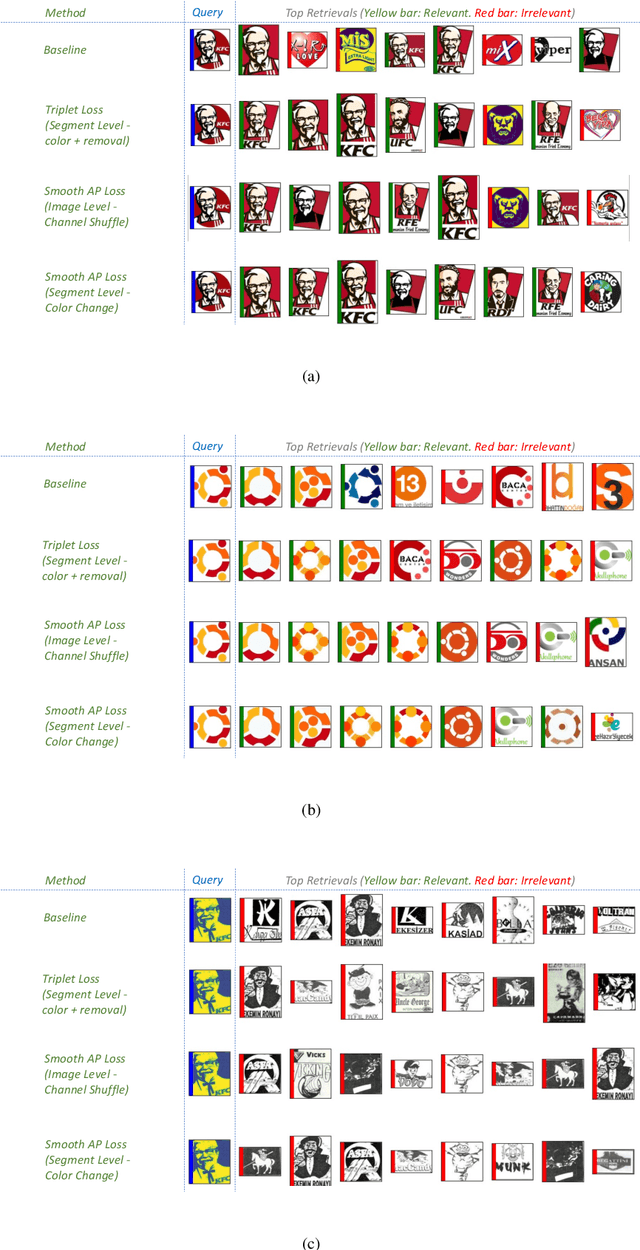

Segment Augmentation and Differentiable Ranking for Logo Retrieval

Sep 13, 2022

Abstract:Logo retrieval is a challenging problem since the definition of similarity is more subjective compared to image retrieval tasks and the set of known similarities is very scarce. To tackle this challenge, in this paper, we propose a simple but effective segment-based augmentation strategy to introduce artificially similar logos for training deep networks for logo retrieval. In this novel augmentation strategy, we first find segments in a logo and apply transformations such as rotation, scaling, and color change, on the segments, unlike the conventional image-level augmentation strategies. Moreover, we evaluate whether the recently introduced ranking-based loss function, Smooth-AP, is a better approach for learning similarity for logo retrieval. On the large scale METU Trademark Dataset, we show that (i) our segment-based augmentation strategy improves retrieval performance compared to the baseline model or image-level augmentation strategies, and (ii) Smooth-AP indeed performs better than conventional losses for logo retrieval.

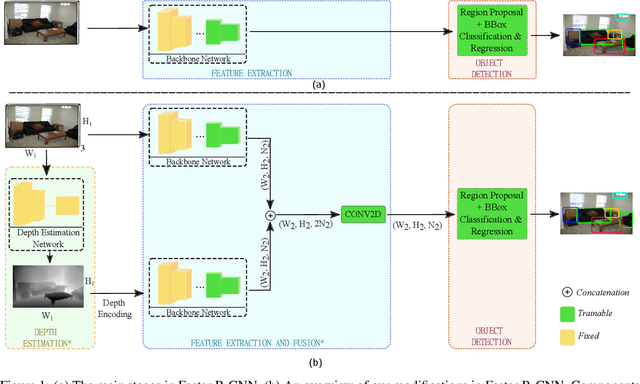

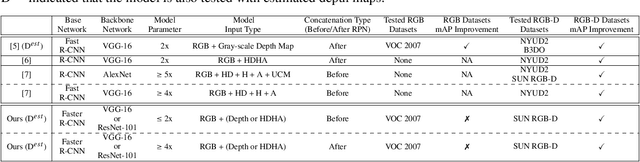

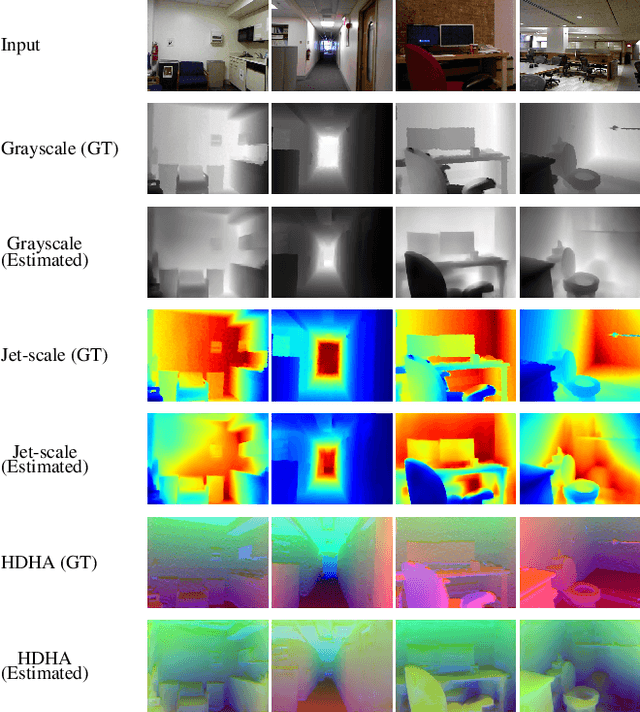

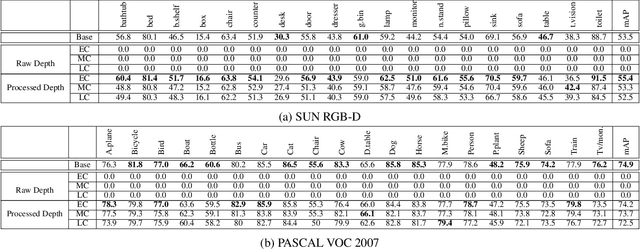

Does depth estimation help object detection?

Apr 13, 2022

Abstract:Ground-truth depth, when combined with color data, helps improve object detection accuracy over baseline models that only use color. However, estimated depth does not always yield improvements. Many factors affect the performance of object detection when estimated depth is used. In this paper, we comprehensively investigate these factors with detailed experiments, such as using ground-truth vs. estimated depth, effects of different state-of-the-art depth estimation networks, effects of using different indoor and outdoor RGB-D datasets as training data for depth estimation, and different architectural choices for integrating depth to the base object detector network. We propose an early concatenation strategy of depth, which yields higher mAP than previous works' while using significantly fewer parameters.
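One plausible reading of "early concatenation" is that the depth map is stacked onto the color image as an extra input channel before the first layer of the detector. The abstract does not spell out the details, so the sketch below is an assumption. The nested-list image format and the name `early_concat` are hypothetical and chosen only to keep the example dependency-free.

```python
def early_concat(rgb, depth):
    """Stack a depth map onto an RGB image as a fourth channel.

    rgb   : H x W nested list of (r, g, b) tuples
    depth : H x W nested list of depth values
    Returns an H x W nested list of (r, g, b, d) tuples.
    """
    same_height = len(rgb) == len(depth)
    same_widths = all(len(r) == len(d) for r, d in zip(rgb, depth))
    if not (same_height and same_widths):
        raise ValueError("rgb and depth must have the same spatial size")
    return [
        [(*pixel, d) for pixel, d in zip(rgb_row, depth_row)]
        for rgb_row, depth_row in zip(rgb, depth)
    ]
```

In a real pipeline the same operation would typically be a channel-wise concatenation of tensors, with the first convolution widened from three to four input channels.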

Mask-aware IoU for Anchor Assignment in Real-time Instance Segmentation

Oct 19, 2021

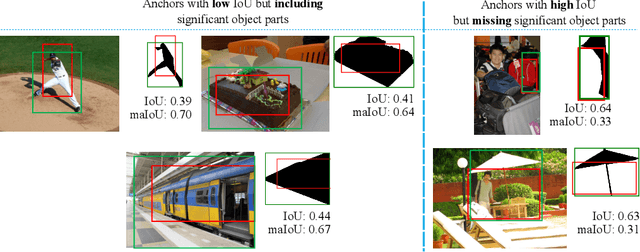

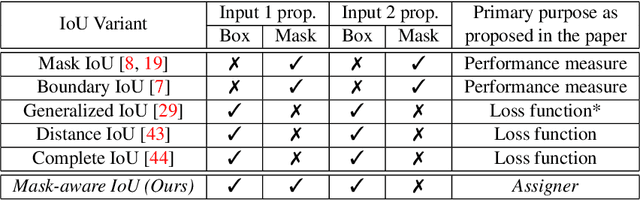

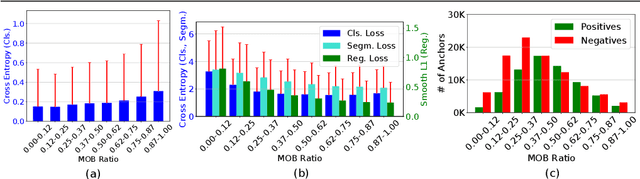

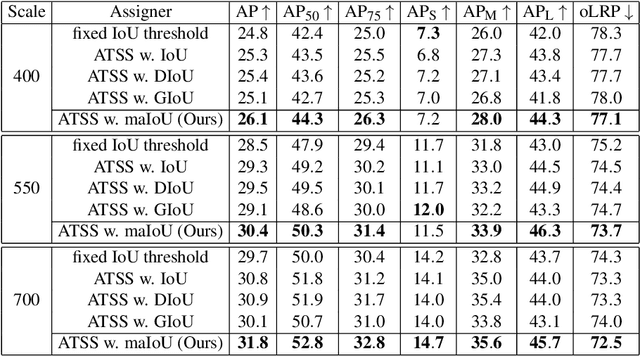

Abstract:This paper presents Mask-aware Intersection-over-Union (maIoU) for assigning anchor boxes as positives and negatives during training of instance segmentation methods. Unlike conventional IoU or its variants, which only consider the proximity of two boxes, maIoU consistently measures the proximity of an anchor box with not only a ground truth box but also its associated ground truth mask. Thus, by additionally considering the mask, which in fact represents the shape of the object, maIoU enables more accurate supervision during training. We demonstrate the effectiveness of maIoU on a state-of-the-art (SOTA) assigner, ATSS, by replacing the IoU operation with our maIoU and training YOLACT, a SOTA real-time instance segmentation method. Using ATSS with maIoU consistently outperforms (i) ATSS with IoU by $\sim 1$ mask AP and (ii) baseline YOLACT with a fixed IoU threshold assigner by $\sim 2$ mask AP over different image sizes, and (iii) decreases the inference time by $25 \%$ owing to using fewer anchors. Then, exploiting this efficiency, we devise maYOLACT, a detector that is faster than YOLACT and $+6$ AP more accurate. Our best model achieves $37.7$ mask AP at $25$ fps on COCO test-dev, establishing a new state-of-the-art for real-time instance segmentation. Code is available at https://github.com/kemaloksuz/Mask-aware-IoU
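The motivation for looking at the mask can be illustrated with a toy case in which two anchors have the same box IoU with the ground-truth box but cover very different amounts of the object. The sketch below is not the maIoU definition. The helper `mask_coverage`, the binary nested-list mask, and the integer pixel-grid box convention are assumptions for illustration only.

```python
def box_iou(a, b):
    """Conventional IoU of two axis-aligned boxes (x1, y1, x2, y2)."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mask_coverage(box, mask):
    """Fraction of foreground mask pixels that fall inside the box.

    Pixel (row, col) counts as inside when x1 <= col < x2 and y1 <= row < y2.
    """
    x1, y1, x2, y2 = box
    total = sum(sum(row) for row in mask)
    inside = sum(
        value
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if x1 <= c < x2 and y1 <= r < y2
    )
    return inside / total if total > 0 else 0.0


# Toy ground truth: a 4x4 box whose object occupies only the left half.
gt_box = (0, 0, 4, 4)
gt_mask = [[1, 1, 0, 0] for _ in range(4)]
left_anchor = (0, 0, 2, 4)   # lies on the object
right_anchor = (2, 0, 4, 4)  # lies on background inside the ground-truth box
```

Here both anchors have a box IoU of 0.5 with `gt_box`, yet `left_anchor` covers the whole object and `right_anchor` covers none of it, which is the kind of difference a box-only measure cannot see.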

Rank & Sort Loss for Object Detection and Instance Segmentation

Jul 24, 2021

Abstract:We propose Rank & Sort (RS) Loss, as a ranking-based loss function to train deep object detection and instance segmentation methods (i.e. visual detectors). RS Loss supervises the classifier, a sub-network of these methods, to rank each positive above all negatives as well as to sort positives among themselves with respect to (wrt.) their continuous localisation qualities (e.g. Intersection-over-Union - IoU). To tackle the non-differentiable nature of ranking and sorting, we reformulate the incorporation of error-driven update with backpropagation as Identity Update, which enables us to model our novel sorting error among positives. With RS Loss, we significantly simplify training: (i) Thanks to our sorting objective, the positives are prioritized by the classifier without an additional auxiliary head (e.g. for centerness, IoU, mask-IoU), (ii) due to its ranking-based nature, RS Loss is robust to class imbalance, and thus, no sampling heuristic is required, and (iii) we address the multi-task nature of visual detectors using tuning-free task-balancing coefficients. Using RS Loss, we train seven diverse visual detectors only by tuning the learning rate, and show that it consistently outperforms baselines: e.g. our RS Loss improves (i) Faster R-CNN by ~ 3 box AP and aLRP Loss (ranking-based baseline) by ~ 2 box AP on COCO dataset, (ii) Mask R-CNN with repeat factor sampling (RFS) by 3.5 mask AP (~ 7 AP for rare classes) on LVIS dataset; and also outperforms all counterparts. Code available at https://github.com/kemaloksuz/RankSortLoss
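The two objectives described above, ranking each positive above all negatives and sorting positives by localisation quality, can be made concrete by counting how often a set of scores violates them. The sketch below only counts violations with hard comparisons. It is not the RS Loss formulation and has no gradient. The function names and input format are assumptions for illustration.

```python
def ranking_violations(scores, labels):
    """Count (positive, negative) pairs where the negative scores at least as high."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    return sum(1 for p in pos for n in neg if n >= p)


def sorting_violations(pos_scores, pos_ious):
    """Count pairs of positives where the better-localised one scores lower."""
    pairs = list(zip(pos_scores, pos_ious))
    return sum(
        1
        for i, (score_i, iou_i) in enumerate(pairs)
        for score_j, iou_j in pairs[i + 1:]
        # A violation occurs when score order and IoU order disagree.
        if (iou_i - iou_j) * (score_i - score_j) < 0
    )
```

A classifier that satisfies both objectives would yield zero for both counts. For example, scores `[0.9, 0.8, 0.3, 0.1]` with labels `[1, 0, 1, 0]` contain one ranking violation, because the negative scored 0.8 outranks the positive scored 0.3.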
