Shuangsang Fang

Open World Knowledge Aided Single-Cell Foundation Model with Robust Cross-Modal Cell-Language Pre-training

Jan 09, 2026

Abstract: Recent advancements in single-cell multi-omics, particularly RNA-seq, have provided profound insights into cellular heterogeneity and gene regulation. While single-cell foundation models built on the pre-trained language model (PLM) paradigm have shown promise, they remain constrained by insufficient integration of in-depth individual profiles and by their neglect of noise within multi-modal data. To address both issues, we propose an Open-world Language Knowledge-Aided Robust Single-Cell Foundation Model (OKR-CELL). It is built on a cross-modal Cell-Language pre-training framework with two key innovations: (1) a Large Language Model (LLM) based workflow with retrieval-augmented generation (RAG) that enriches cell textual descriptions using open-world knowledge; and (2) a Cross-modal Robust Alignment (CRA) objective that incorporates sample reliability assessment, curriculum learning, and coupled momentum contrastive learning to strengthen the model's resistance to noisy data. After pretraining on 32M cell-text pairs, OKR-CELL obtains cutting-edge results across 6 evaluation tasks. Beyond standard benchmarks such as cell clustering, cell-type annotation, batch-effect correction, and few-shot annotation, the model also demonstrates superior performance in broader multi-modal applications, including zero-shot cell-type annotation and bidirectional cell-text retrieval.

Implementation of Training Convolutional Neural Networks

Jun 04, 2015

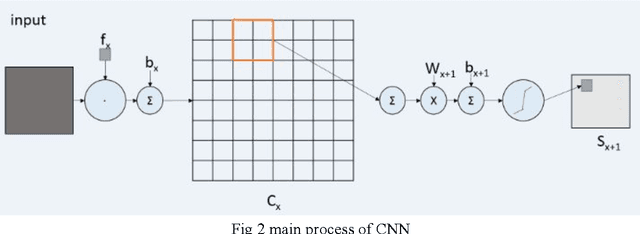

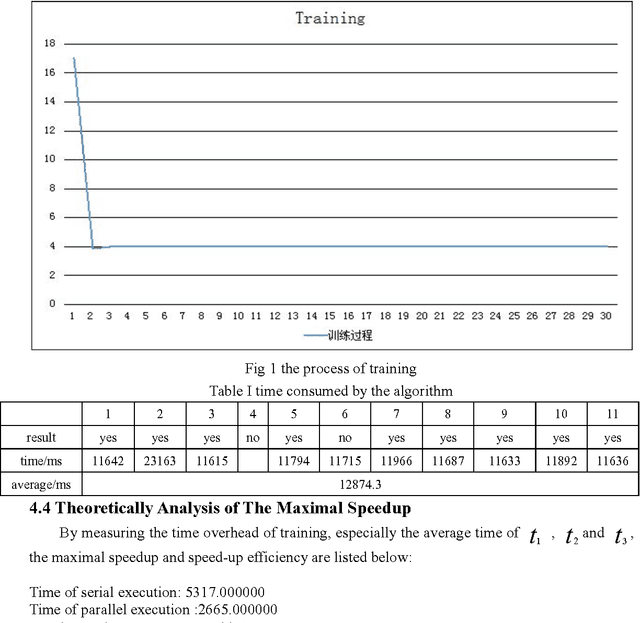

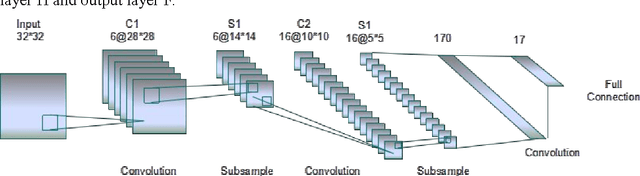

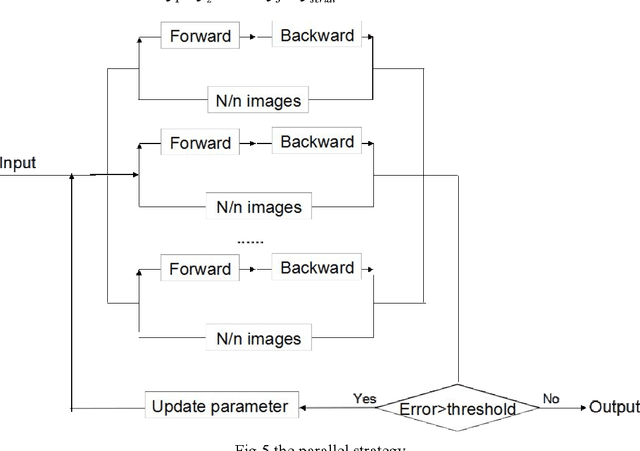

Abstract: Deep learning is a branch of machine learning based on learning multiple levels of representation. The Convolutional Neural Network (CNN) is one kind of deep neural network, and it can be trained concurrently. In this article, we give a detailed analysis of the CNN algorithm, covering both the forward pass and backpropagation. We then apply a particular convolutional neural network to a typical face recognition problem, implemented in Java. A parallel strategy is proposed in Section 4. In addition, by measuring the actual time of forward and backward computation, we theoretically analyse the maximal speedup and parallel efficiency.
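The abstract does not state which formulas the authors use for speedup and parallel efficiency. As a rough sketch, assuming the standard textbook definitions (speedup as serial time over parallel time, efficiency as speedup per worker, and Amdahl's law for the upper bound), the quantities could be computed as follows. The function names and the sample timings are hypothetical and are not taken from the paper.

```python
def speedup(serial_seconds: float, parallel_seconds: float) -> float:
    """Standard speedup: time on one worker divided by time on p workers."""
    return serial_seconds / parallel_seconds


def parallel_efficiency(serial_seconds: float, parallel_seconds: float,
                        workers: int) -> float:
    """Standard parallel efficiency: speedup divided by the worker count."""
    return speedup(serial_seconds, parallel_seconds) / workers


def amdahl_max_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: upper bound on speedup when only a fraction of the
    work (parallel_fraction, between 0 and 1) can run in parallel."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / workers)


if __name__ == "__main__":
    # Hypothetical timings for one forward + backward pass (not measured data).
    t_serial, t_parallel, p = 8.0, 2.5, 4
    print("speedup:", speedup(t_serial, t_parallel))
    print("efficiency:", parallel_efficiency(t_serial, t_parallel, p))
    print("Amdahl bound (90% parallel):", amdahl_max_speedup(0.9, p))
```

Under these definitions, measured forward and backward times give the parallelizable fraction, and the Amdahl bound then indicates how much speedup adding workers could yield at best.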
