Samuel Kaski

Department of Computer Science, Aalto University; Department of Computer Science, University of Manchester

Differentially Private Bayesian Inference for Generalized Linear Models

Nov 09, 2020

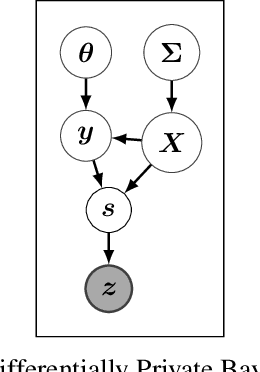

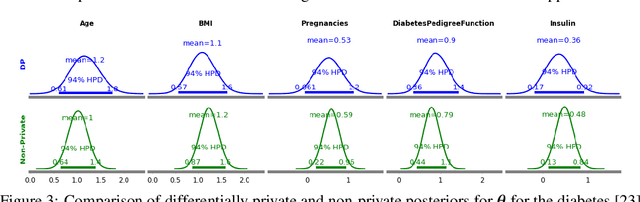

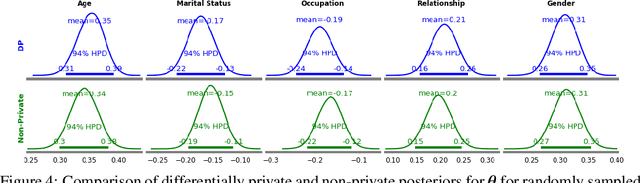

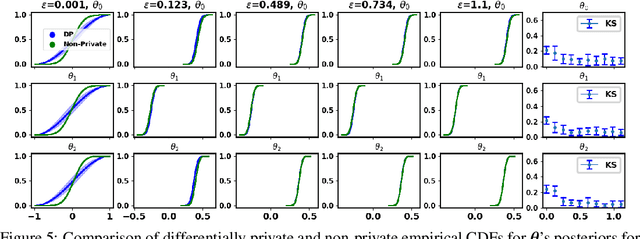

Abstract: The framework of differential privacy (DP) upper bounds the information disclosure risk involved in using sensitive datasets for statistical analysis. A DP mechanism typically operates by adding carefully calibrated noise to the data release procedure. Generalized linear models (GLMs) are among the most widely used tools in a data analyst's repertoire. In this work, with logistic and Poisson regression as running examples, we propose a generic noise-aware Bayesian framework to quantify the parameter uncertainty for a GLM at hand, given noisy sufficient statistics. We perform a tight privacy analysis and experimentally demonstrate that the posteriors obtained from our model, while adhering to strong privacy guarantees, are similar to the non-private posteriors.
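To make "adding carefully calibrated noise" to sufficient statistics concrete, here is a minimal sketch of the classical Gaussian mechanism applied to a clipped sum. It is an illustration only, not the mechanism or the privacy analysis used in the paper: the helper names (`gaussian_sigma`, `clip`, `noisy_sum`), the clipping bound, the add/remove neighbourhood relation, and the toy data are all assumptions made for the example.

```python
import math
import random


def gaussian_sigma(sensitivity, epsilon, delta):
    """Noise scale of the classical Gaussian mechanism (bound holds for 0 < epsilon < 1)."""
    if not 0 < epsilon < 1:
        raise ValueError("the classical bound assumes 0 < epsilon < 1")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def clip(row, bound):
    """Scale a row so that its L2 norm is at most `bound`."""
    norm = math.sqrt(sum(v * v for v in row))
    if norm <= bound:
        return list(row)
    return [v * bound / norm for v in row]


def noisy_sum(rows, bound, epsilon, delta, rng):
    """Release the clipped column sums perturbed with Gaussian noise."""
    clipped = [clip(r, bound) for r in rows]
    totals = [sum(col) for col in zip(*clipped)]
    # Assumption: neighbouring datasets differ by adding or removing one row,
    # so the L2 sensitivity of the clipped sum is at most `bound`.
    sigma = gaussian_sigma(bound, epsilon, delta)
    return [t + rng.gauss(0.0, sigma) for t in totals], sigma


# Toy data (hypothetical): 1000 two-dimensional records.
rng = random.Random(0)
rows = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(1000)]
release, sigma = noisy_sum(rows, bound=1.0, epsilon=0.5, delta=1e-5, rng=rng)
print(release, sigma)
```

A noise-aware model of the kind the abstract describes would treat `release` as a noisy observation of the true sum with known noise scale `sigma`, rather than plugging it in as if it were exact.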

Sample-efficient reinforcement learning using deep Gaussian processes

Nov 02, 2020

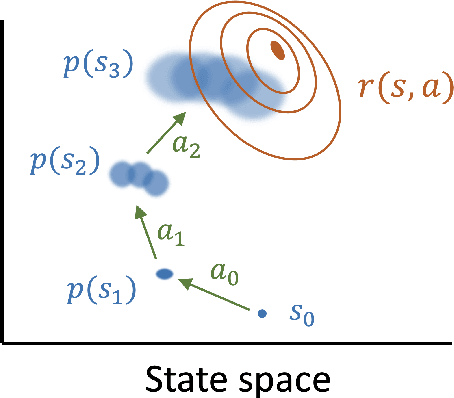

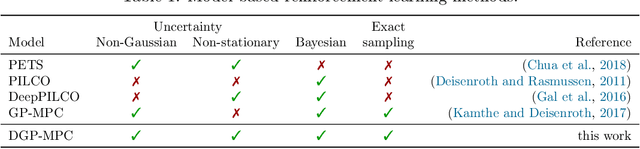

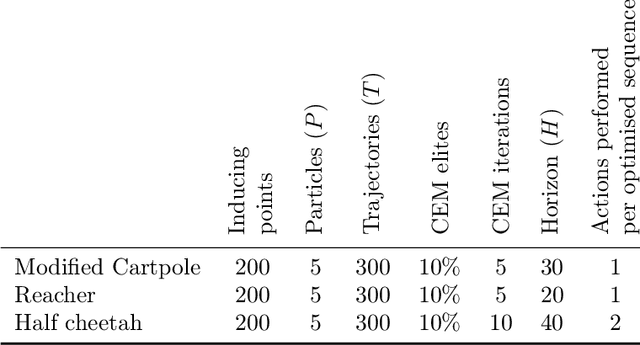

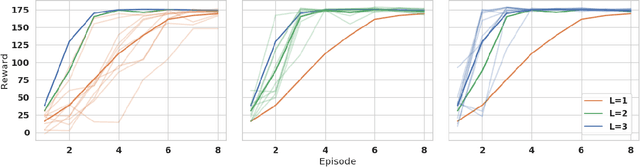

Abstract: Reinforcement learning provides a framework for learning, through trial and error, which actions to take towards completing a task. In many applications observing interactions is costly, necessitating sample-efficient learning. In model-based reinforcement learning, efficiency is improved by learning to simulate the world dynamics. The challenge is that model inaccuracies rapidly accumulate over planned trajectories. We introduce deep Gaussian processes, where the depth of the compositions introduces model complexity while incorporating prior knowledge on the dynamics brings smoothness and structure. Our approach is able to sample a Bayesian posterior over trajectories. We demonstrate highly improved early sample-efficiency over competing methods. This is shown across a number of continuous control tasks, including the half-cheetah, whose contact dynamics have previously posed an insurmountable problem for sample-efficient Gaussian-process-based models.

Scalable Bayesian neural networks by layer-wise input augmentation

Oct 26, 2020

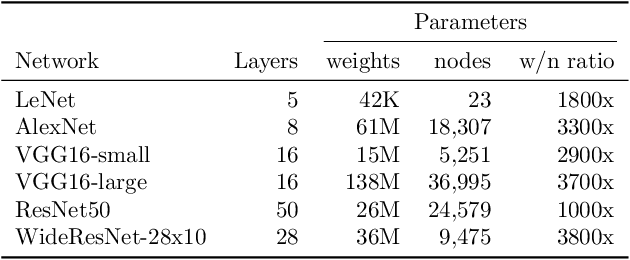

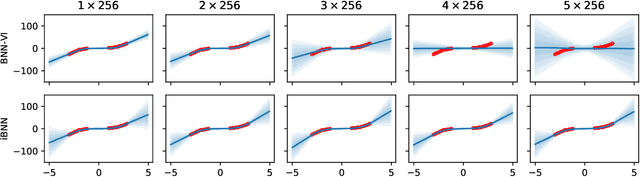

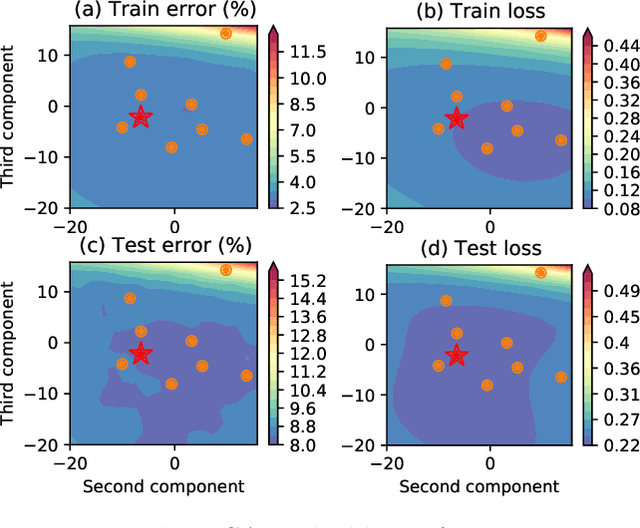

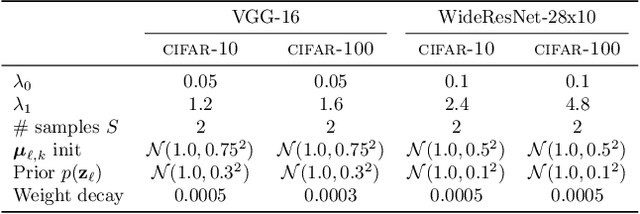

Abstract: We introduce implicit Bayesian neural networks, a simple and scalable approach for uncertainty representation in deep learning. The standard Bayesian approach to deep learning requires the impractical inference of the posterior distribution over millions of parameters. Instead, we propose to induce a distribution that captures the uncertainty over neural networks by augmenting each layer's inputs with latent variables. We present appropriate input distributions and demonstrate state-of-the-art performance in terms of calibration, robustness and uncertainty characterisation over large-scale, multi-million parameter image classification tasks.

Rethinking pooling in graph neural networks

Oct 22, 2020

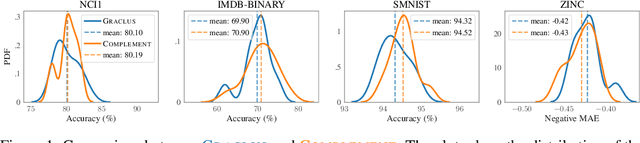

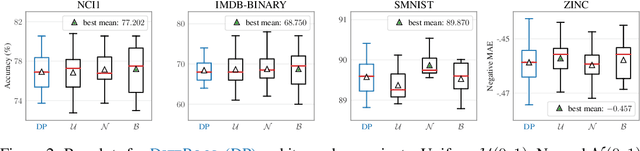

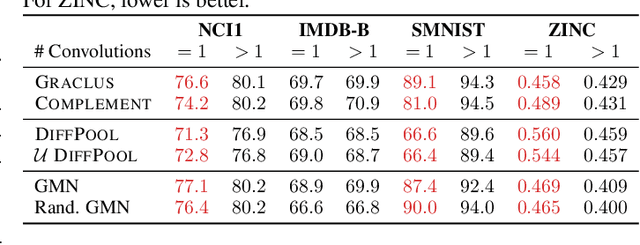

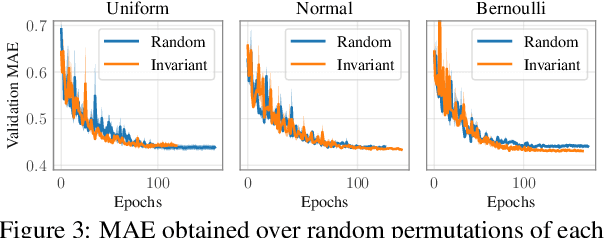

Abstract: Graph pooling is a central component of a myriad of graph neural network (GNN) architectures. As an inheritance from traditional CNNs, most approaches formulate graph pooling as a cluster assignment problem, extending the idea of local patches in regular grids to graphs. Despite the wide adherence to this design choice, no work has rigorously evaluated its influence on the success of GNNs. In this paper, we build upon representative GNNs and introduce variants that challenge the need for locality-preserving representations, either using randomization or clustering on the complement graph. Strikingly, our experiments demonstrate that using these variants does not result in any decrease in performance. To understand this phenomenon, we study the interplay between convolutional layers and the subsequent pooling ones. We show that the convolutions play a leading role in the learned representations. Contrary to common belief, local pooling is not responsible for the success of GNNs on relevant and widely used benchmarks.

Bayesian Inference for Optimal Transport with Stochastic Cost

Oct 19, 2020

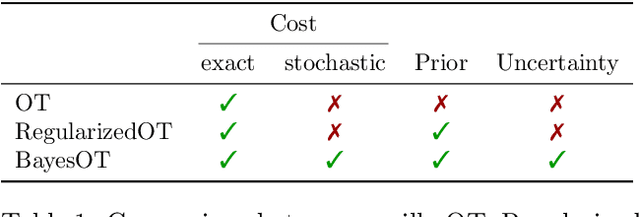

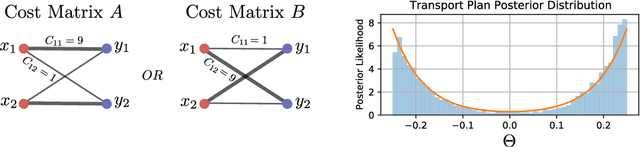

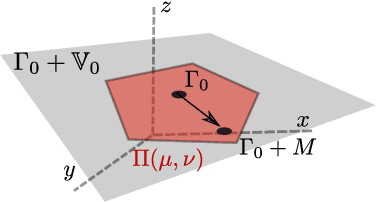

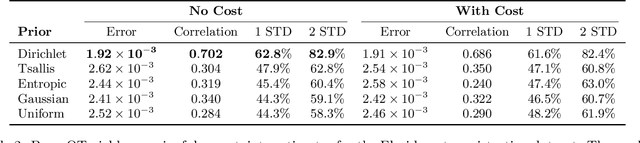

Abstract: In machine learning and computer vision, optimal transport has had significant success in learning generative models and defining metric distances between structured and stochastic data objects that can be cast as probability measures. The key element of optimal transport is the so-called lifting of an *exact* cost (distance) function, defined on the sample space, to a cost (distance) between probability measures over the sample space. However, in many real-life applications the cost is *stochastic*: e.g., unpredictable traffic flow affects the cost of transportation between a factory and an outlet. To take this stochasticity into account, we introduce a Bayesian framework for inferring the optimal transport plan distribution induced by the stochastic cost, allowing for a principled way to include prior information and to model the induced stochasticity on the transport plans. Additionally, we tailor an HMC method to sample from the resulting transport plan posterior distribution.

Privacy-preserving Data Sharing on Vertically Partitioned Data

Oct 19, 2020

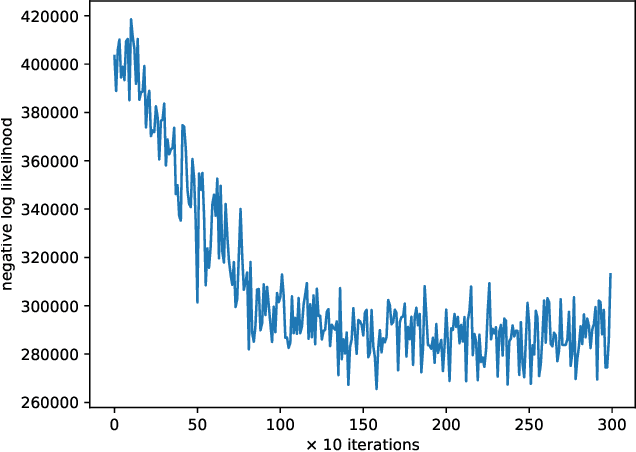

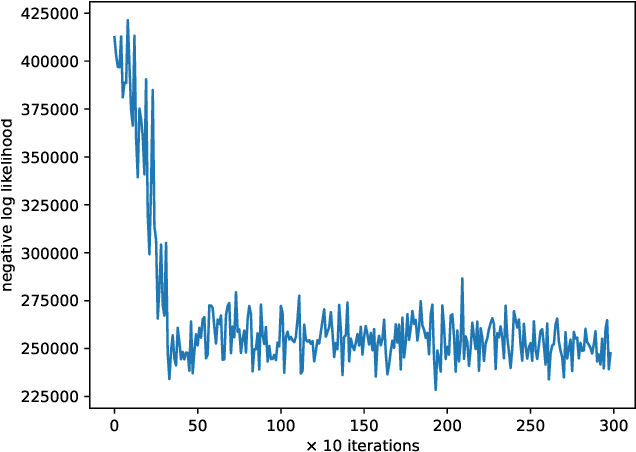

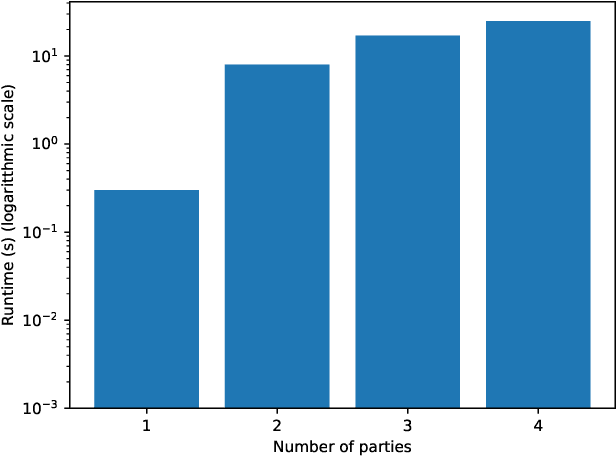

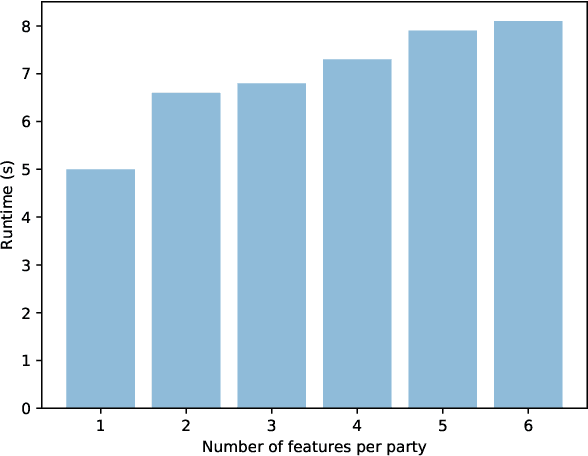

Abstract: In this work, we present a method for differentially private data sharing by training a mixture model on vertically partitioned data, where each party holds different features for the same set of individuals. We use secure multi-party computation (MPC) to combine the contributions of the parties' data to train the model. We apply differentially private variational inference (DPVI) to learn the model. Assuming the mixture components contain no dependencies across different parties, the objective function can be factorized into a sum of products of individual components of each party. Therefore, each party can calculate its shares on its own without the use of MPC. MPC is then needed only to compute the product of the different shares and to add the noise. Applying the method to demographic data from the US Census, we obtain accuracy comparable to the non-partitioned case with an approximately 20-fold increase in computing time.
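The "sum of products" factorization can be illustrated with a plain mixture likelihood. The sketch below is only an illustration under stated assumptions: it uses unit-variance Gaussian components, evaluates a likelihood rather than the DPVI objective, and leaves out both MPC and the DP noise. The names `party_shares` and `combine`, the two parties, and all numbers are hypothetical.

```python
import math


def party_shares(values, means, std=1.0):
    """One party's share: its local Gaussian density under each mixture component."""
    shares = []
    for mu in means:
        dens = 1.0
        for v, m in zip(values, mu):
            dens *= math.exp(-0.5 * ((v - m) / std) ** 2) / (std * math.sqrt(2 * math.pi))
        shares.append(dens)
    return shares


def combine(weights, shares_per_party):
    """Sum over components of the weighted product of the parties' shares."""
    total = 0.0
    for k, w in enumerate(weights):
        prod = w
        for shares in shares_per_party:
            prod *= shares[k]
        total += prod
    return total


# Two mixture components; party A holds one feature, party B holds two.
weights = [0.3, 0.7]
means_a = [[0.0], [2.0]]
means_b = [[1.0, -1.0], [0.0, 3.0]]
x_a, x_b = [0.5], [0.8, 0.1]

# Each party computes its shares locally; only the combination step needs both.
factored = combine(weights, [party_shares(x_a, means_a), party_shares(x_b, means_b)])

# Reference: the same likelihood computed on the pooled (non-partitioned) record.
pooled_means = [a + b for a, b in zip(means_a, means_b)]
joint = combine(weights, [party_shares(x_a + x_b, pooled_means)])
print(factored, joint)
```

In this toy setting the factored and pooled values agree up to floating-point error, which is the property that lets each party work on its own features until the final combination step.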

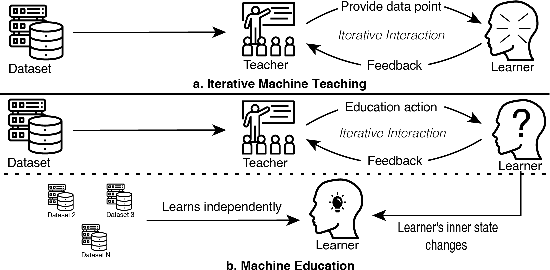

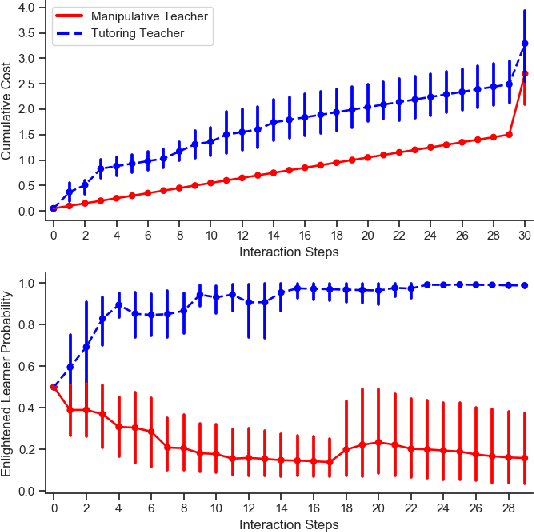

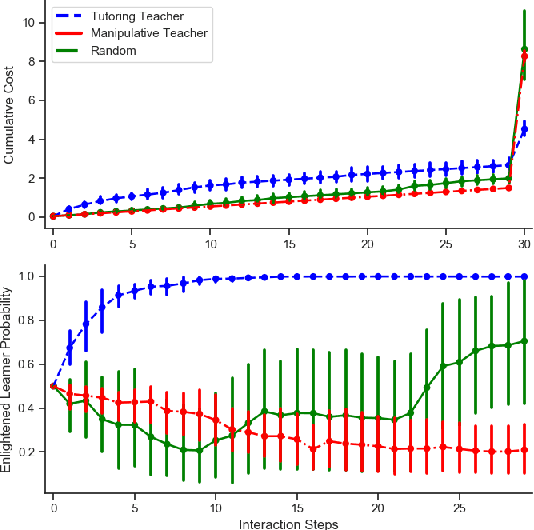

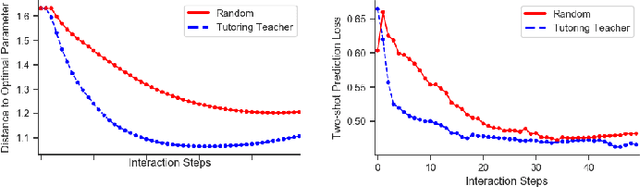

Teaching to Learn: Sequential Teaching of Agents with Inner States

Sep 14, 2020

Abstract: In sequential machine teaching, a teacher's objective is to provide the optimal sequence of inputs to sequential learners in order to guide them towards the best model. In this paper we extend this setting from current static one-data-set analyses to learners which change their learning algorithm or latent state to improve during learning, and to generalize to new datasets. We introduce a multi-agent formulation in which learners' inner state may change with the teaching interaction, which affects the learning performance in future tasks. In order to teach such learners, we propose an optimal control approach that takes the future performance of the learner after teaching into account. This provides tools for modelling learners having inner states, and machine teaching of meta-learning algorithms. Furthermore, we distinguish manipulative teaching, which can be done by effectively hiding data and can also be used for indoctrination, from more general education, which aims to help the learner become better at generalization and learning in new datasets in the absence of a teacher.

Differentially private cross-silo federated learning

Jul 10, 2020

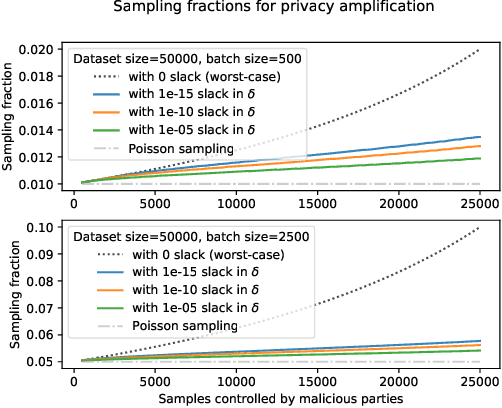

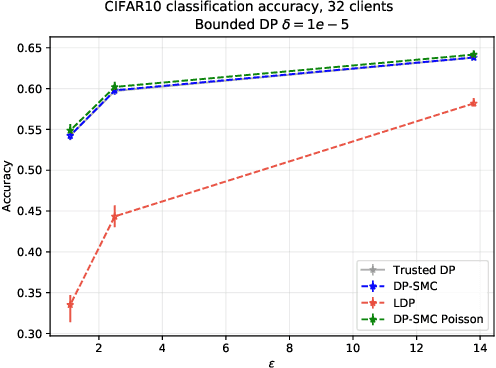

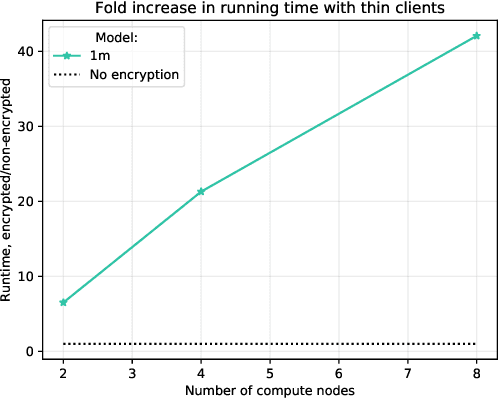

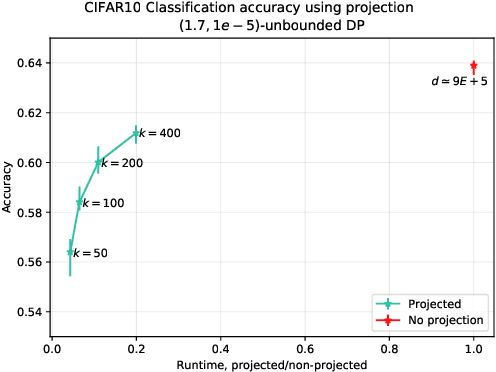

Abstract: Strict privacy is of paramount importance in distributed machine learning. Federated learning, with the main idea of communicating only what is needed for learning, has been recently introduced as a general approach for distributed learning to enhance learning and improve security. However, federated learning by itself does not guarantee any privacy for data subjects. To quantify and control how much privacy is compromised in the worst case, we can use differential privacy. In this paper we combine additively homomorphic secure summation protocols with differential privacy in the so-called cross-silo federated learning setting. The goal is to learn complex models like neural networks while guaranteeing strict privacy for the individual data subjects. We demonstrate that our proposed solutions give prediction accuracy that is comparable to the non-distributed setting, and are fast enough to enable learning models with millions of parameters in a reasonable time. To enable learning under strict privacy guarantees that need privacy amplification by subsampling, we present a general algorithm for oblivious distributed subsampling. However, we also argue that when malicious parties are present, a simple approach using distributed Poisson subsampling gives better privacy. Finally, we show that by leveraging random projections we can further scale up our approach to larger models while suffering only a modest performance loss.
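As a rough illustration of distributed Poisson subsampling, the sketch below lets every party include each of its own records independently with probability `q`, so no coordination between parties is needed to draw a batch. This is a generic sketch, not the paper's protocol: the function names, the party names, and the seeded generators (used here only for reproducibility; real parties would use private randomness) are assumptions.

```python
import random


def poisson_subsample(n_records, q, rng):
    """Include each record index independently with probability q."""
    return [i for i in range(n_records) if rng.random() < q]


def distributed_poisson_subsample(party_sizes, q, rngs):
    """Each party samples its own records with its own random generator."""
    return {
        party: poisson_subsample(size, q, rngs[party])
        for party, size in party_sizes.items()
    }


# Hypothetical silos and sizes.
party_sizes = {"silo_a": 1000, "silo_b": 500}
rngs = {party: random.Random(seed) for seed, party in enumerate(party_sizes)}
batches = distributed_poisson_subsample(party_sizes, q=0.01, rngs=rngs)
print({party: len(idx) for party, idx in batches.items()})
```

Note that the batch size is random under this scheme: with sampling probability `q`, each party contributes `q` times its number of records in expectation, and a batch may occasionally be empty.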

Likelihood-Free Inference with Deep Gaussian Processes

Jun 18, 2020

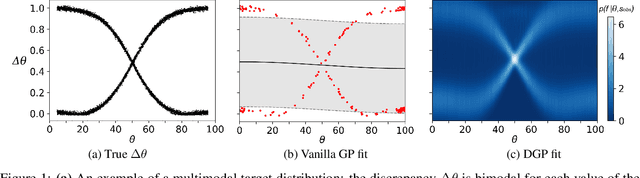

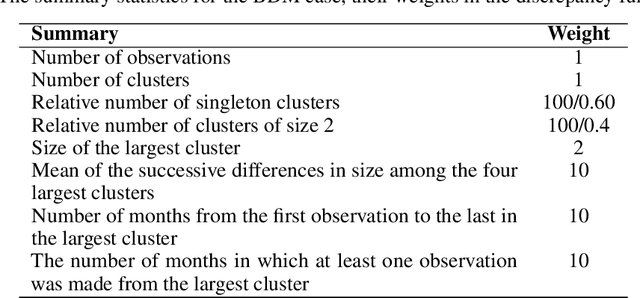

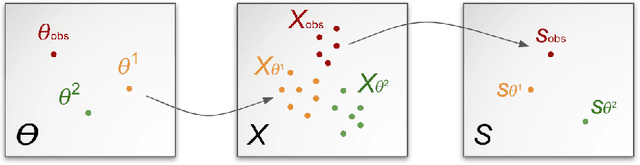

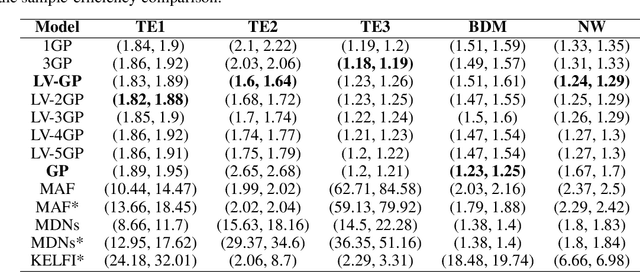

Abstract: In recent years, surrogate models have been successfully used in likelihood-free inference to decrease the number of simulator evaluations. The current state-of-the-art performance for this task has been achieved by Bayesian Optimization with Gaussian Processes (GPs). While this combination works well for unimodal target distributions, it restricts the flexibility and applicability of Bayesian Optimization for accelerating likelihood-free inference more generally. We address this problem by proposing a Deep Gaussian Process (DGP) surrogate model that can handle more irregularly behaved target distributions. Our experiments show how DGPs can outperform GPs on objective functions with multimodal distributions and maintain a comparable performance in unimodal cases. This confirms that DGPs as surrogate models can extend the applicability of Bayesian Optimization for likelihood-free inference (BOLFI), while adding computational overhead that remains negligible for computationally intensive simulators.

Human Strategic Steering Improves Performance of Interactive Optimization

May 04, 2020

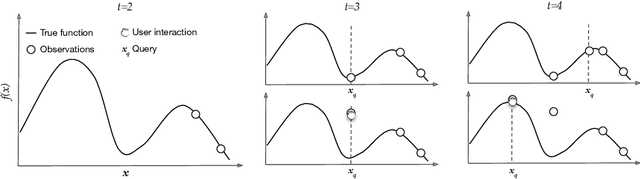

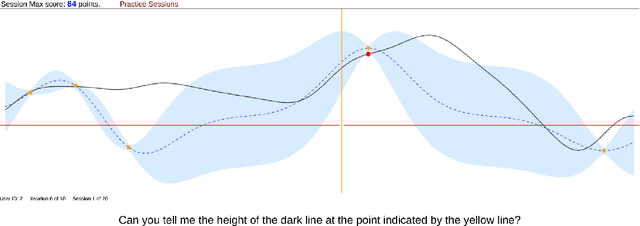

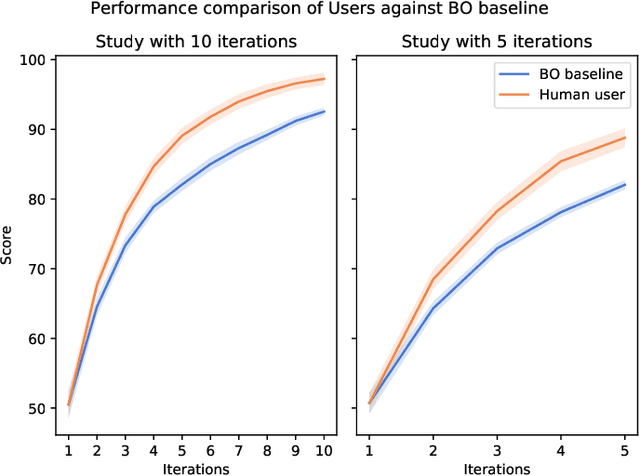

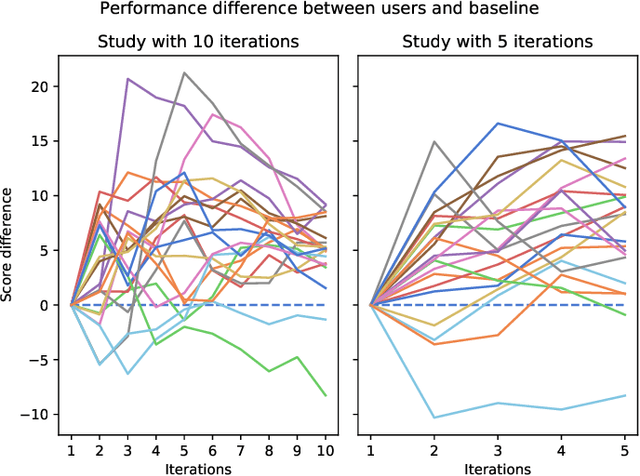

Abstract: A central concern in an interactive intelligent system is optimization of its actions, to be maximally helpful to its human user. In recommender systems, for instance, the action is to choose what to recommend, and the optimization task is to recommend items the user prefers. The optimization is done based on the user's earlier feedback (e.g. "likes" and "dislikes"), and the algorithms assume the feedback to be faithful. That is, when the user clicks "like," they actually prefer the item. We argue that this fundamental assumption can be extensively violated by human users, who are not passive feedback sources. Instead, they are in control, actively steering the system towards their goal. To verify this hypothesis, that humans steer and are able to improve performance by steering, we designed a function optimization task where a human and an optimization algorithm collaborate to find the maximum of a 1-dimensional function. At each iteration, the optimization algorithm queries the user for the value of a hidden function $f$ at a point $x$, and the user, who sees the hidden function, provides an answer about $f(x)$. Our study on 21 participants shows that users who understand how the optimization works strategically provide biased answers (answers not equal to $f(x)$), which results in the algorithm finding the optimum significantly faster. Our work highlights that next-generation intelligent systems will need user models capable of helping users who steer systems to pursue their goals.
