Sahithi Ankireddy

RadDiff: Describing Differences in Radiology Image Sets with Natural Language

Jan 07, 2026

Abstract: Understanding how two radiology image sets differ is critical for generating clinical insights and for interpreting medical AI systems. We introduce RadDiff, a multimodal agentic system that performs radiologist-style comparative reasoning to describe clinically meaningful differences between paired radiology studies. RadDiff builds on a proposer-ranker framework from VisDiff, and incorporates four innovations inspired by real diagnostic workflows: (1) medical knowledge injection through domain-adapted vision-language models; (2) multimodal reasoning that integrates images with their clinical reports; (3) iterative hypothesis refinement across multiple reasoning rounds; and (4) targeted visual search that localizes and zooms in on salient regions to capture subtle findings. To evaluate RadDiff, we construct RadDiffBench, a challenging benchmark comprising 57 expert-validated radiology study pairs with ground-truth difference descriptions. On RadDiffBench, RadDiff achieves 47% accuracy, and 50% accuracy when guided by ground-truth reports, significantly outperforming the general-domain VisDiff baseline. We further demonstrate RadDiff's versatility across diverse clinical tasks, including COVID-19 phenotype comparison, racial subgroup analysis, and discovery of survival-related imaging features. Together, RadDiff and RadDiffBench provide the first method-and-benchmark foundation for systematically uncovering meaningful differences in radiological data.
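To make the proposer-ranker idea concrete, here is a toy sketch. It is not the RadDiff or VisDiff implementation: those systems propose hypotheses with language models and score them against images, whereas this sketch assumes each study has already been reduced to a hypothetical dictionary of finding labels. The names `propose`, `prevalence`, and `rank` are illustrative only.

```python
from typing import Dict, List, Tuple

# Assumption: a study is a mapping from finding name to presence flag.
Study = Dict[str, bool]


def propose(set_a: List[Study], set_b: List[Study]) -> List[str]:
    """Proposer stand-in: every finding present in at least one study."""
    findings = set()
    for study in set_a + set_b:
        findings.update(name for name, present in study.items() if present)
    return sorted(findings)


def prevalence(finding: str, studies: List[Study]) -> float:
    """Fraction of studies in which the finding is present."""
    return sum(1 for s in studies if s.get(finding, False)) / len(studies)


def rank(candidates: List[str], set_a: List[Study],
         set_b: List[Study]) -> List[Tuple[str, float]]:
    """Ranker stand-in: order candidates by how much more often they
    hold in set A than in set B."""
    scored = [(f, prevalence(f, set_a) - prevalence(f, set_b))
              for f in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


set_a = [{"effusion": True, "nodule": True}, {"effusion": True}]
set_b = [{"nodule": True}, {"nodule": False}]
ranked = rank(propose(set_a, set_b), set_a, set_b)
```

In this toy data, "effusion" appears in every study of set A and none of set B, so it ranks first as the description that best separates the two sets.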

Assistive Diagnostic Tool for Brain Tumor Detection using Computer Vision

Nov 17, 2020

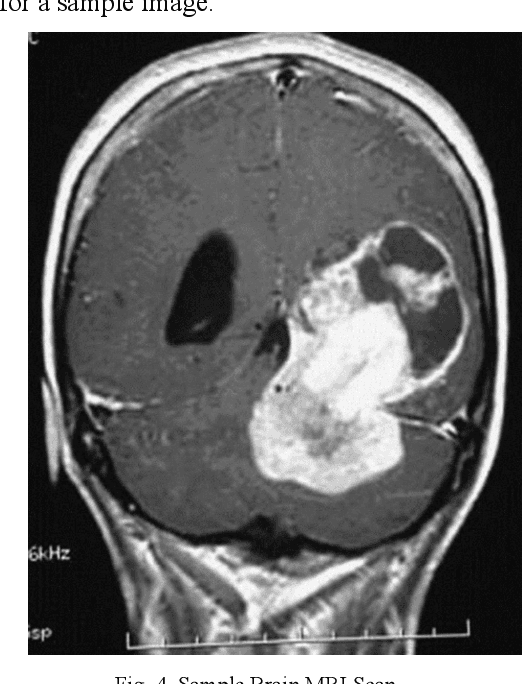

Abstract: Today, over 700,000 people are living with brain tumors in the United States. Brain tumors can spread very quickly to other parts of the brain and the spinal cord unless necessary preventive action is taken. As a result, the survival rate for this disease is less than 40% for both men and women. A conclusive and early diagnosis of a brain tumor could be the difference between life and death for some. However, brain tumor detection and segmentation are tedious and time-consuming processes, as they can only be done by radiologists and clinical experts. The use of computer vision techniques, such as the Mask Region-based Convolutional Neural Network (Mask R-CNN), to detect and segment brain tumors can mitigate the possibility of human error while increasing prediction accuracy rates. The goal of this project is to create an assistive diagnostic tool for brain tumor detection and segmentation. As a starting point, transfer learning was applied to the Mask R-CNN, with the necessary parameters adjusted accordingly. The model was trained for 20 epochs and then tested. The predicted segmentation matched the ground truth at a rate of 90%, which suggests that the model was able to perform at a high level. Once the model was finalized, an application running on Flask was created. The application will serve as a tool for medical professionals: it allows doctors to upload patient brain tumor MRI images and receive immediate results on the diagnosis and segmentation for each patient.
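The abstract does not say how the match between predicted and ground-truth segmentation was measured. As an illustration only, two overlap measures commonly used for segmentation masks are intersection over union (IoU) and the Dice coefficient. The sketch below assumes masks are given as equally sized nested lists of 0/1 values; the function names are hypothetical.

```python
from typing import List

Mask = List[List[int]]  # assumption: binary mask, 1 = tumor pixel


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two binary masks."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly by convention.
    return inter / union if union else 1.0


def dice(pred: Mask, truth: Mask) -> float:
    """Dice coefficient: 2 * |A and B| / (|A| + |B|)."""
    inter = sum(1 for pr, tr in zip(pred, truth)
                for p, t in zip(pr, tr) if p and t)
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    return 2 * inter / total if total else 1.0


pred_mask = [[0, 1, 1],
             [0, 1, 0]]
true_mask = [[0, 1, 1],
             [0, 0, 0]]
```

For the same pair of masks, Dice is never lower than IoU, so a reported overlap figure is only comparable across papers when the metric is named.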

A Novel Approach to the Diagnosis of Heart Disease using Machine Learning and Deep Neural Networks

Jul 25, 2020

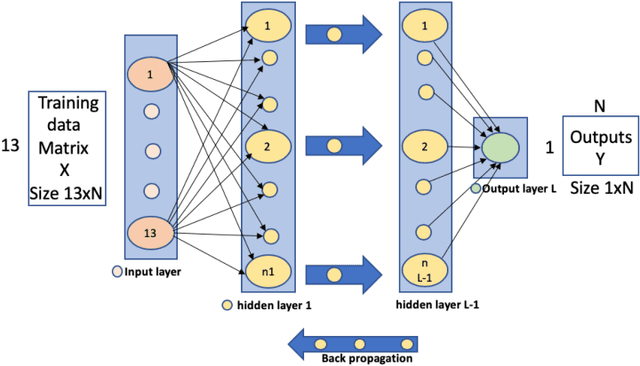

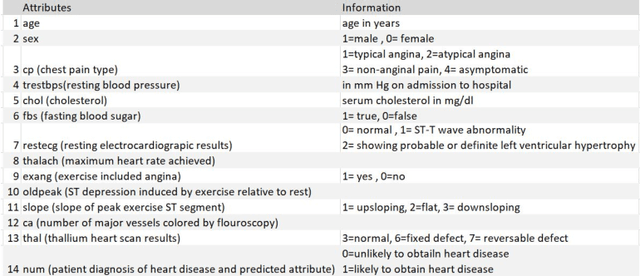

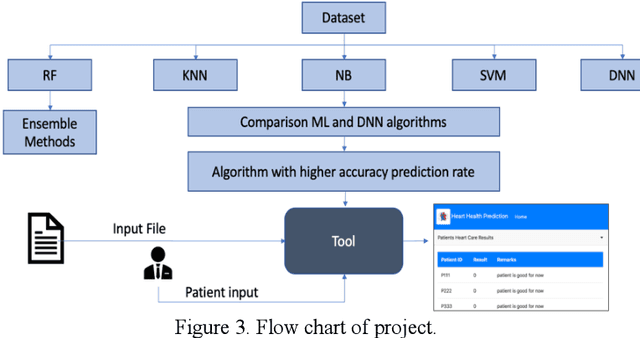

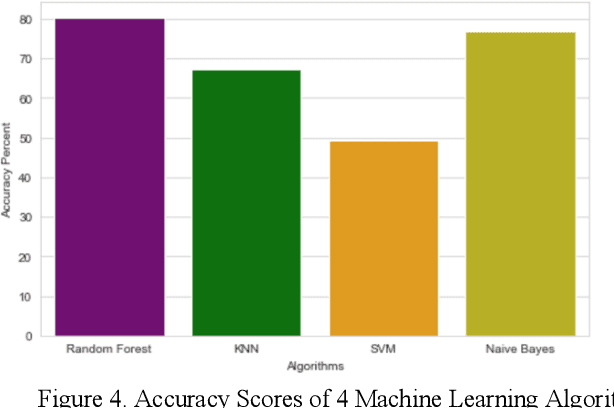

Abstract: Heart disease is the leading cause of death worldwide. Currently, 33% of cases are misdiagnosed, and approximately half of myocardial infarctions occur in people who are not predicted to be at risk. The use of Artificial Intelligence could reduce the chance of error, leading to possible earlier diagnoses, which could be the difference between life and death for some. The objective of this project was to develop an application for assisted heart disease diagnosis using Machine Learning (ML) and Deep Neural Network (DNN) algorithms. The dataset was provided by the Cleveland Clinic Foundation, and the models were built using various optimization and hyperparameter tuning techniques, including a Grid Search algorithm. The application, running on Flask and utilizing Bootstrap, was developed using the DNN, as it outperformed the Random Forest ML model, with a total accuracy rate of 92%.
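As a minimal sketch of what a grid search does, the code below evaluates every combination of hyperparameter values and keeps the best-scoring one. It is not the project's code: the grid, the parameter names (`learning_rate`, `hidden_layers`), and the `toy_score` function are assumptions standing in for a real train-and-validate step.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]


def grid_search(grid: Dict[str, List[float]],
                score: Callable[[Params], float]) -> Tuple[Params, float]:
    """Exhaustively score every combination in the grid; return the best."""
    names = sorted(grid)
    best_params: Params = {}
    best_score = float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        current = score(params)
        if current > best_score:
            best_params, best_score = params, current
    return best_params, best_score


def toy_score(params: Params) -> float:
    """Hypothetical validation score, peaking at lr=0.01 and 3 layers."""
    return (1.0
            - abs(params["learning_rate"] - 0.01) * 10
            - abs(params["hidden_layers"] - 3) * 0.1)


grid = {"learning_rate": [0.001, 0.01, 0.1], "hidden_layers": [2, 3, 4]}
best_params, best_score = grid_search(grid, toy_score)
```

The cost grows with the product of the number of values per parameter (here 3 × 3 = 9 evaluations), which is why grids are usually kept small when each evaluation means training a model.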
