Runze Xu

The Devil is in Attention Sharing: Improving Complex Non-rigid Image Editing Faithfulness via Attention Synergy

Dec 17, 2025Abstract:Training-free image editing with large diffusion models has become practical, yet faithfully performing complex non-rigid edits (e.g., pose or shape changes) remains highly challenging. We identify a key underlying cause: attention collapse in existing attention sharing mechanisms, where either positional embeddings or semantic features dominate visual content retrieval, leading to over-editing or under-editing. To address this issue, we introduce SynPS, a method that Synergistically leverages Positional embeddings and Semantic information for faithful non-rigid image editing. We first propose an editing measurement that quantifies the required editing magnitude at each denoising step. Based on this measurement, we design an attention synergy pipeline that dynamically modulates the influence of positional embeddings, enabling SynPS to balance semantic modifications and fidelity preservation. By adaptively integrating positional and semantic cues, SynPS effectively avoids both over- and under-editing. Extensive experiments on public and newly curated benchmarks demonstrate the superior performance and faithfulness of our approach.

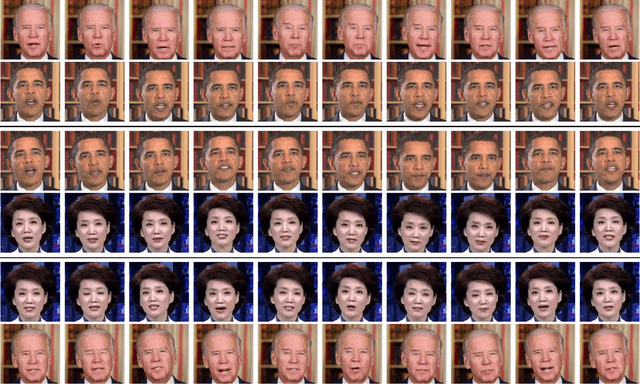

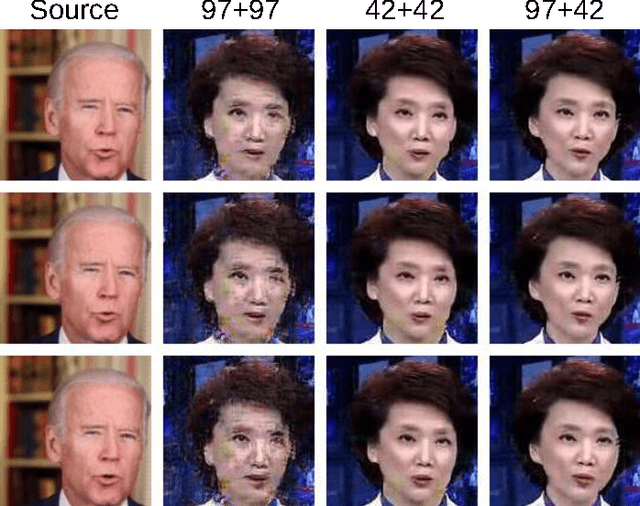

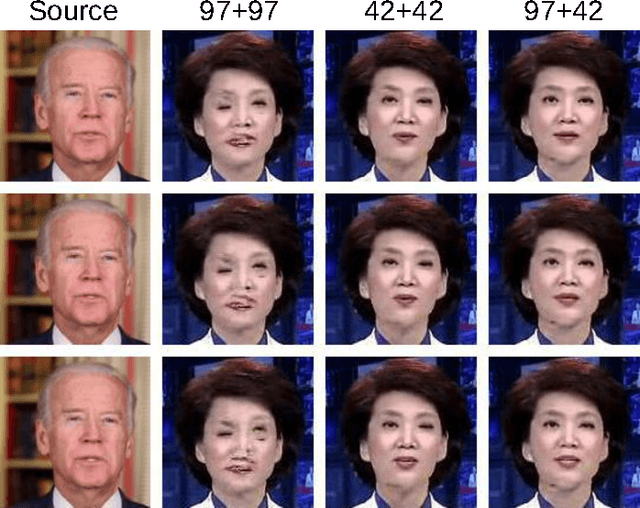

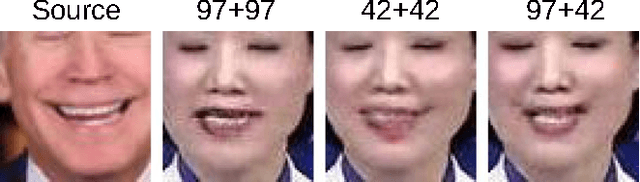

Face Transfer with Generative Adversarial Network

Oct 17, 2017

Abstract:Face transfer animates the facial performances of the character in the target video by a source actor. Traditional methods are typically based on face modeling. We propose an end-to-end face transfer method based on Generative Adversarial Network. Specifically, we leverage CycleGAN to generate the face image of the target character with the corresponding head pose and facial expression of the source. In order to improve the quality of generated videos, we adopt PatchGAN and explore the effect of different receptive field sizes on generated images.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge