Roxana Istrate

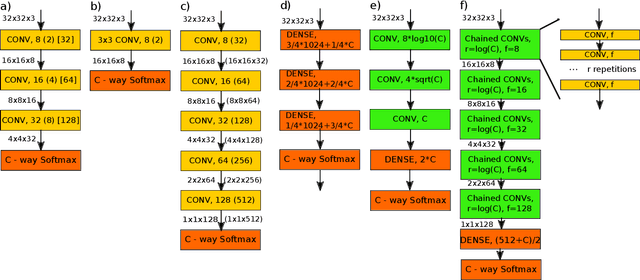

NeuNetS: An Automated Synthesis Engine for Neural Network Design

Jan 17, 2019

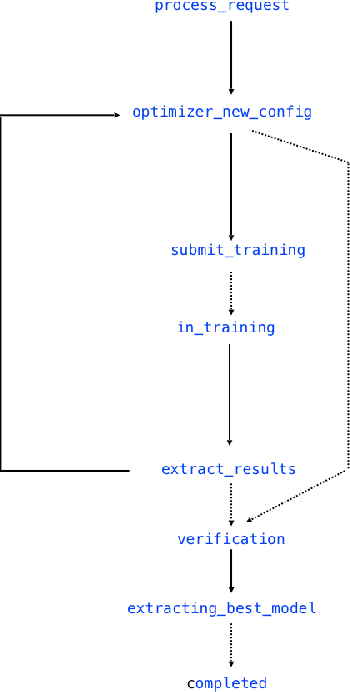

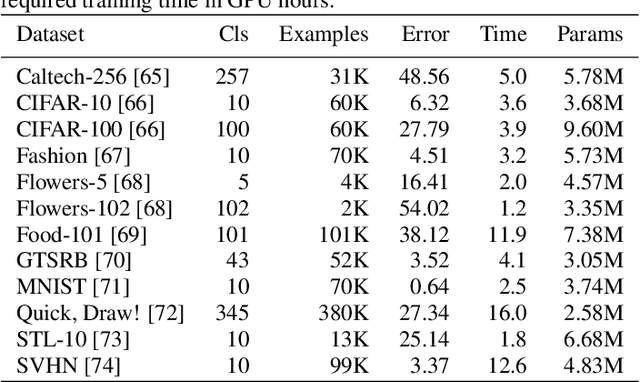

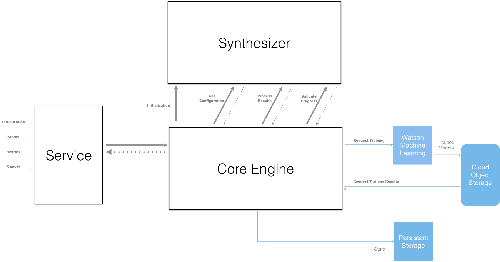

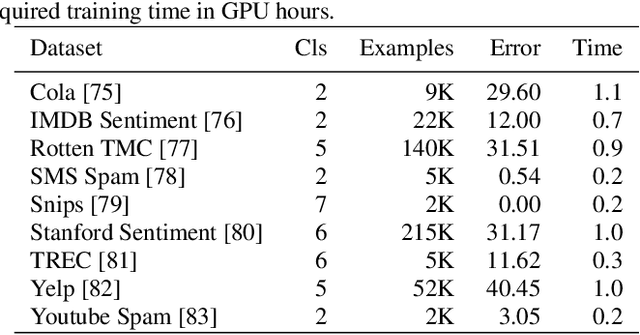

Abstract:Application of neural networks to a vast variety of practical applications is transforming the way AI is applied in practice. Pre-trained neural network models available through APIs or capability to custom train pre-built neural network architectures with customer data has made the consumption of AI by developers much simpler and resulted in broad adoption of these complex AI models. While prebuilt network models exist for certain scenarios, to try and meet the constraints that are unique to each application, AI teams need to think about developing custom neural network architectures that can meet the tradeoff between accuracy and memory footprint to achieve the tight constraints of their unique use-cases. However, only a small proportion of data science teams have the skills and experience needed to create a neural network from scratch, and the demand far exceeds the supply. In this paper, we present NeuNetS : An automated Neural Network Synthesis engine for custom neural network design that is available as part of IBM's AI OpenScale's product. NeuNetS is available for both Text and Image domains and can build neural networks for specific tasks in a fraction of the time it takes today with human effort, and with accuracy similar to that of human-designed AI models.

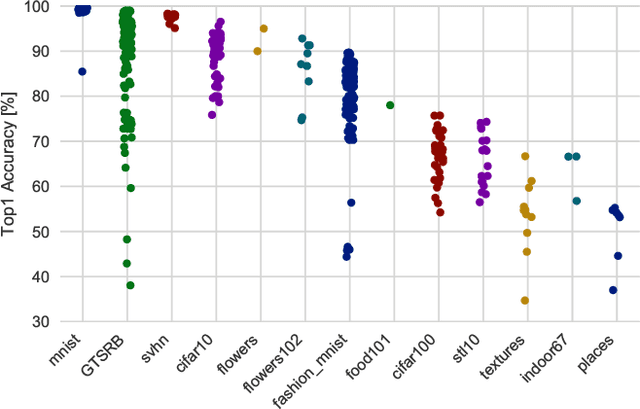

BAGAN: Data Augmentation with Balancing GAN

Jun 05, 2018

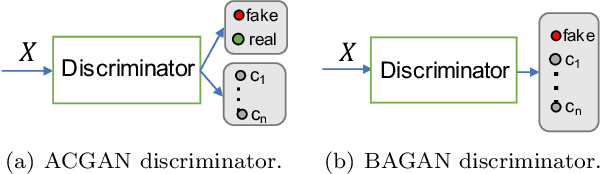

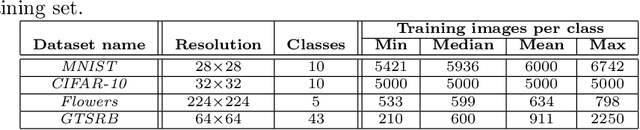

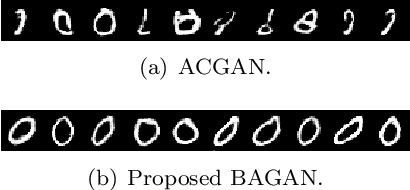

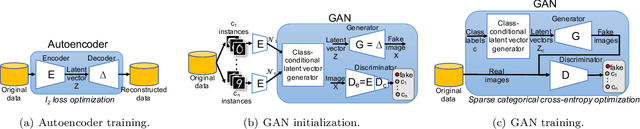

Abstract:Image classification datasets are often imbalanced, characteristic that negatively affects the accuracy of deep-learning classifiers. In this work we propose balancing GAN (BAGAN) as an augmentation tool to restore balance in imbalanced datasets. This is challenging because the few minority-class images may not be enough to train a GAN. We overcome this issue by including during the adversarial training all available images of majority and minority classes. The generative model learns useful features from majority classes and uses these to generate images for minority classes. We apply class conditioning in the latent space to drive the generation process towards a target class. The generator in the GAN is initialized with the encoder module of an autoencoder that enables us to learn an accurate class-conditioning in the latent space. We compare the proposed methodology with state-of-the-art GANs and demonstrate that BAGAN generates images of superior quality when trained with an imbalanced dataset.

Incremental Training of Deep Convolutional Neural Networks

Mar 27, 2018

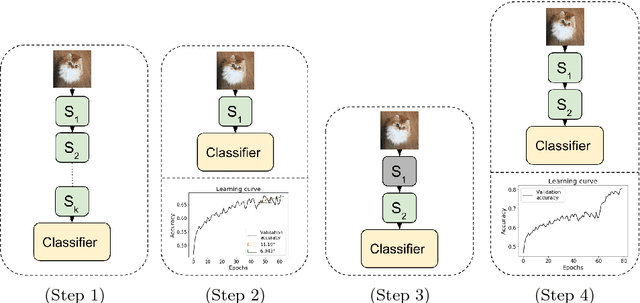

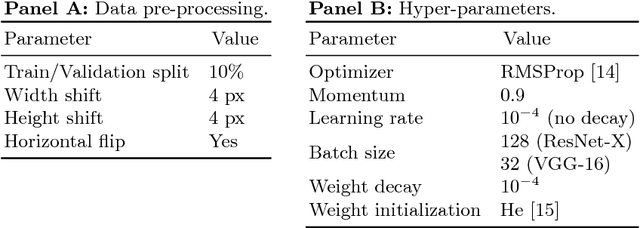

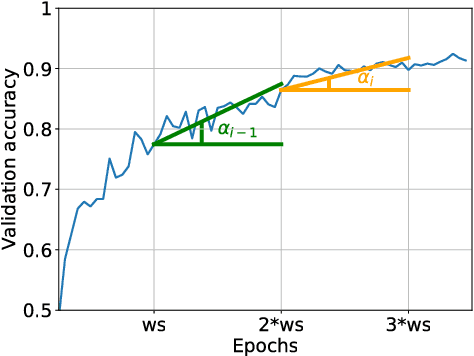

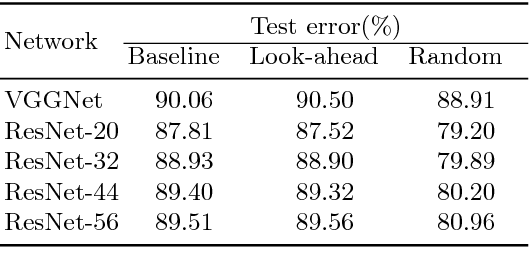

Abstract:We propose an incremental training method that partitions the original network into sub-networks, which are then gradually incorporated in the running network during the training process. To allow for a smooth dynamic growth of the network, we introduce a look-ahead initialization that outperforms the random initialization. We demonstrate that our incremental approach reaches the reference network baseline accuracy. Additionally, it allows to identify smaller partitions of the original state-of-the-art network, that deliver the same final accuracy, by using only a fraction of the global number of parameters. This allows for a potential speedup of the training time of several factors. We report training results on CIFAR-10 for ResNet and VGGNet.

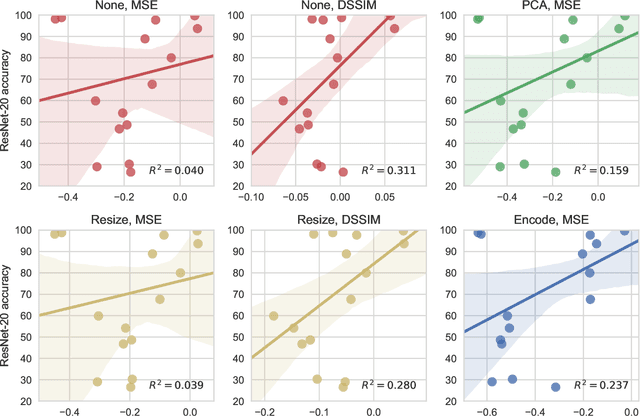

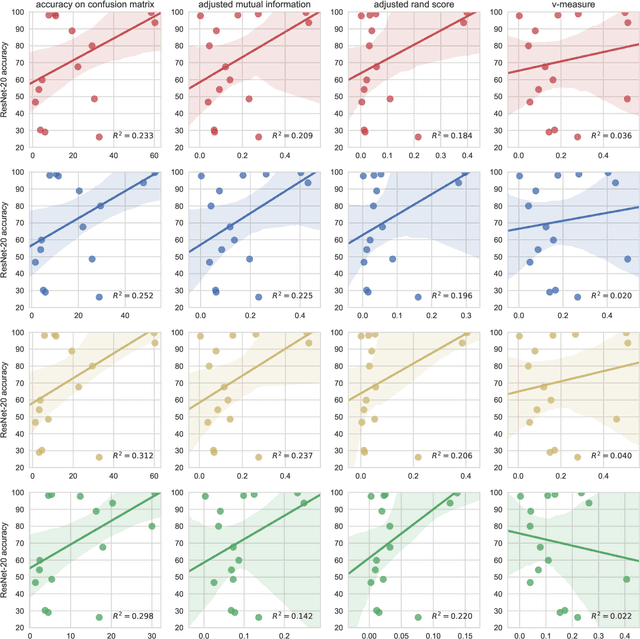

Efficient Image Dataset Classification Difficulty Estimation for Predicting Deep-Learning Accuracy

Mar 26, 2018

Abstract:In the deep-learning community new algorithms are published at an incredible pace. Therefore, solving an image classification problem for new datasets becomes a challenging task, as it requires to re-evaluate published algorithms and their different configurations in order to find a close to optimal classifier. To facilitate this process, before biasing our decision towards a class of neural networks or running an expensive search over the network space, we propose to estimate the classification difficulty of the dataset. Our method computes a single number that characterizes the dataset difficulty 27x faster than training state-of-the-art networks. The proposed method can be used in combination with network topology and hyper-parameter search optimizers to efficiently drive the search towards promising neural-network configurations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge