Benjamin Herta

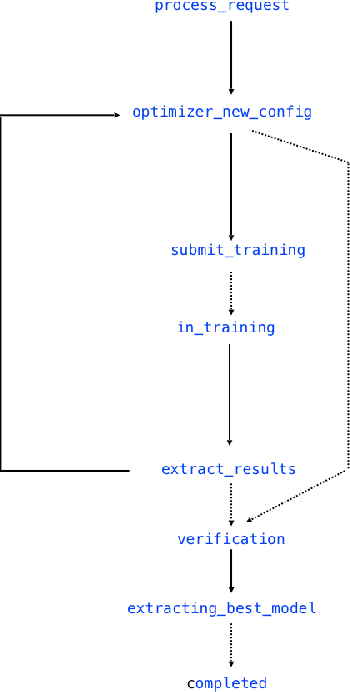

FfDL: A Flexible Multi-tenant Deep Learning Platform

Sep 14, 2019

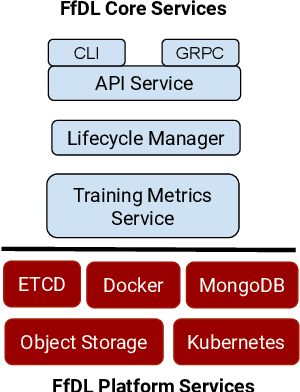

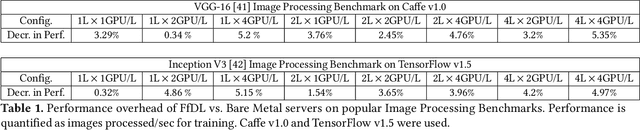

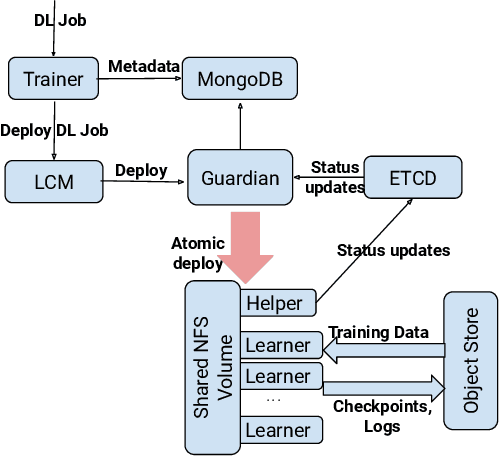

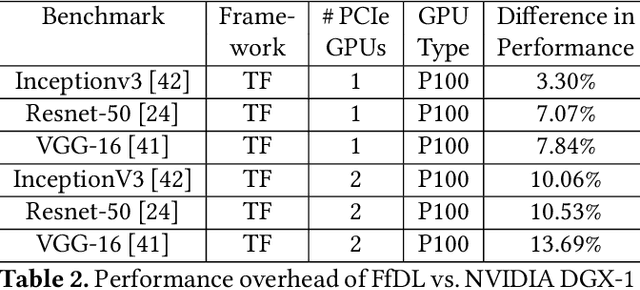

Abstract: Deep learning (DL) is becoming increasingly popular in several application domains and has made several new application features involving computer vision, speech recognition and synthesis, self-driving automobiles, drug design, etc. feasible and accurate. As a result, large-scale on-premise and cloud-hosted deep learning platforms have become essential infrastructure in many organizations. These systems accept, schedule, manage and execute DL training jobs at scale. This paper describes the design and implementation of, and our experiences with, FfDL, a DL platform used at IBM. We describe how our design balances dependability with scalability, elasticity, flexibility and efficiency. We examine FfDL qualitatively through a retrospective look at the lessons learned from building, operating, and supporting FfDL; and quantitatively through a detailed empirical evaluation of FfDL, including the overheads introduced by the platform for various deep learning models, the load and performance observed in a real case study using FfDL within our organization, the frequency of various faults observed including unanticipated faults, and experiments demonstrating the benefits of various scheduling policies. FfDL has been open-sourced.

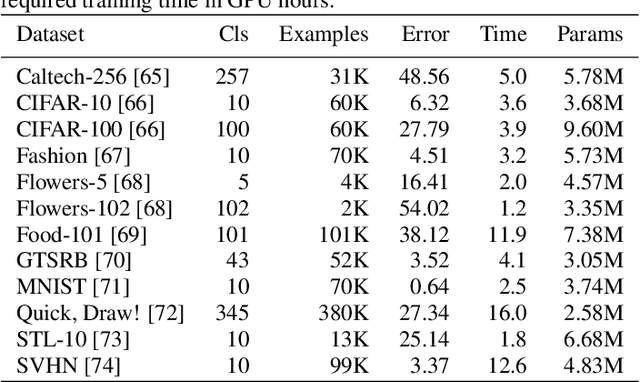

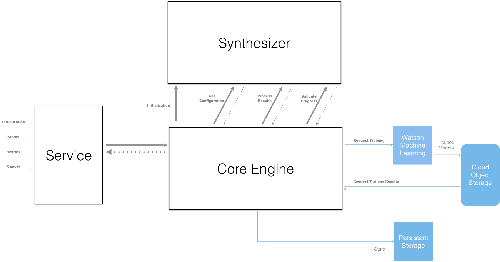

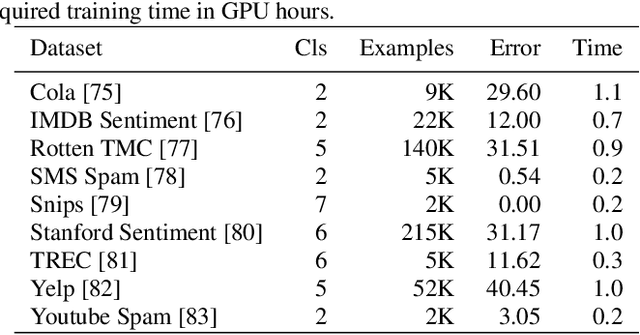

NeuNetS: An Automated Synthesis Engine for Neural Network Design

Jan 17, 2019

Abstract: Application of neural networks to a vast variety of practical applications is transforming the way AI is applied in practice. Pre-trained neural network models available through APIs, and the capability to custom-train pre-built neural network architectures with customer data, have made the consumption of AI by developers much simpler and resulted in broad adoption of these complex AI models. While prebuilt network models exist for certain scenarios, AI teams that need to meet the constraints unique to each application must consider developing custom neural network architectures that balance accuracy against memory footprint within the tight constraints of their use cases. However, only a small proportion of data science teams have the skills and experience needed to create a neural network from scratch, and the demand far exceeds the supply. In this paper, we present NeuNetS: an automated Neural Network Synthesis engine for custom neural network design that is available as part of IBM's AI OpenScale product. NeuNetS is available for both Text and Image domains and can build neural networks for specific tasks in a fraction of the time it takes today with human effort, and with accuracy similar to that of human-designed AI models.

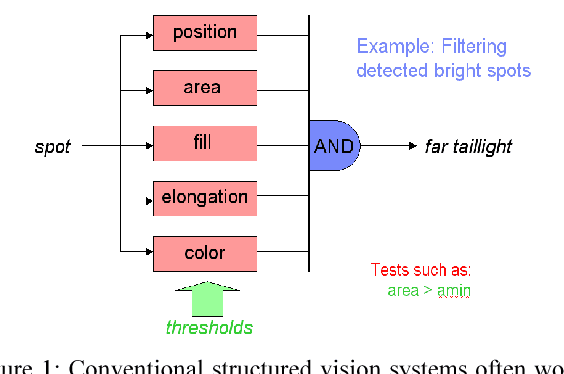

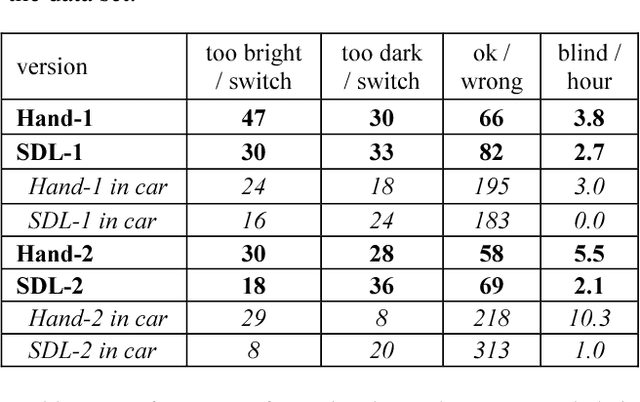

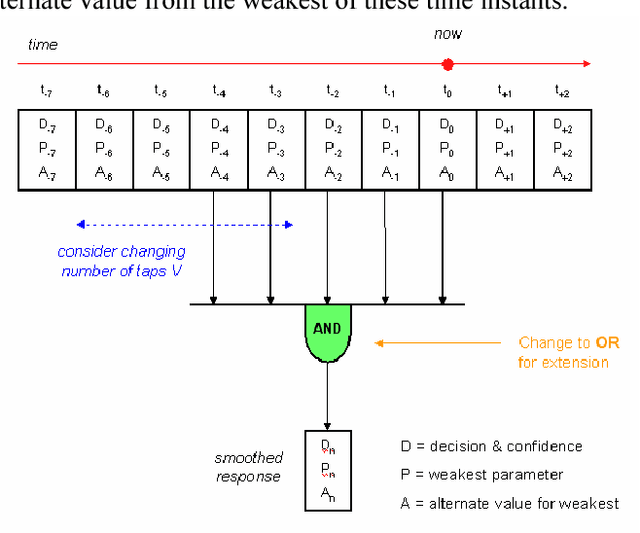

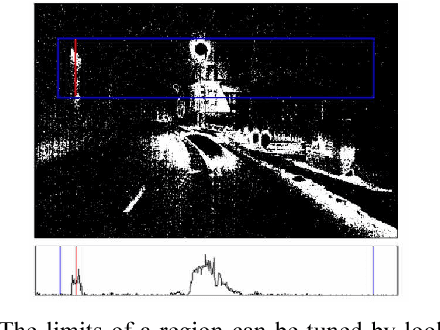

Structured Differential Learning for Automatic Threshold Setting

Aug 01, 2018

Abstract: We introduce a technique that can automatically tune the parameters of a rule-based computer vision system comprised of thresholds, combinational logic, and time constants. This lets us retain the flexibility and perspicacity of a conventionally structured system while allowing us to perform approximate gradient descent using labeled data. While this is only a heuristic procedure, as far as we are aware there is no other efficient technique for tuning such systems. We describe the components of the system and the associated supervised learning mechanism. We also demonstrate the utility of the algorithm by comparing its performance against hand tuning for an automotive headlight controller. Despite the system having over 100 parameters, the method is able to profitably adjust their values given just the desired output for a number of videos.
