Rebecca Willett

Model Adaptation for Inverse Problems in Imaging

Nov 30, 2020

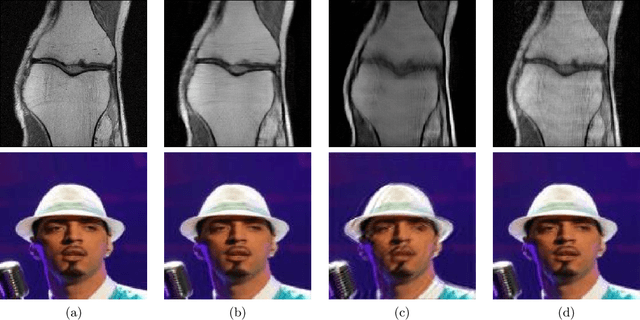

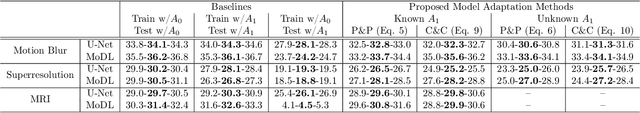

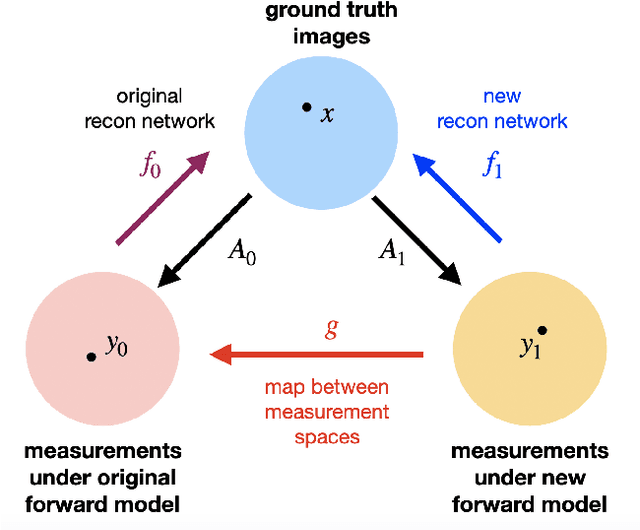

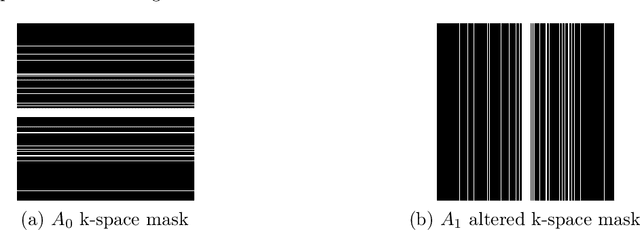

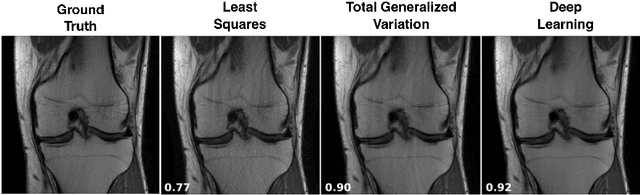

Abstract: Deep neural networks have been applied successfully to a wide variety of inverse problems arising in computational imaging. These networks are typically trained using a forward model that describes the measurement process to be inverted, which is often incorporated directly into the network itself. However, these approaches lack robustness to drift of the forward model: if at test time the forward model varies (even slightly) from the one the network was trained for, the reconstruction performance can degrade substantially. Given a network trained to solve an initial inverse problem with a known forward model, we propose two novel procedures that adapt the network to a perturbed forward model, even without full knowledge of the perturbation. Our approaches do not require access to more labeled data (i.e., ground truth images), but only a small set of calibration measurements. We show these simple model adaptation procedures empirically achieve robustness to changes in the forward model in a variety of settings, including deblurring, super-resolution, and undersampled image reconstruction in magnetic resonance imaging.

Deep Learning Techniques for Inverse Problems in Imaging

May 12, 2020

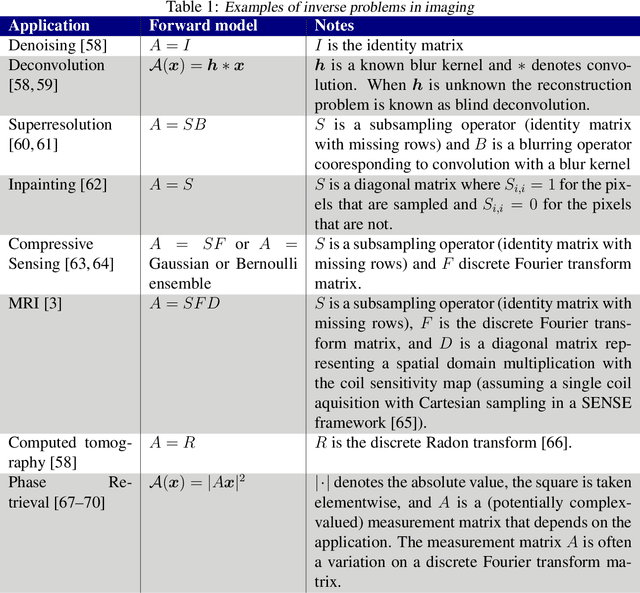

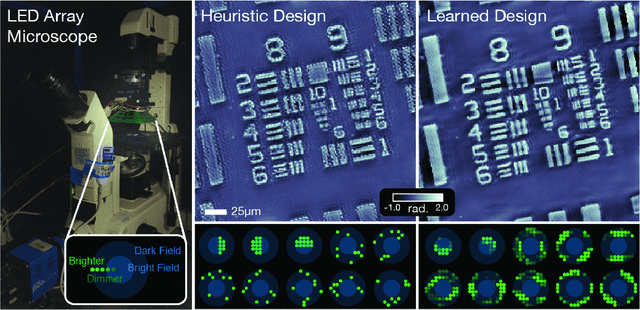

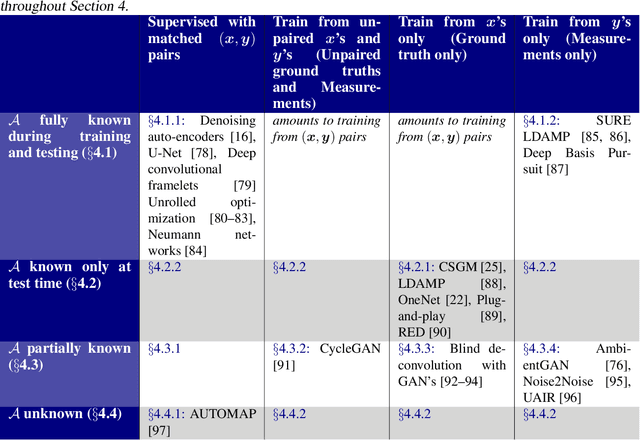

Abstract: Recent work in machine learning shows that deep neural networks can be used to solve a wide variety of inverse problems arising in computational imaging. We explore the central prevailing themes of this emerging area and present a taxonomy that can be used to categorize different problems and reconstruction methods. Our taxonomy is organized along two central axes: (1) whether or not a forward model is known and to what extent it is used in training and testing, and (2) whether the learning is supervised or unsupervised, i.e., whether or not the training relies on access to matched ground truth image and measurement pairs. We also discuss the trade-offs associated with these different reconstruction approaches, caveats and common failure modes, plus open problems and avenues for future work.

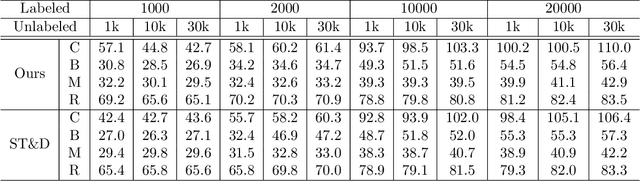

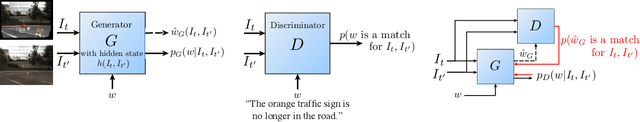

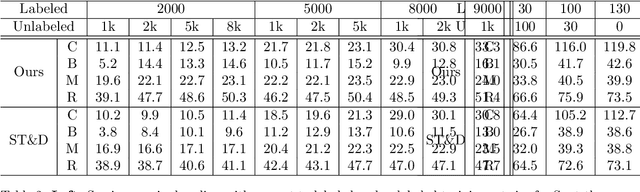

Detection and Description of Change in Visual Streams

Apr 09, 2020

Abstract: This paper presents a framework for the analysis of changes in visual streams: ordered sequences of images, possibly separated by significant time gaps. We propose a new approach to incorporating unlabeled data into training to generate natural language descriptions of change. We also develop a framework for estimating the time of change in a visual stream. We use learned representations for change evidence and consistency of perceived change, and combine these in a regularized graph-cut-based change detector. Experimental evaluation on visual stream datasets, which we release as part of our contribution, shows that representation learning driven by natural language descriptions significantly improves change detection accuracy, compared to methods that do not rely on language.

Context-dependent self-exciting point processes: models, methods, and risk bounds in high dimensions

Mar 16, 2020

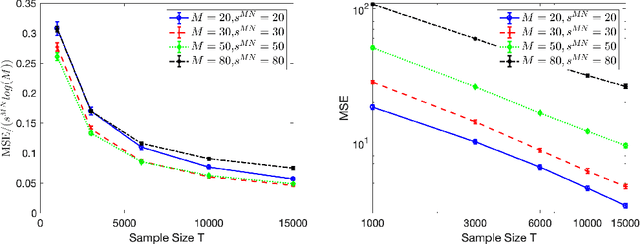

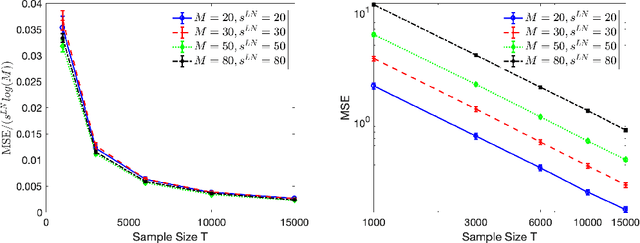

Abstract: High-dimensional autoregressive point processes model how current events trigger or inhibit future events, such as how activity by one member of a social network can affect the future activity of his or her neighbors. While past work has focused on estimating the underlying network structure based solely on the times at which events occur on each node of the network, this paper examines the more nuanced problem of estimating context-dependent networks that reflect how features associated with an event (such as the content of a social media post) modulate the strength of influences among nodes. Specifically, we leverage ideas from compositional time series and regularization methods in machine learning to conduct network estimation for high-dimensional marked point processes. Two models and corresponding estimators are considered in detail: an autoregressive multinomial model suited to categorical marks and a logistic-normal model suited to marks with mixed membership in different categories. Importantly, the logistic-normal model leads to a convex negative log-likelihood objective and captures dependence across categories. We provide theoretical guarantees for both estimators, which we validate by simulations and a synthetic data-generating model. We further validate our methods through two real data examples and demonstrate the advantages and disadvantages of both approaches.

An Optimal Statistical and Computational Framework for Generalized Tensor Estimation

Feb 26, 2020

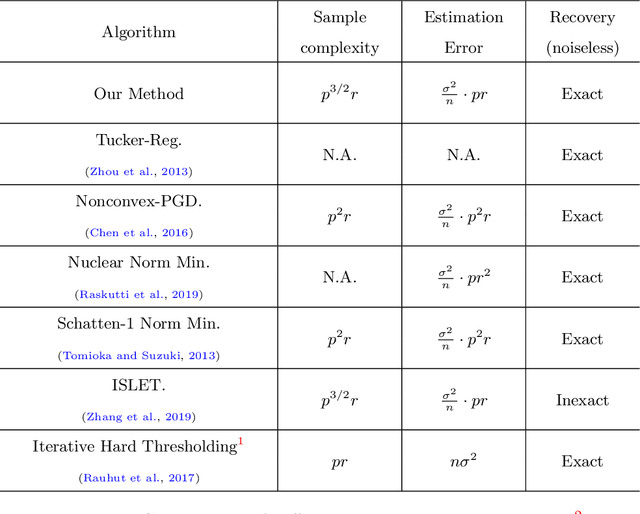

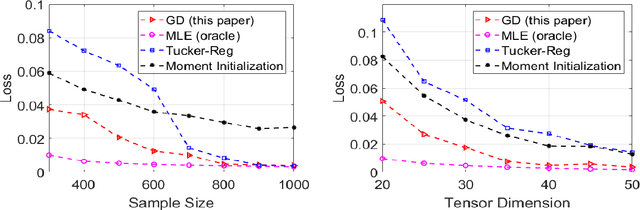

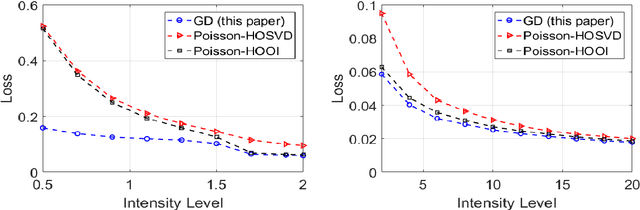

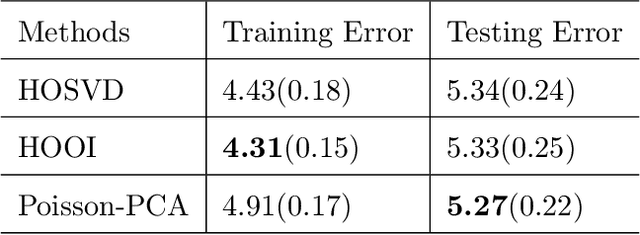

Abstract: This paper describes a flexible framework for generalized low-rank tensor estimation problems that includes many important instances arising from applications in computational imaging, genomics, and network analysis. The proposed estimator consists of finding a low-rank tensor fit to the data under generalized parametric models. To overcome the difficulty of non-convexity in these problems, we introduce a unified approach of projected gradient descent that adapts to the underlying low-rank structure. Under mild conditions on the loss function, we establish both an upper bound on statistical error and the linear rate of computational convergence through a general deterministic analysis. Then we further consider a suite of generalized tensor estimation problems, including sub-Gaussian tensor denoising, tensor regression, and Poisson and binomial tensor PCA. We prove that the proposed algorithm achieves the minimax optimal rate of convergence in estimation error. Finally, we demonstrate the superiority of the proposed framework via extensive experiments on both simulated and real data.
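
As a rough illustration of the projected gradient descent idea mentioned in this abstract, the sketch below handles only the simplest special case: an order-2 tensor (a matrix), a squared-error loss, and projection onto rank-`r` matrices by truncated SVD. It is not the paper's algorithm. The function names, step size, iteration count, and test data are all assumptions made for this example.

```python
import numpy as np

def project_rank(M, r):
    """Project M onto the set of matrices of rank at most r (truncated SVD)."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return (U[:, :r] * s[:r]) @ Vt[:r]

def projected_gradient_descent(Y, r, step=0.5, num_iters=50):
    """Minimize 0.5 * ||X - Y||_F^2 subject to rank(X) <= r."""
    X = np.zeros_like(Y)
    for _ in range(num_iters):
        grad = X - Y                           # gradient of the squared-error loss
        X = project_rank(X - step * grad, r)   # gradient step, then projection
    return X

# Hypothetical test problem: a rank-2 matrix observed with Gaussian noise.
rng = np.random.default_rng(0)
n, r = 20, 2
X_true = rng.standard_normal((n, r)) @ rng.standard_normal((r, n))
Y = X_true + 0.1 * rng.standard_normal((n, n))

X_hat = projected_gradient_descent(Y, r)
err_noisy = np.linalg.norm(Y - X_true)     # error of the raw observation
err_hat = np.linalg.norm(X_hat - X_true)   # error after low-rank estimation
print(err_noisy, err_hat)
```

Swapping the squared-error gradient for the gradient of a Poisson or binomial negative log-likelihood would give a matrix analogue of the generalized settings the abstract lists, though the paper's guarantees concern its own tensor procedure rather than this simplification.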

A Function Space View of Bounded Norm Infinite Width ReLU Nets: The Multivariate Case

Oct 03, 2019

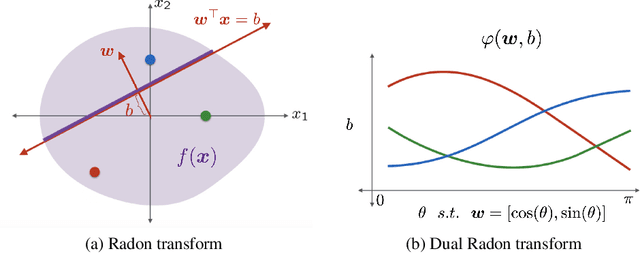

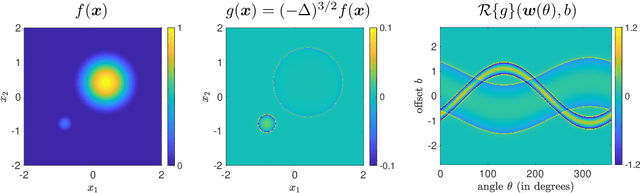

Abstract: A key element of understanding the efficacy of overparameterized neural networks is characterizing how they represent functions as the number of weights in the network approaches infinity. In this paper, we characterize the norm required to realize a function $f:\mathbb{R}^d\rightarrow\mathbb{R}$ as a single hidden-layer ReLU network with an unbounded number of units (infinite width), but where the Euclidean norm of the weights is bounded, including precisely characterizing which functions can be realized with finite norm. This was settled for univariate functions in Savarese et al. (2019), where it was shown that the required norm is determined by the L1-norm of the second derivative of the function. We extend the characterization to multivariate functions (i.e., networks with d input units), relating the required norm to the L1-norm of the Radon transform of a (d+1)/2-power Laplacian of the function. This characterization allows us to show that all functions in Sobolev spaces $W^{s,1}(\mathbb{R}^d)$, $s\geq d+1$, can be represented with bounded norm, to calculate the required norm for several specific functions, and to obtain a depth separation result. These results have important implications for understanding generalization performance and the distinction between neural networks and more traditional kernel learning.

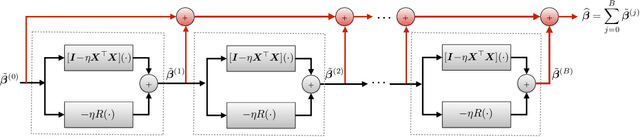

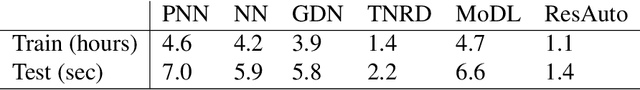

Neumann Networks for Inverse Problems in Imaging

Jan 13, 2019

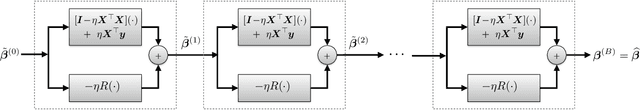

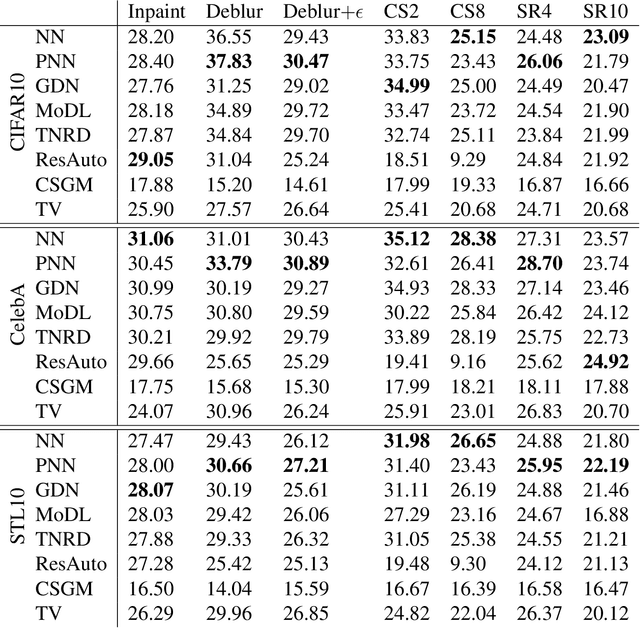

Abstract: Many challenging image processing tasks can be described by an ill-posed linear inverse problem: deblurring, deconvolution, inpainting, compressed sensing, and superresolution all lie in this framework. Traditional inverse problem solvers minimize a cost function consisting of a data-fit term, which measures how well an image matches the observations, and a regularizer, which reflects prior knowledge and promotes images with desirable properties like smoothness. Recent advances in machine learning and image processing have illustrated that it is often possible to learn a regularizer from training data that can outperform more traditional regularizers. We present an end-to-end, data-driven method of solving inverse problems inspired by the Neumann series, which we call a Neumann network. Rather than unroll an iterative optimization algorithm, we truncate a Neumann series which directly solves the linear inverse problem with a data-driven nonlinear regularizer. The Neumann network architecture outperforms traditional inverse problem solution methods, model-free deep learning approaches, and state-of-the-art unrolled iterative methods on standard datasets. Finally, when the images belong to a union of subspaces and under appropriate assumptions on the forward model, we prove there exists a Neumann network configuration that well-approximates the optimal oracle estimator for the inverse problem and demonstrate empirically that the trained Neumann network has the form predicted by theory.
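
To make the truncated Neumann series idea concrete, here is a minimal sketch under stated assumptions. It uses the standard identity $(A^\top A)^{-1} = \eta \sum_{j \ge 0} (I - \eta A^\top A)^j$, valid when $\|I - \eta A^\top A\| < 1$, and sums a finite number of terms. The optional `regularizer` callable stands in for the learned nonlinear regularizer; it defaults to zero here, so this example reduces to plain least squares. The function name, parameters, and test data are hypothetical and are not taken from the paper's code.

```python
import numpy as np

def truncated_neumann(A, y, eta, num_terms, regularizer=None):
    """Sum the first `num_terms` terms of a Neumann series estimate of x from y = A x."""
    if regularizer is None:
        # Assumption: with no learned regularizer, use the zero map.
        regularizer = lambda v: np.zeros_like(v)
    term = eta * (A.T @ y)      # j = 0 term of the series
    estimate = term.copy()
    for _ in range(num_terms - 1):
        # Apply (I - eta * A^T A - eta * R) to the previous term.
        term = term - eta * (A.T @ (A @ term)) - eta * regularizer(term)
        estimate = estimate + term
    return estimate

# Hypothetical noiseless, overdetermined test problem.
rng = np.random.default_rng(0)
A = rng.standard_normal((30, 10))
x_true = rng.standard_normal(10)
y = A @ x_true

# Step size chosen so every eigenvalue of I - eta * A^T A lies in [0, 1).
eta = 1.0 / np.linalg.norm(A, 2) ** 2
x_hat = truncated_neumann(A, y, eta, num_terms=2000)
print(np.max(np.abs(x_hat - x_true)))
```

In this sketch the truncation level is large because nothing is learned. The abstract describes training the regularizer end to end, which is what would let a short truncation perform well.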

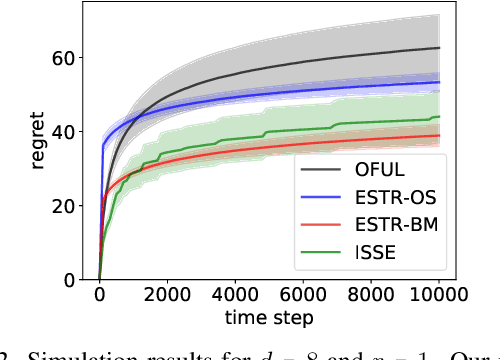

Bilinear Bandits with Low-rank Structure

Jan 08, 2019

Abstract: We introduce the bilinear bandit problem with low-rank structure where an action is a pair of arms from two different entity types, and the reward is a bilinear function of the known feature vectors of the arms. The problem is motivated by numerous applications in which the learner must recommend two different entity types as one action, such as a male / female pair in an online dating service. The unknown in the problem is a $d_1$ by $d_2$ matrix $\mathbf{\Theta}^*$ with rank $r \ll \min\{d_1,d_2\}$ governing the reward generation. Determination of $\mathbf{\Theta}^*$ with low-rank structure poses a significant challenge in finding the right exploration-exploitation tradeoff. In this work, we propose a new two-stage algorithm called "Explore-Subspace-Then-Refine" (ESTR). The first stage is an explicit subspace exploration, while the second stage is a linear bandit algorithm called "almost-low-dimensional OFUL" (LowOFUL) that exploits and further refines the estimated subspace via a regularization technique. We show that the regret of ESTR is $\tilde{O}((d_1+d_2)^{3/2} \sqrt{r T})$ (where $\tilde{O}$ hides logarithmic factors), which improves upon the regret of $\tilde{O}(d_1d_2\sqrt{T})$ of a naive linear bandit reduction. We conjecture that the regret bound of ESTR is unimprovable up to polylogarithmic factors.
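
A quick way to see when the ESTR rate beats the naive reduction is to compare the two leading terms numerically. The sketch below ignores constants and the logarithmic factors hidden by $\tilde{O}$, so it compares scalings only, not actual regret. The function names and the chosen values of $d_1$, $d_2$, $r$, and $T$ are assumptions for illustration.

```python
import math

def estr_regret_scale(d1, d2, r, T):
    """Leading term (d1 + d2)^(3/2) * sqrt(r * T), constants and logs dropped."""
    return (d1 + d2) ** 1.5 * math.sqrt(r * T)

def naive_regret_scale(d1, d2, T):
    """Leading term d1 * d2 * sqrt(T) of the naive linear bandit reduction."""
    return d1 * d2 * math.sqrt(T)

# Hypothetical problem sizes with r much smaller than min(d1, d2).
d1, d2, r, T = 100, 100, 2, 10_000
estr = estr_regret_scale(d1, d2, r, T)
naive = naive_regret_scale(d1, d2, T)
ratio = estr / naive   # independent of T, since both terms scale as sqrt(T)
print(estr, naive, ratio)
```

For these values the ratio is about 0.4. The ratio equals $(d_1+d_2)^{3/2}\sqrt{r}/(d_1 d_2)$, so the advantage grows with the dimensions and disappears for small ones (for example $d_1 = d_2 = 4$, $r = 1$ gives a ratio above 1).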

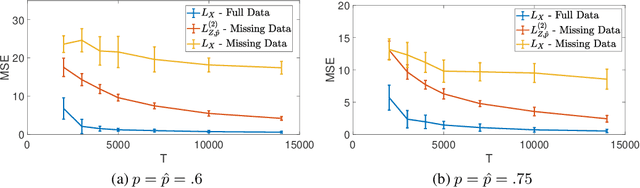

Estimating Network Structure from Incomplete Event Data

Nov 07, 2018

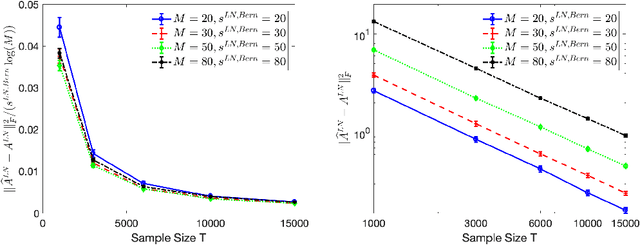

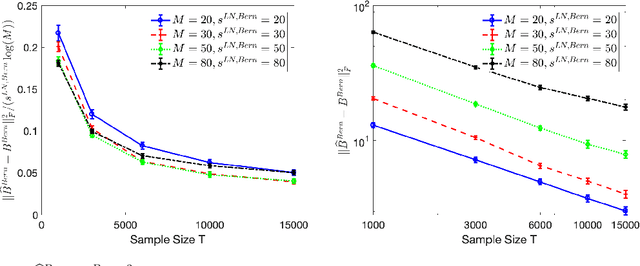

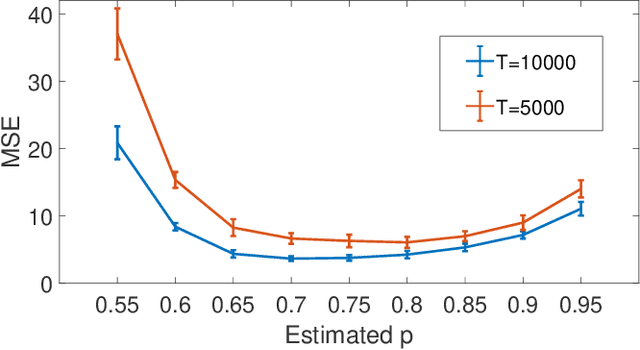

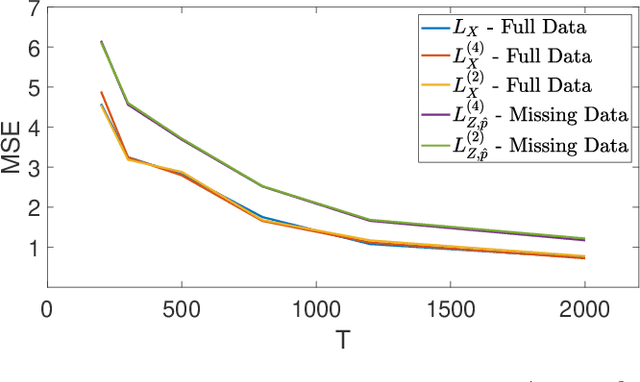

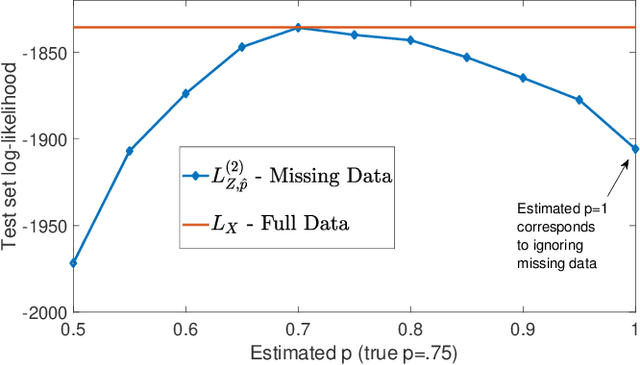

Abstract: Multivariate Bernoulli autoregressive (BAR) processes model time series of events in which the likelihood of current events is determined by the times and locations of past events. These processes can be used to model nonlinear dynamical systems corresponding to criminal activity, responses of patients to different medical treatment plans, opinion dynamics across social networks, epidemic spread, and more. Past work examines this problem under the assumption that the event data is complete, but in many cases only a fraction of events are observed. Incomplete observations pose a significant challenge in this setting because the unobserved events still govern the underlying dynamical system. In this work, we develop a novel approach to estimating the parameters of a BAR process in the presence of unobserved events via an unbiased estimator of the complete data log-likelihood function. We propose a computationally efficient estimation algorithm which approximates this estimator via Taylor series truncation and establish theoretical results for both the statistical error and optimization error of our algorithm. We further justify our approach by testing our method on both simulated data and a real data set consisting of crimes recorded by the city of Chicago.

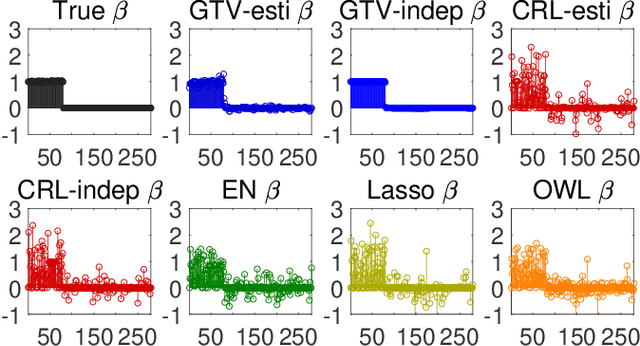

Graph-based regularization for regression problems with highly-correlated designs

Jun 05, 2018

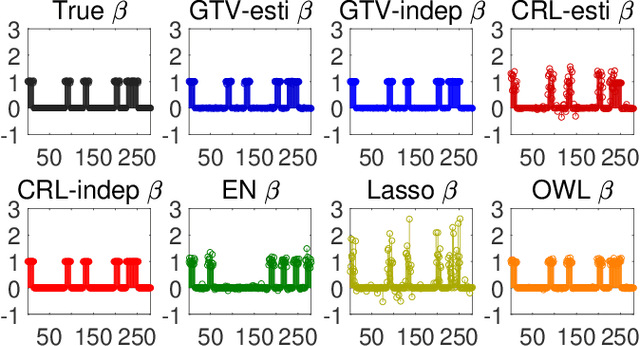

Abstract: Sparse models for high-dimensional linear regression and machine learning have received substantial attention over the past two decades. Model selection, or determining which features or covariates are the best explanatory variables, is critical to the interpretability of a learned model. Much of the current literature assumes that covariates are only mildly correlated. However, in modern applications ranging from functional MRI to genome-wide association studies, covariates are highly correlated and do not exhibit key properties (such as the restricted eigenvalue condition, RIP, or other related assumptions). This paper considers a high-dimensional regression setting in which a graph governs both correlations among the covariates and the similarity among regression coefficients. Using side information about the strength of correlations among features, we form a graph with edge weights corresponding to pairwise covariances. This graph is used to define a graph total variation regularizer that promotes similar weights for highly correlated features. The graph structure encapsulated by this regularizer helps precondition correlated features to yield provably accurate estimates. Using graph-based regularizers to develop theoretical guarantees for highly correlated covariates has not been previously examined. This paper shows how our proposed graph-based regularization yields mean-squared error guarantees, which in many cases are optimal, for a broad range of covariance graph structures and correlation strengths by imposing additional structure on $\beta^{\star}$ that encourages *alignment* with the covariance graph. Our proposed approach outperforms other state-of-the-art methods for highly correlated design in a variety of experiments on simulated and real fMRI data.
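
The abstract does not state the regularizer's formula, so the sketch below uses one common form of a graph total variation penalty, $\sum_{(j,k)} w_{jk}\,|\beta_j - \beta_k|$, with each edge weight standing in for the magnitude of a pairwise covariance. The exact weighting and any sign handling in the paper may differ. The function name, edge list, and coefficient vectors are hypothetical.

```python
def graph_total_variation(beta, weighted_edges):
    """Sum of w * |beta[j] - beta[k]| over edges (j, k, w) of the covariance graph."""
    return sum(w * abs(beta[j] - beta[k]) for j, k, w in weighted_edges)

# Hypothetical graph: features 0-1 and 1-2 are strongly correlated, 2-3 only weakly.
edges = [(0, 1, 0.9), (1, 2, 0.8), (2, 3, 0.1)]

# Coefficients that agree across the strongly correlated features.
aligned = [1.0, 1.0, 1.0, -2.0]
# Coefficients that disagree across the strongly correlated features.
misaligned = [1.0, -1.0, 1.0, 1.0]

gtv_aligned = graph_total_variation(aligned, edges)
gtv_misaligned = graph_total_variation(misaligned, edges)
print(gtv_aligned, gtv_misaligned)
```

The aligned vector pays only for the jump across the weak edge, while the misaligned vector pays for both strong edges. This is the sense in which such a penalty favors similar weights for highly correlated features.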
