Rayid Ghani

Breaking the Cycle of Incarceration With Targeted Mental Health Outreach: A Case Study in Machine Learning for Public Policy

Sep 17, 2025

Abstract:Many incarcerated individuals face significant and complex challenges, including mental illness, substance dependence, and homelessness, yet jails and prisons are often poorly equipped to address these needs. With little support from the existing criminal justice system, these needs can remain untreated and worsen, often leading to further offenses and a cycle of incarceration with adverse outcomes both for the individual and for public safety, with particularly large impacts on communities of color that continue to widen the already extensive racial disparities in criminal justice outcomes. Responding to these failures, a growing number of criminal justice stakeholders are seeking to break this cycle through innovative approaches such as community-driven and alternative approaches to policing, mentoring, community building, restorative justice, pretrial diversion, holistic defense, and social service connections. Here we report on a collaboration between Johnson County, Kansas, and Carnegie Mellon University to perform targeted, proactive mental health outreach in an effort to reduce reincarceration rates. This paper describes the data used, our predictive modeling approach and results, as well as the design and analysis of a field trial conducted to confirm our model's predictive power, evaluate the impact of this targeted outreach, and understand at what level of reincarceration risk outreach might be most effective. Through this trial, we find that our model is highly predictive of new jail bookings, with more than half of individuals in the trial's highest-risk group returning to jail in the following year. Outreach was most effective among these highest-risk individuals, with impacts on mental health utilization, EMS dispatches, and criminal justice involvement.

Towards Automated Scoping of AI for Social Good Projects

Apr 28, 2025

Abstract:Artificial Intelligence for Social Good (AI4SG) is an emerging effort that aims to address complex societal challenges with the powerful capabilities of AI systems. These challenges range from local issues with transit networks to global wildlife preservation. However, regardless of scale, a critical bottleneck for many AI4SG initiatives is the laborious process of problem scoping -- a complex and resource-intensive task -- due to a scarcity of professionals with both technical and domain expertise. Given the remarkable applications of large language models (LLMs), we propose a Problem Scoping Agent (PSA) that uses an LLM to generate comprehensive project proposals grounded in scientific literature and real-world knowledge. We demonstrate that our PSA framework generates proposals comparable to those written by experts through a blind review and AI evaluations. Finally, we document the challenges of real-world problem scoping and note several areas for future work.

Aequitas Flow: Streamlining Fair ML Experimentation

May 09, 2024

Abstract:Aequitas Flow is an open-source framework for end-to-end Fair Machine Learning (ML) experimentation in Python. This package fills the integration gaps that other Fair ML packages leave in supporting complete and accessible experimentation. It provides a pipeline for fairness-aware model training, hyperparameter optimization, and evaluation, enabling rapid and simple experiments and result analysis. Aimed at ML practitioners and researchers, the framework offers implementations of methods, datasets, metrics, and standard interfaces for these components to improve extensibility. By facilitating the development of fair ML practices, Aequitas Flow seeks to enhance the adoption of these concepts in AI technologies.

Preventing Eviction-Caused Homelessness through ML-Informed Distribution of Rental Assistance

Mar 19, 2024

Abstract:Rental assistance programs provide individuals with financial assistance to prevent housing instabilities caused by evictions and avert homelessness. Since these programs operate under resource constraints, they must decide whom to prioritize. Typically, funding is distributed by a reactive or first-come, first-served allocation process that does not systematically consider risk of future homelessness. We partnered with Allegheny County, PA to explore a proactive allocation approach that prioritizes individuals facing eviction based on their risk of future homelessness. Our ML system, which uses state and county administrative data to accurately identify individuals in need of support, outperforms simpler prioritization approaches by at least 20% while being fair and equitable across race and gender. Furthermore, our approach would identify 28% of individuals who are overlooked by the current process and end up homeless. Beyond improvements to the rental assistance program in Allegheny County, this study can inform the development of evidence-based decision support tools in similar contexts, including lessons about data needs, model design, evaluation, and field validation.

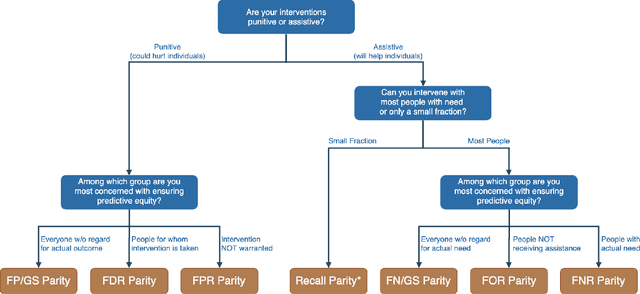

Toward Operationalizing Pipeline-aware ML Fairness: A Research Agenda for Developing Practical Guidelines and Tools

Sep 29, 2023

Abstract:While algorithmic fairness is a thriving area of research, in practice, mitigating issues of bias often gets reduced to enforcing an arbitrarily chosen fairness metric, either by enforcing fairness constraints during the optimization step, post-processing model outputs, or by manipulating the training data. Recent work has called on the ML community to take a more holistic approach to tackle fairness issues by systematically investigating the many design choices made through the ML pipeline, and identifying interventions that target the issue's root cause, as opposed to its symptoms. While we share the conviction that this pipeline-based approach is the most appropriate for combating algorithmic unfairness on the ground, we believe there are currently very few methods of operationalizing this approach in practice. Drawing on our experience as educators and practitioners, we first demonstrate that without clear guidelines and toolkits, even individuals with specialized ML knowledge find it challenging to hypothesize how various design choices influence model behavior. We then consult the fair-ML literature to understand the progress to date toward operationalizing the pipeline-aware approach: we systematically collect and organize the prior work that attempts to detect, measure, and mitigate various sources of unfairness through the ML pipeline. We utilize this extensive categorization of previous contributions to sketch a research agenda for the community. We hope this work serves as the stepping stone toward a more comprehensive set of resources for ML researchers, practitioners, and students interested in exploring, designing, and testing pipeline-oriented approaches to algorithmic fairness.

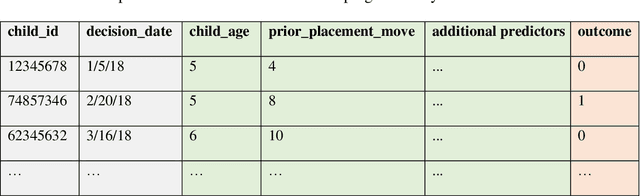

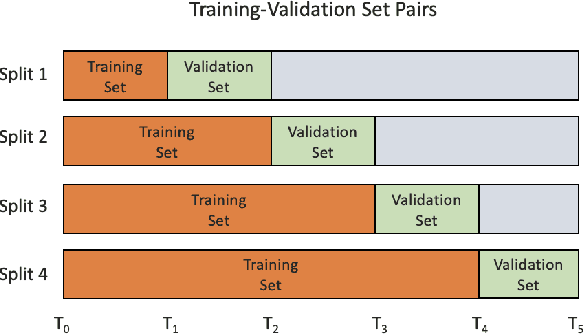

A Conceptual Framework for Using Machine Learning to Support Child Welfare Decisions

Jul 12, 2022

Abstract:Human services systems make key decisions that impact individuals in society. The U.S. child welfare system makes such decisions, from screening-in hotline reports of suspected abuse or neglect for child protective investigations, placing children in foster care, to returning children to permanent home settings. These complex and impactful decisions on children's lives rely on the judgment of child welfare decisionmakers. Child welfare agencies have been exploring ways to support these decisions with empirical, data-informed methods that include machine learning (ML). This paper describes a conceptual framework for ML to support child welfare decisions. The ML framework guides how child welfare agencies might conceptualize a target problem that ML can solve; vet available administrative data for building ML; formulate and develop ML specifications that mirror relevant populations and interventions the agencies are undertaking; and deploy, evaluate, and monitor ML as child welfare context, policy, and practice change over time. Ethical considerations, stakeholder engagement, and avoidance of common pitfalls underpin the framework's impact and success. From abstract to concrete, we describe one application of this framework to support a child welfare decision. This ML framework, though child welfare-focused, is generalizable to solving other public policy problems.

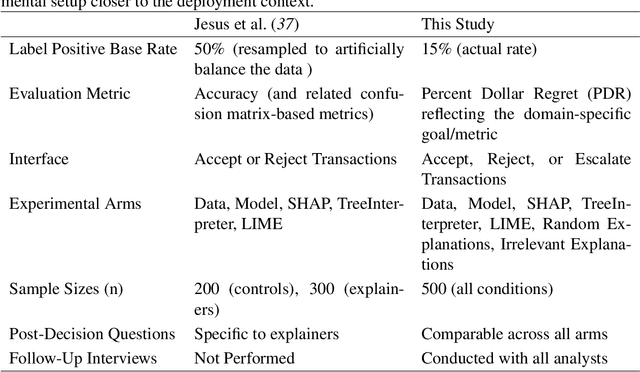

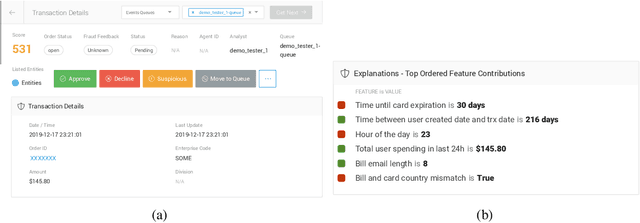

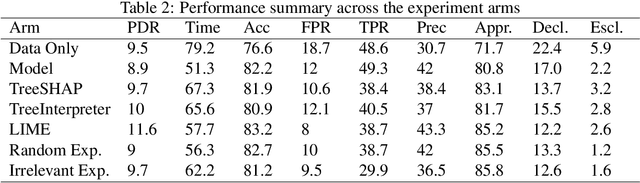

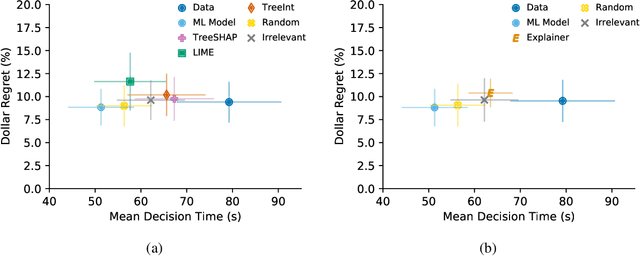

On the Importance of Application-Grounded Experimental Design for Evaluating Explainable ML Methods

Jun 30, 2022

Abstract:Machine Learning (ML) models now inform a wide range of human decisions, but using "black box" models carries risks such as relying on spurious correlations or errant data. To address this, researchers have proposed methods for supplementing models with explanations of their predictions. However, robust evaluations of these methods' usefulness in real-world contexts have remained elusive, with experiments tending to rely on simplified settings or proxy tasks. We present an experimental study extending a prior explainable ML evaluation experiment and bringing the setup closer to the deployment setting by relaxing its simplifying assumptions. Our empirical study draws dramatically different conclusions than the prior work, highlighting how seemingly trivial experimental design choices can yield misleading results. Beyond the present experiment, we believe this work holds lessons about the necessity of situating the evaluation of any ML method and choosing appropriate tasks, data, users, and metrics to match the intended deployment contexts.

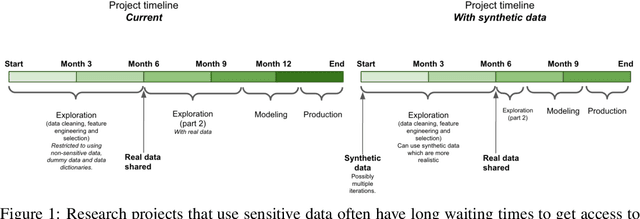

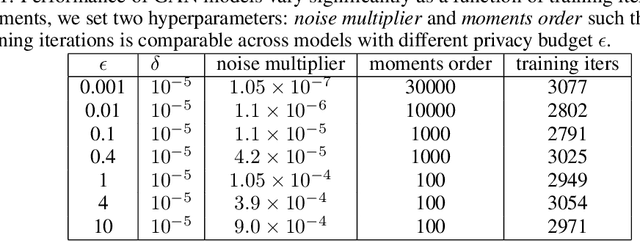

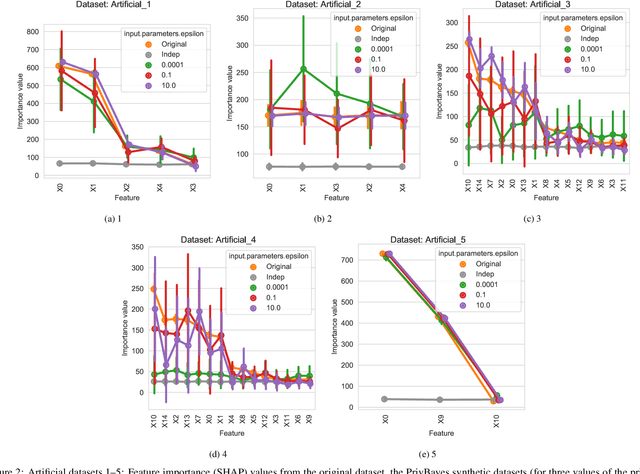

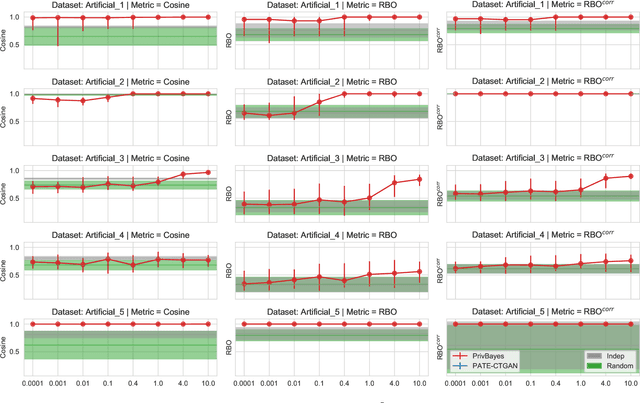

Faking feature importance: A cautionary tale on the use of differentially-private synthetic data

Mar 02, 2022

Abstract:Synthetic datasets are often presented as a silver-bullet solution to the problem of privacy-preserving data publishing. However, for many applications, synthetic data has been shown to have limited utility when used to train predictive models. One promising potential application of these data is in the exploratory phase of the machine learning workflow, which involves understanding, engineering and selecting features. This phase often involves considerable time, and depends on the availability of data. There would be substantial value in synthetic data that permitted these steps to be carried out while, for example, data access was being negotiated, or with fewer information governance restrictions. This paper presents an empirical analysis of the agreement between the feature importance obtained from raw and from synthetic data, on a range of artificially generated and real-world datasets (where feature importance represents how useful each feature is when predicting the outcome). We employ two differentially-private methods to produce synthetic data, and apply various utility measures to quantify the agreement in feature importance as this varies with the level of privacy. Our results indicate that synthetic data can sometimes preserve several representations of the ranking of feature importance in simple settings but their performance is not consistent and depends upon a number of factors. Particular caution should be exercised in more nuanced real-world settings, where synthetic data can lead to differences in ranked feature importance that could alter key modelling decisions. This work has important implications for developing synthetic versions of highly sensitive data sets in fields such as finance and healthcare.
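
As a rough illustration of what "agreement in feature importance" can mean, the sketch below compares two importance vectors by Spearman rank correlation. This is an assumption made for illustration: the abstract does not name the utility measures the paper uses, and the importance values here are invented toy numbers, not results from the paper. The helper names `ranks` and `spearman` are hypothetical.

```python
def ranks(values):
    """Return ranks where 1 is the most important feature.

    Assumes no two features share the same importance value (no ties).
    """
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result


def spearman(a, b):
    """Spearman rank correlation for two equal-length, tie-free lists."""
    n = len(a)
    rank_a, rank_b = ranks(a), ranks(b)
    d_squared = sum((x - y) ** 2 for x, y in zip(rank_a, rank_b))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Toy importances for four features (illustrative values only).
raw_importance = [0.40, 0.30, 0.20, 0.10]
synthetic_importance = [0.35, 0.15, 0.30, 0.20]

agreement = spearman(raw_importance, synthetic_importance)
self_agreement = spearman(raw_importance, raw_importance)
```

A value near 1 would indicate that the synthetic data preserves the ranking seen in the raw data, while lower values indicate that a modelling decision based on the ranking (for example, which features to keep) could differ.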

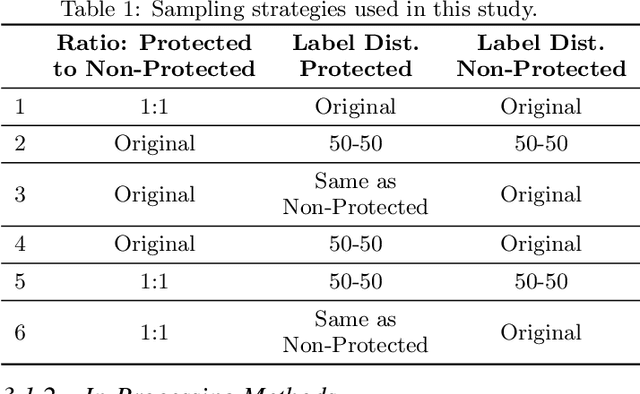

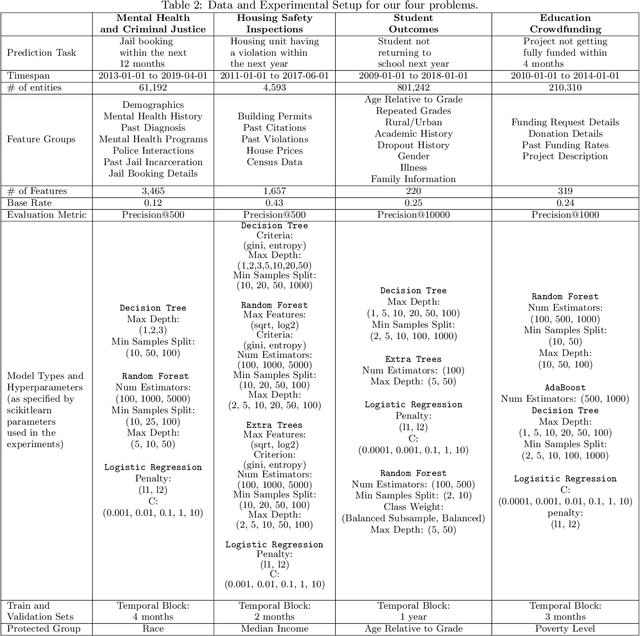

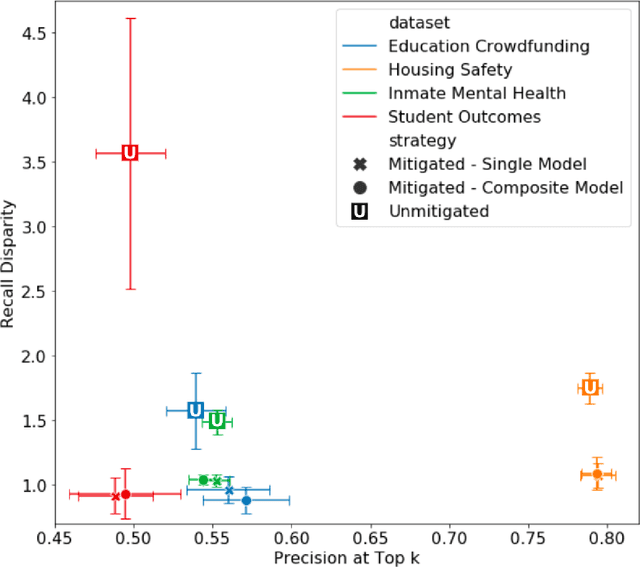

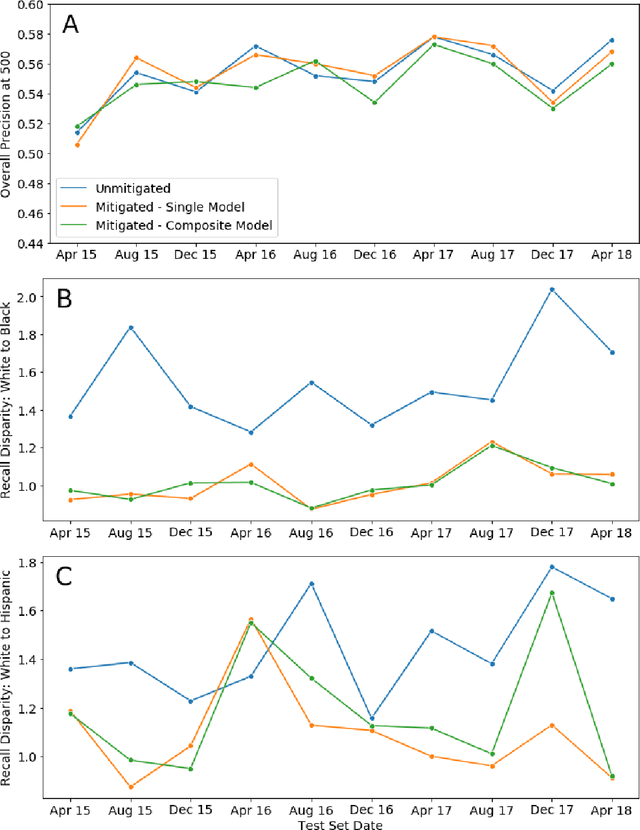

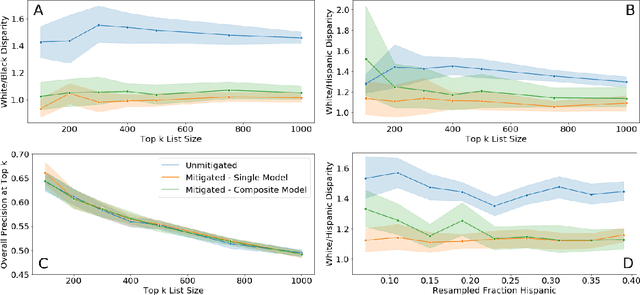

An Empirical Comparison of Bias Reduction Methods on Real-World Problems in High-Stakes Policy Settings

May 13, 2021

Abstract:Applications of machine learning (ML) to high-stakes policy settings -- such as education, criminal justice, healthcare, and social service delivery -- have grown rapidly in recent years, sparking important conversations about how to ensure fair outcomes from these systems. The machine learning research community has responded to this challenge with a wide array of proposed fairness-enhancing strategies for ML models, but despite the large number of methods that have been developed, little empirical work exists evaluating these methods in real-world settings. Here, we seek to fill this research gap by investigating the performance of several methods that operate at different points in the ML pipeline across four real-world public policy and social good problems. Across these problems, we find a wide degree of variability and inconsistency in the ability of many of these methods to improve model fairness, but post-processing by choosing group-specific score thresholds consistently removes disparities, with important implications for both the ML research community and practitioners deploying machine learning to inform consequential policy decisions.
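
The post-processing idea mentioned above, choosing a separate score threshold for each group, can be sketched as follows. This is a minimal illustration under stated assumptions rather than the paper's procedure: it assumes the disparity of interest is recall (true positive rate), and the function names, the `target_recall` parameter, and the toy data are all hypothetical.

```python
from collections import defaultdict


def recall_at_threshold(scores, labels, threshold):
    """Share of actual positives whose score is at or above the threshold."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    if not positives:
        return 0.0
    return sum(s >= threshold for s in positives) / len(positives)


def group_thresholds(scores, labels, groups, target_recall):
    """Pick, per group, the highest threshold that reaches target_recall."""
    by_group = defaultdict(list)
    for s, y, g in zip(scores, labels, groups):
        by_group[g].append((s, y))

    chosen = {}
    for g, rows in by_group.items():
        g_scores = [s for s, _ in rows]
        g_labels = [y for _, y in rows]
        # Try observed scores as candidate thresholds, highest first.
        for t in sorted(set(g_scores), reverse=True):
            if recall_at_threshold(g_scores, g_labels, t) >= target_recall:
                chosen[g] = t
                break
        else:
            # No candidate reaches the target; select everyone in the group.
            chosen[g] = min(g_scores)
    return chosen


# Toy data: the model scores group "b" systematically lower than group "a".
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

thresholds = group_thresholds(scores, labels, groups, target_recall=0.5)
```

With a single global threshold of 0.6 on this toy data, every positive in group "a" is selected and none in group "b"; the per-group thresholds instead bring both groups to at least the target recall.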

Machine learning for public policy: Do we need to sacrifice accuracy to make models fair?

Dec 05, 2020

Abstract:Growing applications of machine learning in policy settings have raised concern for fairness implications, especially for racial minorities, but little work has studied the practical trade-offs between fairness and accuracy in real-world settings. This empirical study fills this gap by investigating the accuracy cost of mitigating disparities across several policy settings, focusing on the common context of using machine learning to inform benefit allocation in resource-constrained programs across education, mental health, criminal justice, and housing safety. In each setting, explicitly focusing on achieving equity and using our proposed post-hoc disparity mitigation methods, fairness was substantially improved without sacrificing accuracy, challenging the commonly held assumption that reducing disparities either requires accepting an appreciable drop in accuracy or the development of novel, complex methods.
