Raja Giryes

School of Electrical Engineering, Tel Aviv University, Tel Aviv, Israel

Taco-VC: A Single Speaker Tacotron based Voice Conversion with Limited Data

Apr 16, 2019

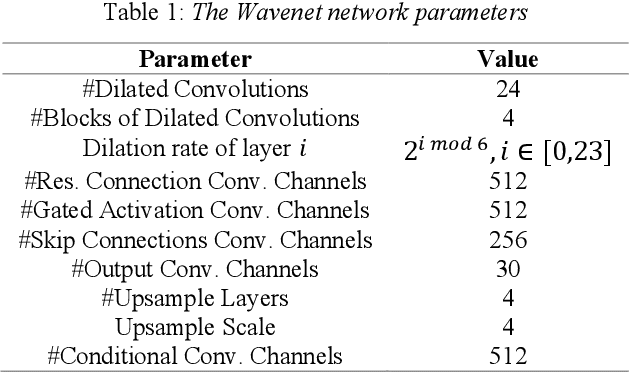

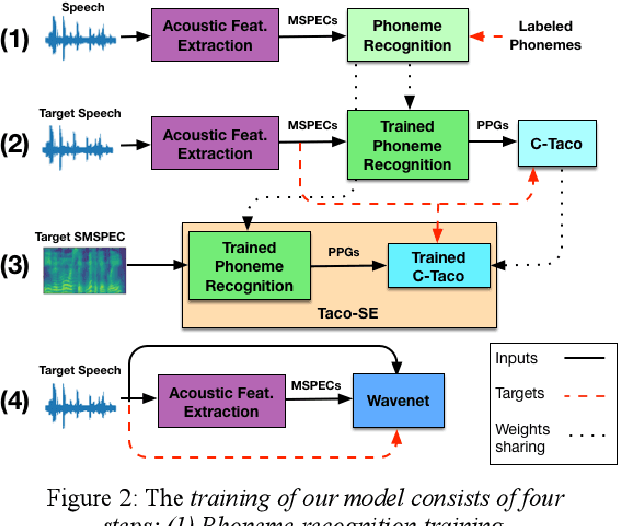

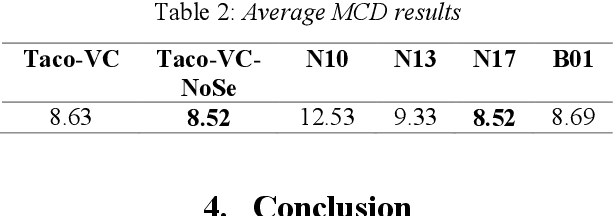

Abstract: This paper introduces Taco-VC, a novel architecture for voice conversion (VC) based on the Tacotron synthesizer, which is a sequence-to-sequence model with attention. Most current prosody-preserving VC systems suffer from target similarity and quality issues in the converted speech. To address these problems, we first recover initial prosody-preserving speech using a Phonetic Posteriorgrams (PPGs) based Tacotron synthesizer. Then, we enhance the quality of the converted speech using a novel speech-enhancement network, which is based on a combination of phoneme recognition and Tacotron networks. The final converted speech is generated by a Wavenet vocoder conditioned on Mel Spectrograms. Given the advantages of a single speaker Tacotron and Wavenet, we show how to adapt them to other speakers with limited training data. We evaluate our solution on the VCC 2018 SPOKE task. Using public mid-size datasets, our method outperforms the baseline and achieves competitive results.

ASAP: Architecture Search, Anneal and Prune

Apr 08, 2019

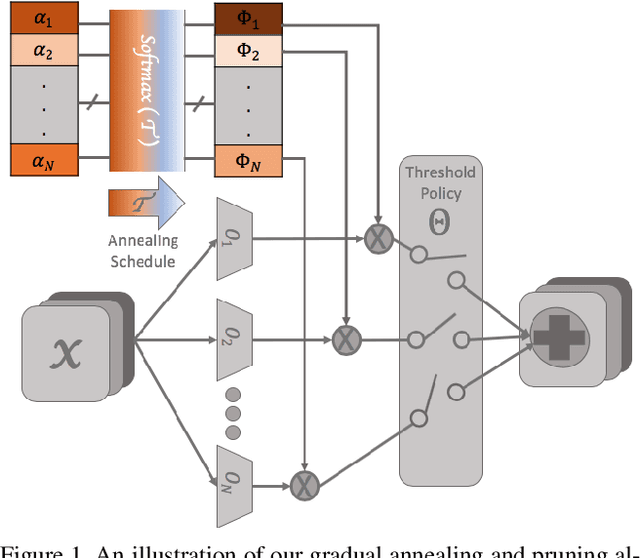

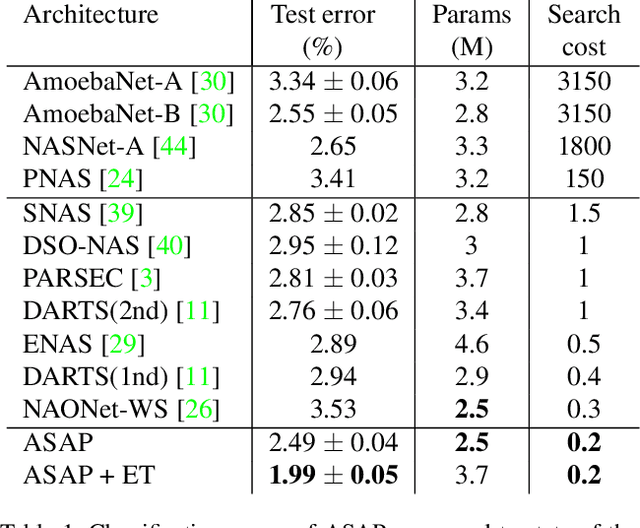

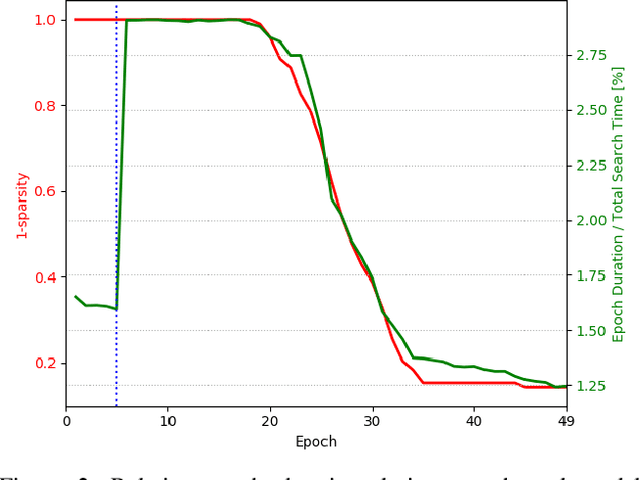

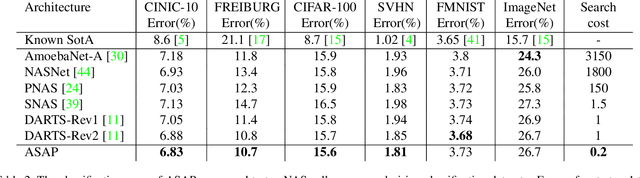

Abstract: Automatic methods for Neural Architecture Search (NAS) have been shown to produce state-of-the-art network models, yet their main drawback is the computational complexity of the search process. Because some early methods optimized over a discrete search space, thousands of GPU days were required for convergence. A recent approach is based on constructing a differentiable search space that enables gradient-based optimization, thus reducing the search time to a few days. While successful, such methods still include some discontinuous steps, e.g., the pruning of many weak connections at once. In this paper, we propose a differentiable search space that allows the annealing of architecture weights while gradually pruning inferior operations, so that the search converges to a single output network in a continuous manner. Experiments on several vision datasets demonstrate the effectiveness of our method with respect to the search cost, accuracy and the memory footprint of the resulting model.
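The idea of annealing architecture weights while gradually pruning weak operations can be illustrated with a toy sketch. This is not the authors' implementation: the function names, the temperature schedule (`t_start`, `decay`) and the pruning `threshold` are hypothetical, and the architecture weights are held fixed here instead of being learned by gradient descent.

```python
import math

def softmax_with_temperature(alphas, temperature):
    """Softmax over architecture weights; a lower temperature sharpens it."""
    m = max(alphas)
    exps = [math.exp((a - m) / temperature) for a in alphas]
    total = sum(exps)
    return [e / total for e in exps]

def anneal_and_prune(alphas, t_start=1.0, decay=0.7, threshold=0.05, max_steps=50):
    """Lower the temperature step by step and drop operations whose
    probability falls below `threshold` (all values are illustrative)."""
    ops = dict(alphas)  # operation name -> architecture weight
    temperature = t_start
    for _ in range(max_steps):
        if len(ops) == 1:
            break
        names = list(ops)
        probs = softmax_with_temperature([ops[n] for n in names], temperature)
        for name, p in zip(names, probs):
            # Always keep at least one operation.
            if p < threshold and len(ops) > 1:
                del ops[name]
        temperature *= decay
    return list(ops)

# Hypothetical candidate operations on one edge of the search space.
survivors = anneal_and_prune(
    {"conv3x3": 1.2, "conv5x5": 0.9, "max_pool": 0.1, "skip": 0.4}
)
print(survivors)
```

In this toy run the weakest operations are removed one at a time as the temperature drops, rather than all at once at the end of the search.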

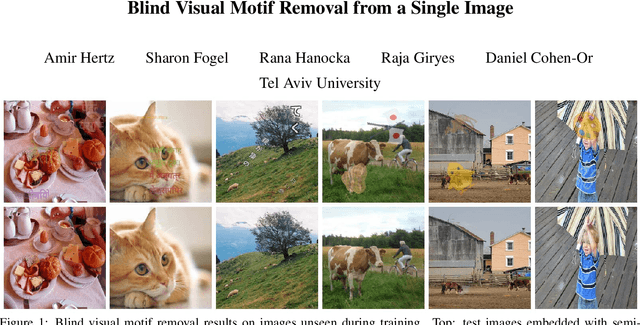

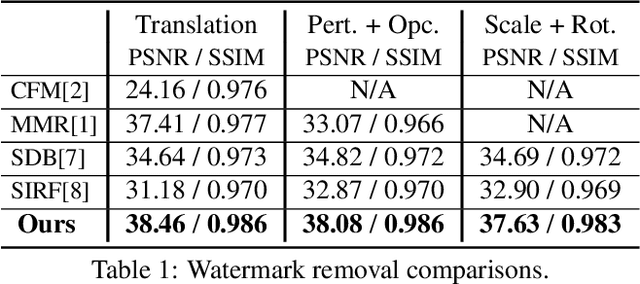

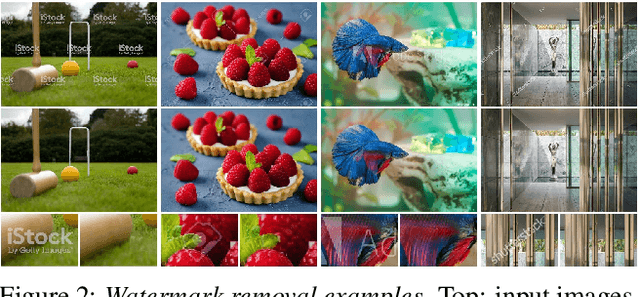

Blind Visual Motif Removal from a Single Image

Apr 04, 2019

Abstract: Many images shared over the web include overlaid objects, or visual motifs, such as text, symbols or drawings, which add a description or decoration to the image. For example, decorative text that specifies where the image was taken repeatedly appears across a variety of different images. Often, the recurring visual motif is semantically similar yet differs in location, style and content (e.g., text placement, font and letters). This work proposes a deep learning based technique for blind removal of such objects. In the blind setting, the location and exact geometry of the motif are unknown. Our approach simultaneously estimates which pixels contain the visual motif and synthesizes the underlying latent image. It is applied to a single input image, without any user assistance in specifying the location of the motif, achieving state-of-the-art results for blind removal of both opaque and semi-transparent visual motifs.

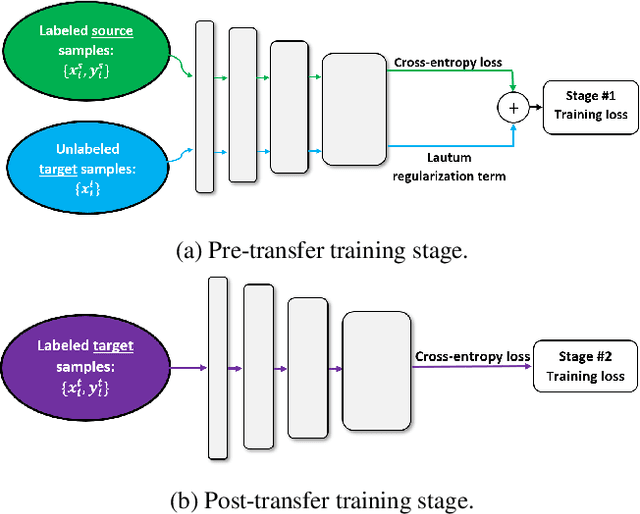

Lautum Regularization for Semi-supervised Transfer Learning

Apr 02, 2019

Abstract: Transfer learning is a very important tool in deep learning as it allows propagating information from one "source dataset" to another "target dataset", especially in the case of a small number of training examples in the latter. Yet, discrepancies between the underlying distributions of the source and target data are commonplace and are known to have a substantial impact on algorithm performance. In this work we suggest a novel information theoretic approach for the analysis of the performance of deep neural networks in the context of transfer learning. We focus on the task of semi-supervised transfer learning, in which unlabeled samples from the target dataset are available during the network training on the source dataset. Our theory suggests that one may improve the transferability of a deep neural network by imposing a Lautum information based regularization that relates the network weights to the target data. We demonstrate in various transfer learning experiments the effectiveness of the proposed approach.
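For readers unfamiliar with the term, Lautum information (as defined by Palomar and Verdú) swaps the arguments of the KL divergence that defines mutual information: $L(X;Y) = D(P_X P_Y \| P_{XY})$. The sketch below only computes this quantity for a small discrete joint distribution. It does not reproduce the regularizer from the paper, and the function names and example tables are illustrative assumptions.

```python
import math

def marginals(joint):
    """Row and column marginals of a joint probability table."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return px, py

def mutual_information(joint):
    """I(X;Y) = D(P_XY || P_X P_Y), in nats."""
    px, py = marginals(joint)
    return sum(p * math.log(p / (px[i] * py[j]))
               for i, row in enumerate(joint)
               for j, p in enumerate(row) if p > 0)

def lautum_information(joint):
    """L(X;Y) = D(P_X P_Y || P_XY), in nats.

    Assumes every entry of `joint` is strictly positive.
    """
    px, py = marginals(joint)
    return sum(px[i] * py[j] * math.log(px[i] * py[j] / p)
               for i, row in enumerate(joint)
               for j, p in enumerate(row))

dependent = [[0.4, 0.1], [0.1, 0.4]]
independent = [[0.25, 0.25], [0.25, 0.25]]
print(lautum_information(dependent), mutual_information(dependent))
print(lautum_information(independent))
```

Both quantities vanish when the variables are independent and are positive otherwise, but they generally take different values for the same joint distribution.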

LaSO: Label-Set Operations networks for multi-label few-shot learning

Feb 26, 2019

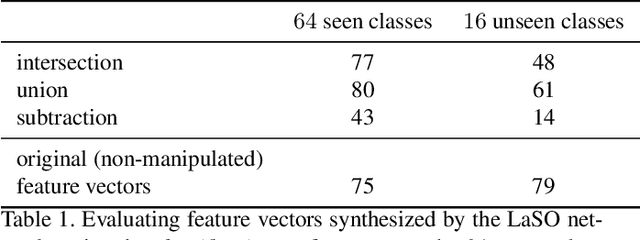

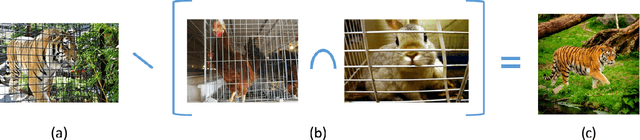

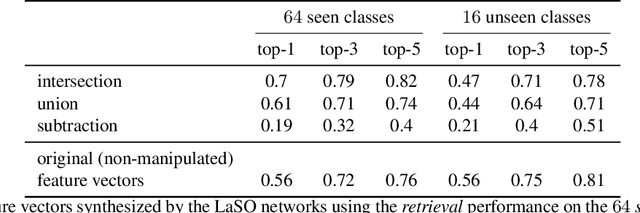

Abstract: Example synthesis is one of the leading methods to tackle the problem of few-shot learning, where only a small number of samples per class are available. However, current synthesis approaches only address the scenario of a single category label per image. In this work, we propose a novel technique for synthesizing samples with multiple labels for the (yet unhandled) multi-label few-shot classification scenario. We propose to combine pairs of given examples in feature space, so that the resulting synthesized feature vectors will correspond to examples whose label sets are obtained through certain set operations on the label sets of the corresponding input pairs. Thus, our method is capable of producing a sample containing the intersection, union or set-difference of labels present in two input samples. As we show, these set operations generalize to labels unseen during training. This enables performing augmentation on examples of novel categories, thus facilitating multi-label few-shot classifier learning. We conduct numerous experiments showing promising results for the label-set manipulation capabilities of the proposed approach, both directly (using the classification and retrieval metrics) and in the context of performing data augmentation for multi-label few-shot learning. We propose a benchmark for this new and challenging task and show that our method compares favorably to all the common baselines.
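At the label level, the three operations are ordinary set operations. The sketch below only shows which label set each synthesized feature vector is expected to correspond to. The networks that actually combine the feature vectors are not modeled, and the function name and example labels are hypothetical.

```python
def expected_label_sets(labels_a, labels_b):
    """Label sets that a synthesized example should carry for each operation."""
    a, b = set(labels_a), set(labels_b)
    return {
        "intersection": a & b,
        "union": a | b,
        "difference": a - b,  # labels in the first example but not the second
    }

# Two hypothetical multi-label images.
targets = expected_label_sets({"person", "dog", "leash"}, {"dog", "bicycle"})
for name, labels in targets.items():
    print(name, sorted(labels))
```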

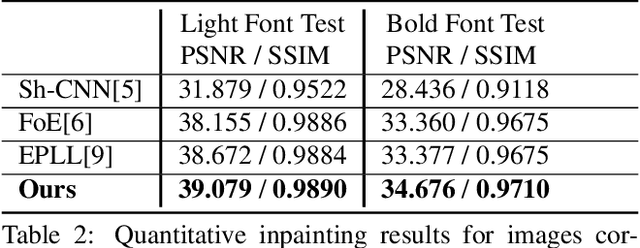

A Greedy Approach to $\ell_{0,\infty}$ Based Convolutional Sparse Coding

Dec 26, 2018

Abstract: Sparse coding techniques for image processing traditionally rely on processing small overlapping patches separately, followed by averaging. This has the disadvantage that the reconstructed image no longer obeys the sparsity prior used in the processing. To address this, convolutional sparse coding has been introduced, where a shift-invariant dictionary is used and the sparsity of the recovered image is maintained. Most such strategies target the $\ell_0$ "norm" or the $\ell_1$ norm of the whole image, which may create an imbalanced sparsity across various regions in the image. To meet this challenge, the $\ell_{0,\infty}$ "norm" has been proposed as an alternative that "operates locally while thinking globally". The approaches taken for tackling the non-convexity of these optimization problems have used either a convex relaxation or local pursuit algorithms. In this paper, we present an efficient greedy method for sparse coding and dictionary learning, which is specifically tailored to $\ell_{0,\infty}$ and is based on matching pursuit. We demonstrate the usage of our approach in salt-and-pepper noise removal and image inpainting. A code package which reproduces the experiments presented in this work is available at https://web.eng.tau.ac.il/~raja
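As background, here is a minimal sketch of plain matching pursuit, the generic greedy algorithm that the proposed method builds on. It is not the $\ell_{0,\infty}$ variant from the paper and is not convolutional. The dictionary, signal and function names are illustrative assumptions, and the atoms are assumed to have unit norm.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matching_pursuit(signal, atoms, n_nonzero):
    """Greedy sparse coding: repeatedly pick the atom most correlated with
    the residual and subtract its contribution. `atoms` is a list of
    unit-norm vectors."""
    residual = list(signal)
    coeffs = [0.0] * len(atoms)
    for _ in range(n_nonzero):
        correlations = [dot(residual, atom) for atom in atoms]
        k = max(range(len(atoms)), key=lambda i: abs(correlations[i]))
        if abs(correlations[k]) < 1e-12:
            break  # residual is (numerically) fully explained
        coeffs[k] += correlations[k]
        residual = [r - correlations[k] * a for r, a in zip(residual, atoms[k])]
    return coeffs, residual

# Toy dictionary: the three coordinate axes plus one diagonal atom.
atoms = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.6, 0.8, 0.0]]
signal = [3.0, 4.0, 0.0]  # equals 5 times the last atom
coeffs, residual = matching_pursuit(signal, atoms, n_nonzero=2)
print(coeffs, residual)
```

In this example a single atom explains the signal, so the greedy loop stops after one selection even though two were allowed.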

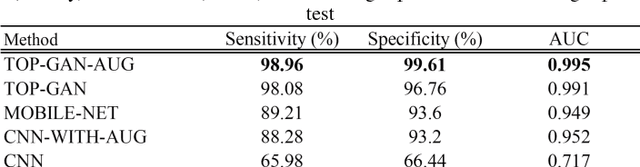

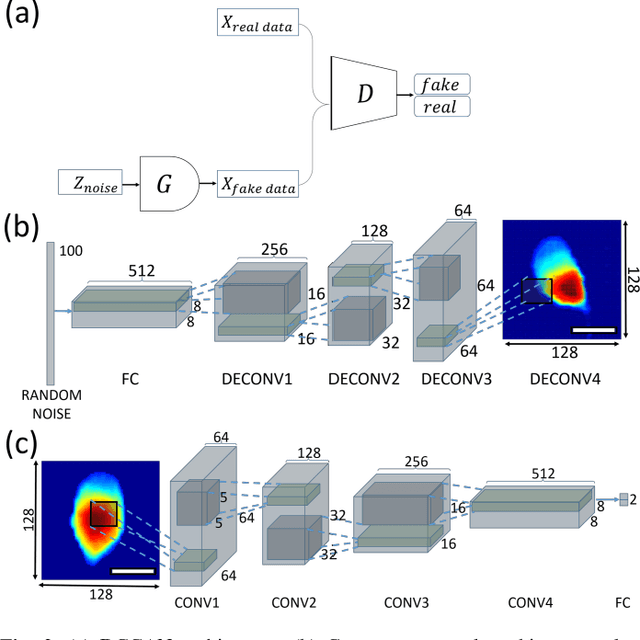

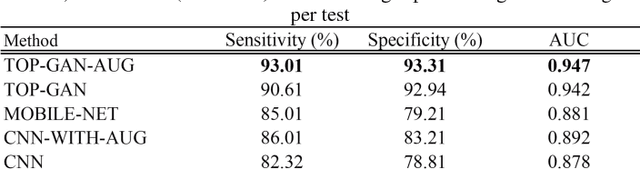

TOP-GAN: Label-Free Cancer Cell Classification Using Deep Learning with a Small Training Set

Dec 17, 2018

Abstract: We propose a new deep learning approach for medical imaging that copes with the problem of a small training set, the main bottleneck of deep learning, and apply it to the classification of healthy and cancer cells acquired by quantitative phase imaging. The proposed method, called transferring of pre-trained generative adversarial network (TOP-GAN), is a hybridization between transfer learning and generative adversarial networks (GANs). Healthy cells and cancer cells of different metastatic potential have been imaged by low-coherence off-axis holography. After the acquisition, the optical path delay maps of the cells have been extracted and directly used as an input to the deep networks. In order to cope with the small number of classified images, we have used GANs to train on a large number of unclassified images from another cell type (sperm cells). After this preliminary training, and after replacing the last layers of the network with new ones, we have designed an automatic classifier for the correct cell type (healthy/primary cancer/metastatic cancer) with 90-99% accuracy, even though training sets as small as several images have been used. These results are better than those of other classic methods that aim at coping with the same problem of a small training set. We believe that our approach makes the combination of holographic microscopy and deep learning networks more accessible to the medical field by enabling a rapid, automatic and accurate classification in stain-free imaging flow cytometry. Furthermore, our approach is expected to be applicable to many other medical image classification tasks that suffer from a small training set.

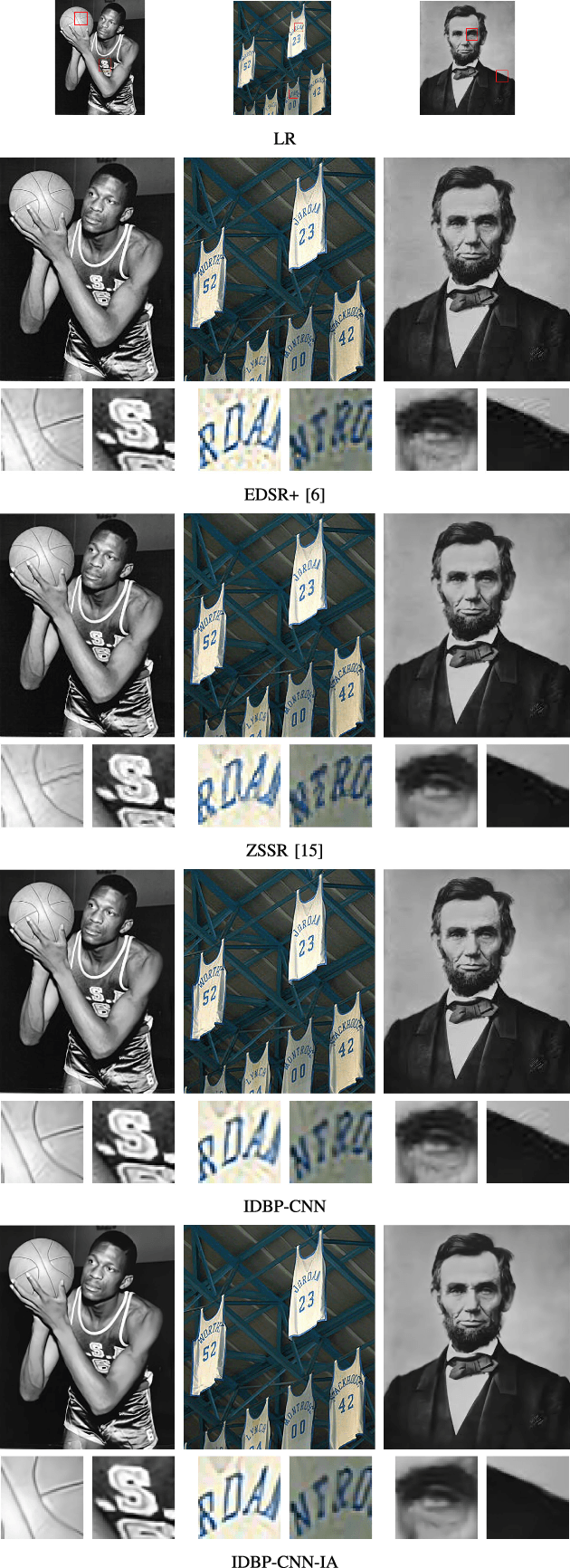

Super-Resolution based on Image-Adapted CNN Denoisers: Incorporating Generalization of Training Data and Internal Learning in Test Time

Nov 30, 2018

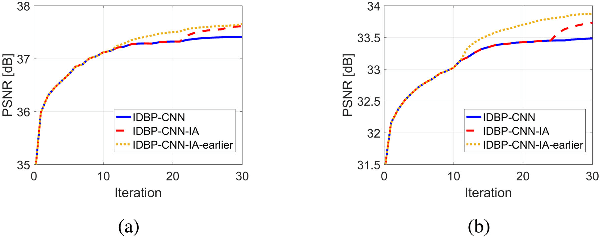

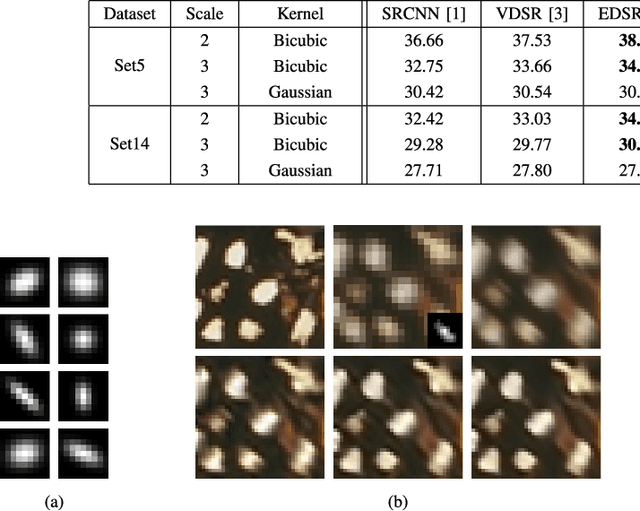

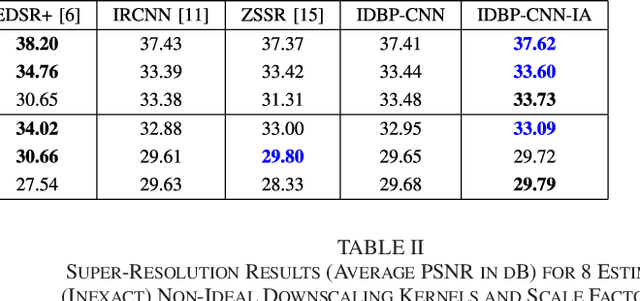

Abstract: While deep neural networks exhibit state-of-the-art results in the task of image super-resolution (SR) with a fixed known acquisition process (e.g., a bicubic downscaling kernel), they experience a huge performance loss when the real observation model mismatches the one used in training. Recently, two different techniques have been suggested to mitigate this deficiency, i.e., to enjoy the advantages of deep learning without being restricted by the prior training. The first one follows the plug-and-play (P&P) approach that solves general inverse problems (e.g., SR) by plugging Gaussian denoisers into model-based optimization schemes. The second builds on internal recurrence of information inside a single image, and trains a super-resolver network at test time on examples synthesized from the low-resolution image. Our work incorporates the two strategies, enjoying the impressive generalization capabilities of deep learning, captured by the first, and further improving it through internal learning at test time. First, we apply a recent P&P strategy to SR. Then, we show how it may become image-adaptive at test time. This technique outperforms the above two strategies on popular datasets and gives better results than other state-of-the-art methods on real images.

Efficient non-uniform quantizer for quantized neural network targeting reconfigurable hardware

Nov 27, 2018

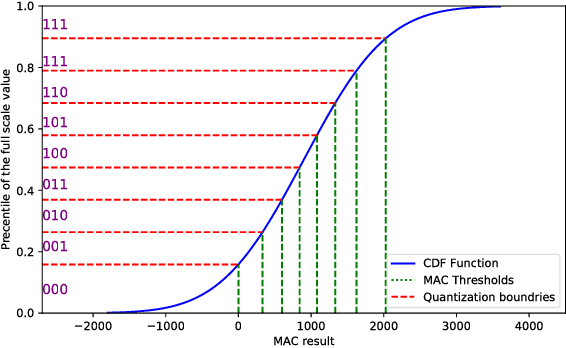

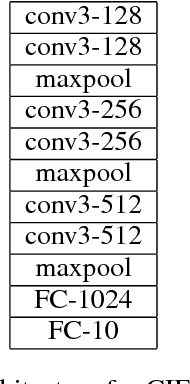

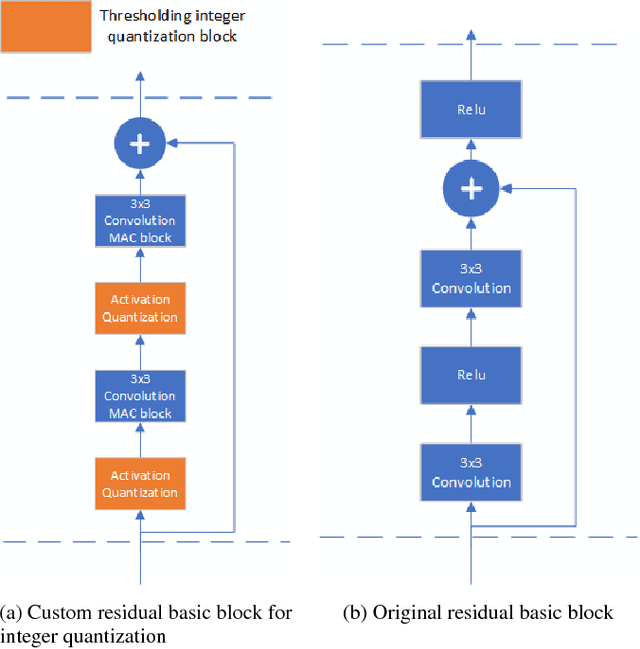

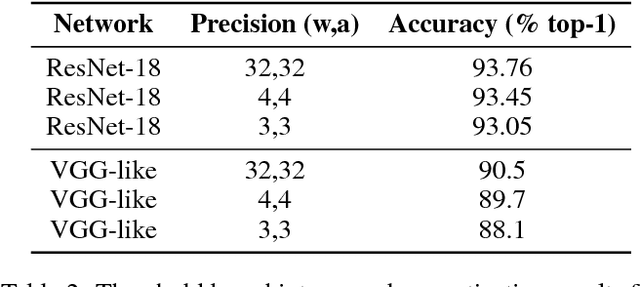

Abstract: Convolutional Neural Networks (CNNs) have become a popular choice for various tasks such as computer vision, speech recognition and natural language processing. Thanks to their large computational capability and throughput, GPUs, which are not power efficient and therefore do not suit low-power systems such as mobile devices, are the most common platform for both training and inference tasks. Recent studies have shown that FPGAs can provide a good alternative to GPUs as CNN accelerators, due to their reconfigurable nature, low power and small latency. In order for FPGA-based accelerators to outperform GPUs in inference tasks, both the parameters of the network and the activations must be quantized. While most works use uniform quantizers for both parameters and activations, a uniform quantizer is not always optimal, and a non-uniform quantizer needs to be considered. In this work we introduce a custom hardware-friendly approach to implement non-uniform quantizers. In addition, we use a single-scale integer representation of both parameters and activations, for both training and inference. The combined method yields a hardware-efficient non-uniform quantizer, fit for real-time applications. We have tested our method on the CIFAR-10 and CIFAR-100 image classification datasets with ResNet-18 and VGG-like architectures, and saw little degradation in accuracy.
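To illustrate why a non-uniform quantizer can help, the sketch below compares a uniform and a non-uniform 2-bit codebook on toy values concentrated around zero. It is a generic nearest-level quantizer, not the hardware-friendly scheme proposed in the paper. The codebooks, the toy weights and the function names are all assumptions chosen for illustration.

```python
from bisect import bisect_left

def quantize(value, levels):
    """Map `value` to the nearest entry of the sorted list `levels`."""
    i = bisect_left(levels, value)
    if i == 0:
        return levels[0]
    if i == len(levels):
        return levels[-1]
    lo, hi = levels[i - 1], levels[i]
    return lo if value - lo <= hi - value else hi

def mean_squared_error(values, levels):
    return sum((v - quantize(v, levels)) ** 2 for v in values) / len(values)

# Hypothetical 2-bit codebooks (4 levels each) on the range [-1, 1].
uniform_levels = [-0.75, -0.25, 0.25, 0.75]
nonuniform_levels = [-0.6, -0.1, 0.1, 0.6]  # denser near zero

# Toy weights, assumed to be concentrated around zero.
weights = [-0.7, -0.15, -0.1, -0.05, 0.0, 0.05, 0.1, 0.12, 0.2, 0.65]

print(mean_squared_error(weights, uniform_levels))
print(mean_squared_error(weights, nonuniform_levels))
```

For these toy values, placing more levels where the values cluster gives a lower quantization error at the same bit width.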

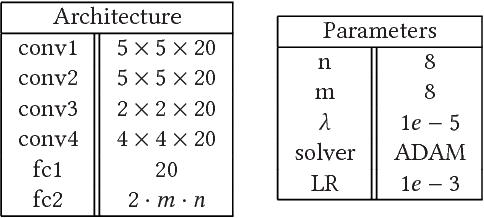

ALIGNet: Partial-Shape Agnostic Alignment via Unsupervised Learning

Oct 30, 2018

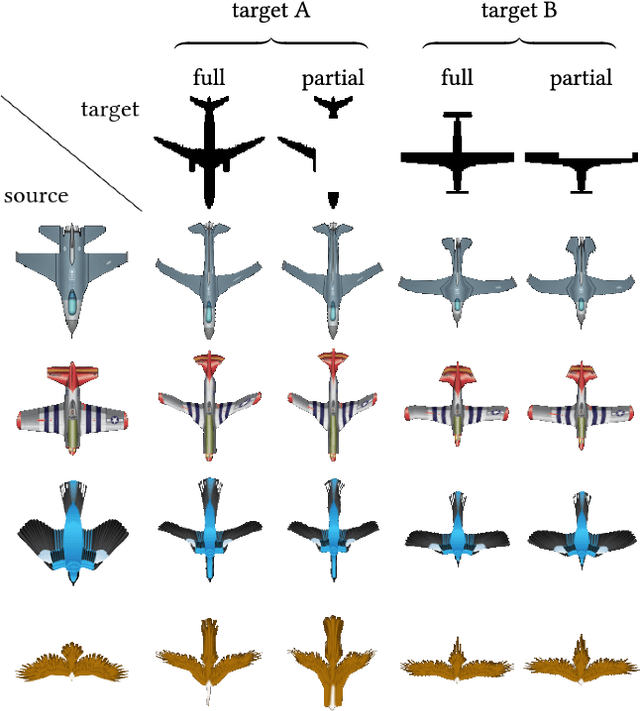

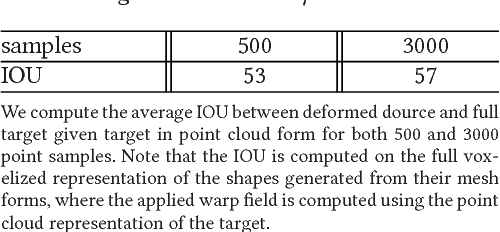

Abstract: The process of aligning a pair of shapes is a fundamental operation in computer graphics. Traditional approaches rely heavily on matching corresponding points or features to guide the alignment, a paradigm that falters when significant shape portions are missing. These techniques generally do not incorporate prior knowledge about expected shape characteristics, which can help compensate for any misleading cues left by inaccuracies exhibited in the input shapes. We present an approach based on a deep neural network, leveraging shape datasets to learn a shape-aware prior for source-to-target alignment that is robust to shape incompleteness. In the absence of ground truth alignments for supervision, we train a network on the task of shape alignment using incomplete shapes generated from full shapes for self-supervision. Our network, called ALIGNet, is trained to warp complete source shapes to incomplete targets, as if the target shapes were complete, thus essentially rendering the alignment partial-shape agnostic. We aim for the network to develop specialized expertise over the common characteristics of the shapes in each dataset, thereby achieving a higher-level understanding of the expected shape space to which a local approach would be oblivious. We constrain ALIGNet through an anisotropic total variation identity regularization to promote piecewise smooth deformation fields, facilitating both partial-shape agnosticism and post-deformation applications. We demonstrate that ALIGNet learns to align geometrically distinct shapes, and is able to infer plausible mappings even when the target shape is significantly incomplete. We show that our network learns the common expected characteristics of shape collections, without over-fitting or memorization, enabling it to produce plausible deformations on unseen data during test time.
