Radu Grosu

TU Wien

MMDVS-LF: A Multi-Modal Dynamic-Vision-Sensor Line Following Dataset

Sep 26, 2024

Abstract: Dynamic Vision Sensors (DVS) offer a unique advantage in control applications due to their high temporal resolution and asynchronous event-based data. Still, their adoption in machine learning algorithms remains limited. To address this gap and promote the development of models that leverage the specific characteristics of DVS data, we introduce the Multi-Modal Dynamic-Vision-Sensor Line Following dataset (MMDVS-LF). This comprehensive dataset is the first to integrate multiple sensor modalities, including DVS recordings, RGB video, odometry, and Inertial Measurement Unit (IMU) data, from a small-scale standardized vehicle. Additionally, the dataset includes eye-tracking and demographic data of drivers performing a Line Following task on a track. With its diverse range of data, MMDVS-LF opens new opportunities for developing deep learning algorithms and conducting data science projects across various domains, supporting innovation in autonomous systems and control applications.

Segmentation of Prostate Tumour Volumes from PET Images is a Different Ball Game

Jul 15, 2024

Abstract: Accurate segmentation of prostate tumours from PET images presents a formidable challenge in medical image analysis. Despite considerable work and improvement in delineating organs from CT and MR modalities, the existing standards do not transfer well to PET-related tasks and fail to produce quality results there. In particular, contemporary methods fail to accurately consider the intensity-based scaling applied by physicians during manual annotation of tumour contours. In this paper, we observe that the prostate-localised uptake threshold ranges are beneficial for suppressing outliers. Therefore, we utilize the intensity threshold values to implement a new custom-feature-clipping normalisation technique. We evaluate multiple established U-Net variants under different normalisation schemes, using the nnU-Net framework. All models were trained and tested on multiple datasets, obtained with two radioactive tracers: [68-Ga]Ga-PSMA-11 and [18-F]PSMA-1007. Our results show that the U-Net models achieve much better performance when the PET scans are preprocessed with our novel clipping technique.
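The abstract does not spell out the clipping procedure, so the following is only a generic clip-then-rescale sketch of intensity normalisation, not the authors' technique. The function name `clip_normalise`, the threshold values, and the sample intensities are all hypothetical.

```python
import numpy as np

def clip_normalise(volume, lower, upper):
    """Clip intensities to [lower, upper], then rescale linearly to [0, 1]."""
    if upper <= lower:
        raise ValueError("upper must be greater than lower")
    clipped = np.clip(volume.astype(np.float32), lower, upper)
    return (clipped - lower) / (upper - lower)

# Hypothetical uptake values and thresholds, for illustration only.
pet = np.array([0.0, 2.5, 5.0, 40.0])
normalised = clip_normalise(pet, lower=0.0, upper=10.0)
```

Clipping before rescaling keeps a single extreme voxel (here the value 40.0) from compressing the rest of the intensity range.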

Automated Immunophenotyping Assessment for Diagnosing Childhood Acute Leukemia using Set-Transformers

Jun 26, 2024

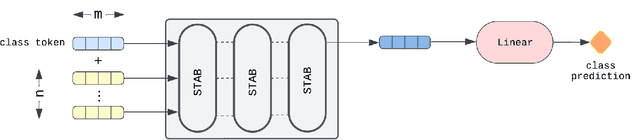

Abstract: Acute Leukemia is the most common hematologic malignancy in children and adolescents. A key methodology in the diagnostic evaluation of this malignancy is immunophenotyping based on Multiparameter Flow Cytometry (FCM). However, this approach is manual, and thus time-consuming and subjective. To alleviate this situation, we propose the FCM-Former, a machine learning, self-attention-based FCM diagnostic tool that automates the immunophenotyping assessment in Childhood Acute Leukemia. The FCM-Former is trained in a supervised manner by directly using flow cytometric data. Our FCM-Former achieves an accuracy of 96.5% in assigning lineage to each sample among 960 cases of acute B-cell lymphoblastic, T-cell lymphoblastic, or acute myeloid leukemia (B-ALL, T-ALL, AML). To the best of our knowledge, the FCM-Former is the first work that automates the immunophenotyping assessment with FCM data in diagnosing pediatric Acute Leukemia.

Scenario-Based Curriculum Generation for Multi-Agent Autonomous Driving

Mar 26, 2024

Abstract: The automated generation of diverse and complex training scenarios has been an important ingredient in many complex learning tasks. Especially in real-world application domains, such as autonomous driving, auto-curriculum generation is considered vital for obtaining robust and general policies. However, crafting traffic scenarios with multiple, heterogeneous agents is typically considered a tedious and time-consuming task, especially in more complex simulation environments. In our work, we introduce MATS-Gym, a Multi-Agent Traffic Scenario framework to train agents in CARLA, a high-fidelity driving simulator. MATS-Gym is a multi-agent training framework for autonomous driving that uses partial scenario specifications to generate traffic scenarios with variable numbers of agents. This paper unifies various existing approaches to traffic scenario description into a single training framework and demonstrates how it can be integrated with techniques from unsupervised environment design to automate the generation of adaptive auto-curricula. The code is available at https://github.com/AutonomousDrivingExaminer/mats-gym.

Unveiling the Unseen: Identifiable Clusters in Trained Depthwise Convolutional Kernels

Jan 25, 2024

Abstract: Recent advances in depthwise-separable convolutional neural networks (DS-CNNs) have led to novel architectures that surpass the performance of classical CNNs by a considerable scalability and accuracy margin. This paper reveals another striking property of DS-CNN architectures: discernible and explainable patterns emerge in their trained depthwise convolutional kernels in all layers. Through an extensive analysis of millions of trained filters, with different sizes and from various models, we employed unsupervised clustering with autoencoders to categorize these filters. Astonishingly, the patterns converged into a few main clusters, each resembling the difference of Gaussian (DoG) functions, and their first and second-order derivatives. Notably, we were able to classify over 95% and 90% of the filters from state-of-the-art ConvNeXtV2 and ConvNeXt models, respectively. This finding is not merely a technological curiosity; it echoes the foundational models neuroscientists have long proposed for the vision systems of mammals. Our results thus deepen our understanding of the emergent properties of trained DS-CNNs and provide a bridge between artificial and biological visual processing systems. More broadly, they pave the way for more interpretable and biologically-inspired neural network designs in the future.
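For readers unfamiliar with the difference-of-Gaussians shape mentioned in the abstract, a minimal sketch of a centre-surround DoG kernel follows. It illustrates the function family only, not the paper's clustering pipeline; the helper names, the 7×7 size, and the two standard deviations are assumptions chosen for illustration.

```python
import numpy as np

def gaussian_2d(size, sigma):
    """Return a size x size Gaussian kernel normalised to sum to 1."""
    half = (size - 1) / 2.0
    coords = np.arange(size) - half
    xx, yy = np.meshgrid(coords, coords)
    g = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return g / g.sum()

def dog_kernel(size, sigma_centre, sigma_surround):
    """Narrow Gaussian minus wide Gaussian: positive centre, negative surround."""
    return gaussian_2d(size, sigma_centre) - gaussian_2d(size, sigma_surround)

# Hypothetical size and standard deviations, for illustration only.
kernel = dog_kernel(size=7, sigma_centre=1.0, sigma_surround=2.0)
```

Because both Gaussians are normalised before subtraction, the kernel sums to approximately zero, so it responds to local contrast rather than to uniform brightness.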

Neural Echos: Depthwise Convolutional Filters Replicate Biological Receptive Fields

Jan 18, 2024

Abstract: In this study, we present evidence suggesting that depthwise convolutional kernels are effectively replicating the structural intricacies of the biological receptive fields observed in the mammalian retina. We provide analytics of trained kernels from various state-of-the-art models substantiating this evidence. Inspired by this intriguing discovery, we propose an initialization scheme that draws inspiration from the biological receptive fields. Experimental analysis on the ImageNet dataset with multiple CNN architectures featuring depthwise convolutions reveals a marked enhancement in the accuracy of the learned model when initialized with biologically derived weights. This underscores the potential for biologically inspired computational models to further our understanding of vision processing systems and to improve the efficacy of convolutional networks.

Real-Time Recurrent Reinforcement Learning

Nov 08, 2023

Abstract: Recent advances in reinforcement learning for partially-observable Markov decision processes (POMDPs) rely on the biologically implausible backpropagation through time algorithm (BPTT) to perform gradient-descent optimisation. In this paper, we propose a novel reinforcement learning algorithm that makes use of random feedback local online learning (RFLO), a biologically plausible approximation of real-time recurrent learning (RTRL), to compute the gradients of the parameters of a recurrent neural network in an online manner. By combining it with TD($\lambda$), a variant of temporal-difference reinforcement learning with eligibility traces, we create a biologically plausible, recurrent actor-critic algorithm, capable of solving discrete and continuous control tasks in POMDPs. We compare BPTT, RTRL and RFLO as well as different network architectures, and find that RFLO can perform just as well as RTRL while exceeding even BPTT in terms of complexity. The proposed method, called real-time recurrent reinforcement learning (RTRRL), serves as a model of learning in biological neural networks mimicking reward pathways in the mammalian brain.
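As background for the TD($\lambda$) component named in the abstract, the sketch below shows the textbook tabular TD($\lambda$) state-value update with accumulating eligibility traces. It is not the RTRRL algorithm and involves neither RFLO nor a recurrent network; the function name, step size, discount, trace decay, and the three-state toy episode are all assumptions for illustration.

```python
import numpy as np

def td_lambda_update(values, traces, state, reward, next_state,
                     alpha=0.1, gamma=0.99, lam=0.9, done=False):
    """Apply one tabular TD(lambda) step in place and return the TD error."""
    # Bootstrap from the next state's value unless the episode has ended.
    target = reward + (0.0 if done else gamma * values[next_state])
    td_error = target - values[state]
    # Decay all traces, then bump the trace of the state just visited.
    traces *= gamma * lam
    traces[state] += 1.0
    # Every state is updated in proportion to its eligibility trace.
    values += alpha * td_error * traces
    return td_error

# Hypothetical three-state episode: 0 -> 1 -> 2, reward 1 on the last step.
values = np.zeros(3)
traces = np.zeros(3)
td_lambda_update(values, traces, state=0, reward=0.0, next_state=1)
td_lambda_update(values, traces, state=1, reward=1.0, next_state=2, done=True)
```

The decaying trace is what lets the final reward also raise the value of state 0, which was visited one step earlier.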

Learning Adaptive Safety for Multi-Agent Systems

Sep 19, 2023

Abstract: Ensuring safety in dynamic multi-agent systems is challenging due to limited information about the other agents. Control Barrier Functions (CBFs) are showing promise for safety assurance, but current methods make strong assumptions about other agents and often rely on manual tuning to balance safety, feasibility, and performance. In this work, we delve into the problem of adaptive safe learning for multi-agent systems with CBFs. We show how emergent behavior can be profoundly influenced by the CBF configuration, highlighting the necessity for a responsive and dynamic approach to CBF design. We present ASRL, a novel adaptive safe RL framework that fully automates the optimization of policy and CBF coefficients to enhance safety and long-term performance through reinforcement learning. By directly interacting with the other agents, ASRL learns to cope with diverse agent behaviours and maintains the cost violations below a desired limit. We evaluate ASRL in a multi-robot system and a competitive multi-agent racing scenario, against learning-based and control-theoretic approaches. We empirically demonstrate the efficacy and flexibility of ASRL, and assess generalization and scalability to out-of-distribution scenarios. Code and supplementary material are public online.

Enhancing Robot Learning through Learned Human-Attention Feature Maps

Aug 29, 2023

Abstract: Robust and efficient learning remains a challenging problem in robotics, in particular with complex visual inputs. Inspired by the human attention mechanism, with which we quickly process complex visual scenes and react to changes in the environment, we think that embedding auxiliary information about the focus point into robot learning would enhance the efficiency and robustness of the learning process. In this paper, we propose a novel approach to model and emulate human attention with an approximate prediction model. We then leverage this output and feed it as a structured auxiliary feature map into downstream learning tasks. We validate this idea by learning a prediction model from human-gaze recordings of manual driving in the real world. We test our approach on two learning tasks: object detection and imitation learning. Our experiments demonstrate that the inclusion of predicted human attention leads to improved robustness of the trained models to out-of-distribution samples and faster learning in low-data regime settings. Our work highlights the potential of incorporating structured auxiliary information in representation learning for robotics and opens up new avenues for research in this direction. All code and data are available online.

Prediction of Tourism Flow with Sparse Geolocation Data

Aug 28, 2023

Abstract: Modern tourism in the 21st century is facing numerous challenges. Among these, the rapidly growing number of tourists visiting space-limited regions like historical cities, museums, and bottlenecks such as bridges is one of the biggest. In this context, an accurate prediction of tourism volume and tourism flow within a certain area is critical for visitor management tasks such as sustainable treatment of the environment and prevention of overcrowding. Static flow control methods like conventional low-level controllers or limiting access to overcrowded venues have not yet solved the problem. In this paper, we empirically evaluate the performance of state-of-the-art deep-learning methods such as RNNs, GNNs, and Transformers as well as the classic statistical ARIMA method. Granular but limited data supplied by a tourism region is extended by exogenous data such as geolocation trajectories of individual tourists, weather and holidays. In the field of visitor flow prediction with sparse data, we are thereby capable of increasing the accuracy of our predictions, incorporating modern input feature handling as well as mapping geolocation data on top of discrete POI data.
