Sanja Dogramadzi

Hybrid Control Strategies for Safe and Adaptive Robot-Assisted Dressing

May 12, 2025

Abstract: Safety, reliability, and user trust are crucial in human-robot interaction (HRI), where robots must address hazards in real time. This study presents hazard-driven low-level control strategies implemented in robot-assisted dressing (RAD) scenarios, where hazards such as garment snags and user discomfort can affect task performance and user safety. The proposed control mechanisms include: (1) a Garment Snagging Control Strategy, which detects excessive forces and either seeks user intervention via a chatbot or autonomously adjusts the robot's trajectory, and (2) a User Discomfort/Pain Mitigation Strategy, which dynamically reduces velocity based on user feedback and aborts the task if necessary. We evaluated these control strategies in physical dressing trials. Results confirm that integrating force monitoring with user feedback improves safety and task continuity. The findings emphasise the need for hybrid approaches that balance autonomous intervention, user involvement, and controlled task termination, supported by bi-directional interaction and real-time user-driven adaptability, paving the way for more responsive and personalised HRI systems.
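The decision logic of the two strategies described in this abstract might be sketched as follows. This is an illustrative sketch only, not the authors' controller: the threshold values, the `Config` fields, the halving rule for velocity, and the function names are all assumptions introduced here.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    ASK_USER = "ask_user"                    # e.g. prompt the user via the chatbot
    ADJUST_TRAJECTORY = "adjust_trajectory"  # autonomous recovery
    SLOW_DOWN = "slow_down"
    ABORT = "abort"


@dataclass
class Config:
    # All values below are hypothetical placeholders, not figures from the paper.
    force_limit_n: float = 10.0      # force above which a snag is assumed
    slowdown_factor: float = 0.5     # velocity multiplier per discomfort report
    min_velocity: float = 0.01       # lower bound on commanded velocity (m/s)
    max_discomfort_reports: int = 3  # reports after which the task is aborted


def snag_response(force_n: float, user_available: bool, cfg: Config) -> Action:
    """Garment snagging: on excessive force, ask the user or adjust autonomously."""
    if force_n <= cfg.force_limit_n:
        return Action.CONTINUE
    return Action.ASK_USER if user_available else Action.ADJUST_TRAJECTORY


def discomfort_response(velocity: float, reports: int, cfg: Config):
    """Discomfort mitigation: slow down with each report, abort past the limit."""
    if reports == 0:
        return Action.CONTINUE, velocity
    if reports >= cfg.max_discomfort_reports:
        return Action.ABORT, 0.0
    slowed = velocity * cfg.slowdown_factor ** reports
    return Action.SLOW_DOWN, max(cfg.min_velocity, slowed)
```

Keeping the two responses as separate pure functions makes each hazard rule easy to test in isolation; a real controller would additionally need force filtering and timing guarantees that this sketch does not cover.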

Symbolic Runtime Verification and Adaptive Decision-Making for Robot-Assisted Dressing

Apr 22, 2025

Abstract: We present a control framework for robot-assisted dressing that augments low-level hazard response with runtime monitoring and formal verification. A parametric discrete-time Markov chain (pDTMC) models the dressing process, while Bayesian inference dynamically updates this pDTMC's transition probabilities based on sensory and user feedback. Safety constraints from hazard analysis are expressed in probabilistic computation tree logic, and symbolically verified using a probabilistic model checker. We evaluate reachability, cost, and reward trade-offs for garment-snag mitigation and escalation, enabling real-time adaptation. Our approach provides a formal yet lightweight foundation for safety-aware, explainable robotic assistance.
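To make the idea of updating a parametric Markov chain and re-checking a reachability requirement concrete, here is a toy sketch. It is not the model from the paper: the four-state chain, the Beta prior, the observation counts, and the 0.9 bound are all assumptions chosen for illustration, and the closed-form expression stands in for what a probabilistic model checker would compute symbolically.

```python
def beta_posterior_mean(alpha: float, beta: float,
                        successes: int, failures: int) -> float:
    """Posterior mean of a Bernoulli parameter under a Beta(alpha, beta) prior."""
    return (alpha + successes) / (alpha + beta + successes + failures)


def reach_done(p_snag: float, p_recover: float) -> float:
    """Probability of eventually reaching 'done' from 'dressing'.

    Hypothetical toy chain (not the paper's model):
      dressing -> snag     with p_snag
      dressing -> done     with 1 - p_snag
      snag     -> dressing with p_recover
      snag     -> abort    with 1 - p_recover
    Solving x = (1 - p_snag) + p_snag * p_recover * x for x gives the
    closed form below, which is a function of the chain's parameters.
    """
    return (1.0 - p_snag) / (1.0 - p_snag * p_recover)


# Assumed observations: 2 snags in 10 dressing steps, uniform Beta(1, 1) prior.
p_snag = beta_posterior_mean(1.0, 1.0, successes=2, failures=8)

# Assumed recovery probability for the snag-mitigation action.
p_recover = 0.8

# Hypothetical PCTL-style requirement: P>=0.9 [ F done ].
requirement_met = reach_done(p_snag, p_recover) >= 0.9
```

Because the reachability probability is a closed-form function of the parameters, re-evaluating it after each Bayesian update is cheap, which is the kind of property that makes runtime re-verification practical.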

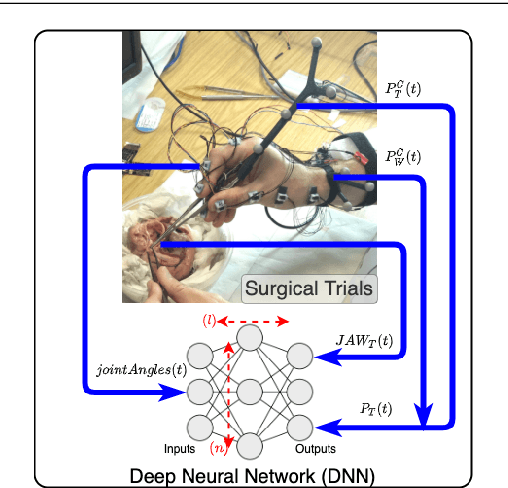

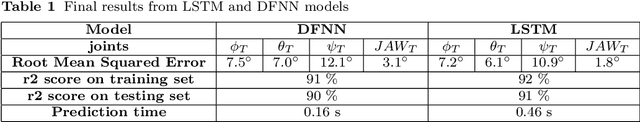

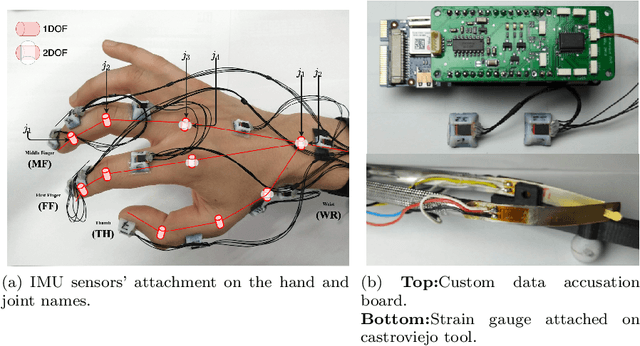

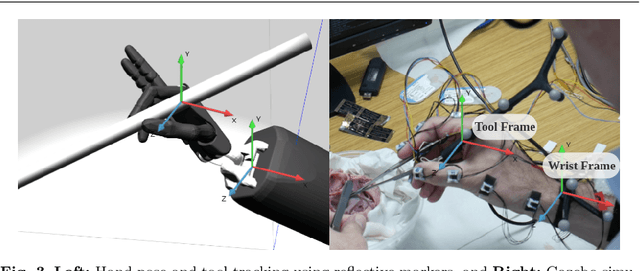

Mapping Surgeon's Hand/Finger Motion During Conventional Microsurgery to Enhance Intuitive Surgical Robot Teleoperation

Feb 21, 2021

Abstract: Purpose: Recent developments in robotics and artificial intelligence (AI) have led to significant advances in healthcare technologies, enhancing robot-assisted minimally invasive surgery (RAMIS) in some surgical specialties. However, current human-robot interfaces lack intuitive teleoperation and cannot mimic the surgeon's hand/finger sensing and fine motion. These limitations make tele-operated robotic surgery unsuitable for micro-surgery and difficult for established surgeons to learn. We report a pilot study showing an intuitive way of recording and mapping the surgeon's gross hand motion and fine synergic motion during cardiac micro-surgery as a way to enhance future intuitive teleoperation. Methods: We set out to develop a prototype system able to train a Deep Neural Network (DNN) by mapping wrist, hand and surgical tool real-time data acquisition (RTDA) inputs during mock-up heart micro-surgery procedures. The trained network was used to estimate the tool poses from refined hand joint angles. Results: Based on the surgeon's feedback during mock micro-surgery, the developed wearable system with light-weight sensors for motion tracking did not interfere with the surgery and instrument handling. The wearable motion tracking system used 15 finger-thumb-wrist joint angle sensors to generate meaningful data-sets representing inputs of the DNN, with new hand joint angles added as necessary based on comparing the estimated tool poses against the measured tool poses. The DNN architecture was optimized for the highest estimation accuracy and the ability to determine the tool pose with the least mean squared error. This novel approach showed that the surgical instrument's pose, an essential requirement for teleoperation, can be accurately estimated from the surgeon's recorded hand/finger movements with a mean squared error (MSE) of less than 0.3%.
