Stylized Text Generation Using Wasserstein Autoencoders with a Mixture of Gaussian Prior

Nov 10, 2019 · Amirpasha Ghabussi, Lili Mou, Olga Vechtomova

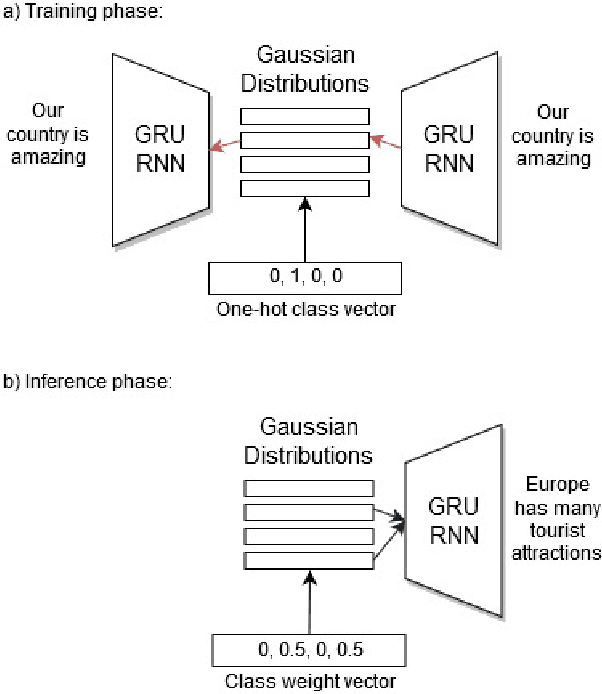

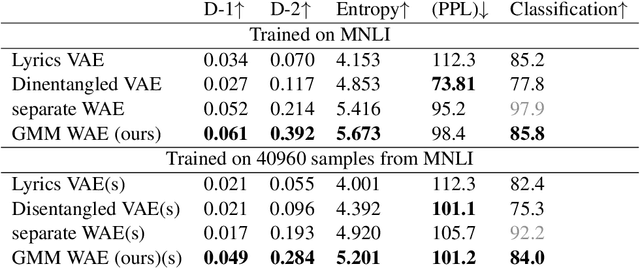

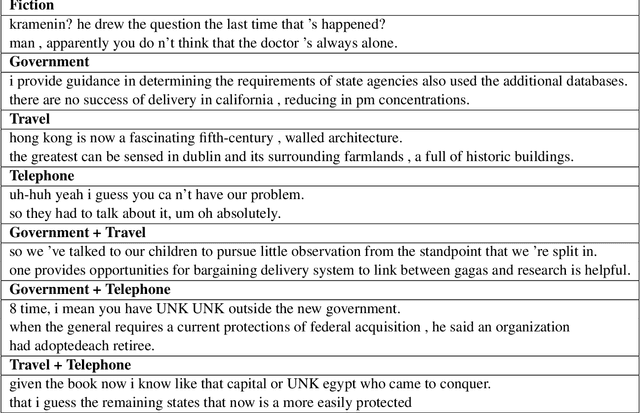

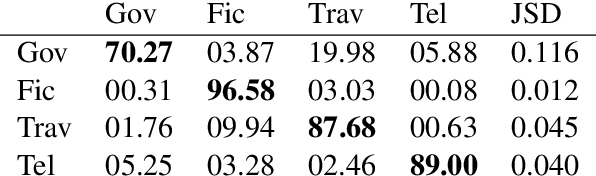

Wasserstein autoencoders are effective for text generation. They do not, however, provide any control over the style and topic of the generated sentences when the dataset has multiple classes and covers different topics. In this work, we present a semi-supervised approach for generating stylized sentences. Our model is trained on a multi-class dataset and learns the latent representation of the sentences using a mixture-of-Gaussians prior without any adversarial losses. This allows us to generate sentences in the style of a specified class or multiple classes by sampling from their corresponding prior distributions. Moreover, we can train our model on relatively small datasets and learn the latent representation of a specified class by adding external data with other styles/classes to our dataset. While a simple WAE or VAE cannot generate diverse sentences in this case, sentences generated with our approach are diverse, fluent, and preserve the style and the content of the desired classes.
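
As a rough illustration of class-conditional sampling from a mixture-of-Gaussians prior, the sketch below picks a mixture component by weight and then samples a latent vector around that component's mean. The function name `sample_latent`, the mean vectors, the shared isotropic standard deviation, and the use of mixing weights to blend classes are all assumptions made for this example; the abstract does not specify these details, and the decoder that would turn a latent vector into a sentence is not shown.

```python
import random

def sample_latent(class_weights, class_means, std=1.0, rng=None):
    """Draw one latent vector from a mixture-of-Gaussians prior.

    class_weights: mixing weights, one per class (non-negative, not all zero).
    class_means:   one mean vector per class (hypothetical values).
    std:           shared isotropic standard deviation (an assumption).
    Returns the chosen class index and the sampled latent vector.
    """
    rng = rng or random.Random()
    # Pick a mixture component in proportion to its weight.
    k = rng.choices(range(len(class_means)), weights=class_weights, k=1)[0]
    # Sample around that component's mean, independently per dimension.
    z = [rng.gauss(mu, std) for mu in class_means[k]]
    return k, z

# Two hypothetical classes in a 3-dimensional latent space.
means = [[-2.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
rng = random.Random(0)

# All weight on class 0: every sample comes from that class's component.
single_style = sample_latent([1.0, 0.0], means, rng=rng)

# Equal weights: samples are drawn from either class's component.
blended = [sample_latent([0.5, 0.5], means, rng=rng) for _ in range(4)]
```

Putting all the weight on one component corresponds to generating in a single specified style, while spreading the weight over several components is one plausible way to draw from multiple classes.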

Dynamic Fusion for Multimodal Data

Nov 10, 2019 · Gaurav Sahu, Olga Vechtomova

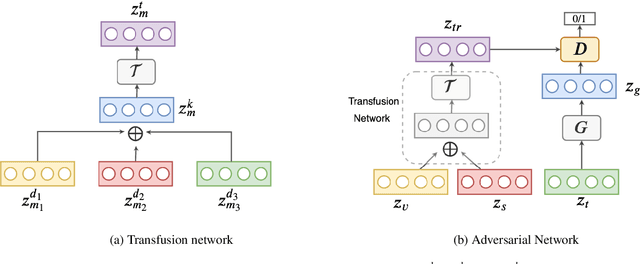

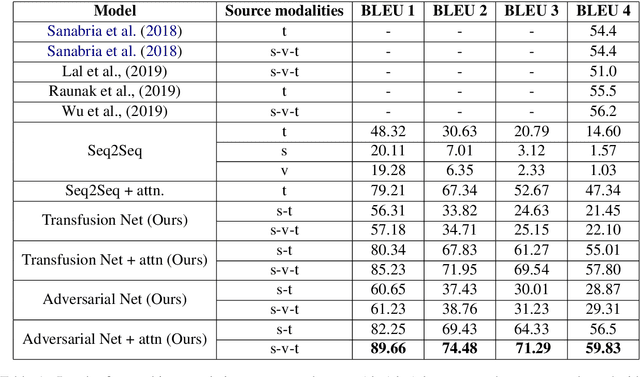

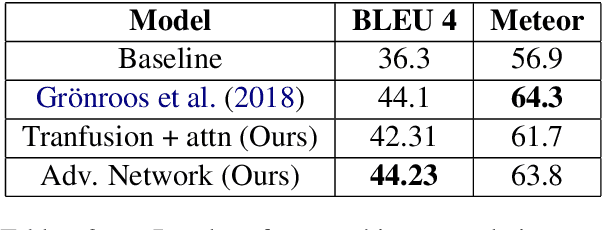

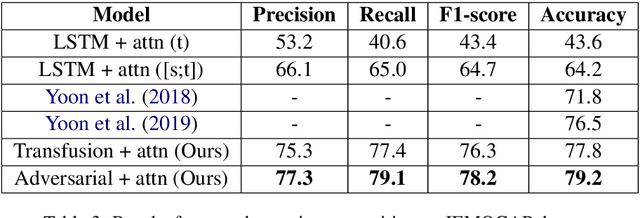

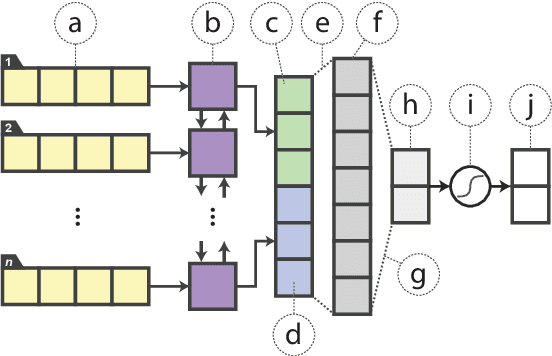

Effective fusion of data from multiple modalities, such as video, speech, and text, is challenging owing to the heterogeneous nature of multimodal data. In this paper, we propose dynamic fusion techniques that model context from different modalities efficiently. Instead of defining a deterministic fusion operation, such as concatenation, for the network, we let the network decide "how" to combine the given multimodal features most effectively. We propose two networks: 1) a transfusion network, which learns to compress information from different modalities while preserving the context, and 2) a GAN-based network, which regularizes the learned latent space given context from complementary modalities. A quantitative evaluation on the tasks of machine translation and emotion recognition suggests that such adaptive networks are able to model context better than all existing methods.

Conditional Response Generation Using Variational Alignment

Nov 10, 2019 · Kashif Khan, Gaurav Sahu, Vikash Balasubramanian, Lili Mou, Olga Vechtomova

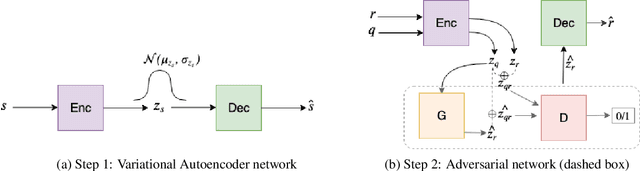

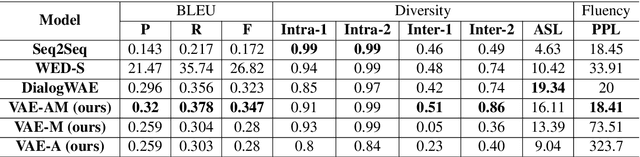

Generating relevant/conditioned responses in dialog is challenging, and requires not only proper modelling of context in the conversation, but also the ability to generate fluent sentences during inference. In this paper, we propose a two-step framework based on generative adversarial nets for generating conditioned responses. Our model first learns meaningful representations of sentences, and then uses a generator to *match* the query with the response distribution. Latent codes from the latter are then used to generate responses. Both quantitative and qualitative evaluations show that our model generates more fluent, relevant and diverse responses than the existing state-of-the-art methods.

Generating Sentences from Disentangled Syntactic and Semantic Spaces

Jul 06, 2019 · Yu Bao, Hao Zhou, Shujian Huang, Lei Li, Lili Mou, Olga Vechtomova, Xinyu Dai, Jiajun Chen

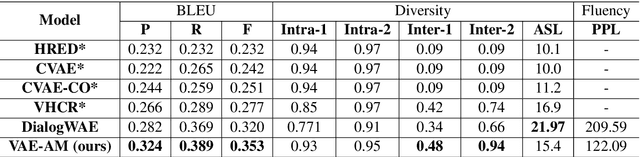

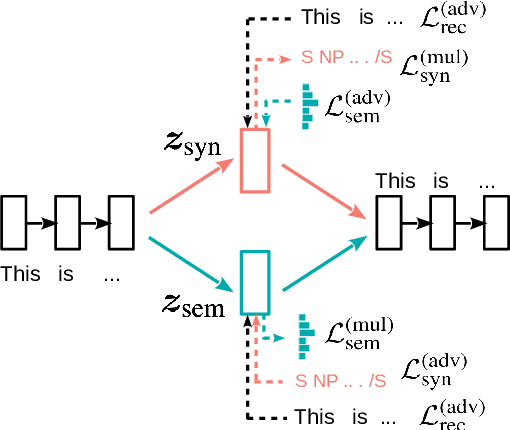

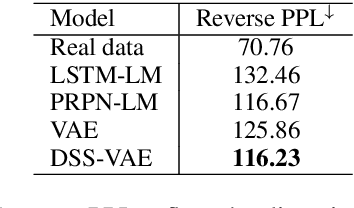

Variational auto-encoders (VAEs) are widely used in natural language generation due to the regularization of the latent space. However, generating sentences from the continuous latent space does not explicitly model the syntactic information. In this paper, we propose to generate sentences from disentangled syntactic and semantic spaces. Our proposed method explicitly models syntactic information in the VAE's latent space by using the linearized tree sequence, leading to better performance of language generation. Additionally, sampling in the disentangled syntactic and semantic latent spaces enables novel applications, such as unsupervised paraphrase generation and syntax-transfer generation. Experimental results show that our proposed model achieves similar or better performance in various tasks, compared with state-of-the-art related work.

Distilling Task-Specific Knowledge from BERT into Simple Neural Networks

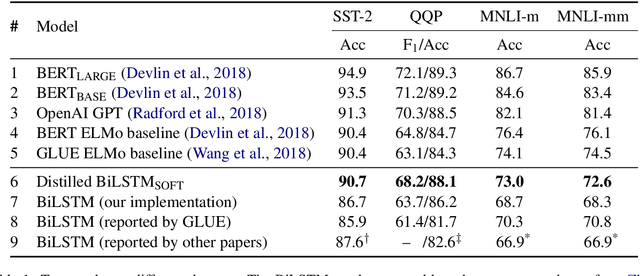

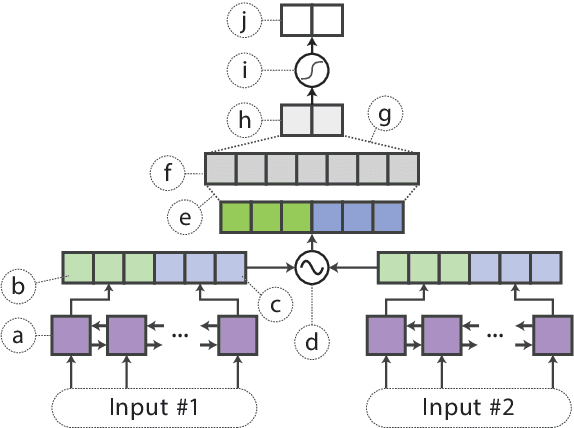

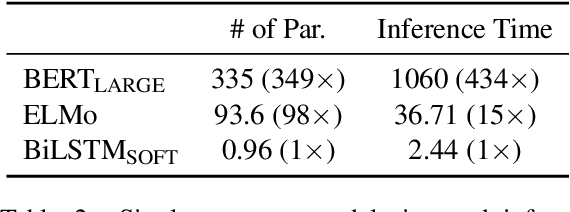

Mar 28, 2019 · Raphael Tang, Yao Lu, Linqing Liu, Lili Mou, Olga Vechtomova, Jimmy Lin

In the natural language processing literature, neural networks are becoming increasingly deep and complex. The recent poster child of this trend is the deep language representation model, which includes BERT, ELMo, and GPT. These developments have led to the conviction that previous-generation, shallower neural networks for language understanding are obsolete. In this paper, however, we demonstrate that rudimentary, lightweight neural networks can still be made competitive without architecture changes, external training data, or additional input features. We propose to distill knowledge from BERT, a state-of-the-art language representation model, into a single-layer BiLSTM, as well as its siamese counterpart for sentence-pair tasks. Across multiple datasets in paraphrasing, natural language inference, and sentiment classification, we achieve comparable results with ELMo, while using roughly 100 times fewer parameters and 15 times less inference time.
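
To make the idea of distilling a large teacher into a small student concrete, here is a minimal sketch of one common distillation objective: a weighted sum of the usual cross-entropy on the gold label and a mean-squared-error term that pulls the student's logits toward the teacher's. The abstract does not state which objective the authors use, so the function names, the `alpha` weighting, and the choice of MSE on logits are assumptions made for illustration.

```python
import math

def mse(student_logits, teacher_logits):
    """Mean squared error between two equal-length logit vectors."""
    assert len(student_logits) == len(teacher_logits)
    total = sum((s - t) ** 2 for s, t in zip(student_logits, teacher_logits))
    return total / len(student_logits)

def cross_entropy(logits, label):
    """Negative log-probability of `label` under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[label]

def distillation_loss(student_logits, teacher_logits, label, alpha=0.5):
    """Blend the supervised loss with a term matching the teacher's logits.

    alpha is a hypothetical mixing weight: 1.0 ignores the teacher,
    0.0 trains only on the teacher's outputs.
    """
    hard = cross_entropy(student_logits, label)
    soft = mse(student_logits, teacher_logits)
    return alpha * hard + (1.0 - alpha) * soft

# Hypothetical logits for a 2-class sentiment example with gold label 1.
loss = distillation_loss([0.2, 1.1], [-1.5, 2.3], label=1)
```

In a real setup the teacher logits would come from a fine-tuned BERT model and the student logits from the BiLSTM; here both are hard-coded placeholders.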

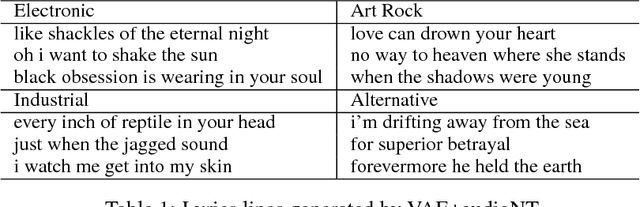

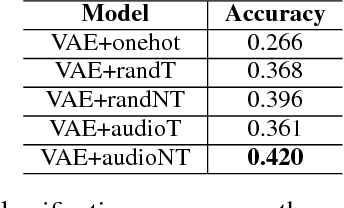

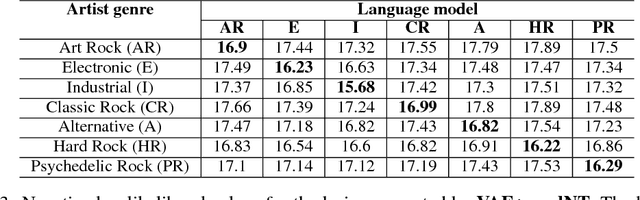

Generating lyrics with variational autoencoder and multi-modal artist embeddings

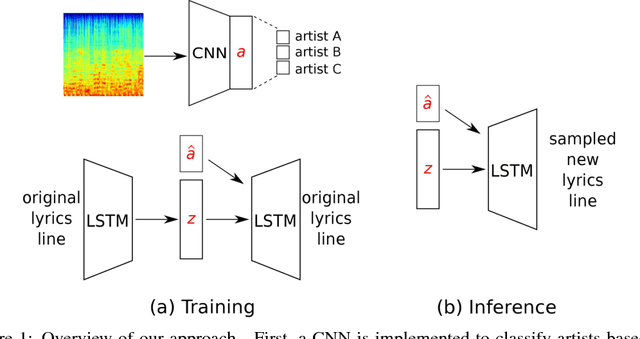

Dec 20, 2018 · Olga Vechtomova, Hareesh Bahuleyan, Amirpasha Ghabussi, Vineet John

We present a system for generating lines of song lyrics conditioned on the style of a specified artist. The system uses a variational autoencoder with artist embeddings. We propose the pre-training of artist embeddings with the representations learned by a CNN classifier, which is trained to predict artists based on mel spectrograms of their song clips. This work is the first step towards combining audio and text modalities of songs for generating lyrics conditioned on the artist's style. Our preliminary results suggest that there is a benefit in initializing artists' embeddings with the representations learned by a spectrogram classifier.

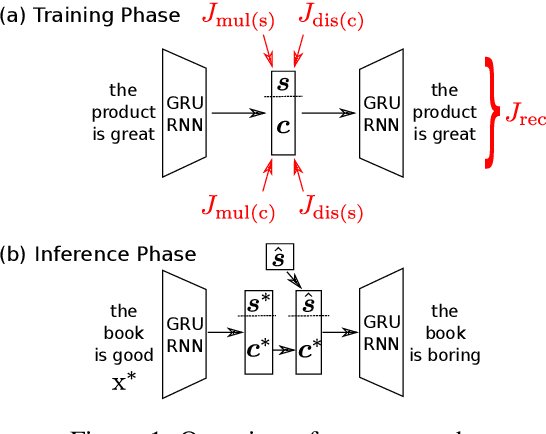

Disentangled Representation Learning for Non-Parallel Text Style Transfer

Sep 11, 2018 · Vineet John, Lili Mou, Hareesh Bahuleyan, Olga Vechtomova

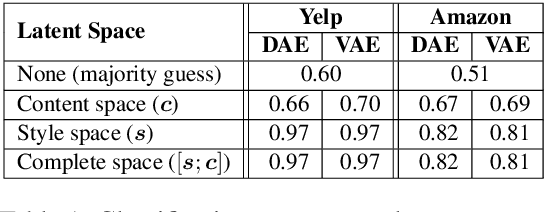

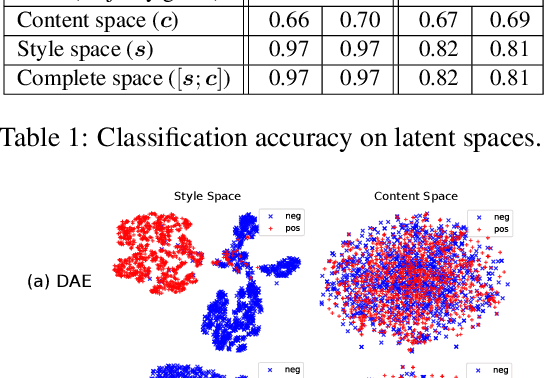

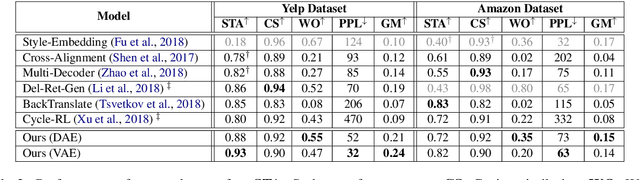

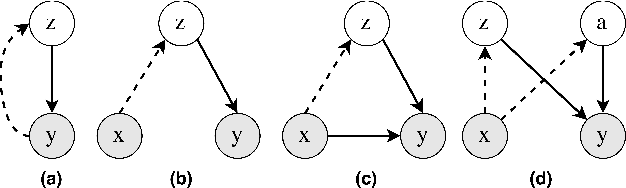

This paper tackles the problem of disentangling the latent variables of style and content in language models. We propose a simple yet effective approach, which incorporates auxiliary multi-task and adversarial objectives, for label prediction and bag-of-words prediction, respectively. We show, both qualitatively and quantitatively, that the style and content are indeed disentangled in the latent space. This disentangled latent representation learning method is applied to style transfer on non-parallel corpora. We achieve substantially better results in terms of transfer accuracy, content preservation and language fluency, in comparison to previous state-of-the-art approaches.

Probabilistic Natural Language Generation with Wasserstein Autoencoders

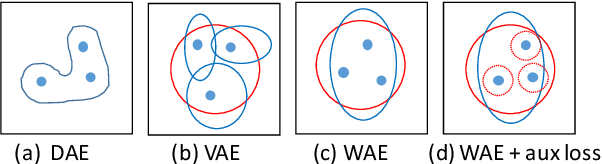

Jun 22, 2018 · Hareesh Bahuleyan, Lili Mou, Kartik Vamaraju, Hao Zhou, Olga Vechtomova

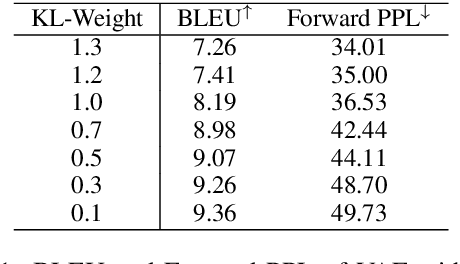

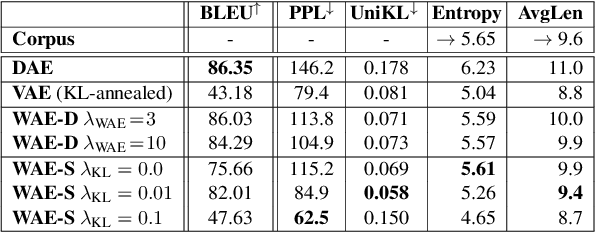

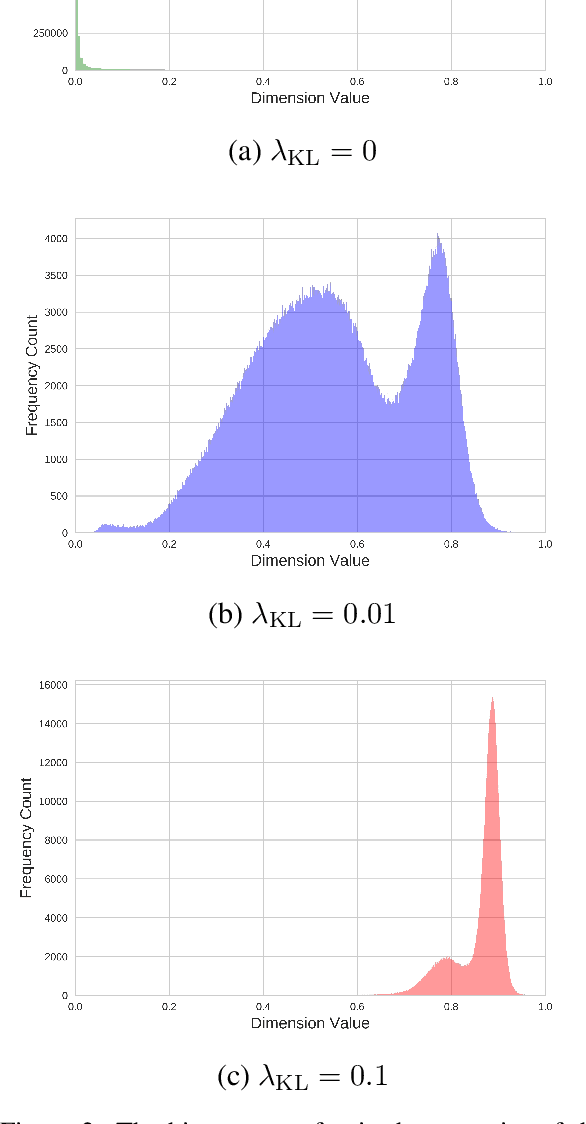

Probabilistic generation of natural language sentences is an important task in NLP. Existing models such as variational autoencoders (VAEs) for sequence generation are extremely difficult to train because the Kullback-Leibler (KL) loss tends to collapse to zero. One has to implement various heuristics, such as KL weight annealing and word dropout, in a carefully engineered manner to successfully train a text VAE. In this paper, we propose the use of Wasserstein autoencoders (WAEs) for probabilistic natural language sentence generation. We show that sequence-to-sequence WAEs are more robust to hyperparameters and can be trained in a straightforward manner without the need for any weight annealing. Empirical evidence shows that the latent space learned by WAEs exhibits properties of continuity and smoothness as in VAEs, while simultaneously achieving much higher BLEU scores for sentence reconstruction.
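
For readers unfamiliar with the two text-VAE heuristics named above, the sketch below shows what they typically look like. The linear warm-up schedule, the `<unk>` replacement token, and the function names are assumptions chosen for illustration, not details taken from the paper; these are the kinds of tricks the abstract says a WAE can be trained without.

```python
import random

def kl_weight(step, warmup_steps):
    """Linear KL weight: 0 at step 0, rising to 1 at warmup_steps, then flat."""
    if warmup_steps <= 0:
        return 1.0
    return min(1.0, step / warmup_steps)

def word_dropout(tokens, rate, unk="<unk>", rng=None):
    """Replace each decoder input token with `unk` with probability `rate`.

    Hiding some inputs makes the decoder rely more on the latent code.
    """
    rng = rng or random.Random()
    return [unk if rng.random() < rate else tok for tok in tokens]

# The annealed weight scales the KL term in the VAE loss:
#   loss = reconstruction_loss + kl_weight(step, warmup_steps) * kl_loss
halfway = kl_weight(500, 1000)
noisy = word_dropout(["the", "cat", "sat"], rate=0.3, rng=random.Random(0))
```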

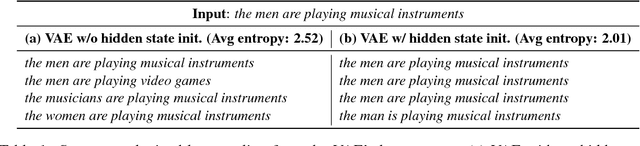

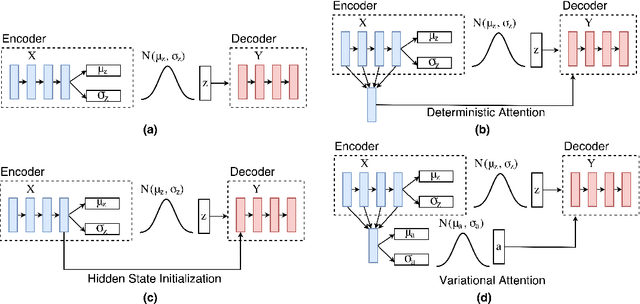

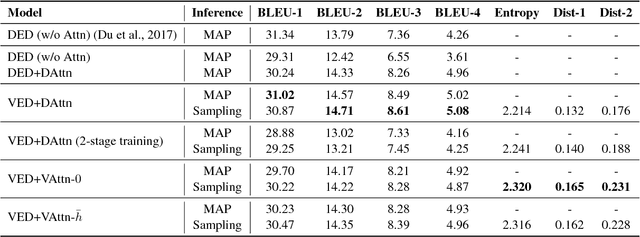

Variational Attention for Sequence-to-Sequence Models

Jun 21, 2018 · Hareesh Bahuleyan, Lili Mou, Olga Vechtomova, Pascal Poupart

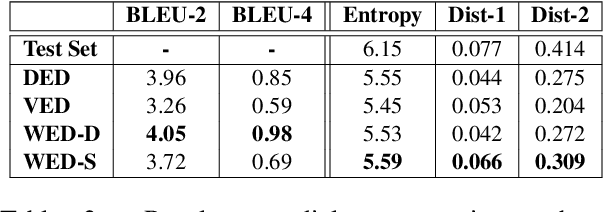

The variational encoder-decoder (VED) encodes source information as a set of random variables using a neural network, which in turn is decoded into target data using another neural network. In natural language processing, sequence-to-sequence (Seq2Seq) models typically serve as encoder-decoder networks. When combined with a traditional (deterministic) attention mechanism, the variational latent space may be bypassed by the attention model, and thus becomes ineffective. In this paper, we propose a variational attention mechanism for VED, where the attention vector is also modeled as Gaussian distributed random variables. Results on two experiments show that, without loss of quality, our proposed method alleviates the bypassing phenomenon as it increases the diversity of generated sentences.

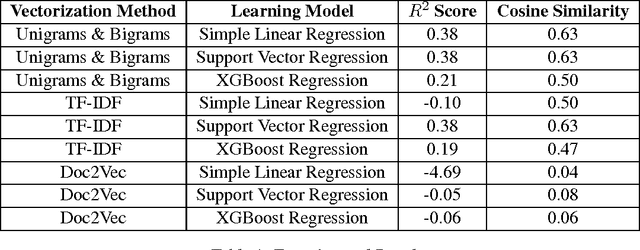

Sentiment Analysis on Financial News Headlines using Training Dataset Augmentation

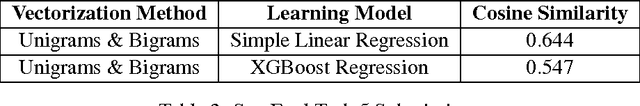

Jul 29, 2017 · Vineet John, Olga Vechtomova

This paper discusses the approach taken by the UWaterloo team to arrive at a solution for the Fine-Grained Sentiment Analysis problem posed by Task 5 of SemEval 2017. The paper describes the document vectorization and sentiment score prediction techniques used, as well as the design and implementation decisions taken while building the system for this task. The system uses text vectorization models, such as N-gram, TF-IDF and paragraph embeddings, coupled with regression model variants to predict the sentiment scores. Amongst the methods examined, unigrams and bigrams coupled with simple linear regression obtained the best baseline accuracy. The paper also explores data augmentation methods to supplement the training dataset. This system was designed for Subtask 2 (News Statements and Headlines).

* 5 pages
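
The unigram-plus-bigram baseline mentioned in the abstract can be pictured with the small sketch below, which counts n-grams in a headline and scores them with a linear model. The whitespace tokenizer, the function names, and the weights are all hypothetical: in the actual system the weights would be fitted by regression on the training data, and the preprocessing is not described in the abstract.

```python
from collections import Counter

def ngram_counts(text, n_values=(1, 2)):
    """Count unigrams and bigrams in a lowercased, whitespace-split headline."""
    tokens = text.lower().split()
    counts = Counter()
    for n in n_values:
        for i in range(len(tokens) - n + 1):
            counts[" ".join(tokens[i:i + n])] += 1
    return counts

def predict(features, weights, bias=0.0):
    """Linear model: bias plus the weighted sum of feature counts."""
    return bias + sum(weights.get(name, 0.0) * value
                      for name, value in features.items())

# Made-up weights for illustration only; a real model would learn them.
weights = {"rise": 0.4, "rise sharply": 0.3, "fall": -0.5}
features = ngram_counts("Shares rise sharply")
score = predict(features, weights)
```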
