Oishee Bintey Hoque

PRISM-CAFO: Prior-conditioned Remote-sensing Infrastructure Segmentation and Mapping for CAFOs

Jan 16, 2026

Abstract:Large-scale livestock operations pose significant risks to human health and the environment, while also being vulnerable to threats such as infectious diseases and extreme weather events. As the number of such operations continues to grow, accurate and scalable mapping has become increasingly important. In this work, we present an infrastructure-first, explainable pipeline for identifying and characterizing Concentrated Animal Feeding Operations (CAFOs) from aerial and satellite imagery. Our method (1) detects candidate infrastructure (e.g., barns, feedlots, manure lagoons, silos) with a domain-tuned YOLOv8 detector, then derives SAM2 masks from these boxes and filters them with component-specific criteria, (2) extracts structured descriptors (e.g., counts, areas, orientations, and spatial relations) and fuses them with deep visual features using a lightweight spatial cross-attention classifier, and (3) outputs both CAFO type predictions and mask-level attributions that link decisions to visible infrastructure. Through comprehensive evaluation, we show that our approach achieves state-of-the-art performance, with Swin-B+PRISM-CAFO surpassing the best performing baseline by up to 15%. Beyond strong predictive performance across diverse U.S. regions, we run systematic gradient-activation analyses that quantify the impact of domain priors and show ho

IGraSS: Learning to Identify Infrastructure Networks from Satellite Imagery by Iterative Graph-constrained Semantic Segmentation

Jun 11, 2025

Abstract:Accurate canal network mapping is essential for water management, including irrigation planning and infrastructure maintenance. State-of-the-art semantic segmentation models for mapping infrastructure such as roads rely on large, well-annotated remote sensing datasets. However, incomplete or inadequate ground truth can hinder these learning approaches. Many infrastructure networks have graph-level properties, such as reachability from a source (canals) or connectivity (roads), that can be leveraged to improve the existing ground truth. This paper develops a novel iterative framework, IGraSS, that combines a semantic segmentation module, which incorporates RGB and additional modalities (NDWI, DEM), with a graph-based ground-truth refinement module. The segmentation module processes satellite imagery patches, while the refinement module operates on the entire dataset, viewing the infrastructure network as a graph. Experiments show that IGraSS reduces unreachable canal segments from around 18% to 3%, and training with refined ground truth significantly improves canal identification. IGraSS serves as a robust framework for both refining noisy ground truth and mapping canal networks from remote sensing imagery. We also demonstrate the effectiveness and generalizability of IGraSS using road networks as an example, applying a different graph-theoretic constraint to complete road networks.
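The reachability constraint mentioned in this abstract can be illustrated with a small graph search. The sketch below is not the IGraSS refinement module; it only assumes that canal segments are given as undirected edges between junction nodes and measures what fraction of segments cannot be reached from any water source. The function name `unreachable_fraction` and the toy node labels are hypothetical.

```python
from collections import deque


def unreachable_fraction(edges, sources):
    """Fraction of segments (edges) not connected to any source node.

    edges: list of (node_a, node_b) pairs, treated as undirected segments.
    sources: iterable of node labels that act as water sources.
    """
    if not edges:
        return 0.0

    # Build an undirected adjacency map from the segment list.
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)

    # Breadth-first search starting from every source present in the graph.
    seen = {s for s in sources if s in adjacency}
    queue = deque(seen)
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)

    # In an undirected graph, a segment is reachable iff either endpoint is.
    unreachable = sum(1 for a, _ in edges if a not in seen)
    return unreachable / len(edges)


# Toy network: segment c-d is disconnected from the source "s".
toy_edges = [("s", "a"), ("a", "b"), ("c", "d")]
toy_fraction = unreachable_fraction(toy_edges, ["s"])
```

A statistic of this kind is what a figure such as "unreachable canal segments from around 18% to 3%" summarizes, although the paper's exact definition of a segment may differ.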

Knowledge-Informed Deep Learning for Irrigation Type Mapping from Remote Sensing

May 13, 2025

Abstract:Accurate mapping of irrigation methods is crucial for sustainable agricultural practices and food systems. However, existing models that rely solely on spectral features from satellite imagery are ineffective due to the complexity of agricultural landscapes and limited training data, making this a challenging problem. We present Knowledge-Informed Irrigation Mapping (KIIM), a novel Swin-Transformer based approach that uses (i) a specialized projection matrix to encode crop-to-irrigation probabilities, (ii) a spatial attention map to distinguish agricultural from non-agricultural land, (iii) bi-directional cross-attention to focus on complementary information from different modalities, and (iv) a weighted ensemble for combining predictions from images and crop information. Our experiments on five U.S. states show up to 22.9% (IoU) improvement over the baseline, with a 71.4% (IoU) improvement for hard-to-classify drip irrigation. In addition, we propose a two-phase transfer learning approach to enhance cross-state irrigation mapping, achieving a 51% IoU boost in a state with limited labeled data. The ability to achieve baseline performance with only 40% of the training data highlights the method's efficiency, reducing the dependency on extensive manual labeling efforts and making large-scale, automated irrigation mapping more feasible and cost-effective.
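Components (i) and (iv) can be pictured with a toy calculation for a single pixel. This is only a sketch under stated assumptions, not the KIIM implementation: the table `CROP_TO_IRRIGATION`, its values, the class order, and the fixed `weight` are all invented for illustration, whereas the abstract describes a specialized projection matrix and a weighted ensemble without giving their form.

```python
# Hypothetical irrigation classes and a made-up crop-to-irrigation table.
IRRIGATION_CLASSES = ["flood", "sprinkler", "drip"]
CROP_TO_IRRIGATION = {
    "alfalfa": [0.6, 0.35, 0.05],
    "orchard": [0.1, 0.1, 0.8],
}


def ensemble_probs(image_probs, crop_prior, weight):
    """Blend image-based class probabilities with a crop-based prior.

    weight is the share given to the image prediction (0 to 1); the
    remainder goes to the crop prior. The result is renormalized.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    if len(image_probs) != len(crop_prior):
        raise ValueError("probability vectors must have the same length")
    mixed = [weight * p + (1.0 - weight) * q
             for p, q in zip(image_probs, crop_prior)]
    total = sum(mixed)
    return [m / total for m in mixed]


# The image model is unsure, but the crop prior for "orchard" favors drip.
image_probs = [0.5, 0.3, 0.2]
blended = ensemble_probs(image_probs, CROP_TO_IRRIGATION["orchard"], weight=0.5)
predicted = IRRIGATION_CLASSES[blended.index(max(blended))]
```

In this toy case the crop information shifts the prediction from flood to drip, which is the kind of effect a knowledge-informed prior is meant to have on hard-to-classify methods.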

IrrMap: A Large-Scale Comprehensive Dataset for Irrigation Method Mapping

May 13, 2025

Abstract:We introduce IrrMap, the first large-scale dataset (1.1 million patches) for irrigation method mapping across regions. IrrMap consists of multi-resolution satellite imagery from Landsat and Sentinel, along with key auxiliary data such as crop type, land use, and vegetation indices. The dataset spans 1,687,899 farms and 14,117,330 acres across multiple western U.S. states from 2013 to 2023, providing a rich and diverse foundation for irrigation analysis and ensuring geospatial alignment and quality control. The dataset is ML-ready, with standardized 224x224 GeoTIFF patches, multiple input modalities, carefully chosen train-test splits, and accompanying dataloaders for seamless deep learning model training and benchmarking in irrigation mapping. The dataset is also accompanied by a complete pipeline for dataset generation, enabling researchers to extend IrrMap to new regions for irrigation data collection or adapt it with minimal effort for other similar applications in agricultural and geospatial analysis. We also analyze the irrigation method distribution across crop groups, spatial irrigation patterns (using Shannon diversity indices), and irrigated area variations for both Landsat and Sentinel, providing insights into regional and resolution-based differences. To promote further exploration, we openly release IrrMap, along with the derived datasets, benchmark models, and pipeline code, through a GitHub repository: https://github.com/Nibir088/IrrMap and Data repository: https://huggingface.co/Nibir/IrrMap, providing comprehensive documentation and implementation details.
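For readers unfamiliar with the Shannon diversity index mentioned above, it is defined as H = -Σ p_i ln(p_i), where p_i is the share of class i. A minimal sketch follows, assuming the index is computed over the irrigated area of each method within a region; the function name and the example areas are hypothetical, and the abstract does not state the exact unit of aggregation used for IrrMap.

```python
import math


def shannon_diversity(areas):
    """Shannon diversity H = -sum(p_i * ln(p_i)) over positive area shares.

    areas: non-negative areas (or counts), one per irrigation method.
    """
    total = sum(areas)
    if total <= 0:
        raise ValueError("total area must be positive")
    # Classes with zero area contribute nothing to the index.
    shares = [a / total for a in areas if a > 0]
    return -sum(p * math.log(p) for p in shares)


# One dominant method gives low diversity; an even mix gives the maximum.
dominated = shannon_diversity([980.0, 10.0, 10.0])
even_mix = shannon_diversity([100.0, 100.0, 100.0])
```

An even split across k methods yields the maximum value ln(k), so regions can be compared by how close they come to that bound.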

IrrNet: Advancing Irrigation Mapping with Incremental Patch Size Training on Remote Sensing Imagery

Apr 17, 2024

Abstract:Irrigation mapping plays a crucial role in effective water management, essential for preserving both water quality and quantity, and is key to mitigating the global issue of water scarcity. The complexity of agricultural fields with diverse irrigation practices, especially when multiple systems coexist in close quarters, poses a unique challenge. This complexity is further compounded by the nature of Landsat's remote sensing data, where each pixel is rich with densely packed information, complicating the task of accurate irrigation mapping. In this study, we introduce an approach that employs a progressive training method, which strategically increases patch sizes throughout the training process, using data from Landsat 5 and 7 labeled with the WRLU dataset. Starting with small patches allows the model to capture detailed features, progressively shifting to broader, more general features as the patch size enlarges. Our method enhances the performance of existing state-of-the-art models by approximately 20%. Furthermore, we analyze the effect of incorporating various spectral bands into the model and assess their impact on performance. The findings reveal that additional bands are instrumental in enabling the model to discern finer details more effectively. This work sets a new standard for leveraging remote sensing imagery in irrigation mapping.
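The progressive training idea can be sketched as a schedule that maps each epoch to a patch size, plus a crop that cuts patches of that size. This is an illustration under assumptions, not the IrrNet training code: the sizes `(32, 64, 128)`, the equal-length stages, and both function names are hypothetical, since the abstract does not specify the schedule.

```python
def patch_size_for_epoch(epoch, total_epochs, sizes=(32, 64, 128)):
    """Return the patch size for a zero-based epoch.

    Training is split into equal-length stages, one per size, ordered
    from the smallest patch to the largest.
    """
    stage_length = -(-total_epochs // len(sizes))  # ceiling division
    stage = min(epoch // stage_length, len(sizes) - 1)
    return sizes[stage]


def center_crop(grid, size):
    """Cut a size x size patch from the center of a 2D list of pixels."""
    rows, cols = len(grid), len(grid[0])
    if size > rows or size > cols:
        raise ValueError("patch size exceeds image size")
    top = (rows - size) // 2
    left = (cols - size) // 2
    return [row[left:left + size] for row in grid[top:top + size]]


# Nine epochs: three on each patch size, smallest first.
schedule = [patch_size_for_epoch(e, total_epochs=9) for e in range(9)]

# A 4 x 4 toy image cropped to its central 2 x 2 patch.
toy_image = [[r * 4 + c for c in range(4)] for r in range(4)]
toy_patch = center_crop(toy_image, 2)
```

A fully convolutional segmentation model can accept each of these sizes without architectural changes, which is what makes a schedule like this practical.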

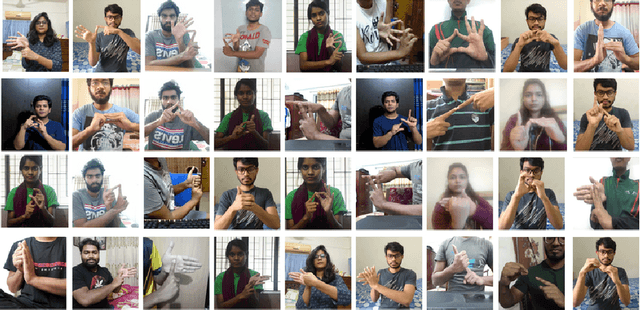

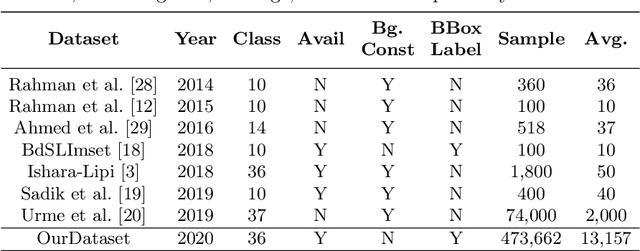

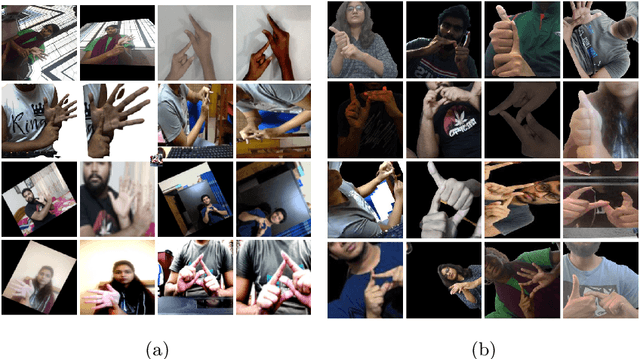

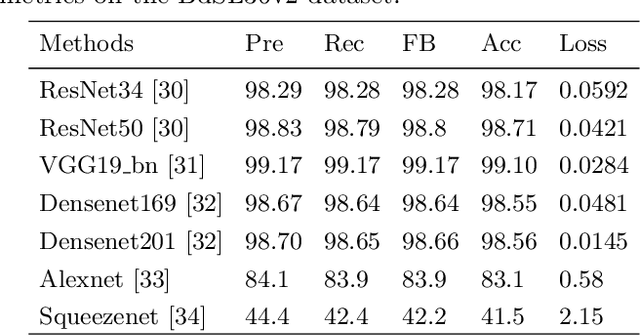

BdSL36: A Dataset for Bangladeshi Sign Letters Recognition

Oct 02, 2021

Abstract:Bangladeshi Sign Language (BdSL) is a commonly used medium of communication for hearing-impaired people in Bangladesh. A real-time BdSL interpreter that works outside a controlled lab environment would have a broad social impact and is an interesting avenue of research as well. It is also a challenging task due to the variation across subjects (age, gender, color, etc.), complex features, similarities between signs, and cluttered backgrounds. However, the existing datasets for the BdSL classification task are mainly built in a lab-friendly setup, which limits the application of powerful deep learning technology. In this paper, we introduce a dataset named BdSL36, which incorporates background augmentation to make the dataset versatile and contains over four million images belonging to 36 categories. In addition, we annotate about 40,000 images with bounding boxes to make use of object detection algorithms. Furthermore, several intensive experiments are performed to establish the baseline performance of BdSL36. Moreover, we conduct beta testing of our classifiers at the user level to assess the feasibility of real-world application with this dataset. We believe BdSL36 will expedite future research on practical sign letter classification. We make the datasets and all the pre-trained models available for further research.

Real Time Bangladeshi Sign Language Detection using Faster R-CNN

Nov 30, 2018

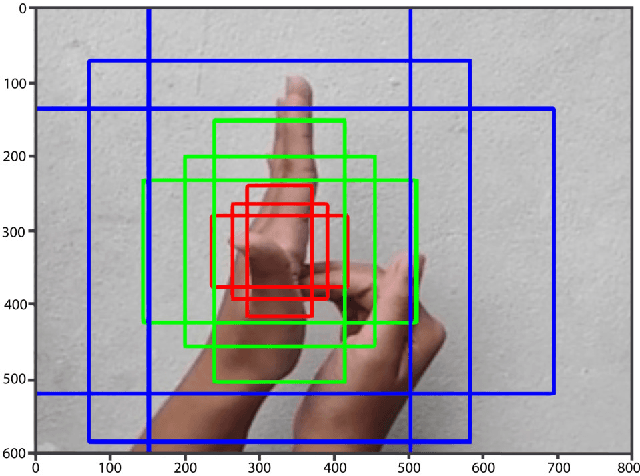

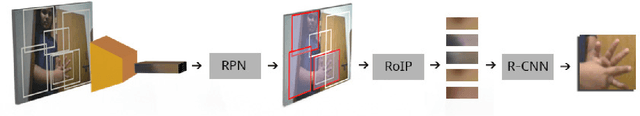

Abstract:Bangladeshi Sign Language (BdSL) is a commonly used medium of communication for hearing-impaired people in Bangladesh. Developing a real-time system to detect these signs from images is a great challenge. In this paper, we present a technique that detects BdSL from images in real time. Our method uses a Convolutional Neural Network-based object detection technique to detect the presence of signs in an image region and to recognize their class. For this purpose, we adopted the Faster Region-based Convolutional Network (Faster R-CNN) approach and developed a dataset, BdSLImset, to train our system. Previous research on detecting BdSL generally depends on external devices, while most other vision-based techniques do not perform efficiently in real time. Our approach, however, is free from such limitations, and the experimental results demonstrate that the proposed method successfully identifies and recognizes Bangladeshi signs in real time.
