Nurul Huda Mahmood

Integrating Meteorological and Operational Data: A Novel Approach to Understanding Railway Delays in Finland

Jan 23, 2026

Abstract:Train delays result from complex interactions between operational, technical, and environmental factors. While weather impacts railway reliability, particularly in Nordic regions, existing datasets rarely integrate meteorological information with operational train data. This study presents the first publicly available dataset combining Finnish railway operations with synchronized meteorological observations from 2018-2024. The dataset integrates operational metrics from Finland Digitraffic Railway Traffic Service with weather measurements from 209 environmental monitoring stations, using spatial-temporal alignment via Haversine distance. It encompasses 28 engineered features across operational variables and meteorological measurements, covering approximately 38.5 million observations from Finland's 5,915-kilometer rail network. Preprocessing includes strategic missing data handling through spatial fallback algorithms, cyclical encoding of temporal features, and robust scaling of weather data to address sensor outliers. Analysis reveals distinct seasonal patterns, with winter months exhibiting delay rates exceeding 25% and geographic clustering of high-delay corridors in central and northern Finland. Furthermore, the work demonstrates applications of the dataset in analysing the reliability of railway traffic in Finland. A baseline experiment using XGBoost regression achieved a Mean Absolute Error of 2.73 minutes for predicting station-specific delays, demonstrating the dataset's utility for machine learning applications. The dataset enables diverse applications, including train delay prediction, weather impact assessment, and infrastructure vulnerability mapping, providing researchers with a flexible resource for machine learning applications in railway operations research.
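As a rough illustration of two preprocessing steps named in the abstract above (Haversine-based station matching and cyclical encoding of temporal features), the following is a minimal sketch, not the authors' pipeline. The station list, coordinates, and all function names are hypothetical and chosen only for the example.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, a common approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_station(lat, lon, stations):
    """Return the (name, lat, lon) tuple that is closest to the query point."""
    return min(stations, key=lambda s: haversine_km(lat, lon, s[1], s[2]))


def encode_cyclical(value, period):
    """Map a periodic value (e.g. hour of day with period=24) to a (sin, cos) pair."""
    angle = 2 * math.pi * value / period
    return math.sin(angle), math.cos(angle)


# Hypothetical weather stations with approximate coordinates (illustration only).
WEATHER_STATIONS = [
    ("Helsinki", 60.17, 24.94),
    ("Tampere", 61.50, 23.76),
    ("Oulu", 65.01, 25.47),
]
```

The (sin, cos) pair places hour 23 next to hour 0, which a raw integer encoding would not do. A spatial fallback of the kind the abstract mentions could, under the same assumptions, retry `nearest_station` on the list with the closest station removed whenever that station has no reading.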

Improved GPR-Based CSI Acquisition via Spatial-Correlation Kernel

Jan 21, 2026

Abstract:Accurate channel estimation with low pilot overhead and computational complexity is key to efficiently utilizing multi-antenna wireless systems. Motivated by the evolution from purely statistical descriptions toward physics- and geometry-aware propagation models, this work focuses on incorporating channel information into a Gaussian process regression (GPR) framework for improving the channel estimation accuracy. In this work, we propose a GPR-based channel estimation framework along with a novel Spatial-correlation (SC) kernel that explicitly captures the channel's second-order statistics. We derive a closed-form expression of the proposed SC-based GPR estimator and prove that its posterior mean is optimal in terms of minimum mean-square error (MMSE) under the same second-order statistics, without requiring the underlying channel distribution to be Gaussian. Our analysis reveals that, with up to 50% pilot overhead reduction, the proposed method achieves the lowest normalized mean-square error, the highest empirical 95% credible-interval coverage, and superior preservation of spectral efficiency compared to benchmark estimators, while maintaining lower computational complexity than the conventional MMSE estimator.
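For orientation, the textbook GPR posterior mean for a zero-mean prior has the form below. The notation is an assumption made here, not taken from the paper: $\mathbf{h}$ is the full channel vector, $\mathbf{h}_p$ its entries at the pilot positions, and $\mathbf{y} = \mathbf{h}_p + \mathbf{n}$ the noisy pilot observation with white noise of variance $\sigma^2$ uncorrelated with the channel.

$$
\hat{\mathbf{h}} = \mathbf{K}_{hp}\left(\mathbf{K}_{pp} + \sigma^2 \mathbf{I}\right)^{-1}\mathbf{y},
\qquad
\mathbf{K}_{hp} = \mathbb{E}\!\left[\mathbf{h}\,\mathbf{h}_p^{\mathsf{H}}\right],\quad
\mathbf{K}_{pp} = \mathbb{E}\!\left[\mathbf{h}_p\,\mathbf{h}_p^{\mathsf{H}}\right].
$$

When the kernel matrices equal the channel's true second-order statistics, this expression coincides with the linear MMSE estimator, which depends only on those statistics and not on the channel being Gaussian. The paper's own closed-form SC-kernel estimator may differ in detail.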

A Novel Geometry-Aware GPR-Based Energy-Efficient and Low-Overhead Channel Estimation Scheme

Dec 27, 2025

Abstract:In this work, we model the wireless channel as a complex-valued Gaussian process (GP) over the transmit and receive antenna arrays. The channel covariance is characterized using an antenna-geometry-based spectral mixture covariance function (GB-SMCF), which captures the spatial structure of the antenna arrays. To address the problem of accurate channel state information (CSI) estimation from very few noisy observations, we develop a Gaussian process regression (GPR)-based channel estimation framework that employs the GB-SMCF as a prior covariance model with online hyperparameter optimization. In the proposed scheme, the full channel is learned by transmitting pilots from only a small subset of transmit antennas while receiving them at all receive antennas, resulting in noisy partial CSI at the receiver. These limited observations are then processed by the GPR framework, which updates the GB-SMCF hyperparameters online from incoming measurements and reconstructs the full CSI in real time. Simulation results demonstrate that the proposed GB-SMCF-based estimator outperforms baseline methods while reducing pilot overhead and training energy by up to 50% compared to conventional schemes.

Energy-Efficient and Reliable Data Collection in Receiver-Initiated Wake-up Radio Enabled IoT Networks

May 15, 2025

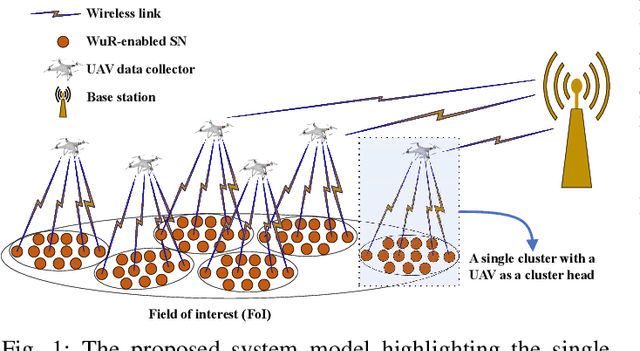

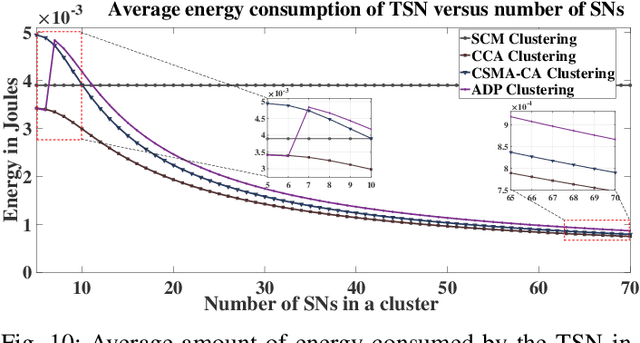

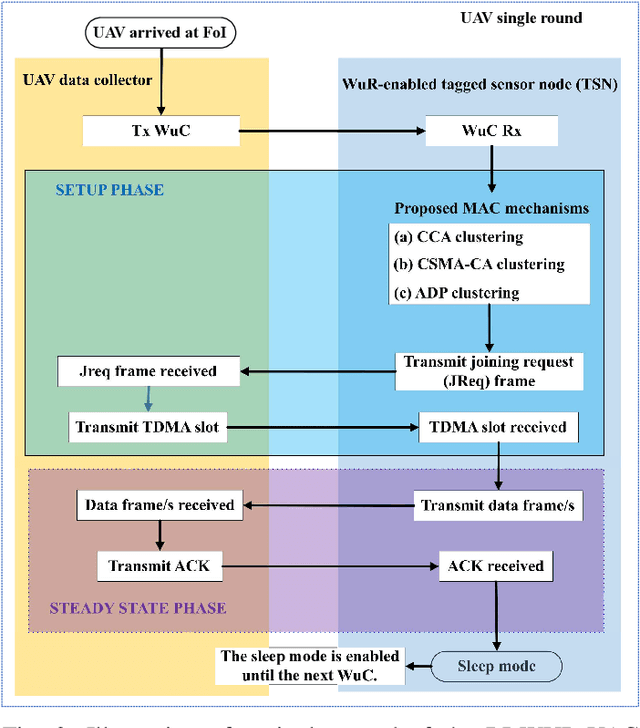

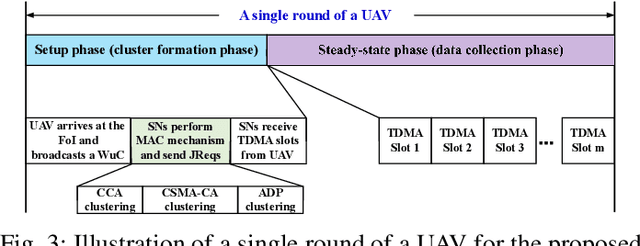

Abstract:In unmanned aerial vehicle (UAV)-assisted wake-up radio (WuR)-enabled internet of things (IoT) networks, UAVs can instantly activate the main radios (MRs) of the sensor nodes (SNs) with a wake-up call (WuC) for efficient data collection in mission-driven data collection scenarios. However, the spontaneous response of numerous SNs to the UAV's WuC can lead to significant packet loss and collisions, as WuR does not exhibit its superiority for high-traffic loads. To address this challenge, we propose an innovative receiver-initiated WuR UAV-assisted clustering (RI-WuR-UAC) medium access control (MAC) protocol to achieve low latency and high reliability in ultra-low power consumption applications. We model the proposed protocol using the $M/G/1/2$ queuing framework and derive expressions for key performance metrics, i.e., channel busyness probability, probability of successful clustering, average SN energy consumption, and average transmission delay. The RI-WuR-UAC protocol employs three distinct data flow models, tailored to different network traffic conditions, which perform three MAC mechanisms: clear channel assessment (CCA) clustering for light traffic loads, backoff plus CCA clustering for dense and heavy traffic, and adaptive clustering for variable traffic loads. Simulation results demonstrate that the RI-WuR-UAC protocol significantly outperforms the benchmark sub-carrier modulation clustering protocol. By varying the network load, we capture the trade-offs among the performance metrics, showcasing the superior efficiency and reliability of the RI-WuR-UAC protocol.

Max-Min Fairness for Stacked Intelligent Metasurface-Assisted Multi-User MISO Systems

Apr 20, 2025

Abstract:Stacked intelligent metasurface (SIM) is an emerging technology that uses multiple reconfigurable surface layers to enable flexible wave-based beamforming. In this paper, we focus on an SIM-assisted multi-user multiple-input single-output system, where it is essential to ensure that all users receive a fair and reliable service level. To this end, we develop two max-min fairness algorithms based on instantaneous channel state information (CSI) and statistical CSI. For the instantaneous CSI case, we propose an alternating optimization algorithm that jointly optimizes power allocation using geometric programming and wave-based beamforming coefficients using the gradient descent-ascent method. For the statistical CSI case, since deriving an exact expression for the average minimum achievable rate is analytically intractable, we derive a tight upper bound and thereby formulate a stochastic optimization problem. This problem is then solved, capitalizing on an alternating approach combining geometric programming and gradient descent algorithms, to obtain the optimal policies. Our numerical results show significant improvements in the minimum achievable rate compared to the benchmark schemes. In particular, for the instantaneous CSI scenario, the individual impact of the optimal wave-based beamforming is significantly higher than that of the power allocation strategy. Moreover, the proposed upper bound is shown to be tight in the low signal-to-noise ratio regime under the statistical CSI.

Uplink Rate Splitting Multiple Access with Imperfect Channel State Information and Interference Cancellation

Jan 31, 2025

Abstract:This article investigates the performance of uplink rate splitting multiple access (RSMA) in a two-user scenario, addressing an under-explored domain compared to its downlink counterpart. With the increasing demand for uplink communication in applications like the Internet-of-Things, it is essential to account for practical imperfections, such as inaccuracies in channel state information at the receiver (CSIR) and limitations in successive interference cancellation (SIC), to provide realistic assessments of system performance. Specifically, we derive closed-form expressions for the outage probability, throughput, and asymptotic outage behavior of uplink users, considering imperfect CSIR and SIC. We validate the accuracy of these derived expressions using Monte Carlo simulations. Our findings reveal that at low transmit power levels, imperfect CSIR degrades system performance more severely than SIC imperfections. However, as the transmit power increases, the impact of imperfect CSIR diminishes, while the influence of SIC imperfections becomes more pronounced. Moreover, we highlight the impact of the rate allocation factor on user performance. Finally, our comparison with non-orthogonal multiple access (NOMA) highlights the outage performance trade-offs between RSMA and NOMA. RSMA proves to be more effective in managing imperfect CSIR and enhances performance through strategic message splitting, resulting in more robust communication.

Efficient Channel Prediction for Beyond Diagonal RIS-Assisted MIMO Systems with Channel Aging

Nov 21, 2024

Abstract:Novel reconfigurable intelligent surface (RIS) architectures, known as beyond diagonal RISs (BD-RISs), have been proposed to enhance reflection efficiency and expand RIS capabilities. However, their passive nature, non-diagonal reflection matrix, and the large number of coupled reflecting elements complicate the channel state information (CSI) estimation process. The challenge further escalates in scenarios with fast-varying channels. In this paper, we address this challenge by proposing novel joint channel estimation and prediction strategies with low overhead and high accuracy for two different RIS architectures in a BD-RIS-assisted multiple-input multiple-output system under correlated fast-fading environments with channel aging. The channel estimation procedure utilizes the Tucker2 decomposition with bilinear alternating least squares, which is exploited to decompose the cascade channels of the BD-RIS-assisted system into effective channels of reduced dimension. The channel prediction framework is based on a convolutional neural network combined with an autoregressive predictor. The estimated/predicted CSI is then utilized to optimize the RIS phase shifts aiming at the maximization of the downlink sum rate. Insightful simulation results demonstrate that our proposed approach is robust to channel aging, and exhibits a high estimation accuracy. Moreover, our scheme can deliver a high average downlink sum rate, outperforming other state-of-the-art channel estimation methods. The results also reveal a remarkable reduction in pilot overhead of up to 98% compared to baseline schemes, all while imposing low computational complexity.

Probabilistic Allocation of Payload Code Rate and Header Copies in LR-FHSS Networks

Oct 07, 2024

Abstract:We evaluate the performance of the LoRaWAN Long-Range Frequency Hopping Spread Spectrum (LR-FHSS) technique using a device-level probabilistic strategy for code rate and header replica allocation. Specifically, we investigate the effects of different header replica and code rate allocations at each end-device, guided by a probability distribution provided by the network server. As a benchmark, we compare the proposed strategy with the standardized LR-FHSS data rates DR8 and DR9. Our numerical results demonstrate that the proposed strategy consistently outperforms the DR8 and DR9 standard data rates across all considered scenarios. Notably, our findings reveal that the optimal distribution rarely includes data rate DR9, while data rate DR8 significantly contributes to the goodput and energy efficiency optimizations.

Assessment of the Sparsity-Diversity Trade-offs in Active Users Detection for mMTC

Feb 08, 2024

Abstract:Wireless communication systems must increasingly support a multitude of machine-type communications (MTC) devices, thus calling for advanced strategies for active user detection (AUD). Recent literature has delved into AUD techniques based on compressed sensing, highlighting the critical role of signal sparsity. This study investigates the relationship between frequency diversity and signal sparsity in the AUD problem. Single-antenna users transmit multiple copies of non-orthogonal pilots across multiple frequency channels and the base station independently performs AUD in each channel using the orthogonal matching pursuit algorithm. We note that, although frequency diversity may improve the likelihood of successful reception of the signals, it may also damage the channel sparsity level, leading to important trade-offs. We show that a sparser signal significantly benefits AUD, surpassing the advantages brought by frequency diversity in scenarios with limited temporal resources and/or high numbers of receive antennas. Conversely, with longer pilots and fewer receive antennas, investing in frequency diversity becomes more impactful, resulting in a tenfold AUD performance improvement.
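The orthogonal matching pursuit step mentioned in the abstract above can be sketched as follows. This is a generic, noiseless, single-channel illustration assuming NumPy is available, not the paper's simulation setup: the pilot dictionary (identity columns next to normalized Hadamard columns), the user indices, and the amplitudes are all hypothetical.

```python
import numpy as np


def omp(A, y, sparsity):
    """Greedy orthogonal matching pursuit: find a `sparsity`-sparse x with y ≈ A @ x."""
    residual = y.copy()
    support = []
    coeffs = np.zeros(0, dtype=y.dtype)
    for _ in range(sparsity):
        # Select the pilot (column) most correlated with the current residual.
        correlations = np.abs(A.conj().T @ residual)
        if support:
            correlations[support] = 0.0  # never reselect a chosen column
        support.append(int(np.argmax(correlations)))
        # Least-squares fit on the selected columns, then update the residual.
        coeffs, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coeffs
    x_hat = np.zeros(A.shape[1], dtype=coeffs.dtype)
    x_hat[support] = coeffs
    return x_hat, sorted(support)


def sylvester_hadamard(order):
    """Build a 2**order x 2**order Hadamard matrix via the Sylvester construction."""
    H = np.array([[1.0]])
    for _ in range(order):
        H = np.kron(H, np.array([[1.0, 1.0], [1.0, -1.0]]))
    return H


# Hypothetical non-orthogonal pilot dictionary: 32 users, pilot length 16.
PILOTS = np.hstack([np.eye(16), sylvester_hadamard(4) / 4.0])

# Two active users (indices and amplitudes chosen arbitrarily for the example).
x_true = np.zeros(32)
x_true[5] = 1.0
x_true[20] = -2.0
y = PILOTS @ x_true  # noiseless received pilot signal

x_hat, active_users = omp(PILOTS, y, sparsity=2)
```

Any two distinct columns of this dictionary have inner product of magnitude at most 0.25, which is small enough for exact recovery of two active users in the noiseless case. With noise, fading, and more active users, recovery is no longer guaranteed, which is the regime where the sparsity-diversity trade-off discussed in the abstract matters.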

Malicious RIS versus Massive MIMO: Securing Multiple Access against RIS-based Jamming Attacks

Jan 13, 2024

Abstract:In this letter, we study an attack that leverages a reconfigurable intelligent surface (RIS) to induce harmful interference toward multiple users in massive multiple-input multiple-output (mMIMO) systems during the data transmission phase. We propose an efficient and flexible weighted-sum projected gradient-based algorithm for the attacker to optimize the RIS reflection coefficients without knowing legitimate user channels. To counter such a threat, we propose two reception strategies. Simulation results demonstrate that our malicious algorithm outperforms baseline strategies while offering adaptability for targeting specific users. At the same time, our results show that our mitigation strategies are effective even if only an imperfect estimate of the cascade RIS channel is available.
