Chasing Your Long Tails: Differentially Private Prediction in Health Care Settings

Oct 13, 2020 · Vinith M. Suriyakumar, Nicolas Papernot, Anna Goldenberg, Marzyeh Ghassemi

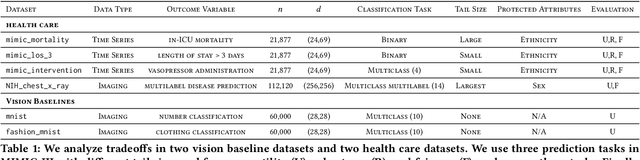

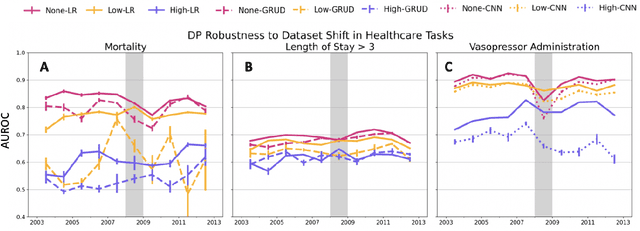

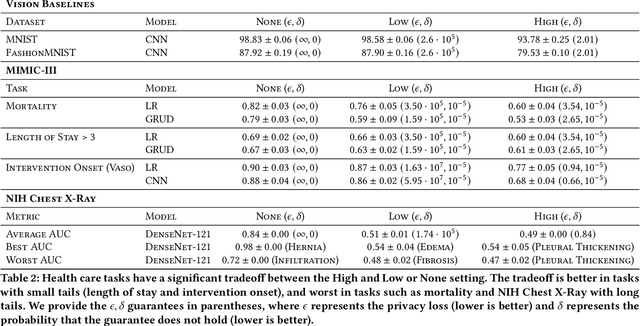

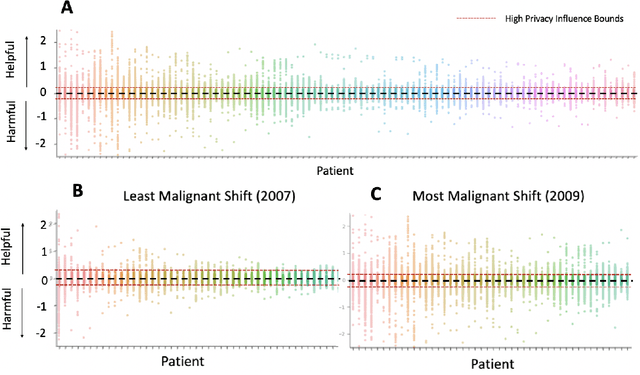

Machine learning models in health care are often deployed in settings where it is important to protect patient privacy. In such settings, methods for differentially private (DP) learning provide a general-purpose approach to learn models with privacy guarantees. Modern methods for DP learning ensure privacy through mechanisms that censor information judged as too unique. The resulting privacy-preserving models, therefore, neglect information from the tails of a data distribution, resulting in a loss of accuracy that can disproportionately affect small groups. In this paper, we study the effects of DP learning in health care. We use state-of-the-art methods for DP learning to train privacy-preserving models in clinical prediction tasks, including x-ray classification of images and mortality prediction in time series data. We use these models to perform a comprehensive empirical investigation of the tradeoffs between privacy, utility, robustness to dataset shift, and fairness. Our results highlight lesser-known limitations of methods for DP learning in health care, models that exhibit steep tradeoffs between privacy and utility, and models whose predictions are disproportionately influenced by large demographic groups in the training data. We discuss the costs and benefits of differentially private learning in health care.

Not My Deepfake: Towards Plausible Deniability for Machine-Generated Media

Aug 20, 2020 · Baiwu Zhang, Jin Peng Zhou, Ilia Shumailov, Nicolas Papernot

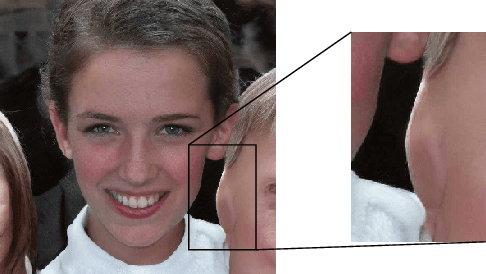

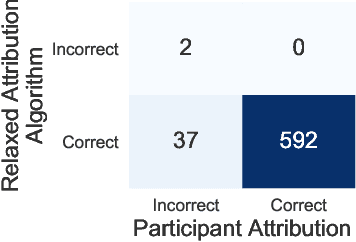

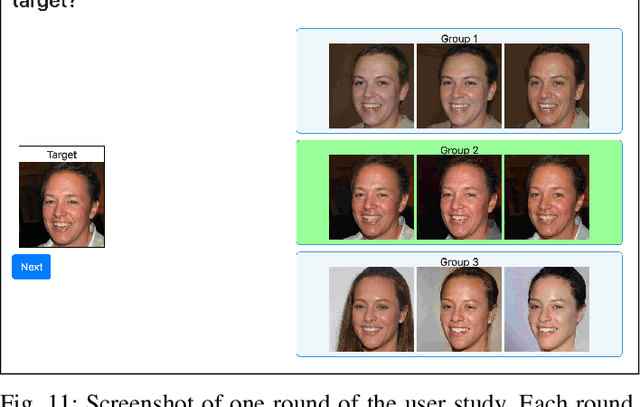

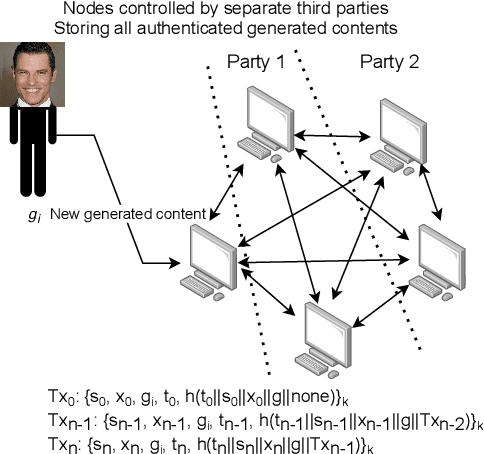

Progress in generative modelling, especially generative adversarial networks, has made it possible to efficiently synthesize and alter media at scale. Malicious individuals now rely on these machine-generated media, or deepfakes, to manipulate social discourse. In order to ensure media authenticity, existing research is focused on deepfake detection. Yet, the very nature of frameworks used for generative modeling suggests that progress towards detecting deepfakes will enable more realistic deepfake generation. Therefore, it comes as no surprise that developers of generative models are under the scrutiny of stakeholders dealing with misinformation campaigns. As such, there is a clear need to develop tools that ensure the transparent use of generative modeling, while minimizing the harm caused by malicious applications. We propose a framework to provide developers of generative models with plausible deniability. We introduce two techniques to provide evidence that a model developer did not produce media that they are being accused of. The first optimizes over the source of entropy of each generative model to probabilistically attribute a deepfake to one of the models. The second involves cryptography to maintain a tamper-proof and publicly broadcast record of all legitimate uses of the model. We evaluate our approaches on the seminal example of face synthesis, demonstrating that our first approach achieves 97.62% attribution accuracy, and is less sensitive to perturbations and adversarial examples. In cases where a machine learning approach is unable to provide plausible deniability, we find that involving cryptography as done in our second approach is required. We also discuss the ethical implications of our work, and highlight that a more meaningful legislative framework is required for a more transparent and ethical use of generative modeling.

Label-Only Membership Inference Attacks

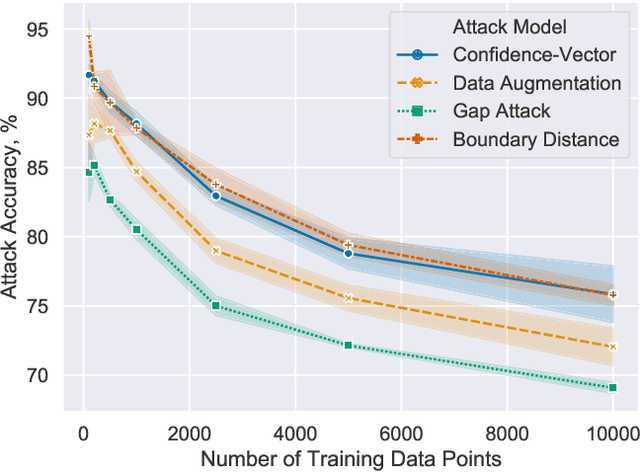

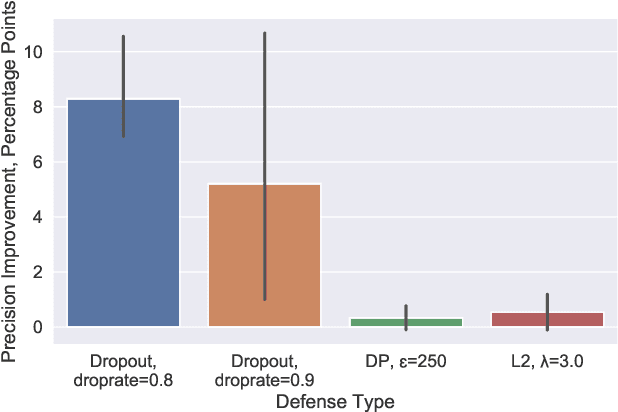

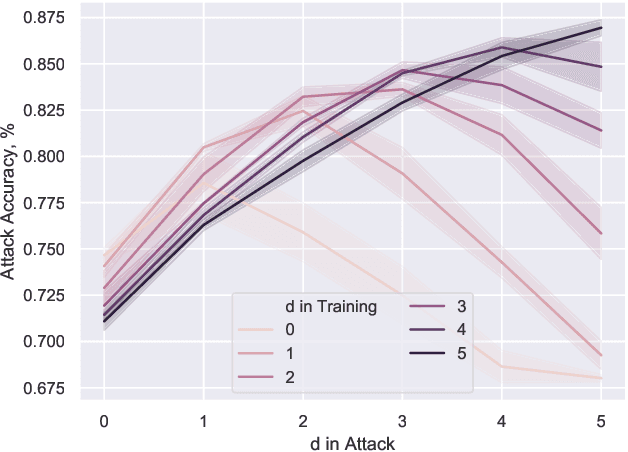

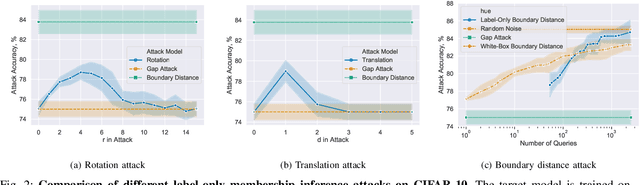

Jul 28, 2020 · Christopher A. Choquette Choo, Florian Tramer, Nicholas Carlini, Nicolas Papernot

Membership inference attacks are one of the simplest forms of privacy leakage for machine learning models: given a data point and model, determine whether the point was used to train the model. Existing membership inference attacks exploit models' abnormal confidence when queried on their training data. These attacks do not apply if the adversary only gets access to models' predicted labels, without a confidence measure. In this paper, we introduce label-only membership inference attacks. Instead of relying on confidence scores, our attacks evaluate the robustness of a model's predicted labels under perturbations to obtain a fine-grained membership signal. These perturbations include common data augmentations or adversarial examples. We empirically show that our label-only membership inference attacks perform on par with prior attacks that required access to model confidences. We further demonstrate that label-only attacks break multiple defenses against membership inference attacks that (implicitly or explicitly) rely on a phenomenon we call confidence masking. These defenses modify a model's confidence scores in order to thwart attacks, but leave the model's predicted labels unchanged. Our label-only attacks demonstrate that confidence masking is not a viable defense strategy against membership inference. Finally, we investigate worst-case label-only attacks that infer membership for a small number of outlier data points. We show that label-only attacks also match confidence-based attacks in this setting. We find that training models with differential privacy and (strong) L2 regularization are the only known defense strategies that successfully prevent all attacks. This remains true even when the differential privacy budget is too high to offer meaningful provable guarantees.
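The idea of scoring membership from labels alone can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes Gaussian noise as a simple stand-in for the data augmentations and adversarial examples mentioned in the abstract, and `label_only_membership_score`, `toy_predict_label`, `n_queries`, and `noise_std` are hypothetical names chosen for illustration. The score is the fraction of perturbed copies of a point that the model still assigns to the expected label; an adversary would compare it against a threshold calibrated separately.

```python
import numpy as np


def label_only_membership_score(predict_label, x, y, n_queries=100,
                                noise_std=0.1, seed=0):
    """Fraction of noisy copies of x that the model still labels as y.

    predict_label is a black-box function returning only a hard label,
    which is all the adversary is assumed to observe.
    """
    rng = np.random.default_rng(seed)
    # Assumption: Gaussian noise stands in for augmentations/adversarial search.
    noisy = x + rng.normal(0.0, noise_std, size=(n_queries,) + x.shape)
    labels = np.array([predict_label(z) for z in noisy])
    return float(np.mean(labels == y))


def toy_predict_label(z):
    """Hypothetical black-box model: a fixed linear classifier."""
    return int(z.sum() > 0.0)


# A point far from the decision boundary keeps its label under noise,
# while a point on the boundary flips often.
score_far = label_only_membership_score(toy_predict_label, np.full(4, 5.0), 1)
score_near = label_only_membership_score(toy_predict_label, np.zeros(4), 1)
```

A higher score means the predicted label is more robust around the point, which this style of attack treats as evidence that the point was in the training set.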

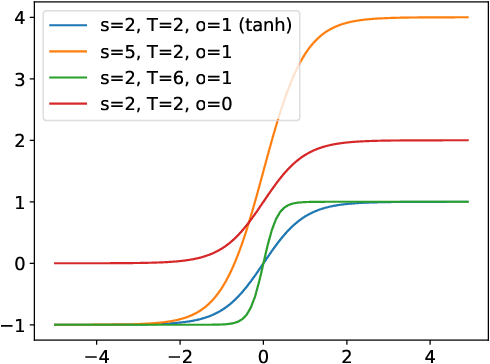

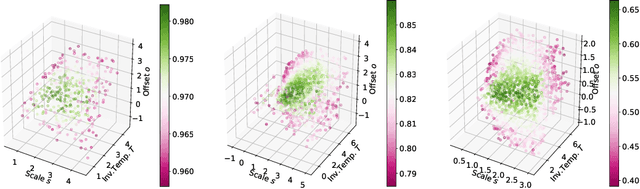

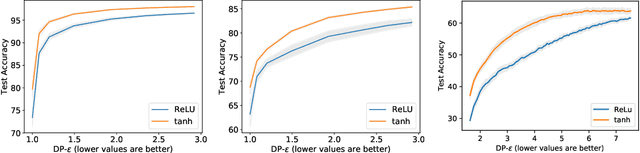

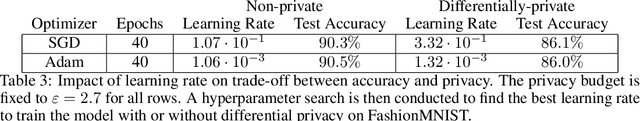

Tempered Sigmoid Activations for Deep Learning with Differential Privacy

Jul 28, 2020 · Nicolas Papernot, Abhradeep Thakurta, Shuang Song, Steve Chien, Úlfar Erlingsson

Because learning sometimes involves sensitive data, machine learning algorithms have been extended to offer privacy for training data. In practice, this has been mostly an afterthought, with privacy-preserving models obtained by re-running training with a different optimizer, but using the model architectures that already performed well in a non-privacy-preserving setting. This approach leads to less than ideal privacy/utility tradeoffs, as we show here. Instead, we propose that model architectures be chosen ab initio explicitly for privacy-preserving training. To provide guarantees under the gold standard of differential privacy, one must bound as strictly as possible how individual training points can possibly affect model updates. In this paper, we are the first to observe that the choice of activation function is central to bounding the sensitivity of privacy-preserving deep learning. We demonstrate analytically and experimentally how a general family of bounded activation functions, the tempered sigmoids, consistently outperforms unbounded activation functions like ReLU. Using this paradigm, we achieve new state-of-the-art accuracy on MNIST, FashionMNIST, and CIFAR10 without any modification of the learning procedure fundamentals or differential privacy analysis.
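The abstract does not spell out the functional form of a tempered sigmoid. The sketch below assumes a sigmoid with a scale `s`, an inverse temperature `T`, and an offset `o`, that is, `s / (1 + exp(-T * x)) - o`; these parameter names and the defaults are assumptions made for illustration. Under this form, the choice `s = 2, T = 2, o = 1` reduces to `tanh`, and the output always stays inside the interval `(-o, s - o)`, unlike ReLU.

```python
import numpy as np


def tempered_sigmoid(x, s=2.0, T=2.0, o=1.0):
    """Bounded activation: scaled, tempered, and shifted sigmoid.

    Assumed parameterization: s sets the output range, T the steepness,
    and o the vertical offset. Outputs lie strictly in (-o, s - o).
    """
    return s / (1.0 + np.exp(-T * x)) - o


def relu(x):
    """Unbounded activation, shown for contrast."""
    return np.maximum(x, 0.0)


x = np.linspace(-5.0, 5.0, 11)
bounded = tempered_sigmoid(x)   # stays within (-1, 1) for the defaults
unbounded = relu(x)             # grows linearly with x
```

Because the activations are bounded, large pre-activations cannot blow up the outputs of a layer, which is the property the abstract connects to bounding sensitivity.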

SoK: The Faults in our ASRs: An Overview of Attacks against Automatic Speech Recognition and Speaker Identification Systems

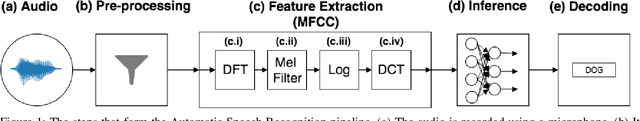

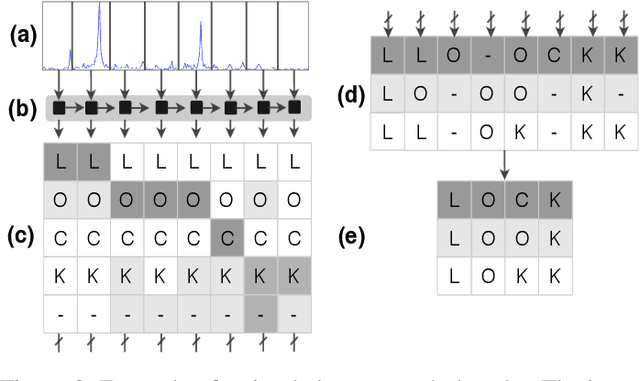

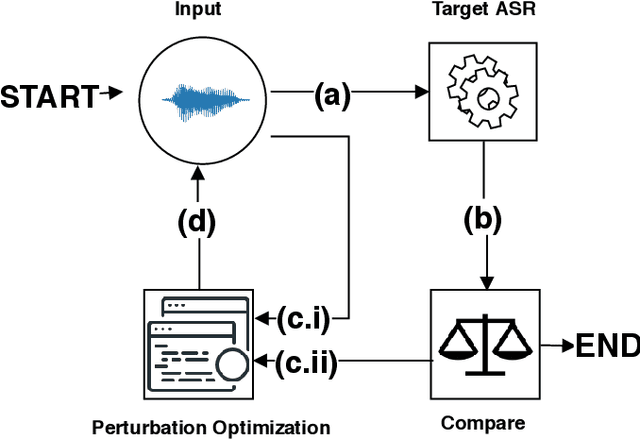

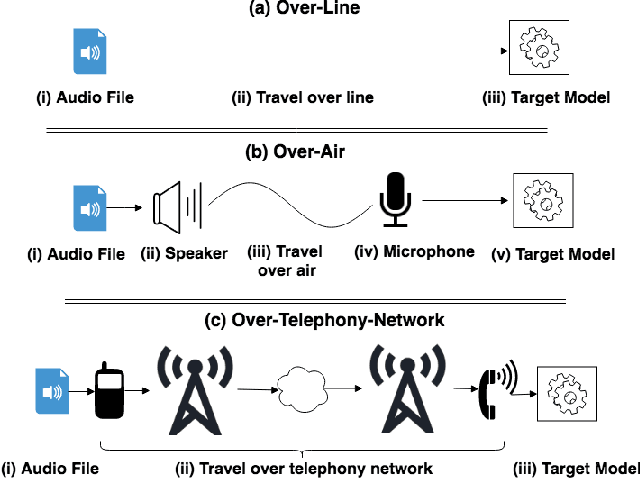

Jul 21, 2020 · Hadi Abdullah, Kevin Warren, Vincent Bindschaedler, Nicolas Papernot, Patrick Traynor

Speech and speaker recognition systems are employed in a variety of applications, from personal assistants to telephony surveillance and biometric authentication. The wide deployment of these systems has been made possible by the improved accuracy in neural networks. Like other systems based on neural networks, recent research has demonstrated that speech and speaker recognition systems are vulnerable to attacks using manipulated inputs. However, as we demonstrate in this paper, the end-to-end architecture of speech and speaker systems and the nature of their inputs make attacks and defenses against them substantially different than those in the image space. We demonstrate this first by systematizing existing research in this space and providing a taxonomy through which the community can evaluate future work. We then demonstrate experimentally that attacks against these models almost universally fail to transfer. In so doing, we argue that substantial additional work is required to provide adequate mitigations in this space.

The Faults in our ASRs: An Overview of Attacks against Automatic Speech Recognition and Speaker Identification Systems

Jul 13, 2020 · Hadi Abdullah, Kevin Warren, Vincent Bindschaedler, Nicolas Papernot, Patrick Traynor

Speech and speaker recognition systems are employed in a variety of applications, from personal assistants to telephony surveillance and biometric authentication. The wide deployment of these systems has been made possible by the improved accuracy in neural networks. Like other systems based on neural networks, recent research has demonstrated that speech and speaker recognition systems are vulnerable to attacks using manipulated inputs. However, as we demonstrate in this paper, the end-to-end architecture of speech and speaker systems and the nature of their inputs make attacks and defenses against them substantially different than those in the image space. We demonstrate this first by systematizing existing research in this space and providing a taxonomy through which the community can evaluate future work. We then demonstrate experimentally that attacks against these models almost universally fail to transfer. In so doing, we argue that substantial additional work is required to provide adequate mitigations in this space.

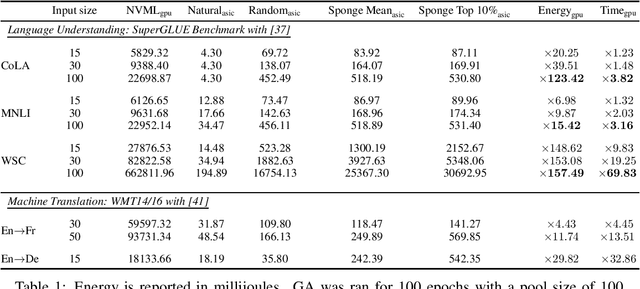

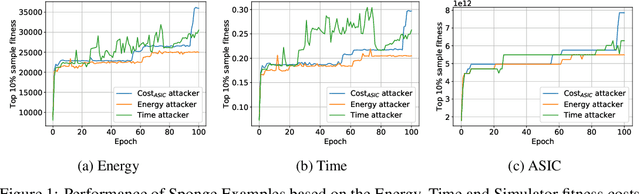

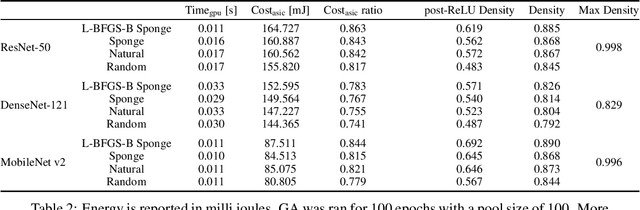

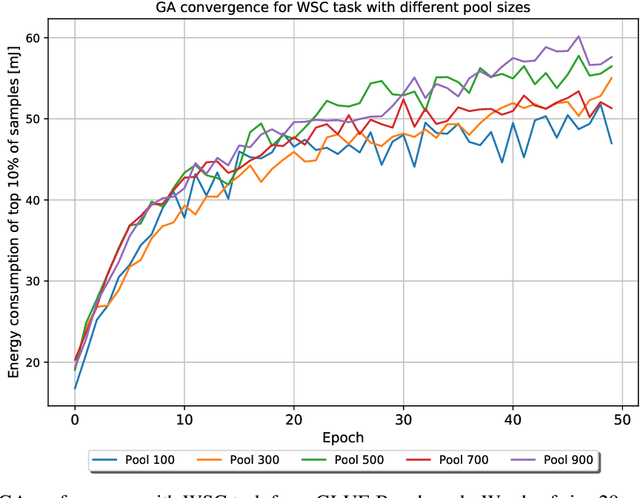

Sponge Examples: Energy-Latency Attacks on Neural Networks

Jun 05, 2020 · Ilia Shumailov, Yiren Zhao, Daniel Bates, Nicolas Papernot, Robert Mullins, Ross Anderson

The high energy costs of neural network training and inference led to the use of acceleration hardware such as GPUs and TPUs. While this enabled us to train large-scale neural networks in datacenters and deploy them on edge devices, the focus so far is on average-case performance. In this work, we introduce a novel threat vector against neural networks whose energy consumption or decision latency are critical. We show how adversaries can exploit carefully crafted **sponge examples**, which are inputs designed to maximise energy consumption and latency. We mount two variants of this attack on established vision and language models, increasing energy consumption by a factor of 10 to 200. Our attacks can also be used to delay decisions where a network has critical real-time performance, such as in perception for autonomous vehicles. We demonstrate the portability of our malicious inputs across CPUs and a variety of hardware accelerator chips including GPUs, and an ASIC simulator. We conclude by proposing a defense strategy which mitigates our attack by shifting the analysis of energy consumption in hardware from an average-case to a worst-case perspective.

On the Robustness of Cooperative Multi-Agent Reinforcement Learning

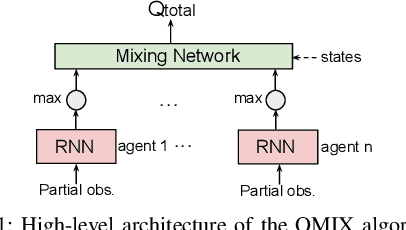

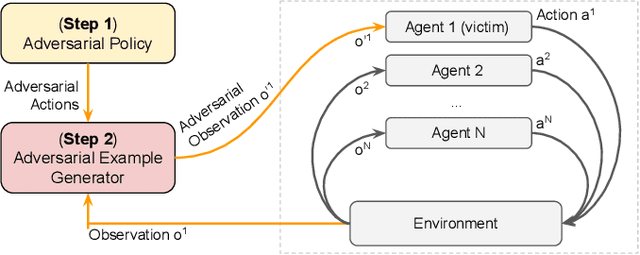

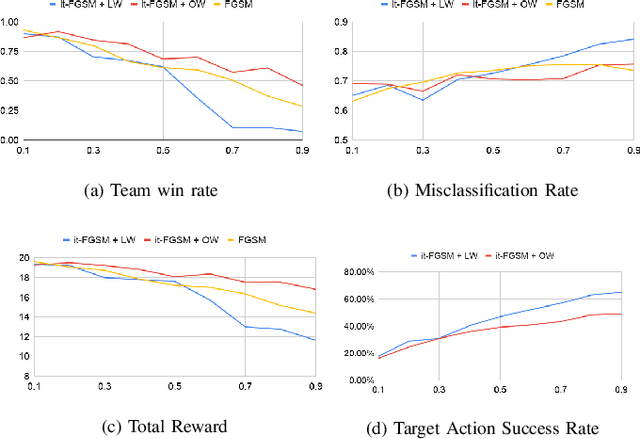

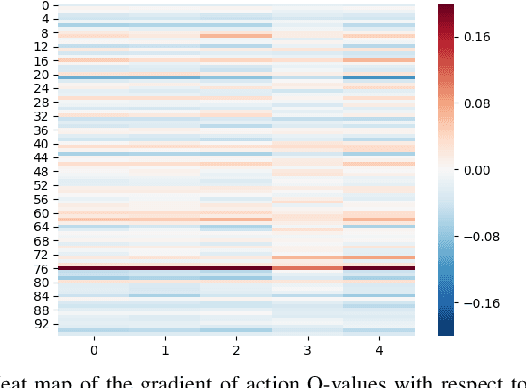

Mar 08, 2020 · Jieyu Lin, Kristina Dzeparoska, Sai Qian Zhang, Alberto Leon-Garcia, Nicolas Papernot

In cooperative multi-agent reinforcement learning (c-MARL), agents learn to cooperatively take actions as a team to maximize a total team reward. We analyze the robustness of c-MARL to adversaries capable of attacking one of the agents on a team. Through the ability to manipulate this agent's observations, the adversary seeks to decrease the total team reward. Attacking c-MARL is challenging for three reasons: first, it is difficult to estimate team rewards or how they are impacted by an agent mispredicting; second, models are non-differentiable; and third, the feature space is low-dimensional. Thus, we introduce a novel attack. The attacker first trains a policy network with reinforcement learning to find a wrong action it should encourage the victim agent to take. Then, the adversary uses targeted adversarial examples to force the victim to take this action. Our results on the StarCraft II multi-agent benchmark demonstrate that c-MARL teams are highly vulnerable to perturbations applied to one of their agents' observations. By attacking a single agent, our attack method has a highly negative impact on the overall team reward, reducing it from 20 to 9.4. This causes the team's winning rate to drop from 98.9% to 0%.

On the Effectiveness of Mitigating Data Poisoning Attacks with Gradient Shaping

Feb 27, 2020 · Sanghyun Hong, Varun Chandrasekaran, Yiğitcan Kaya, Tudor Dumitraş, Nicolas Papernot

Machine learning algorithms are vulnerable to data poisoning attacks. Prior taxonomies that focus on specific scenarios, e.g., indiscriminate or targeted, have enabled defenses for the corresponding subset of known attacks. Yet, this introduces an inevitable arms race between adversaries and defenders. In this work, we study the feasibility of an attack-agnostic defense relying on artifacts that are common to all poisoning attacks. Specifically, we focus on a common element between all attacks: they modify gradients computed to train the model. We identify two main artifacts of gradients computed in the presence of poison: (1) their $\ell_2$ norms have significantly higher magnitudes than those of clean gradients, and (2) their orientation differs from clean gradients. Based on these observations, we propose the prerequisite for a generic poisoning defense: it must bound gradient magnitudes and minimize differences in orientation. We call this gradient shaping. As an exemplar tool to evaluate the feasibility of gradient shaping, we use differentially private stochastic gradient descent (DP-SGD), which clips and perturbs individual gradients during training to obtain privacy guarantees. We find that DP-SGD, even in configurations that do not result in meaningful privacy guarantees, increases the model's robustness to indiscriminate attacks. It also mitigates worst-case targeted attacks and increases the adversary's cost in multi-poison scenarios. The only attack we find DP-SGD to be ineffective against is a strong, yet unrealistic, indiscriminate attack. Our results suggest that, while we currently lack a generic poisoning defense, gradient shaping is a promising direction for future research.
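The clip-and-perturb step that the abstract attributes to DP-SGD can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' experimental setup: `dp_sgd_step`, `clip_norm`, `noise_multiplier`, and `lr` are hypothetical names, gradients are assumed to be supplied per example as rows of an array, and no privacy accounting is performed. Each per-example gradient is rescaled so its $\ell_2$ norm is at most `clip_norm`, Gaussian noise is added to the sum, and the noisy average drives the update.

```python
import numpy as np


def dp_sgd_step(params, per_example_grads, lr=0.1, clip_norm=1.0,
                noise_multiplier=1.0, rng=None):
    """One clip-and-perturb update on a flat parameter vector.

    per_example_grads has shape (batch_size, dim): one gradient per example.
    """
    rng = np.random.default_rng() if rng is None else rng
    grads = np.asarray(per_example_grads, dtype=float)

    # Bound each gradient's magnitude: scale down rows whose L2 norm
    # exceeds clip_norm and leave smaller ones untouched.
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = grads * scale

    # Perturb the summed gradient with Gaussian noise whose standard
    # deviation is proportional to the clipping bound.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=params.shape)
    noisy_mean = (clipped.sum(axis=0) + noise) / len(grads)

    return params - lr * noisy_mean


# Usage: the first gradient has norm 5 and is clipped to norm 1,
# the second has norm 0.5 and passes through unchanged.
grads = np.array([[3.0, 4.0], [0.3, 0.4]])
updated = dp_sgd_step(np.zeros(2), grads, noise_multiplier=0.0)
```

Setting `noise_multiplier=0.0` in the usage line isolates the effect of clipping; in the gradient-shaping view described above, clipping bounds the magnitude a poisoned gradient can contribute, while the added noise is the perturbation part.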
