Natalia Ares

QuantGraph: A Receding-Horizon Quantum Graph Solver

Dec 17, 2025

Abstract: Dynamic programming is a cornerstone of graph-based optimization. While effective, it scales unfavorably with problem size. In this work, we present QuantGraph, a two-stage quantum-enhanced framework that casts local and global graph-optimization problems as quantum searches over discrete trajectory spaces. The solver is designed to operate efficiently by first finding a sequence of locally optimal transitions in the graph (local stage), without considering full trajectories. The accumulated cost of these transitions acts as a threshold that prunes the search space (up to 60% reduction for certain examples). The subsequent global stage, based on this threshold, refines the solution. Both stages utilize variants of the Grover-adaptive-search algorithm. To achieve scalability and robustness, we draw on principles from control theory and embed QuantGraph's global stage within a receding-horizon model-predictive-control scheme. This classical layer stabilizes and guides the quantum search, improving precision and reducing computational burden. In practice, the resulting closed-loop system exhibits robust behavior and lower overall complexity. Notably, for a fixed query budget, QuantGraph attains a 2x increase in control-discretization precision while still benefiting from Grover search's inherent quadratic speedup compared to classical methods.
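As a rough illustration of the quadratic speedup mentioned in this abstract, the sketch below computes the textbook Grover iteration count for a search space of `n_items` candidates containing `n_marked` solutions, together with the resulting success probability. The function names are hypothetical and the code is not taken from QuantGraph. It also assumes the number of marked items is known, whereas Grover adaptive search typically has to work without that knowledge.

```python
import math

def grover_iterations(n_items: int, n_marked: int = 1) -> int:
    """Textbook near-optimal Grover iteration count, about (pi/4) * sqrt(N/M)."""
    if not 0 < n_marked <= n_items:
        raise ValueError("need 0 < n_marked <= n_items")
    # Rotation half-angle: each Grover iteration rotates the state by 2 * theta.
    theta = math.asin(math.sqrt(n_marked / n_items))
    return max(0, round(math.pi / (4 * theta) - 0.5))

def success_probability(n_items: int, n_marked: int, k: int) -> float:
    """Probability of measuring a marked item after k Grover iterations."""
    theta = math.asin(math.sqrt(n_marked / n_items))
    return math.sin((2 * k + 1) * theta) ** 2

# Illustrative numbers only: a 1024-element trajectory space, and the same
# space after a hypothetical 60% pruning (about 410 candidates remain).
full = grover_iterations(1024)
pruned = grover_iterations(410)
print(full, pruned)
```

Because the iteration count grows with the square root of the search-space size, pruning the space before the search lowers the number of oracle queries, though less than proportionally.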

Learning Physical Systems: Symplectification via Gauge Fixing in Dirac Structures

Jun 23, 2025

Abstract: Physics-informed deep learning has achieved remarkable progress by embedding geometric priors, such as Hamiltonian symmetries and variational principles, into neural networks, enabling structure-preserving models that extrapolate with high accuracy. However, in systems with dissipation and holonomic constraints, ubiquitous in legged locomotion and multibody robotics, the canonical symplectic form becomes degenerate, undermining the very invariants that guarantee stability and long-term prediction. In this work, we tackle this foundational limitation by introducing Presymplectification Networks (PSNs), the first framework to learn the symplectification lift via Dirac structures, restoring a non-degenerate symplectic geometry by embedding constrained systems into a higher-dimensional manifold. Our architecture combines a recurrent encoder with a flow-matching objective to learn the augmented phase-space dynamics end-to-end. We then attach a lightweight Symplectic Network (SympNet) to forecast constrained trajectories while preserving energy, momentum, and constraint satisfaction. We demonstrate our method on the dynamics of the ANYmal quadruped robot, a challenging contact-rich, multibody system. To the best of our knowledge, this is the first framework that effectively bridges the gap between constrained, dissipative mechanical systems and symplectic learning, unlocking a whole new class of geometric machine learning models, grounded in first principles yet adaptable from data.

Quantum computing and artificial intelligence: status and perspectives

May 29, 2025

Abstract: This white paper discusses and explores the various points of intersection between quantum computing and artificial intelligence (AI). It describes how quantum computing could support the development of innovative AI solutions. It also examines use cases of classical AI that can empower research and development in quantum technologies, with a focus on quantum computing and quantum sensing. The purpose of this white paper is to provide a long-term research agenda aimed at addressing foundational questions about how AI and quantum computing interact and benefit one another. It concludes with a set of recommendations and challenges, including how to orchestrate the proposed theoretical work, align quantum AI developments with quantum hardware roadmaps, estimate both classical and quantum resources - especially with the goal of mitigating and optimizing energy consumption - advance this emerging hybrid software engineering discipline, and enhance European industrial competitiveness while considering societal implications.

A Physics-Inspired Optimizer: Velocity Regularized Adam

May 19, 2025

Abstract: We introduce Velocity-Regularized Adam (VRAdam), a physics-inspired optimizer for training deep neural networks that draws on quartic kinetic-energy terms and their stabilizing effects on various system dynamics. Previous algorithms, including the ubiquitous Adam, operate in the so-called adaptive edge-of-stability regime during training, leading to rapid oscillations and slowed convergence of the loss. VRAdam instead adds a higher-order penalty on the learning rate based on the velocity, so that the algorithm automatically slows down whenever weight updates become large. In practice, we observe that the effective dynamic learning rate shrinks in high-velocity regimes, damping oscillations and allowing for a more aggressive base step size when necessary without divergence. By combining this velocity-based regularizer for global damping with the per-parameter scaling of Adam to create a hybrid optimizer, we demonstrate that VRAdam consistently outperforms standard optimizers, including AdamW. We benchmark various tasks such as image classification, language modeling, image generation and generative modeling using diverse architectures and training methodologies including Convolutional Neural Networks (CNNs), Transformers, and GFlowNets.
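To make the idea of a velocity-dependent learning rate concrete, here is a minimal pure-Python sketch of an Adam-style step whose global step size shrinks as the momentum ("velocity") grows. The damping rule `lr / (1 + damping * ||m_hat||^2)`, the `damping` parameter, and the function name are assumptions chosen for illustration. The abstract does not state the exact VRAdam update, so this should not be read as the authors' algorithm.

```python
import math

def velocity_damped_adam_step(params, grads, state, lr=1e-3, beta1=0.9,
                              beta2=0.999, damping=1.0, eps=1e-8):
    """One Adam-like update with a hypothetical velocity-based global damping."""
    state["t"] += 1
    t = state["t"]
    # Standard Adam first- and second-moment estimates.
    state["m"] = [beta1 * m + (1 - beta1) * g for m, g in zip(state["m"], grads)]
    state["v"] = [beta2 * v + (1 - beta2) * g * g for v, g in zip(state["v"], grads)]
    m_hat = [m / (1 - beta1 ** t) for m in state["m"]]
    v_hat = [v / (1 - beta2 ** t) for v in state["v"]]
    # Assumed damping rule: a larger squared velocity gives a smaller step.
    speed_sq = sum(m * m for m in m_hat)
    lr_eff = lr / (1.0 + damping * speed_sq)
    # Per-parameter Adam scaling combined with the globally damped rate.
    new_params = [p - lr_eff * m / (math.sqrt(v) + eps)
                  for p, m, v in zip(params, m_hat, v_hat)]
    return new_params, lr_eff

def fresh_state(n):
    """Zero-initialized optimizer state for n parameters."""
    return {"t": 0, "m": [0.0] * n, "v": [0.0] * n}

# Small gradients leave the step size nearly untouched; large ones shrink it.
_, lr_small = velocity_damped_adam_step([1.0, 1.0], [0.01, 0.01], fresh_state(2))
_, lr_large = velocity_damped_adam_step([1.0, 1.0], [10.0, 10.0], fresh_state(2))
print(lr_small, lr_large)
```

In this sketch the per-parameter scaling is ordinary Adam, and only the scalar `lr_eff` carries the velocity dependence, mirroring the abstract's split between global damping and per-parameter scaling.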

Meta-learning characteristics and dynamics of quantum systems

Mar 13, 2025

Abstract: While machine learning holds great promise for quantum technologies, most current methods focus on predicting or controlling a specific quantum system. Meta-learning approaches, however, can adapt to new systems for which little data is available, by leveraging knowledge obtained from previous data associated with similar systems. In this paper, we meta-learn dynamics and characteristics of closed and open two-level systems, as well as the Heisenberg model. Based on experimental data of a Loss-DiVincenzo spin-qubit hosted in a Ge/Si core/shell nanowire for different gate voltage configurations, we predict qubit characteristics, i.e., the $g$-factor and Rabi frequency, using meta-learning. The algorithm we introduce improves upon previous state-of-the-art meta-learning methods for physics-based systems by introducing novel techniques such as adaptive learning rates and a global optimizer for improved robustness and increased computational efficiency. We benchmark our method against other meta-learning methods, a vanilla transformer, and a multilayer perceptron, and demonstrate improved performance.

MetaSym: A Symplectic Meta-learning Framework for Physical Intelligence

Feb 23, 2025

Abstract: Scalable and generalizable physics-aware deep learning has long been considered a significant challenge with various applications across diverse domains ranging from robotics to molecular dynamics. Central to almost all physical systems are symplectic forms, the geometric backbone that underpins fundamental invariants like energy and momentum. In this work, we introduce a novel deep learning architecture, MetaSym. In particular, MetaSym combines a strong symplectic inductive bias obtained from a symplectic encoder and an autoregressive decoder with meta-attention. This principled design ensures that core physical invariants remain intact while allowing flexible, data-efficient adaptation to system heterogeneities. We benchmark MetaSym on highly varied datasets such as a high-dimensional spring mesh system (Otness et al., 2021), an open quantum system with dissipation and measurement backaction, and robotics-inspired quadrotor dynamics. Our results demonstrate superior performance in modeling dynamics under few-shot adaptation, outperforming state-of-the-art baselines with far larger models.

Quantum feedback control with a transformer neural network architecture

Nov 28, 2024

Abstract: Attention-based neural networks such as transformers have revolutionized various fields such as natural language processing, genomics, and vision. Here, we demonstrate the use of transformers for quantum feedback control through a supervised learning approach. In particular, due to the transformer's ability to capture long-range temporal correlations and its training efficiency, we show that it can surpass some of the limitations of previous control approaches, e.g., those based on recurrent neural networks trained using a similar approach or reinforcement learning. We numerically show, for the example of state stabilization of a two-level system, that our bespoke transformer architecture can achieve unit fidelity to a target state in a short time even in the presence of inefficient measurement and Hamiltonian perturbations that were not included in the training set. We also demonstrate that this approach generalizes well to the control of non-Markovian systems. Our approach can be used for quantum error correction, fast control of quantum states in the presence of colored noise, as well as real-time tuning and characterization of quantum devices.

Fully autonomous tuning of a spin qubit

Feb 06, 2024

Abstract: Spanning over two decades, the study of qubits in semiconductors for quantum computing has yielded significant breakthroughs. However, the development of large-scale semiconductor quantum circuits is still limited by challenges in efficiently tuning and operating these circuits. Identifying optimal operating conditions for these qubits is complex, involving the exploration of vast parameter spaces. This presents a real 'needle in the haystack' problem, which, until now, has resisted complete automation due to device variability and fabrication imperfections. In this study, we present the first fully autonomous tuning of a semiconductor qubit, from a grounded device to Rabi oscillations, a clear indication of successful qubit operation. We demonstrate this automation, achieved without human intervention, in a Ge/Si core/shell nanowire device. Our approach integrates deep learning, Bayesian optimization, and computer vision techniques. We expect this automation algorithm to apply to a wide range of semiconductor qubit devices, allowing for statistical studies of qubit quality metrics. As a demonstration of the potential of full automation, we characterise how the Rabi frequency and g-factor depend on barrier gate voltages for one of the qubits found by the algorithm. Twenty years after the initial demonstrations of spin qubit operation, this significant advancement is poised to finally catalyze the operation of large, previously unexplored quantum circuits.

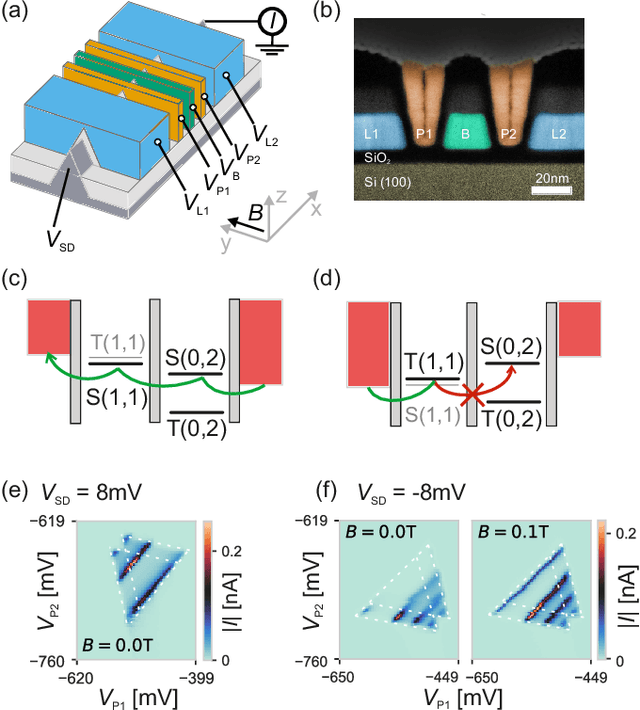

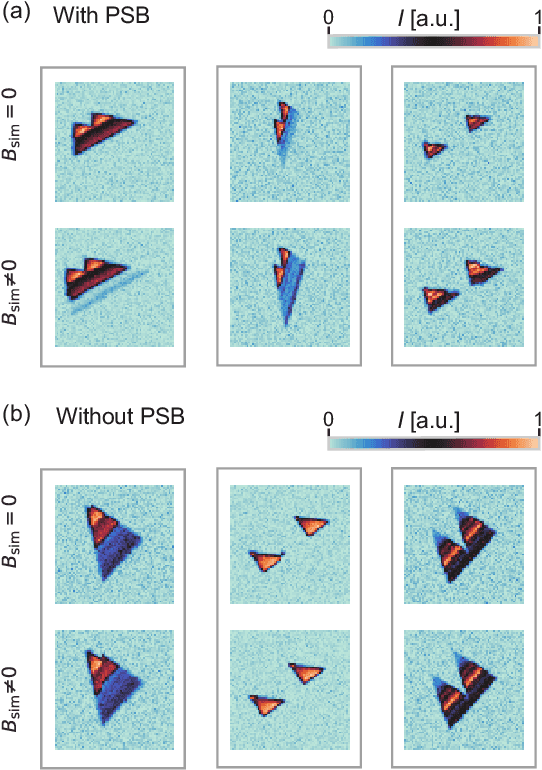

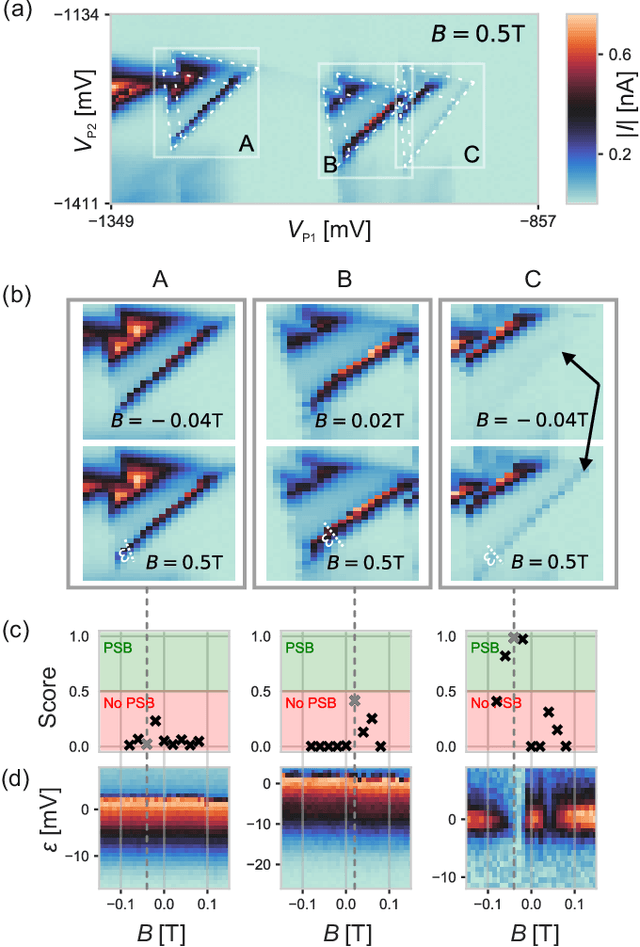

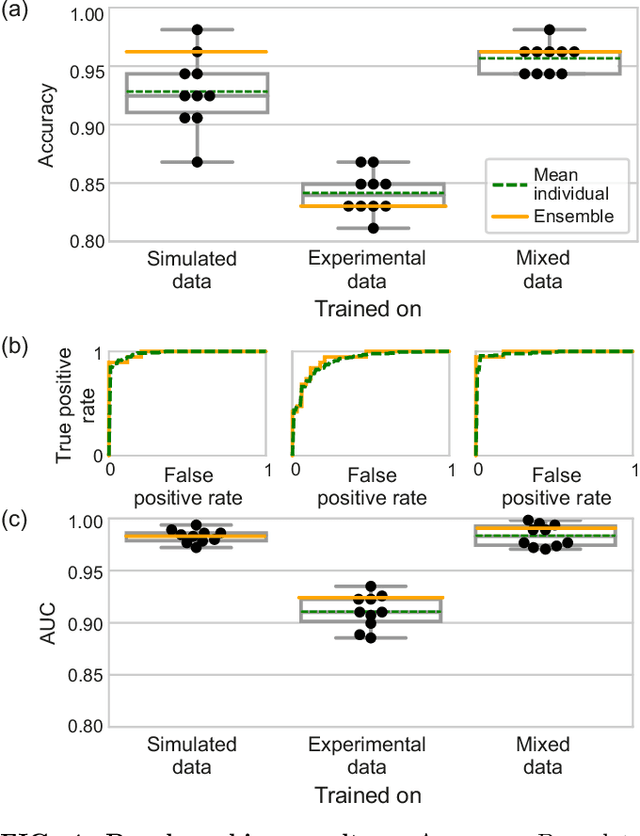

Identifying Pauli spin blockade using deep learning

Feb 01, 2022

Abstract: Pauli spin blockade (PSB) can be employed as a great resource for spin qubit initialisation and readout even at elevated temperatures, but it can be difficult to identify. We present a machine learning algorithm capable of automatically identifying PSB using charge transport measurements. The scarcity of PSB data is circumvented by training the algorithm with simulated data and by using cross-device validation. We demonstrate our approach on a silicon field-effect transistor device and report an accuracy of 96% on different test devices, giving evidence that the approach is robust to device variability. The approach is expected to be employable across all types of quantum dot devices.
