Generalization Bounds for Stochastic Gradient Descent via Localized $\varepsilon$-Covers

Sep 19, 2022 · Sejun Park, Umut Şimşekli, Murat A. Erdogdu

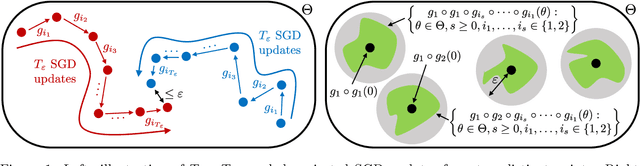

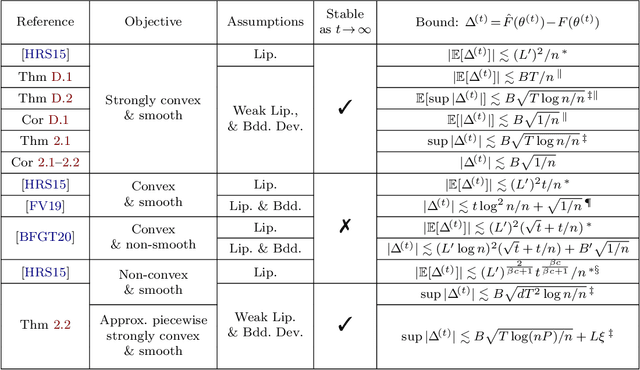

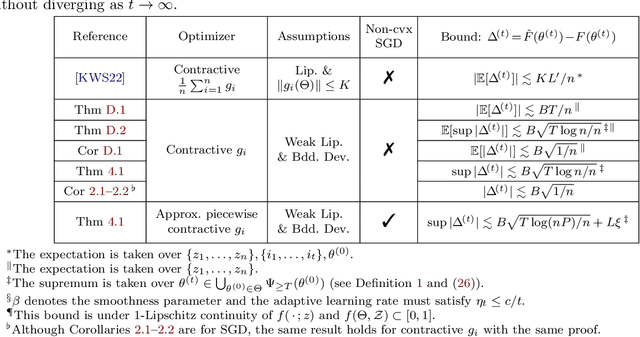

In this paper, we propose a new covering technique localized for the trajectories of SGD. This localization provides an algorithm-specific complexity measured by the covering number, which can have dimension-independent cardinality, in contrast to standard uniform covering arguments that result in exponential dimension dependency. Based on this localized construction, we show that if the objective function is a finite perturbation of a piecewise strongly convex and smooth function with $P$ pieces, i.e., non-convex and non-smooth in general, the generalization error can be upper bounded by $O(\sqrt{(\log n\log(nP))/n})$, where $n$ is the number of data samples. In particular, this rate is independent of dimension and requires neither early stopping nor a decaying step size. Finally, we employ these results in various contexts and derive generalization bounds for multi-index linear models, multi-class support vector machines, and $K$-means clustering for both hard and soft label setups, improving the known state-of-the-art rates.

$p$-DkNN: Out-of-Distribution Detection Through Statistical Testing of Deep Representations

Jul 25, 2022 · Adam Dziedzic, Stephan Rabanser, Mohammad Yaghini, Armin Ale, Murat A. Erdogdu, Nicolas Papernot

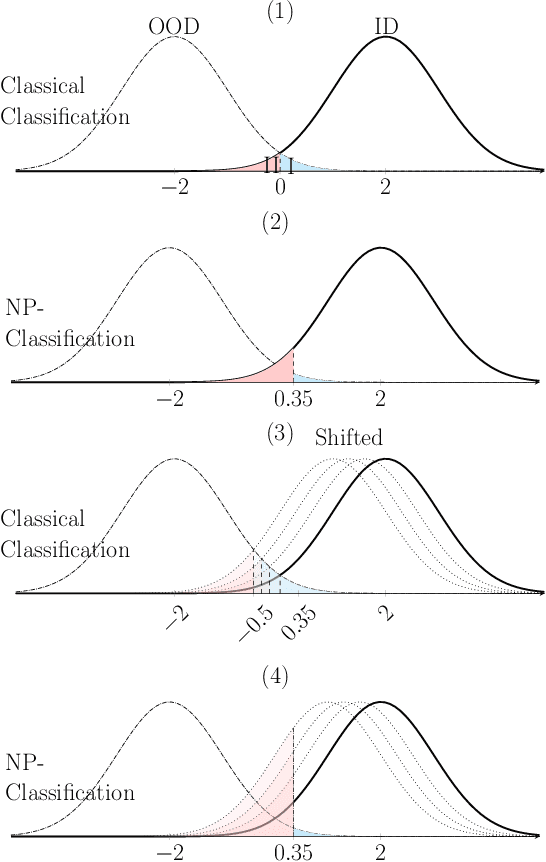

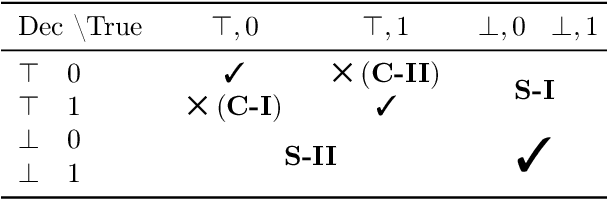

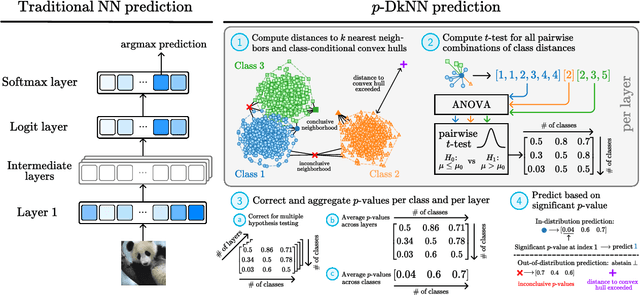

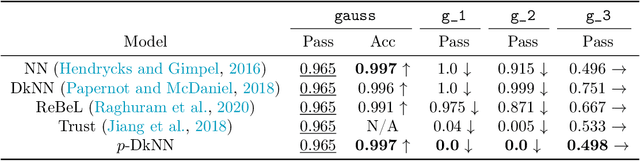

The lack of well-calibrated confidence estimates makes neural networks inadequate in safety-critical domains such as autonomous driving or healthcare. In these settings, having the ability to abstain from making a prediction on out-of-distribution (OOD) data can be as important as correctly classifying in-distribution data. We introduce $p$-DkNN, a novel inference procedure that takes a trained deep neural network and analyzes the similarity structures of its intermediate hidden representations to compute $p$-values associated with the end-to-end model prediction. The intuition is that statistical tests performed on latent representations can not only serve as a classifier but also offer a statistically well-founded estimation of uncertainty. $p$-DkNN is scalable and leverages the composition of representations learned by hidden layers, which makes deep representation learning successful. Our theoretical analysis builds on Neyman-Pearson classification and connects it to recent advances in selective classification (reject option). We demonstrate advantageous trade-offs between abstaining from predicting on OOD inputs and maintaining high accuracy on in-distribution inputs. We find that $p$-DkNN forces adaptive attackers crafting adversarial examples, a form of worst-case OOD inputs, to introduce semantically meaningful changes to the inputs.

High-dimensional Asymptotics of Feature Learning: How One Gradient Step Improves the Representation

May 03, 2022 · Jimmy Ba, Murat A. Erdogdu, Taiji Suzuki, Zhichao Wang, Denny Wu, Greg Yang

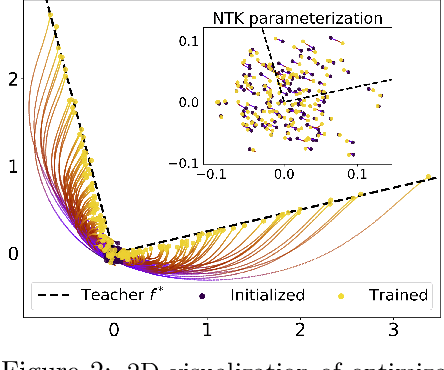

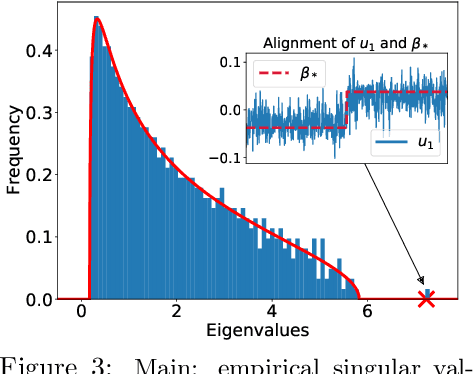

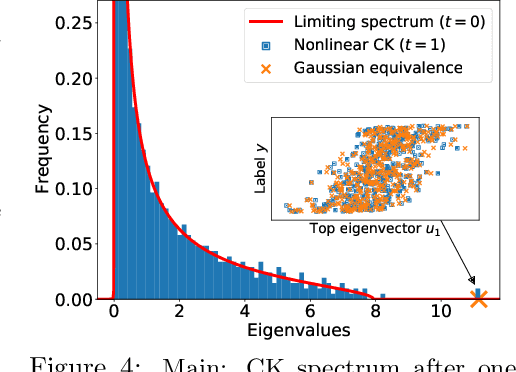

We study the first gradient descent step on the first-layer parameters $\boldsymbol{W}$ in a two-layer neural network: $f(\boldsymbol{x}) = \frac{1}{\sqrt{N}}\boldsymbol{a}^\top\sigma(\boldsymbol{W}^\top\boldsymbol{x})$, where $\boldsymbol{W}\in\mathbb{R}^{d\times N}, \boldsymbol{a}\in\mathbb{R}^{N}$ are randomly initialized, and the training objective is the empirical MSE loss: $\frac{1}{n}\sum_{i=1}^n (f(\boldsymbol{x}_i)-y_i)^2$. In the proportional asymptotic limit where $n,d,N\to\infty$ at the same rate, and an idealized student-teacher setting, we show that the first gradient update contains a rank-1 "spike", which results in an alignment between the first-layer weights and the linear component of the teacher model $f^*$. To characterize the impact of this alignment, we compute the prediction risk of ridge regression on the conjugate kernel after one gradient step on $\boldsymbol{W}$ with learning rate $\eta$, when $f^*$ is a single-index model. We consider two scalings of the first-step learning rate $\eta$. For small $\eta$, we establish a Gaussian equivalence property for the trained feature map, and prove that the learned kernel improves upon the initial random features model, but cannot defeat the best linear model on the input. For sufficiently large $\eta$, in contrast, we prove that for certain $f^*$, the same ridge estimator on trained features can go beyond this "linear regime" and outperform a wide range of random features and rotationally invariant kernels. Our results demonstrate that even one gradient step can lead to a considerable advantage over random features, and highlight the role of learning rate scaling in the initial phase of training.
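To make the object of study concrete, the first gradient step can be written out by applying the chain rule to the network and MSE loss defined above. This is only a sketch: the symbols $\boldsymbol{X}\in\mathbb{R}^{n\times d}$ (rows $\boldsymbol{x}_i^\top$), $\boldsymbol{y}\in\mathbb{R}^n$, the residual $\boldsymbol{r}$, and the constant $c$ are notation introduced here, and the paper may normalize the step size differently.

$$
\nabla_{\boldsymbol{W}} \frac{1}{n}\sum_{i=1}^n (f(\boldsymbol{x}_i)-y_i)^2
= \frac{2}{n\sqrt{N}}\,\boldsymbol{X}^\top\Big[(\boldsymbol{r}\boldsymbol{a}^\top)\odot\sigma'(\boldsymbol{X}\boldsymbol{W})\Big],
\qquad r_i = f(\boldsymbol{x}_i)-y_i,
$$

$$
\boldsymbol{W}_1 = \boldsymbol{W}_0 - \eta\,\nabla_{\boldsymbol{W}} \frac{1}{n}\sum_{i=1}^n (f(\boldsymbol{x}_i)-y_i)^2 .
$$

Heuristically, if $\sigma'(\boldsymbol{X}\boldsymbol{W})$ is replaced entrywise by a constant $c$, the bracket collapses to $c\,\boldsymbol{r}\boldsymbol{a}^\top$ and the gradient becomes $\frac{2c}{n\sqrt{N}}\boldsymbol{X}^\top\boldsymbol{r}\boldsymbol{a}^\top$, an outer product of a vector in $\mathbb{R}^d$ with $\boldsymbol{a}$, which gives one way to see where a rank-1 component can come from.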

Mirror Descent Strikes Again: Optimal Stochastic Convex Optimization under Infinite Noise Variance

Feb 23, 2022 · Nuri Mert Vural, Lu Yu, Krishnakumar Balasubramanian, Stanislav Volgushev, Murat A. Erdogdu

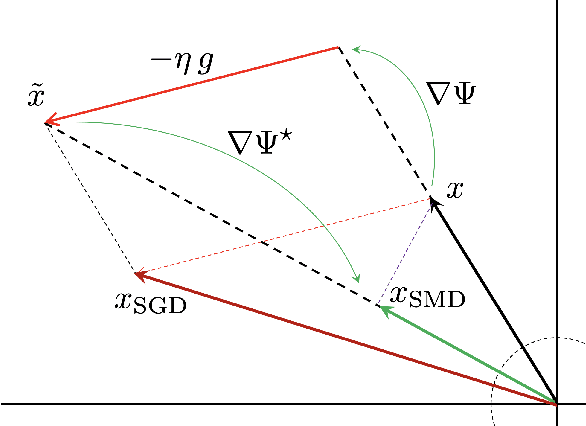

We study stochastic convex optimization under infinite noise variance. Specifically, when the stochastic gradient is unbiased and has uniformly bounded $(1+\kappa)$-th moment, for some $\kappa \in (0,1]$, we quantify the convergence rate of the Stochastic Mirror Descent algorithm with a particular class of uniformly convex mirror maps, in terms of the number of iterations, dimensionality and related geometric parameters of the optimization problem. Interestingly, this algorithm does not require any explicit gradient clipping or normalization, both of which have been extensively used in several recent empirical and theoretical works. We complement our convergence results with information-theoretic lower bounds showing that no other algorithm using only stochastic first-order oracles can achieve improved rates. Our results have several interesting consequences for devising online/streaming stochastic approximation algorithms for problems arising in robust statistics and machine learning.
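A minimal sketch of unconstrained stochastic mirror descent under heavy-tailed gradient noise may help fix ideas. Everything specific here is an assumption made for illustration, not the paper's construction: the mirror map $\psi(x) = \frac{1}{q}\sum_i |x_i|^q$ (a standard uniformly convex example for $q \ge 2$), the quadratic objective, the symmetrized Pareto noise with tail index 1.5 (zero mean, infinite variance), and the constant step size.

```python
import math
import random


def mirror_grad(x, q):
    # Gradient of the assumed mirror map psi(x) = (1/q) * sum(|x_i|^q).
    return [math.copysign(abs(xi) ** (q - 1.0), xi) for xi in x]


def mirror_grad_inv(y, q):
    # Inverse of mirror_grad, applied coordinate-wise.
    return [math.copysign(abs(yi) ** (1.0 / (q - 1.0)), yi) for yi in y]


def heavy_tailed_grad(x, target, rng, tail_index=1.5):
    # Unbiased gradient of 0.5 * ||x - target||^2 plus symmetrized Pareto noise.
    # With tail_index in (1, 2] the noise has zero mean but infinite variance.
    noise = [rng.choice((-1.0, 1.0)) * rng.paretovariate(tail_index) for _ in x]
    return [xi - ti + ni for xi, ti, ni in zip(x, target, noise)]


def stochastic_mirror_descent(x0, target, q, step, n_iters, rng):
    x = list(x0)
    avg = [0.0] * len(x)
    for t in range(1, n_iters + 1):
        g = heavy_tailed_grad(x, target, rng)
        # Step in the dual (mirror) space, then map back. No clipping is applied.
        y = [yi - step * gi for yi, gi in zip(mirror_grad(x, q), g)]
        x = mirror_grad_inv(y, q)
        # Running average of the iterates.
        avg = [a + (xi - a) / t for a, xi in zip(avg, x)]
    return avg


rng = random.Random(0)
x_bar = stochastic_mirror_descent(
    x0=[0.0, 0.0], target=[1.0, -2.0], q=3.0, step=0.01, n_iters=5000, rng=rng
)
```

With `q = 2.0` both maps reduce to the identity and the loop becomes plain SGD, which makes it easy to compare the two on the same noise sequence.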

Towards a Theory of Non-Log-Concave Sampling: First-Order Stationarity Guarantees for Langevin Monte Carlo

Feb 10, 2022 · Krishnakumar Balasubramanian, Sinho Chewi, Murat A. Erdogdu, Adil Salim, Matthew Zhang

For the task of sampling from a density $\pi \propto \exp(-V)$ on $\mathbb{R}^d$, where $V$ is possibly non-convex but $L$-gradient Lipschitz, we prove that averaged Langevin Monte Carlo outputs a sample with $\varepsilon$-relative Fisher information after $O(L^2 d^2/\varepsilon^2)$ iterations. This is the sampling analogue of complexity bounds for finding an $\varepsilon$-approximate first-order stationary point in non-convex optimization and therefore constitutes a first step towards the general theory of non-log-concave sampling. We discuss numerous extensions and applications of our result; in particular, it yields a new state-of-the-art guarantee for sampling from distributions which satisfy a Poincaré inequality.
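As a rough illustration of the algorithm being analyzed, the sketch below runs the Langevin Monte Carlo update $x_{k+1} = x_k - h\nabla V(x_k) + \sqrt{2h}\,\xi_k$ and returns the iterate at a uniformly random index, so the output is distributed as the average of the iterate laws. This is a simplified reading of "averaged" (the paper's exact averaging scheme may differ), and the potential, step size, and iteration count are hypothetical choices made only for this example.

```python
import math
import random


def grad_V(x):
    # Hypothetical potential V(x) = sum(x_i^2 / 2 + 2 * cos(x_i)).
    # Its second derivative 1 - 2 * cos(x_i) lies in [-1, 3], so V is
    # non-convex but gradient Lipschitz with L = 3.
    return [xi - 2.0 * math.sin(xi) for xi in x]


def averaged_lmc(grad, x0, step, n_iters, rng):
    # Draw the stopping index up front, independently of the Gaussian noise,
    # so the returned point is a uniformly random iterate among x_1..x_n.
    stop = rng.randint(1, n_iters)
    noise_scale = math.sqrt(2.0 * step)
    x = list(x0)
    for _ in range(stop):
        g = grad(x)
        x = [
            xi - step * gi + noise_scale * rng.gauss(0.0, 1.0)
            for xi, gi in zip(x, g)
        ]
    return x


rng = random.Random(0)
sample = averaged_lmc(grad_V, x0=[0.0, 0.0], step=0.05, n_iters=2000, rng=rng)
```

Drawing the stopping index first avoids storing the whole trajectory; the trade-off is that each call yields a single sample.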

Heavy-tailed Sampling via Transformed Unadjusted Langevin Algorithm

Jan 20, 2022 · Ye He, Krishnakumar Balasubramanian, Murat A. Erdogdu

We analyze the oracle complexity of sampling from polynomially decaying heavy-tailed target densities based on running the Unadjusted Langevin Algorithm on certain transformed versions of the target density. The closed-form transformation maps that we construct are shown to be diffeomorphisms, and are particularly suited for developing efficient diffusion-based samplers. We characterize the precise class of heavy-tailed densities for which polynomial-order oracle complexities (in dimension and inverse target accuracy) can be obtained, and provide illustrative examples. We highlight the relationship between our assumptions and functional inequalities (super and weak Poincaré inequalities) based on non-local Dirichlet forms defined via fractional Laplacian operators, used to characterize the heavy-tailed equilibrium densities of certain stable-driven stochastic differential equations.

Analysis of Langevin Monte Carlo from Poincaré to Log-Sobolev

Dec 23, 2021 · Sinho Chewi, Murat A. Erdogdu, Mufan Bill Li, Ruoqi Shen, Matthew Zhang

Classically, the continuous-time Langevin diffusion converges exponentially fast to its stationary distribution $\pi$ under the sole assumption that $\pi$ satisfies a Poincaré inequality. Using this fact to provide guarantees for the discrete-time Langevin Monte Carlo (LMC) algorithm, however, is considerably more challenging due to the need for working with chi-squared or Rényi divergences, and prior works have largely focused on strongly log-concave targets. In this work, we provide the first convergence guarantees for LMC assuming that $\pi$ satisfies either a Latała-Oleszkiewicz or modified log-Sobolev inequality, which interpolates between the Poincaré and log-Sobolev settings. Unlike prior works, our results allow for weak smoothness and do not require convexity or dissipativity conditions.

On Empirical Risk Minimization with Dependent and Heavy-Tailed Data

Sep 10, 2021 · Abhishek Roy, Krishnakumar Balasubramanian, Murat A. Erdogdu

In this work, we establish risk bounds for Empirical Risk Minimization (ERM) with both dependent and heavy-tailed data-generating processes. We do so by extending the seminal works of Mendelson [Men15, Men18] on the analysis of ERM with heavy-tailed but independent and identically distributed observations to the strictly stationary, exponentially $\beta$-mixing case. Our analysis is based on explicitly controlling the multiplier process arising from the interaction between the noise and the function evaluations on inputs. It allows for the interaction to be even polynomially heavy-tailed, which covers a significantly large class of heavy-tailed models beyond what is analyzed in the learning theory literature. We illustrate our results by deriving rates of convergence for the high-dimensional linear regression problem with dependent and heavy-tailed data.

Fractal Structure and Generalization Properties of Stochastic Optimization Algorithms

Jun 09, 2021 · Alexander Camuto, George Deligiannidis, Murat A. Erdogdu, Mert Gürbüzbalaban, Umut Şimşekli, Lingjiong Zhu

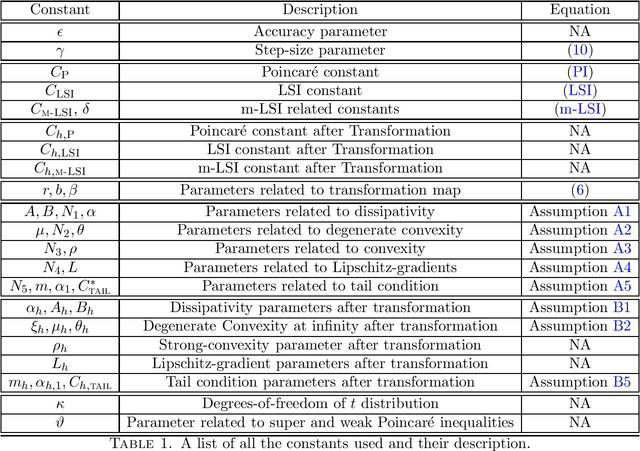

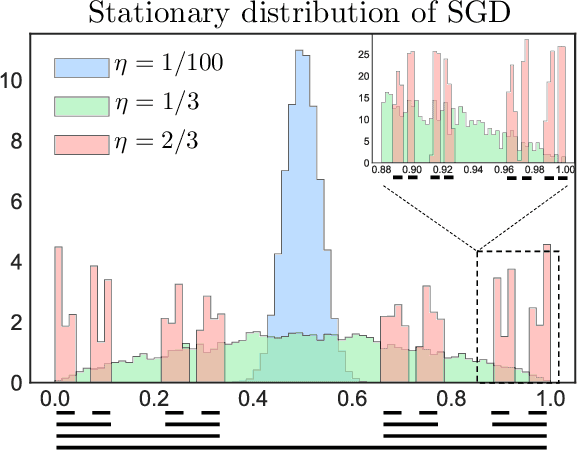

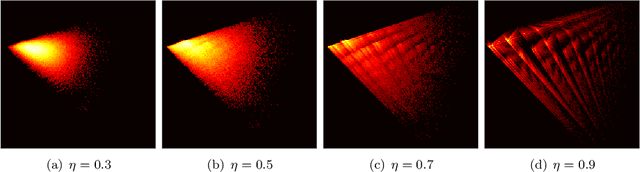

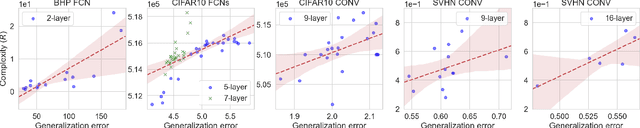

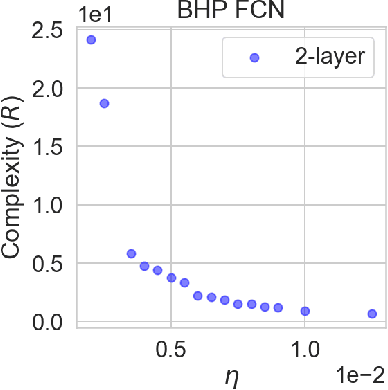

Understanding generalization in deep learning has been one of the major challenges in statistical learning theory over the last decade. While recent work has illustrated that the dataset and the training algorithm must be taken into account in order to obtain meaningful generalization bounds, it is still theoretically not clear which properties of the data and the algorithm determine the generalization performance. In this study, we approach this problem from a dynamical systems theory perspective and represent stochastic optimization algorithms as random iterated function systems (IFS). Well studied in the dynamical systems literature, such IFSs can, under mild assumptions, be shown to be ergodic with an invariant measure that is often supported on sets with a fractal structure. As our main contribution, we prove that the generalization error of a stochastic optimization algorithm can be bounded based on the 'complexity' of the fractal structure that underlies its invariant measure. Leveraging results from dynamical systems theory, we show that the generalization error can be explicitly linked to the choice of the algorithm (e.g., stochastic gradient descent, SGD), algorithm hyperparameters (e.g., step-size, batch-size), and the geometry of the problem (e.g., Hessian of the loss). We further specialize our results to specific problems (e.g., linear/logistic regression, one hidden-layered neural networks) and algorithms (e.g., SGD and preconditioned variants), and obtain analytical estimates for our bound. For modern neural networks, we develop an efficient algorithm to compute the developed bound and support our theory with various experiments on neural networks.
