Muli Yang

Your AI-Generated Image Detector Can Secretly Achieve SOTA Accuracy, If Calibrated

Feb 02, 2026

Abstract:Despite being trained on balanced datasets, existing AI-generated image detectors often exhibit systematic bias at test time, frequently misclassifying fake images as real. We hypothesize that this behavior stems from distributional shift in fake samples and implicit priors learned during training. Specifically, models tend to overfit to superficial artifacts that do not generalize well across different generation methods, leading to a misaligned decision threshold when faced with test-time distribution shift. To address this, we propose a theoretically grounded post-hoc calibration framework based on Bayesian decision theory. In particular, we introduce a learnable scalar correction to the model's logits, optimized on a small validation set from the target distribution while keeping the backbone frozen. This parametric adjustment compensates for distributional shift in model output, realigning the decision boundary even without requiring ground-truth labels. Experiments on challenging benchmarks show that our approach significantly improves robustness without retraining, offering a lightweight and principled solution for reliable and adaptive AI-generated image detection in the open world. Code is available at https://github.com/muliyangm/AIGI-Det-Calib.
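
As a rough illustration of the scalar logit correction described in this abstract, the sketch below shifts a frozen detector's logits by a single bias `b` fitted on unlabeled target-domain logits. The fitting objective used here (matching the mean predicted fake probability to an assumed class prior `target_prior`) is an assumption made for illustration and may differ from the objective in the paper; the function names and toy logits are hypothetical.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def mean_fake_prob(logits, b):
    # Average predicted probability of "fake" after shifting every logit by b.
    return sum(sigmoid(z + b) for z in logits) / len(logits)

def fit_logit_bias(logits, target_prior=0.5, lo=-20.0, hi=20.0, iters=60):
    # Bisection on b: mean_fake_prob is monotonically increasing in b,
    # so there is a single b whose mean prediction equals target_prior.
    # target_prior is an ASSUMED fraction of fakes in the target data.
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if mean_fake_prob(logits, mid) < target_prior:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

# Hypothetical unlabeled validation logits from a frozen detector that is
# biased toward "real" (mostly negative logits).
val_logits = [-3.0, -2.5, -2.0, -1.0, -0.5, 0.2, -1.5, -0.8]
b = fit_logit_bias(val_logits)
calibrated_logits = [z + b for z in val_logits]
```

Only the scalar `b` is fitted; the detector that produced the logits is never touched, which is what makes this kind of correction post-hoc.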

Towards Interpretable Hallucination Analysis and Mitigation in LVLMs via Contrastive Neuron Steering

Jan 31, 2026

Abstract:LVLMs achieve remarkable multimodal understanding and generation but remain susceptible to hallucinations. Existing mitigation methods predominantly focus on output-level adjustments, leaving the internal mechanisms that give rise to these hallucinations largely unexplored. To gain a deeper understanding, we adopt a representation-level perspective by introducing sparse autoencoders (SAEs) to decompose dense visual embeddings into sparse, interpretable neurons. Through neuron-level analysis, we identify distinct neuron types, including always-on neurons and image-specific neurons. Our findings reveal that hallucinations often result from disruptions or spurious activations of image-specific neurons, while always-on neurons remain largely stable. Moreover, selectively enhancing or suppressing image-specific neurons enables controllable intervention in LVLM outputs, improving visual grounding and reducing hallucinations. Building on these insights, we propose Contrastive Neuron Steering (CNS), which identifies image-specific neurons via contrastive analysis between clean and noisy inputs. CNS selectively amplifies informative neurons while suppressing perturbation-induced activations, producing more robust and semantically grounded visual representations. This not only enhances visual understanding but also effectively mitigates hallucinations. By operating at the prefilling stage, CNS is fully compatible with existing decoding-stage methods. Extensive experiments on both hallucination-focused and general multimodal benchmarks demonstrate that CNS consistently reduces hallucinations while preserving overall multimodal understanding.
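
The contrastive idea in this abstract can be pictured with a small sketch. It compares sparse activations for a clean image and a noisy copy, boosts neurons that are stronger on the clean input, and damps neurons that grow under noise. The update rule, the gains `alpha` and `beta`, and the function name are all assumptions for illustration; the abstract does not specify the actual steering formula.

```python
def contrastive_steer(clean, noisy, alpha=0.5, beta=0.5):
    # clean, noisy: sparse (non-negative) neuron activations for the same
    # image without and with added noise. Hypothetical rule, not from the paper.
    steered = []
    for c, n in zip(clean, noisy):
        diff = c - n
        if diff > 0:
            # Stronger on the clean image: treat as image-specific, amplify.
            s = c + alpha * diff
        else:
            # Stronger (or equal) under noise: treat as perturbation-induced, suppress.
            s = c + beta * diff
        # Keep activations non-negative, as a ReLU-style SAE would.
        steered.append(max(s, 0.0))
    return steered

clean_acts = [0.0, 2.0, 0.5, 1.0]
noisy_acts = [0.0, 0.5, 1.5, 1.0]
steered_acts = contrastive_steer(clean_acts, noisy_acts)
```

Neurons whose activation is the same in both passes (the first and last entries here) are left unchanged, which loosely mirrors the observation that always-on neurons remain stable.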

Towards Arbitrary Motion Completing via Hierarchical Continuous Representation

Dec 24, 2025

Abstract:Physical motions are inherently continuous, and higher camera frame rates typically contribute to improved smoothness and temporal coherence. For the first time, we explore continuous representations of human motion sequences, featuring the ability to interpolate, inbetween, and even extrapolate any input motion sequences at arbitrary frame rates. To achieve this, we propose a novel parametric activation-induced hierarchical implicit representation framework, referred to as NAME, based on Implicit Neural Representations (INRs). Our method introduces a hierarchical temporal encoding mechanism that extracts features from motion sequences at multiple temporal scales, enabling effective capture of intricate temporal patterns. Additionally, we integrate a custom parametric activation function, powered by Fourier transformations, into the MLP-based decoder to enhance the expressiveness of the continuous representation. This parametric formulation significantly augments the model's ability to represent complex motion behaviors with high accuracy. Extensive evaluations across several benchmark datasets demonstrate the effectiveness and robustness of our proposed approach.

A Turn Toward Better Alignment: Few-Shot Generative Adaptation with Equivariant Feature Rotation

Dec 24, 2025

Abstract:Few-shot image generation aims to effectively adapt a source generative model to a target domain using very few training images. Most existing approaches introduce consistency constraints, typically through instance-level or distribution-level loss functions, to directly align the distribution patterns of source and target domains within their respective latent spaces. However, these strategies often fall short: overly strict constraints can amplify the negative effects of the domain gap, leading to distorted or uninformative content, while overly relaxed constraints may fail to leverage the source domain effectively. This limitation primarily stems from the inherent discrepancy in the underlying distribution structures of the source and target domains. The scarcity of target samples further compounds this issue by hindering accurate estimation of the target domain's distribution. To overcome these limitations, we propose Equivariant Feature Rotation (EFR), a novel adaptation strategy that aligns source and target domains at two complementary levels within a self-rotated proxy feature space. Specifically, we perform adaptive rotations within a parameterized Lie Group to transform both source and target features into an equivariant proxy space, where alignment is conducted. These learnable rotation matrices serve to bridge the domain gap by preserving intra-domain structural information without distortion, while the alignment optimization facilitates effective knowledge transfer from the source to the target domain. Comprehensive experiments on a variety of commonly used datasets demonstrate that our method significantly enhances the generative performance within the targeted domain.
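
One way to see why a learnable rotation can preserve intra-domain structure is the standard parameterization of rotations through the matrix exponential. The derivation below assumes the Lie group in question is the special orthogonal group $SO(d)$ with skew-symmetric generators $G_k$ and learnable coefficients $\theta_k$; the abstract only says "parameterized Lie Group", so the specific group and the symbols are assumptions.

```latex
\[
R(\theta) = \exp\Big(\sum_{k} \theta_k G_k\Big),
\qquad G_k^{\top} = -G_k
\;\Longrightarrow\;
R(\theta)^{\top} R(\theta) = I, \quad \det R(\theta) = 1 .
\]
\[
\langle R x,\, R y \rangle = x^{\top} R^{\top} R\, y = \langle x, y \rangle ,
\qquad
\lVert R x - R y \rVert = \lVert x - y \rVert .
\]
```

Under this assumption, all pairwise inner products and distances among features of one domain are unchanged by the rotation, so only the orientation of the feature cloud is adjusted during alignment.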

Tempo as the Stable Cue: Hierarchical Mixture of Tempo and Beat Experts for Music to 3D Dance Generation

Dec 21, 2025

Abstract:Music to 3D dance generation aims to synthesize realistic and rhythmically synchronized human dance from music. While existing methods often rely on additional genre labels to further improve dance generation, such labels are typically noisy, coarse, unavailable, or insufficient to capture the diversity of real-world music, which can result in rhythm misalignment or stylistic drift. In contrast, we observe that tempo, a core property reflecting musical rhythm and pace, remains relatively consistent across datasets and genres, typically ranging from 60 to 200 BPM. Based on this finding, we propose TempoMoE, a hierarchical tempo-aware Mixture-of-Experts module that enhances the diffusion model and its rhythm perception. TempoMoE organizes motion experts into tempo-structured groups for different tempo ranges, with multi-scale beat experts capturing fine- and long-range rhythmic dynamics. A Hierarchical Rhythm-Adaptive Routing dynamically selects and fuses experts from music features, enabling flexible, rhythm-aligned generation without manual genre labels. Extensive experiments demonstrate that TempoMoE achieves state-of-the-art results in dance quality and rhythm alignment.

Revealing Perception and Generation Dynamics in LVLMs: Mitigating Hallucinations via Validated Dominance Correction

Dec 21, 2025

Abstract:Large Vision-Language Models (LVLMs) have shown remarkable capabilities, yet hallucinations remain a persistent challenge. This work presents a systematic analysis of the internal evolution of visual perception and token generation in LVLMs, revealing two key patterns. First, perception follows a three-stage GATE process: early layers perform a Global scan, intermediate layers Approach and Tighten on core content, and later layers Explore supplementary regions. Second, generation exhibits an SAD (Subdominant Accumulation to Dominant) pattern, where hallucinated tokens arise from the repeated accumulation of subdominant tokens lacking support from attention (visual perception) or feed-forward network (internal knowledge). Guided by these findings, we devise the VDC (Validated Dominance Correction) strategy, which detects unsupported tokens and replaces them with validated dominant ones to improve output reliability. Extensive experiments across multiple models and benchmarks confirm that VDC substantially mitigates hallucinations.

A Tale of Two Experts: Cooperative Learning for Source-Free Unsupervised Domain Adaptation

Sep 26, 2025

Abstract:Source-Free Unsupervised Domain Adaptation (SFUDA) addresses the realistic challenge of adapting a source-trained model to a target domain without access to the source data, driven by concerns over privacy and cost. Existing SFUDA methods either exploit only the source model's predictions or fine-tune large multimodal models, yet both neglect complementary insights and the latent structure of target data. In this paper, we propose Experts Cooperative Learning (EXCL). EXCL contains the Dual Experts framework and the Retrieval-Augmentation-Interaction optimization pipeline. The Dual Experts framework places a frozen source-domain model (augmented with a Conv-Adapter) and a pretrained vision-language model (with a trainable text prompt) on equal footing to mine consensus knowledge from unlabeled target samples. To effectively train these plug-in modules under purely unsupervised conditions, we introduce Retrieval-Augmented-Interaction (RAIN), a three-stage pipeline that (1) collaboratively retrieves pseudo-source and complex target samples, (2) separately fine-tunes each expert on its respective sample set, and (3) enforces learning object consistency via a shared learning result. Extensive experiments on four benchmark datasets demonstrate that our approach matches state-of-the-art performance.

Siamese Contrastive Embedding Network for Compositional Zero-Shot Learning

Jun 29, 2022

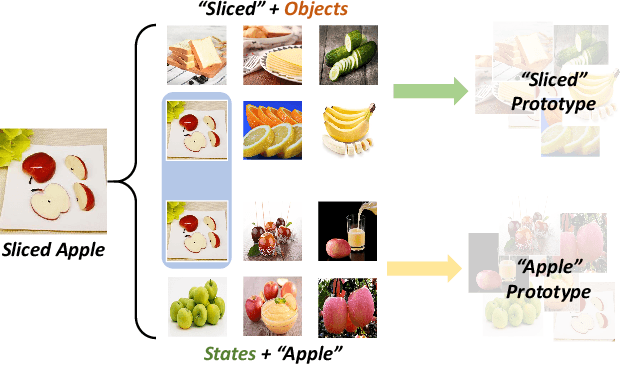

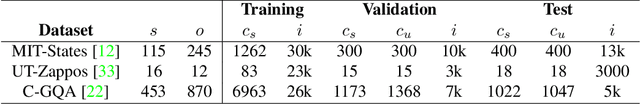

Abstract:Compositional Zero-Shot Learning (CZSL) aims to recognize unseen compositions formed from states and objects seen during training. Since the same state may vary in visual appearance when entangled with different objects, CZSL remains a challenging task. Some methods recognize state and object with two trained classifiers, ignoring the impact of the interaction between object and state; other methods try to learn a joint representation of the state-object compositions, leading to a domain gap between seen and unseen composition sets. In this paper, we propose a novel Siamese Contrastive Embedding Network (SCEN) (Code: https://github.com/XDUxyLi/SCEN-master) for unseen composition recognition. Considering the entanglement between state and object, we embed the visual feature into a Siamese Contrastive Space to capture their prototypes separately, alleviating the interaction between state and object. In addition, we design a State Transition Module (STM) to increase the diversity of training compositions, improving the robustness of the recognition model. Extensive experiments indicate that our method significantly outperforms the state-of-the-art approaches on three challenging benchmark datasets, including the recently proposed C-GQA dataset.

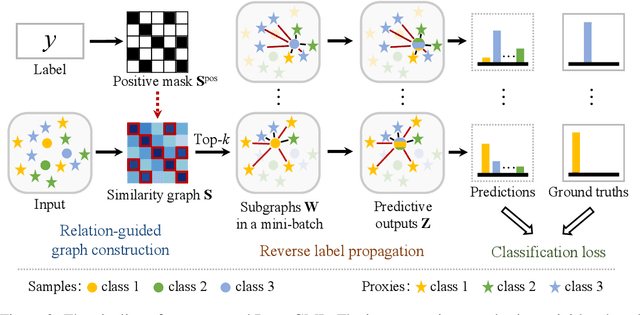

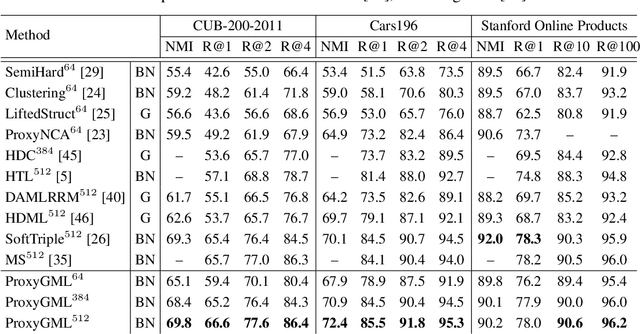

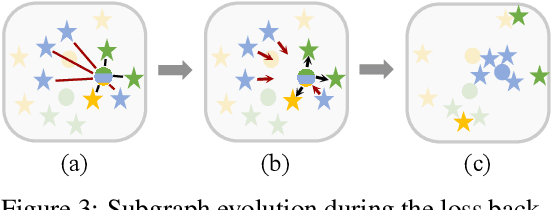

Fewer is More: A Deep Graph Metric Learning Perspective Using Fewer Proxies

Oct 26, 2020

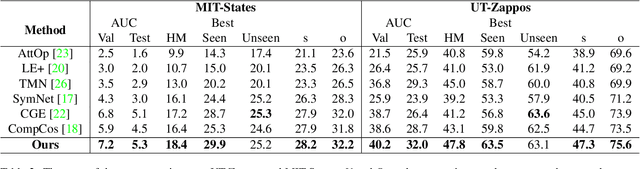

Abstract:Deep metric learning plays a key role in various machine learning tasks. Most of the previous works have been confined to sampling from a mini-batch, which cannot precisely characterize the global geometry of the embedding space. Although researchers have developed proxy- and classification-based methods to tackle the sampling issue, those methods inevitably incur a redundant computational cost. In this paper, we propose a novel Proxy-based deep Graph Metric Learning (ProxyGML) approach from the perspective of graph classification, which uses fewer proxies yet achieves better comprehensive performance. Specifically, multiple global proxies are leveraged to collectively approximate the original data points for each class. To efficiently capture local neighbor relationships, a small number of such proxies are adaptively selected to construct similarity subgraphs between these proxies and each data point. Further, we design a novel reverse label propagation algorithm, by which the neighbor relationships are adjusted according to ground-truth labels, so that a discriminative metric space can be learned during the process of subgraph classification. Extensive experiments carried out on widely-used CUB-200-2011, Cars196, and Stanford Online Products datasets demonstrate the superiority of the proposed ProxyGML over the state-of-the-art methods in terms of both effectiveness and efficiency. The source code is publicly available at https://github.com/YuehuaZhu/ProxyGML.
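
The proxy-selection step in this abstract can be sketched roughly as follows. Each class owns several proxies, a data point is compared with all of them, and only its `k` most similar proxies are kept to form a small similarity subgraph whose edges are summed per class. This is a simplified illustration under stated assumptions: cosine similarity, the value of `k`, the toy proxies and the function names are hypothetical, and the reverse label propagation step is not reproduced.

```python
import math

def cosine(u, v):
    # Cosine similarity between two non-zero vectors.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def topk_proxy_scores(x, proxies, proxy_labels, k=2):
    # Similarity of the data point to every proxy.
    sims = [cosine(x, p) for p in proxies]
    # Keep only the k most similar proxies (the sparse subgraph for this point).
    keep = sorted(range(len(sims)), key=lambda i: sims[i], reverse=True)[:k]
    # Aggregate the kept similarities per class.
    scores = {}
    for i in keep:
        label = proxy_labels[i]
        scores[label] = scores.get(label, 0.0) + sims[i]
    return scores

# Two hypothetical classes with two proxies each.
proxies = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
proxy_labels = [0, 0, 1, 1]
scores = topk_proxy_scores([0.8, 0.2], proxies, proxy_labels, k=2)
predicted = max(scores, key=scores.get)
```

Restricting each point to a few neighbors keeps the per-sample cost tied to `k` rather than to the total number of proxies, which is the intuition behind using fewer proxies.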

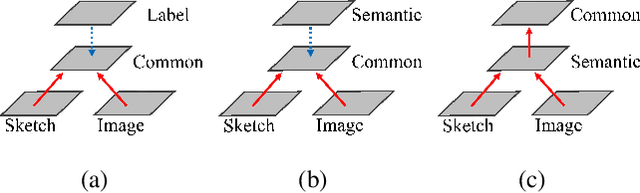

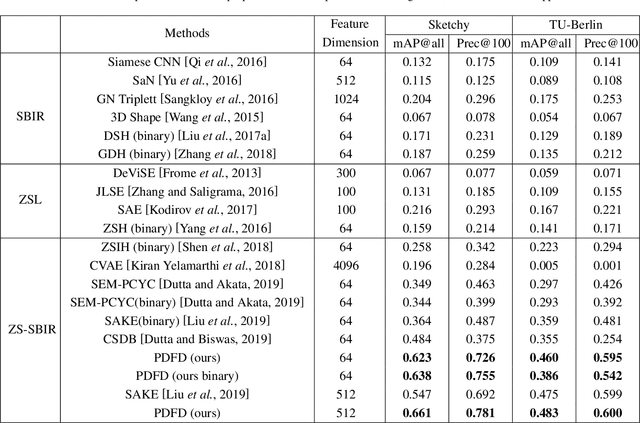

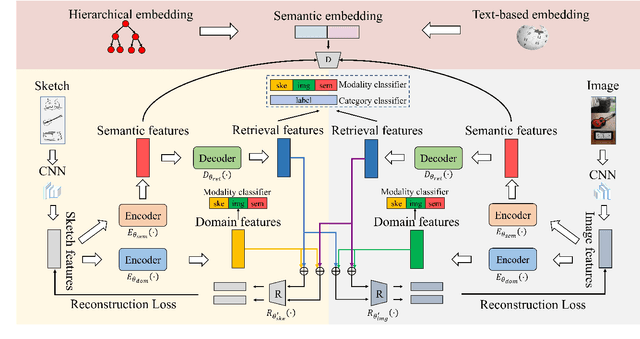

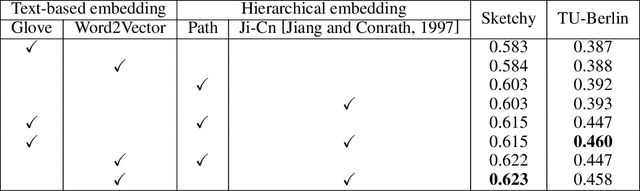

Progressive Domain-Independent Feature Decomposition Network for Zero-Shot Sketch-Based Image Retrieval

Mar 22, 2020

Abstract:Zero-shot sketch-based image retrieval (ZS-SBIR) is a specific cross-modal retrieval task for searching natural images given free-hand sketches under the zero-shot scenario. Most existing methods solve this problem by simultaneously projecting visual features and semantic supervision into a low-dimensional common space for efficient retrieval. However, such low-dimensional projection destroys the completeness of semantic knowledge in the original semantic space, so that it is unable to transfer useful knowledge well when learning semantics from different modalities. Moreover, the domain information and semantic information are entangled in visual features, which is not conducive to cross-modal matching since it hinders the reduction of the domain gap between sketch and image. In this paper, we propose a Progressive Domain-independent Feature Decomposition (PDFD) network for ZS-SBIR. Specifically, with the supervision of original semantic knowledge, PDFD decomposes visual features into domain features and semantic ones, and then the semantic features are projected into a common space as retrieval features for ZS-SBIR. The progressive projection strategy maintains strong semantic supervision. Besides, to guarantee that the retrieval features capture clean and complete semantic information, a cross-reconstruction loss is introduced to encourage any combination of retrieval features and domain features to reconstruct the visual features. Extensive experiments demonstrate the superiority of our PDFD over state-of-the-art competitors.
