Mohammad Abu Alsheikh

Privacy-Preserving Driver Drowsiness Detection with Spatial Self-Attention and Federated Learning

Aug 01, 2025

Abstract:Driver drowsiness is one of the main causes of road accidents and is recognized as a leading contributor to traffic-related fatalities. However, detecting drowsiness accurately remains a challenging task, especially in real-world settings where facial data from different individuals is decentralized and highly diverse. In this paper, we propose a novel framework for drowsiness detection that is designed to work effectively with heterogeneous and decentralized data. Our approach develops a new Spatial Self-Attention (SSA) mechanism integrated with a Long Short-Term Memory (LSTM) network to better extract key facial features and improve detection performance. To support federated learning, we employ a Gradient Similarity Comparison (GSC) step that selects the most relevant trained models from different operators before aggregation. This improves the accuracy and robustness of the global model while preserving user privacy. We also develop a customized tool that automatically processes video data by extracting frames, detecting and cropping faces, and applying data augmentation techniques such as rotation, flipping, brightness adjustment, and zooming. Experimental results show that our framework achieves a detection accuracy of 89.9% in federated learning settings, outperforming existing methods under various deployment scenarios. The results demonstrate the effectiveness of our approach in handling real-world data variability and highlight its potential for deployment in intelligent transportation systems to enhance road safety through early and reliable drowsiness detection.
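The abstract does not spell out how the Gradient Similarity Comparison scores models, so the following is only a rough sketch of one plausible reading: rank each operator's update by its cosine similarity to the mean update, keep the top few, and average those. The function names, the `keep` parameter, and the choice of the mean update as the reference are all assumptions made for illustration, not the paper's method.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def select_and_average(updates, keep):
    """Keep the `keep` updates most similar to the mean update, then average them.

    Hypothetical selection rule: the reference direction is the plain mean
    of all client updates; the paper may use a different reference.
    """
    dim = len(updates[0])
    mean = [sum(u[i] for u in updates) / len(updates) for i in range(dim)]
    ranked = sorted(range(len(updates)),
                    key=lambda i: cosine(updates[i], mean),
                    reverse=True)
    chosen = sorted(ranked[:keep])
    aggregated = [sum(updates[i][j] for i in chosen) / len(chosen)
                  for j in range(dim)]
    return chosen, aggregated


if __name__ == "__main__":
    # Three toy client updates; the third points away from the other two.
    client_updates = [[1.0, 0.0], [0.9, 0.1], [-1.0, 0.2]]
    print(select_and_average(client_updates, keep=2))
```

In this toy run the outlying third update is excluded before aggregation, which is the general effect the abstract attributes to the selection step.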

Towards Autonomous Riding: A Review of Perception, Planning, and Control in Intelligent Two-Wheelers

Jul 16, 2025

Abstract:The rapid adoption of micromobility solutions, particularly two-wheeled vehicles like e-scooters and e-bikes, has created an urgent need for reliable autonomous riding (AR) technologies. While autonomous driving (AD) systems have matured significantly, AR presents unique challenges due to the inherent instability of two-wheeled platforms, limited size, limited power, and unpredictable environments, which pose very serious concerns about road users' safety. This review provides a comprehensive analysis of AR systems by systematically examining their core components, perception, planning, and control, through the lens of AD technologies. We identify critical gaps in current AR research, including a lack of comprehensive perception systems for various AR tasks, limited industry and government support for such developments, and insufficient attention from the research community. The review analyses the gaps of AR from the perspective of AD to highlight promising research directions, such as multimodal sensor techniques for lightweight platforms and edge deep learning architectures. By synthesising insights from AD research with the specific requirements of AR, this review aims to accelerate the development of safe, efficient, and scalable autonomous riding systems for future urban mobility.

End-to-End Human Pose Reconstruction from Wearable Sensors for 6G Extended Reality Systems

Mar 06, 2025

Abstract:Full 3D human pose reconstruction is a critical enabler for extended reality (XR) applications in future sixth generation (6G) networks, supporting immersive interactions in gaming, virtual meetings, and remote collaboration. However, achieving accurate pose reconstruction over wireless networks remains challenging due to channel impairments, bit errors, and quantization effects. Existing approaches often assume error-free transmission in indoor settings, limiting their applicability to real-world scenarios. To address these challenges, we propose a novel deep learning-based framework for human pose reconstruction over orthogonal frequency-division multiplexing (OFDM) systems. The framework introduces a two-stage deep learning receiver: the first stage jointly estimates the wireless channel and decodes OFDM symbols, and the second stage maps the received sensor signals to full 3D body poses. Simulation results demonstrate that the proposed neural receiver reduces the bit error rate (BER), achieving a 5 dB gain at a BER of $10^{-4}$ compared to the baseline method that employs separate signal detection steps, i.e., least squares channel estimation and linear minimum mean square error equalization. Additionally, our empirical findings show that 8-bit quantization is sufficient for accurate pose reconstruction, achieving a mean squared error of $5\times10^{-4}$ for reconstructed sensor signals and reducing joint angular error by 37% for the reconstructed human poses compared to the baseline.
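For orientation, the baseline named in the abstract (least squares channel estimation followed by linear minimum mean square error equalization) can be sketched per subcarrier as below. This is a textbook single-tap version, not the paper's simulation setup: it assumes unit-power transmitted symbols, a channel that stays constant between the pilot symbol and the data symbol, and a known noise variance. The function names are illustrative.

```python
def ls_channel_estimate(rx_pilots, tx_pilots):
    """Least squares estimate per subcarrier: H_k = Y_k / X_k on known pilots."""
    return [y / x for y, x in zip(rx_pilots, tx_pilots)]


def lmmse_equalize(rx_symbols, channel, noise_var):
    """Single-tap LMMSE equalizer, assuming unit-power transmitted symbols:
    x_hat_k = conj(H_k) * Y_k / (|H_k|^2 + noise_var)."""
    return [h.conjugate() * y / (abs(h) ** 2 + noise_var)
            for y, h in zip(rx_symbols, channel)]


if __name__ == "__main__":
    # Toy two-subcarrier example without noise (values are made up).
    true_channel = [1 + 1j, 0.5 - 0.2j]
    pilots = [1 + 0j, -1 + 0j]
    data = [1j, -1j]

    rx_pilots = [h * p for h, p in zip(true_channel, pilots)]
    rx_data = [h * d for h, d in zip(true_channel, data)]

    h_hat = ls_channel_estimate(rx_pilots, pilots)
    print(lmmse_equalize(rx_data, h_hat, noise_var=0.0))
```

With `noise_var` set to zero the equalizer reduces to plain channel inversion; a positive value trades a small bias for less noise amplification on weak subcarriers.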

Balancing Security and Accuracy: A Novel Federated Learning Approach for Cyberattack Detection in Blockchain Networks

Sep 08, 2024

Abstract:This paper presents a novel Collaborative Cyberattack Detection (CCD) system aimed at enhancing the security of blockchain-based data-sharing networks by addressing the complex challenges associated with noise addition in federated learning models. Leveraging the theoretical principles of differential privacy, our approach strategically integrates noise into trained sub-models before reconstructing the global model through transmission. We systematically explore the effects of various noise types, i.e., Gaussian, Laplace, and Moment Accountant, on key performance metrics, including attack detection accuracy, deep learning model convergence time, and the overall runtime of global model generation. Our findings reveal the intricate trade-offs between ensuring data privacy and maintaining system performance, offering valuable insights into optimizing these parameters for diverse CCD environments. Through extensive simulations, we provide actionable recommendations for achieving an optimal balance between data protection and system efficiency, contributing to the advancement of secure and reliable blockchain networks.
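As a minimal illustration of the noise-addition step, the sketch below perturbs a flat list of sub-model weights with Gaussian or Laplace noise before they would be shared. The `sigma` and `scale` values are placeholders: they are not calibrated to any privacy budget, and the abstract does not give the paper's settings. Weight clipping and privacy accounting are deliberately left out.

```python
import random


def add_gaussian_noise(weights, sigma, rng):
    """Return a copy of `weights` with zero-mean Gaussian noise (std `sigma`) added."""
    return [w + rng.gauss(0.0, sigma) for w in weights]


def add_laplace_noise(weights, scale, rng):
    """Return a copy of `weights` with zero-mean Laplace noise added.

    The difference of two independent exponential draws with rate 1/scale
    follows a Laplace distribution with the given scale.
    """
    rate = 1.0 / scale
    return [w + rng.expovariate(rate) - rng.expovariate(rate) for w in weights]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the demo is repeatable
    sub_model = [0.25, -0.10, 0.70]  # made-up weights
    print(add_gaussian_noise(sub_model, sigma=0.05, rng=rng))
    print(add_laplace_noise(sub_model, scale=0.05, rng=rng))
```

Larger noise scales give stronger protection but degrade the aggregated model more, which is the trade-off the abstract describes.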

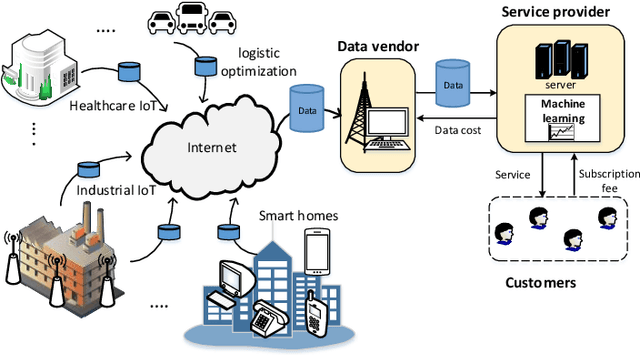

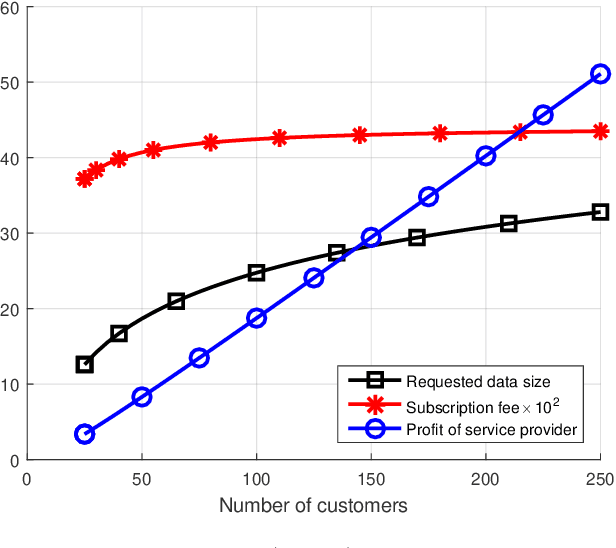

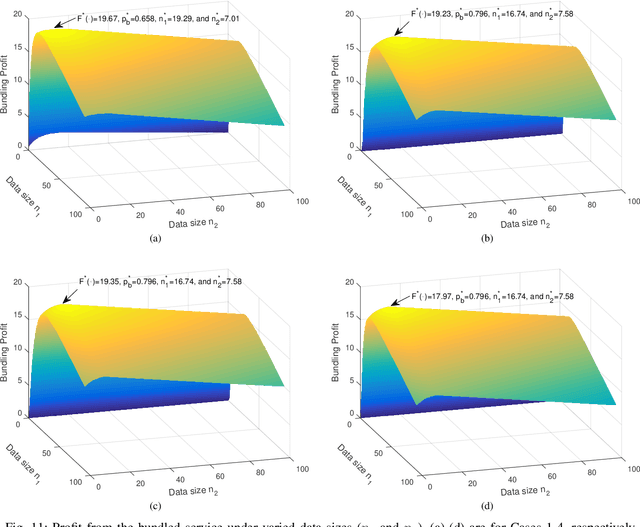

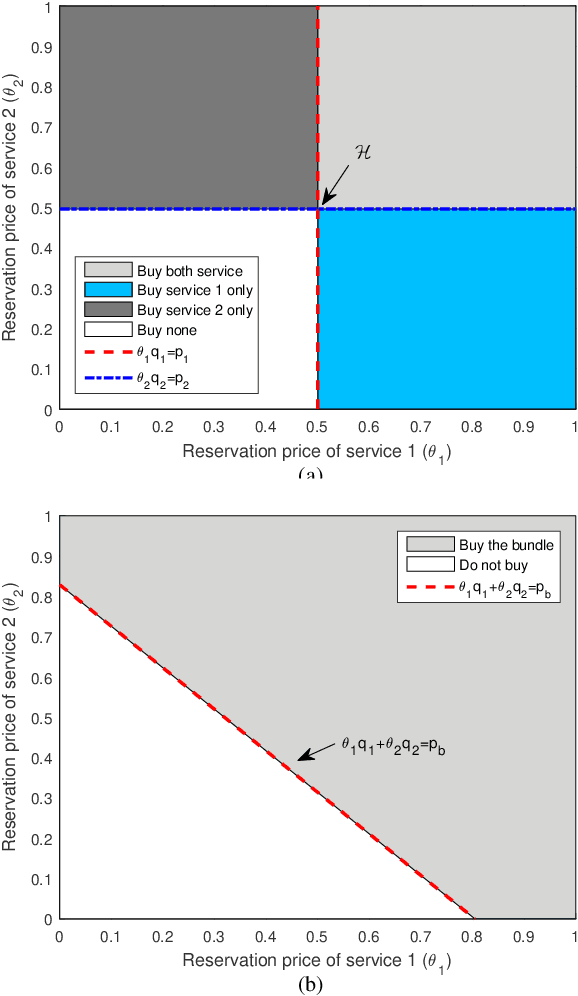

Optimal Pricing of Internet of Things: A Machine Learning Approach

Feb 14, 2020

Abstract:Internet of things (IoT) produces massive data from devices embedded with sensors. The IoT data allows creating profitable services using machine learning. However, previous research does not address the problem of optimal pricing and bundling of machine learning-based IoT services. In this paper, we define the data value and service quality from a machine learning perspective. We present an IoT market model which consists of data vendors selling data to service providers, and service providers offering IoT services to customers. Then, we introduce optimal pricing schemes for the standalone and bundled selling of IoT services. In standalone service sales, the service provider optimizes the size of bought data and service subscription fee to maximize its profit. For service bundles, the subscription fee and data sizes of the grouped IoT services are optimized to maximize the total profit of cooperative service providers. We show that bundling IoT services maximizes the profit of service providers compared to the standalone selling. For profit sharing of bundled services, we apply the concepts of core and Shapley solutions from cooperative game theory as efficient and fair allocations of payoffs among the cooperative service providers in the bundling coalition.

* 17 pages
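The Shapley allocation mentioned in the abstract can be illustrated with a small sketch that averages each provider's marginal contribution over all join orders. The two providers and their coalition profits below are invented for the example and do not come from the paper.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values by enumerating every ordering of the players.

    `value` maps a frozenset of players to that coalition's payoff.
    Enumeration is factorial in the number of players, so this only
    suits small coalitions.
    """
    totals = {p: 0.0 for p in players}
    orders = list(permutations(players))
    for order in orders:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: total / len(orders) for p, total in totals.items()}


if __name__ == "__main__":
    # Hypothetical profits: bundling earns more than the two services sold alone.
    profit = {
        frozenset(): 0.0,
        frozenset({"A"}): 10.0,
        frozenset({"B"}): 20.0,
        frozenset({"A", "B"}): 40.0,
    }
    print(shapley_values(["A", "B"], profit.__getitem__))
```

The shares always sum to the profit of the full bundle, which is the efficiency property the abstract refers to.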

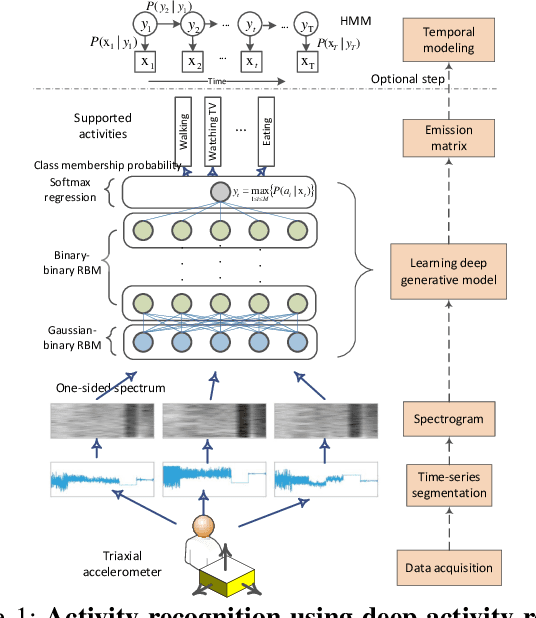

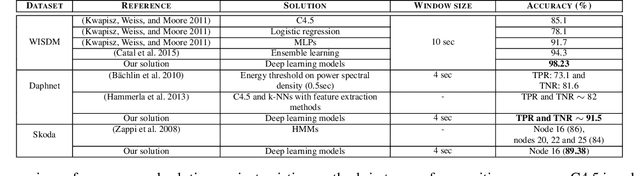

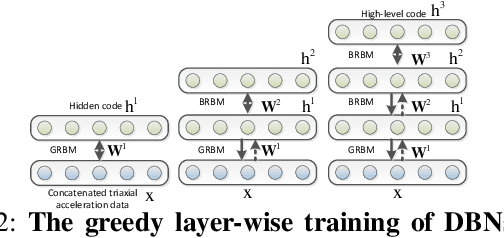

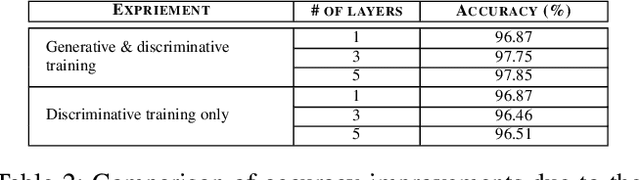

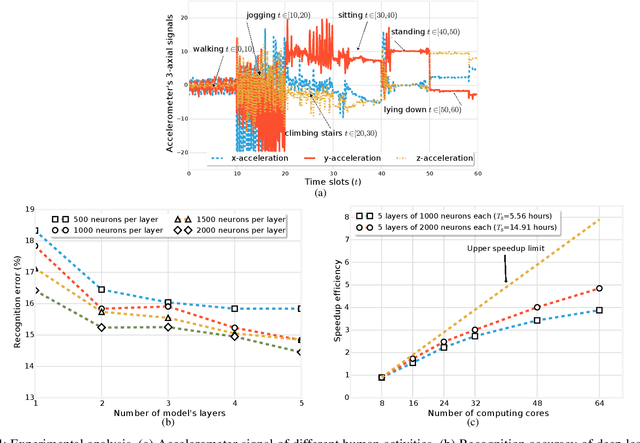

Deep Activity Recognition Models with Triaxial Accelerometers

Oct 25, 2016

Abstract:Despite the widespread installation of accelerometers in almost all mobile phones and wearable devices, activity recognition using accelerometers is still immature due to the poor recognition accuracy of existing recognition methods and the scarcity of labeled training data. We consider the problem of human activity recognition using triaxial accelerometers and deep learning paradigms. This paper shows that deep activity recognition models (a) provide better recognition accuracy of human activities, (b) avoid the expensive design of handcrafted features in existing systems, and (c) utilize the massive unlabeled acceleration samples for unsupervised feature extraction. Moreover, a hybrid approach of deep learning and hidden Markov models (DL-HMM) is presented for sequential activity recognition. This hybrid approach integrates the hierarchical representations of deep activity recognition models with the stochastic modeling of temporal sequences in the hidden Markov models. We show substantial recognition improvement on real world datasets over state-of-the-art methods of human activity recognition using triaxial accelerometers.

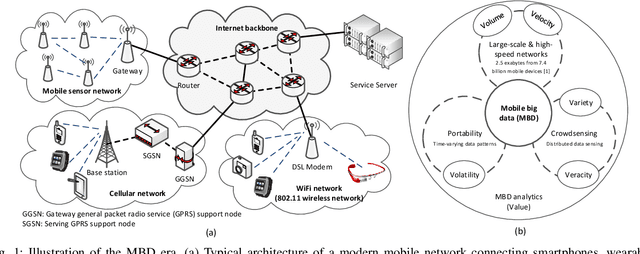

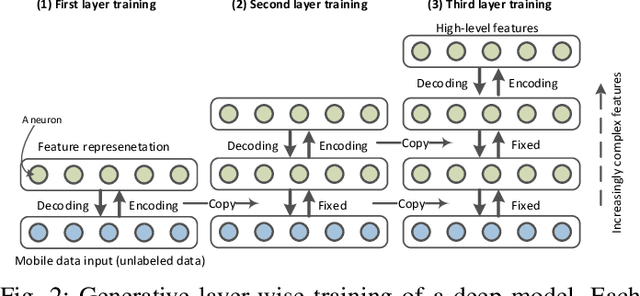

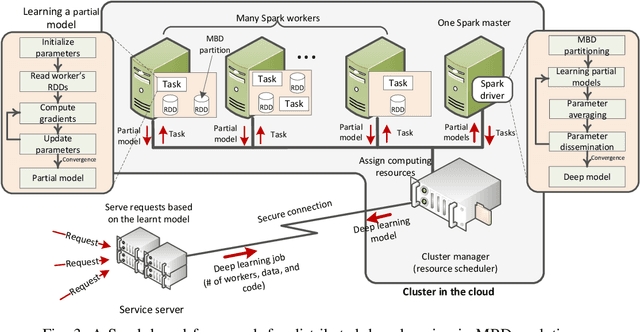

Mobile Big Data Analytics Using Deep Learning and Apache Spark

Feb 23, 2016

Abstract:The proliferation of mobile devices, such as smartphones and Internet of Things (IoT) gadgets, has ushered in the mobile big data (MBD) era. Collecting MBD is unprofitable unless suitable analytics and learning methods are utilized for extracting meaningful information and hidden patterns from data. This article presents an overview and brief tutorial of deep learning in MBD analytics and discusses a scalable learning framework over Apache Spark. Specifically, distributed deep learning is executed as an iterative MapReduce computation on many Spark workers. Each Spark worker learns a partial deep model on a partition of the overall MBD, and a master deep model is then built by averaging the parameters of all partial models. This Spark-based framework speeds up the learning of deep models consisting of many hidden layers and millions of parameters. We use a context-aware activity recognition application with a real-world dataset containing millions of samples to validate our framework and assess its speedup effectiveness.
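The map-then-average loop described in the abstract can be mimicked without Spark. In this toy sketch each "worker" takes one gradient step on its own partition (the map step) and the master averages the resulting parameters (the reduce step). The one-parameter linear model, the data, and the learning rate are stand-ins chosen for brevity; the article itself trains deep models on Spark workers.

```python
def local_step(w, partition, lr):
    """One least-squares gradient step for y ~ w * x on a single partition."""
    grad = sum(2.0 * (w * x - y) * x for x, y in partition) / len(partition)
    return w - lr * grad


def train(partitions, rounds, lr):
    """Iterate map (local updates) and reduce (parameter averaging)."""
    master = 0.0
    for _ in range(rounds):
        partial_models = [local_step(master, part, lr) for part in partitions]
        master = sum(partial_models) / len(partial_models)
    return master


if __name__ == "__main__":
    # Two partitions of samples drawn from y = 2x (made-up data).
    partitions = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0), (4.0, 8.0)]]
    print(train(partitions, rounds=20, lr=0.05))
```

In a real deployment the list comprehension would be replaced by a distributed map over data partitions, with the averaged parameters broadcast back to the workers each round.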

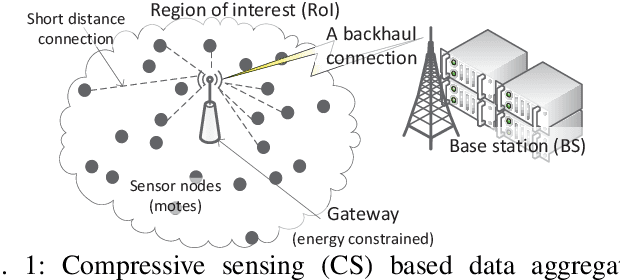

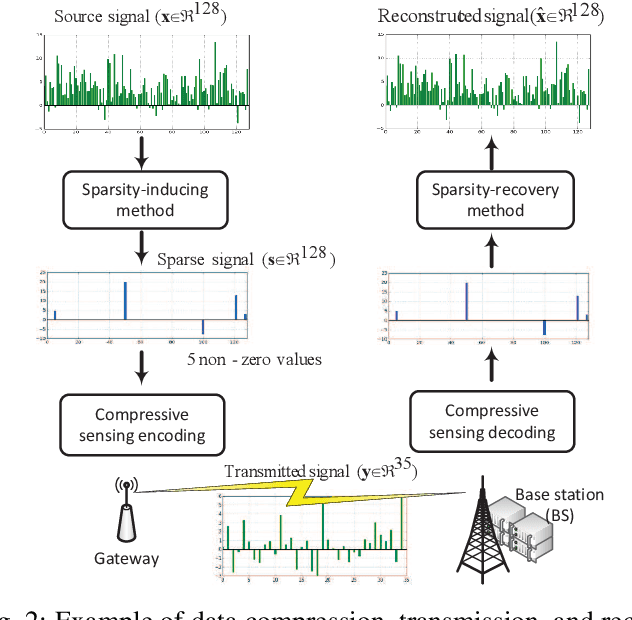

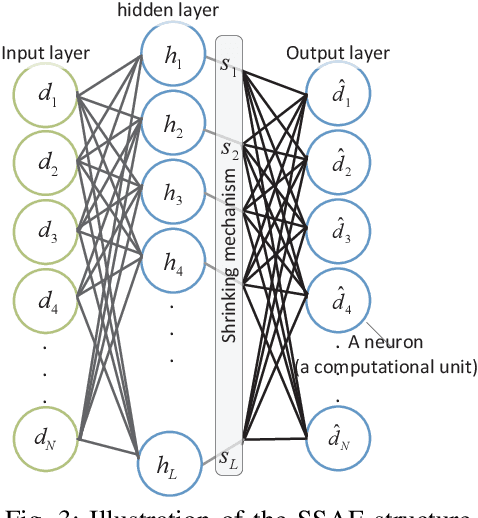

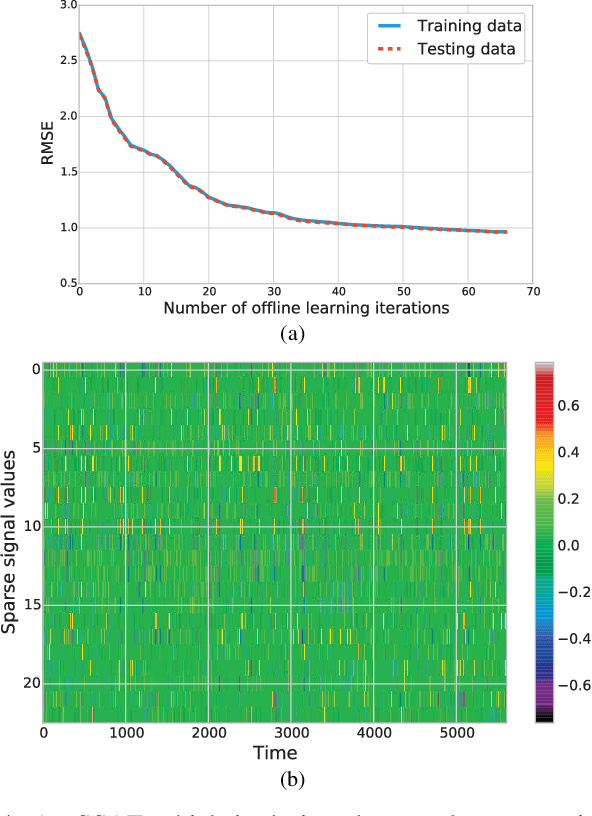

Toward a Robust Sparse Data Representation for Wireless Sensor Networks

Aug 02, 2015

Abstract:Compressive sensing has been successfully used for optimized operations in wireless sensor networks. However, raw data collected by sensors may be neither originally sparse nor easily transformed into a sparse data representation. This paper addresses the problem of transforming source data collected by sensor nodes into a sparse representation with a few nonzero elements. Our contributions that address three major issues include: 1) an effective method that extracts population sparsity of the data, 2) a sparsity ratio guarantee scheme, and 3) a customized learning algorithm of the sparsifying dictionary. We introduce an unsupervised neural network to extract an intrinsic sparse coding of the data. The sparse codes are generated at the activation of the hidden layer using a sparsity nomination constraint and a shrinking mechanism. Our analysis using real data samples shows that the proposed method outperforms conventional sparsity-inducing methods.

* 8 pages

Machine Learning in Wireless Sensor Networks: Algorithms, Strategies, and Applications

Mar 19, 2015

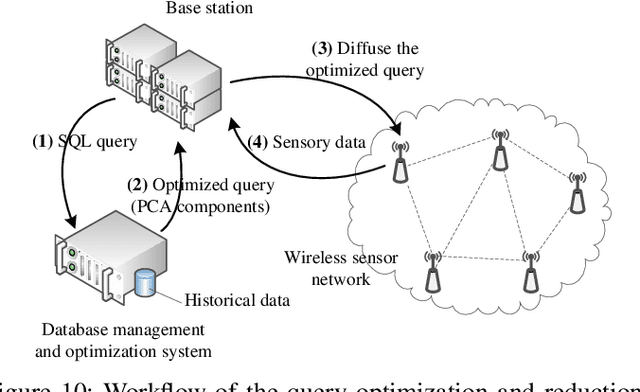

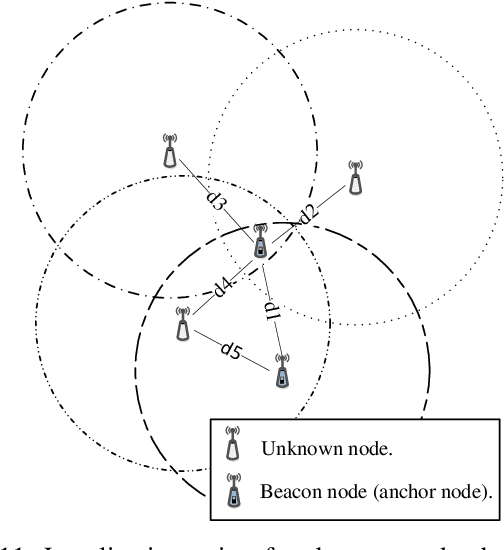

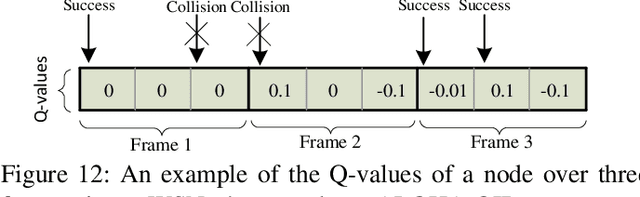

Abstract:Wireless sensor networks monitor dynamic environments that change rapidly over time. This dynamic behavior is either caused by external factors or initiated by the system designers themselves. To adapt to such conditions, sensor networks often adopt machine learning techniques to eliminate the need for unnecessary redesign. Machine learning also inspires many practical solutions that maximize resource utilization and prolong the lifespan of the network. In this paper, we present an extensive literature review over the period 2002-2013 of machine learning methods that were used to address common issues in wireless sensor networks (WSNs). The advantages and disadvantages of each proposed algorithm are evaluated against the corresponding problem. We also provide a comparative guide to aid WSN designers in developing suitable machine learning solutions for their specific application challenges.

* Accepted for publication in IEEE Communications Surveys and Tutorials
