Michael C. Yip

Markerless Camera-to-Robot Pose Estimation via Self-supervised Sim-to-Real Transfer

Mar 21, 2023

Abstract: Solving the camera-to-robot pose is a fundamental requirement for vision-based robot control, and is a process that takes considerable effort and care to make accurate. Traditional approaches require modification of the robot via markers, and subsequent deep learning approaches enabled markerless feature extraction. Mainstream deep learning methods only use synthetic data and rely on Domain Randomization to fill the sim-to-real gap, because acquiring the 3D annotation is labor-intensive. In this work, we go beyond the limitation of 3D annotations for real-world data. We propose an end-to-end pose estimation framework that is capable of online camera-to-robot calibration and a self-supervised training method to scale the training to unlabeled real-world data. Our framework combines deep learning and geometric vision for solving the robot pose, and the pipeline is fully differentiable. To train the Camera-to-Robot Pose Estimation Network (CtRNet), we leverage foreground segmentation and differentiable rendering for image-level self-supervision. The pose prediction is visualized through a renderer and the image loss with the input image is back-propagated to train the neural network. Our experimental results on two public real datasets confirm the effectiveness of our approach over existing works. We also integrate our framework into a visual servoing system to demonstrate the promise of real-time precise robot pose estimation for automation tasks.

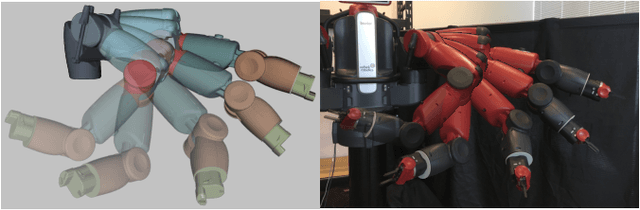

Image-based Pose Estimation and Shape Reconstruction for Robot Manipulators and Soft, Continuum Robots via Differentiable Rendering

Feb 27, 2023

Abstract: State estimation from measured data is crucial for robotic applications as autonomous systems rely on sensors to capture the motion and localize in the 3D world. Among sensors that are designed for measuring a robot's pose, or for soft robots, their shape, vision sensors are favorable because they are information-rich, easy to set up, and cost-effective. With recent advancements in computer vision, deep learning-based methods no longer require markers for identifying feature points on the robot. However, learning-based methods are data-hungry and hence not suitable for soft and prototyping robots, as building such benchmarking datasets is usually infeasible. In this work, we achieve image-based robot pose estimation and shape reconstruction from camera images. Our method requires no precise robot meshes, but rather utilizes a differentiable renderer and primitive shapes. It hence can be applied to robots for which CAD models might not be available or are crude. Our parameter estimation pipeline is fully differentiable. The robot shape and pose are estimated iteratively by back-propagating the image loss to update the parameters. We demonstrate that our method of using geometrical shape primitives can achieve high accuracy in shape reconstruction for a soft continuum robot and pose estimation for a robot manipulator.
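
The iterative estimation loop described in this abstract can be illustrated with a toy example. The sketch below is not the authors' pipeline: it assumes a single 2D disk as the shape primitive, a sigmoid-based soft rasterizer, a mean squared image loss, hand-derived gradients in place of automatic differentiation, and a simple step-halving rule. All function names and parameter values are hypothetical.

```python
import math

def render_disk(cx, cy, r, size=24, softness=1.5):
    """Soft-rasterize a disk: pixel value is sigmoid((r - distance) / softness)."""
    return [[1.0 / (1.0 + math.exp(-(r - math.hypot(x - cx, y - cy)) / softness))
             for x in range(size)] for y in range(size)]

def loss_and_grad(params, target, softness=1.5):
    """Mean squared image loss and its gradient w.r.t. (cx, cy, r)."""
    cx, cy, r = params
    size = len(target)
    n = size * size
    loss, grad = 0.0, [0.0, 0.0, 0.0]
    for y in range(size):
        for x in range(size):
            d = math.hypot(x - cx, y - cy)
            p = 1.0 / (1.0 + math.exp(-(r - d) / softness))
            err = p - target[y][x]
            loss += err * err / n
            # Chain rule through the sigmoid, with u = (r - d) / softness.
            scale = 2.0 * err * p * (1.0 - p) / (softness * n)
            if d > 1e-9:
                grad[0] += scale * (x - cx) / d  # -dd/dcx = (x - cx) / d
                grad[1] += scale * (y - cy) / d
            grad[2] += scale                      # du/dr = 1 / softness
    return loss, grad

def fit(target, init, steps=150, lr=50.0):
    """Gradient descent on the image loss; halve the step when it fails to improve."""
    params = list(init)
    loss, grad = loss_and_grad(params, target)
    history = [loss]
    for _ in range(steps):
        trial = [p - lr * g for p, g in zip(params, grad)]
        trial_loss, trial_grad = loss_and_grad(trial, target)
        if trial_loss < loss:
            params, loss, grad = trial, trial_loss, trial_grad
        else:
            lr *= 0.5
        history.append(loss)
    return params, history

if __name__ == "__main__":
    target = render_disk(13.0, 11.0, 5.0)          # stand-in for an observed mask
    estimate, history = fit(target, init=(9.0, 13.0, 3.0))
    print("estimated (cx, cy, r):", [round(v, 2) for v in estimate])
    print("loss: %.5f -> %.5f" % (history[0], history[-1]))
```

The soft edge is what makes the loss informative: with a hard binary mask, small changes in the parameters would leave most pixels unchanged and the gradient would vanish almost everywhere.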

Design and Mechanics of Cable-Driven Rolling Diaphragm Transmission for High-Transparency Robotic Motion

Feb 24, 2023

Abstract: Applications of rolling diaphragm transmissions for medical and teleoperated robotics are of great interest, due to the low friction of rolling diaphragms combined with the power density and stiffness of hydraulic transmissions. However, the stiffness-enabling pressure preloads can form a tradeoff against bearing loading in some rolling diaphragm layouts, and transmission setup can be difficult. Cable drives complement the rolling diaphragm transmission's advantages, but maintaining cable tension is crucial for optimal and consistent performance. In this paper, a coaxial opposed rolling diaphragm layout with cable drive and an electronic transmission control system are investigated, with a focus on system reliability and scalability. Mechanical features are proposed which enable force balancing, decoupling of transmission pressure from bearing loads, and maintenance of cable tension. Key considerations and procedures for automation of transmission setup, phasing, and operation are also presented. We also present an analysis of system stiffness to identify key compliance contributors, and conduct experiments to validate prototype design performance.

Mobility Analysis of Screw-Based Locomotion and Propulsion in Various Media

Jan 26, 2023

Abstract: Robots "in-the-wild" encounter and must traverse widely varying terrain, ranging from solid ground to granular materials like sand to full liquids. Numerous approaches exist, including wheeled and legged robots, each excelling in specific domains. Screw-based locomotion is a promising approach for multi-domain mobility, leveraged in exploratory robotic designs, including amphibious vehicles and snake robotics. However, unlike other forms of locomotion, there has been limited exploration of the models, parameter effects, and efficiency for multi-terrain Archimedes screw locomotion. In this work, we present work towards this missing component in understanding screw-based locomotion: comprehensive experimental results and performance analysis across different media. We designed a mobile test bed for indoor and outdoor experimentation to collect this data. Beyond quantitatively showing the multi-domain mobility of screw-based locomotion, we envision future researchers and engineers using the presented results to design effective screw-based locomotion systems.

Semantic-SuPer: A Semantic-aware Surgical Perception Framework for Endoscopic Tissue Classification, Reconstruction, and Tracking

Oct 29, 2022

Abstract: Accurate and robust tracking and reconstruction of the surgical scene is a critical enabling technology toward autonomous robotic surgery. Existing algorithms for 3D perception in surgery mainly rely on geometric information, while we propose to also leverage semantic information inferred from the endoscopic video using image segmentation algorithms. In this paper, we present a novel, comprehensive surgical perception framework, Semantic-SuPer, that integrates geometric and semantic information to facilitate data association, 3D reconstruction, and tracking of endoscopic scenes, benefiting downstream tasks like surgical navigation. The proposed framework is demonstrated on challenging endoscopic data with deforming tissue, showing its advantages over our baseline and several other state-of-the-art approaches. Our code and dataset will be available at https://github.com/ucsdarclab/Python-SuPer.

Knowledge-Grounded Reinforcement Learning

Oct 07, 2022

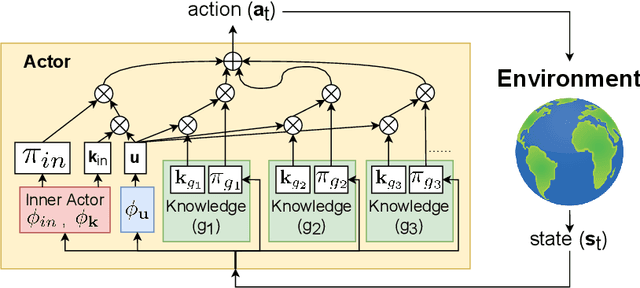

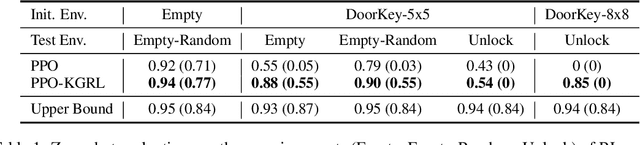

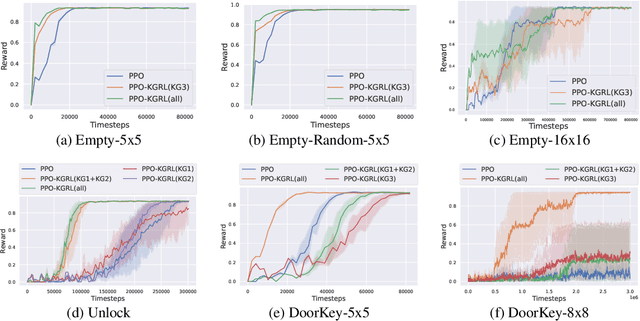

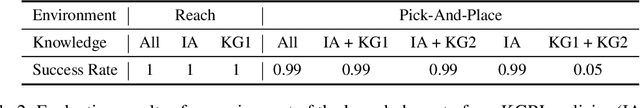

Abstract: Receiving knowledge, abiding by laws, and being aware of regulations are common behaviors in human society. Bearing in mind that reinforcement learning (RL) algorithms benefit from mimicking humanity, in this work, we propose that an RL agent can act on external guidance in both its learning process and model deployment, making the agent more socially acceptable. We introduce the concept, Knowledge-Grounded RL (KGRL), with a formal definition that an agent learns to follow external guidelines and develop its own policy. Moving towards the goal of KGRL, we propose a novel actor model with an embedding-based attention mechanism that can attend to either a learnable internal policy or external knowledge. The proposed method is orthogonal to training algorithms, and the external knowledge can be flexibly recomposed, rearranged, and reused in both training and inference stages. Through experiments on tasks with discrete and continuous action spaces, our KGRL agent is shown to be more sample efficient and generalizable, and it has flexibly rearrangeable knowledge embeddings and interpretable behaviors.
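
As a rough illustration of an embedding-based attention over an internal policy and external knowledge, the sketch below scores a state-dependent query against one key per source and mixes the sources' action distributions with the resulting softmax weights. The abstract does not specify the architecture, so the dot-product scoring, the mixing rule, and every name and number here are assumptions made for illustration only.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend_and_mix(query, keys, action_dists):
    """Weight each source (internal policy or a knowledge rule) by query-key similarity.

    query        : state-dependent embedding (hypothetical)
    keys         : one embedding per source, same length as query
    action_dists : one discrete action distribution per source
    """
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    n_actions = len(action_dists[0])
    mixed = [sum(w * dist[a] for w, dist in zip(weights, action_dists))
             for a in range(n_actions)]
    return weights, mixed

if __name__ == "__main__":
    query = [1.0, 0.0]
    keys = [[1.0, 0.0],   # internal policy embedding (made-up values)
            [0.0, 1.0]]   # external knowledge embedding (made-up values)
    dists = [[0.5, 0.5],  # internal policy: undecided between two actions
             [1.0, 0.0]]  # external rule: always take action 0
    weights, mixed = attend_and_mix(query, keys, dists)
    print("attention weights:", weights)
    print("mixed action distribution:", mixed)
```

Because each knowledge source is addressed only through its key, sources can be added, removed, or reordered by editing the `keys` and `action_dists` lists, and the attention weights show which source drove a given action.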

Differentiable Robotic Manipulation of Deformable Rope-like Objects Using Compliant Position-based Dynamics

Feb 20, 2022

Abstract: Robot manipulation of rope-like objects is an interesting problem that has some critical applications, such as autonomous robotic suturing. Solving for and controlling rope is difficult due to the complexity of rope physics and the challenge of building fast and accurate models of deformable materials. While data-driven approaches have become more popular for finding controllers that learn to do a single task, there is still a strong motivation for a model-based method that could be used to solve a large variety of optimization problems. Towards this end, we introduced compliant, position-based dynamics (XPBD) to model rope-like objects. Using geometric constraints, the model can represent the coupling of shear/stretch and bend/twist effects. Of crucial importance is that our formulation is differentiable, which can solve parameter estimation problems and improve the matching of rope physics to real-life scenarios (i.e., the real-to-sim problem). For the generality of rope-like objects, two different solvers are proposed to handle the inextensible and extensible effects of varied material stiffness for the rope. We demonstrate our framework's robustness and accuracy on real-to-sim experimental setups using the Baxter robot and the da Vinci research kit (DVRK). Our work leads to a new path for robotic manipulation of deformable rope-like objects, taking advantage of the ready-to-use gradients.
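
For readers unfamiliar with compliant position-based dynamics, the sketch below shows one XPBD projection of a simple distance constraint between two particles, using the standard update in which the compliance is scaled by the squared time step. It is a simplified stand-in, not the paper's shear/stretch and bend/twist constraints or its solvers, and the function name and argument layout are hypothetical.

```python
import math

def project_distance_xpbd(p0, p1, w0, w1, rest_length, compliance, dt, lam=0.0):
    """One XPBD projection of the constraint C = |p1 - p0| - rest_length.

    w0, w1     : inverse masses (0 pins a particle)
    compliance : inverse stiffness; 0 gives a rigid (inextensible) constraint
    lam        : Lagrange multiplier accumulated over solver iterations
    """
    delta = [b - a for a, b in zip(p0, p1)]
    length = math.sqrt(sum(c * c for c in delta))
    if length < 1e-12 or (w0 + w1) == 0.0:
        return list(p0), list(p1), lam            # degenerate: nothing to correct
    n = [c / length for c in delta]               # gradient of C w.r.t. p1
    c_val = length - rest_length
    alpha_tilde = compliance / (dt * dt)          # time-step-scaled compliance
    d_lam = (-c_val - alpha_tilde * lam) / (w0 + w1 + alpha_tilde)
    new_p0 = [a - w0 * d_lam * ni for a, ni in zip(p0, n)]  # gradient w.r.t. p0 is -n
    new_p1 = [b + w1 * d_lam * ni for b, ni in zip(p1, n)]
    return new_p0, new_p1, lam + d_lam

if __name__ == "__main__":
    # Pinned particle at the origin, free particle stretched to twice the rest length.
    rigid = project_distance_xpbd([0.0, 0.0], [2.0, 0.0], 0.0, 1.0, 1.0, 0.0, 0.01)
    soft = project_distance_xpbd([0.0, 0.0], [2.0, 0.0], 0.0, 1.0, 1.0, 0.01, 0.1)
    print("rigid:", rigid[1])
    print("compliant:", soft[1])
```

With zero compliance the free particle is pulled all the way back to the rest length in one projection, while a positive compliance leaves part of the stretch in place. Every operation here is smooth in the positions and the compliance away from zero length, which is the property a differentiable formulation relies on for parameter estimation.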

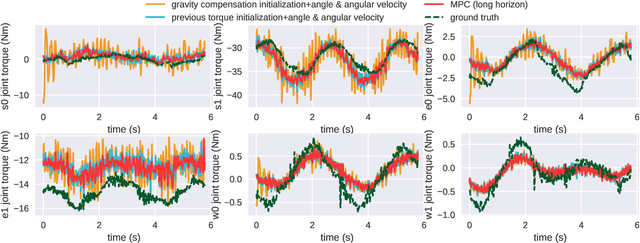

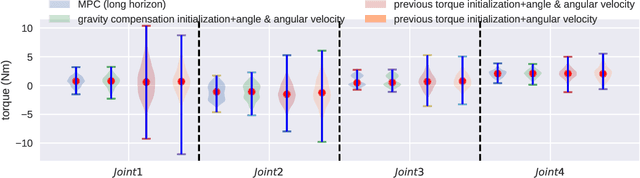

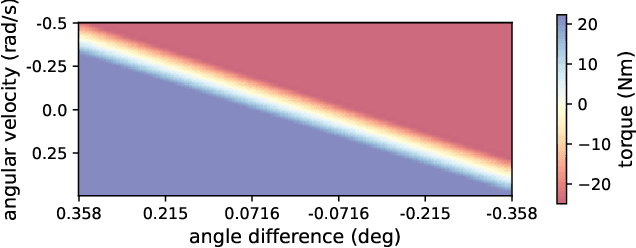

Parameter Identification and Motion Control for Articulated Rigid Body Robots Using Differentiable Position-based Dynamics

Jan 15, 2022

Abstract: Simulation modeling of robots, objects, and environments is the backbone for all model-based control and learning. It is leveraged broadly across dynamic programming and model-predictive control, as well as data generation for imitation, transfer, and reinforcement learning. In addition to fidelity, key features of models in these control and learning contexts are speed, stability, and native differentiability. However, many popular simulation platforms for robotics today lack at least one of the features above. More recently, position-based dynamics (PBD) has become a very popular simulation tool for modeling complex scenes of rigid and non-rigid object interactions, due to its speed and stability, and is starting to gain significant interest in robotics for its potential use in model-based control and learning. Thus, in this paper, we present a mathematical formulation for coupling position-based dynamics (PBD) simulation and optimal robot design, model-based motion control and system identification. Our framework breaks down PBD definitions and derivations for various types of joint-based articulated rigid bodies. We present a back-propagation method with automatic differentiation, which can integrate both positional and angular geometric constraints. Our framework can critically provide the native gradient information and perform gradient-based optimization tasks. We also propose articulated joint model representations and simulation workflow for our differentiable framework. We demonstrate the capability of the framework in efficient optimal robot design, accurate trajectory torque estimation and supporting spring stiffness estimation, where we achieve minor errors. We also implement impedance control on real robots to demonstrate the potential of our differentiable framework in human-in-the-loop applications.

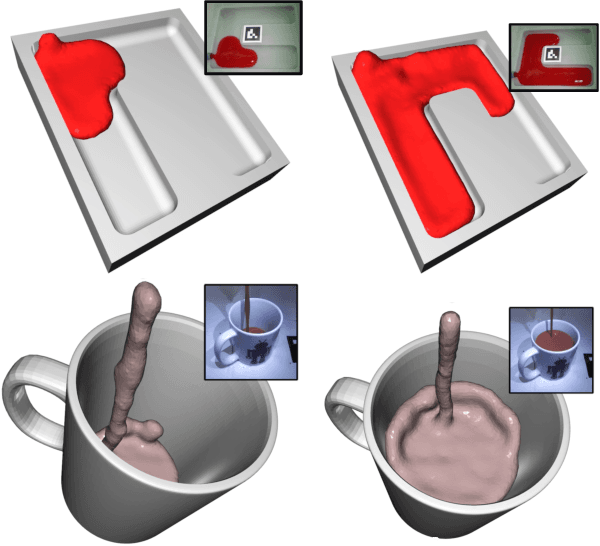

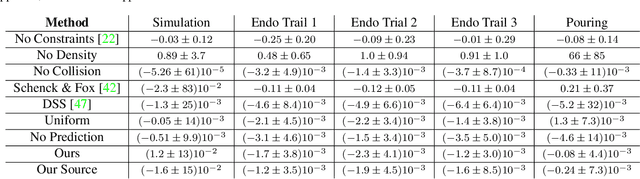

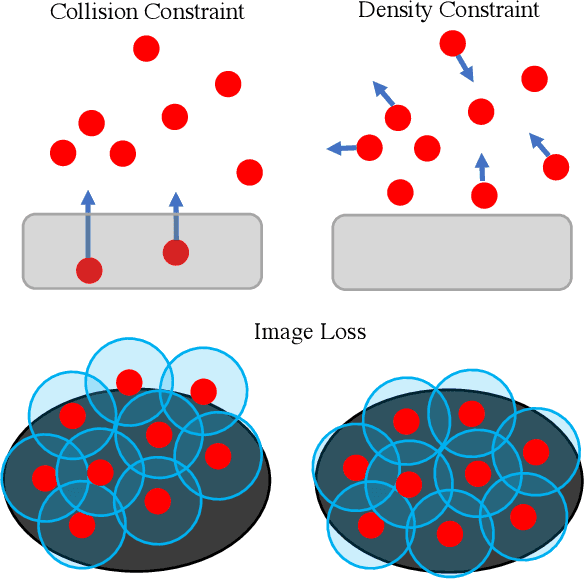

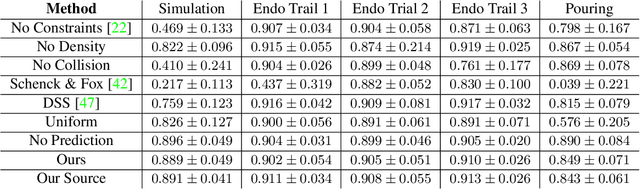

Image Based Reconstruction of Liquids from 2D Surface Detections

Nov 22, 2021

Abstract: In this work, we present a solution to the challenging problem of reconstructing liquids from image data. The challenges in reconstructing liquids, which are not faced in previous reconstruction works on rigid and deforming surfaces, lie in the inability to use depth sensing and color features due to the variable index of refraction, opacity, and environmental reflections. Therefore, we limit ourselves to only surface detections (i.e., binary masks) of liquids as observations and do not assume any prior knowledge of the liquid's properties. A novel optimization problem is posed which reconstructs the liquid as particles by minimizing the error between a rendered surface from the particles and the surface detections while satisfying liquid constraints. Our solvers to this optimization problem are presented and no training data is required to apply them. We also propose a dynamic prediction to seed the reconstruction optimization from the previous time-step. We test our proposed methods in simulation and on two new liquid datasets which we open source so the broader research community can continue developing in this underexplored area.

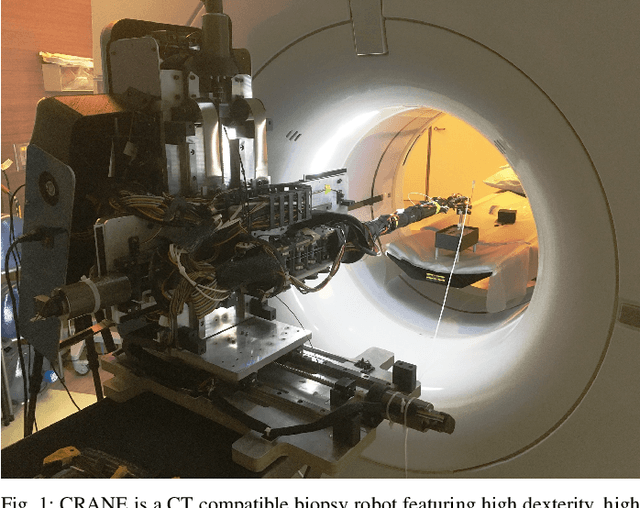

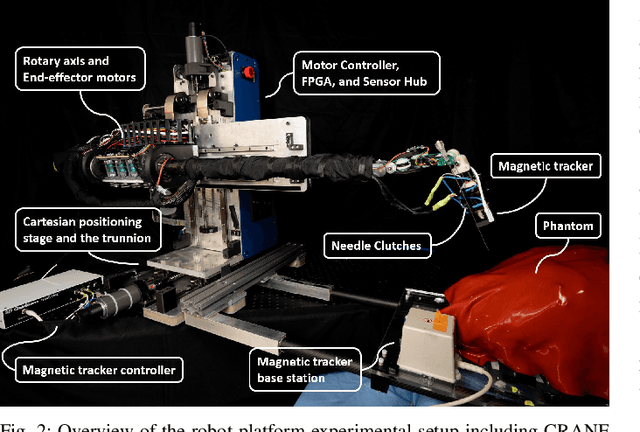

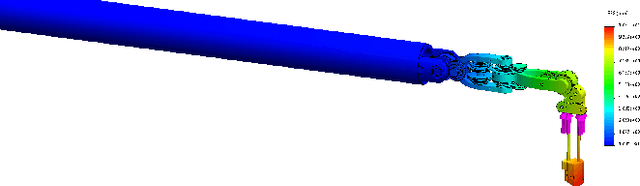

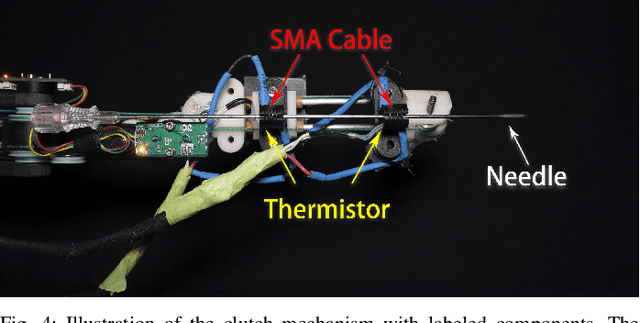

CRANE: a 10 Degree-of-Freedom, Tele-surgical System for Dexterous Manipulation within Imaging Bores

Sep 28, 2021

Abstract: Physicians perform minimally invasive percutaneous procedures under Computed Tomography (CT) image guidance both for the diagnosis and treatment of numerous diseases. For these procedures performed within Computed Tomography Scanners, robots can enable physicians to more accurately target sub-dermal lesions while increasing safety. However, existing robots for this application have limited dexterity, workspace, or accuracy. This paper describes the design, manufacture, and performance of a highly dexterous, low-profile, 8+2 Degree-of-Freedom (DoF) robotic arm for CT-guided percutaneous needle biopsy. In this article, we propose CRANE: CT Robot and Needle Emplacer. The design focuses on system dexterity with high accuracy: extending physicians' ability to manipulate and insert needles within the scanner bore while providing the high accuracy possible with a robot. We also propose and validate a system architecture and control scheme for low-profile and highly accurate image-guided robotics that meets the clinical requirements for target accuracy during an in-situ evaluation. The accuracy is additionally evaluated through a trajectory tracking evaluation resulting in <0.2 mm and <0.71 degree tracking error. Finally, we present a novel needle driving and grasping mechanism with controlling electronics that provides simple manufacturing, sterilization, and adaptability to accommodate different sizes and types of needles.
