Mark W. Mueller

Duawlfin: A Drone with Unified Actuation for Wheeled Locomotion and Flight Operation

May 20, 2025

Abstract:This paper presents Duawlfin, a drone with unified actuation for wheeled locomotion and flight operation that achieves efficient, bidirectional ground mobility. Unlike existing hybrid designs, Duawlfin eliminates the need for additional actuators or propeller-driven ground propulsion by leveraging only its standard quadrotor motors and introducing a differential drivetrain with one-way bearings. This innovation simplifies the mechanical system, significantly reduces energy usage, and prevents the disturbances caused by propellers spinning near the ground, such as dust interfering with sensors. In addition, the one-way bearings minimize the power transferred from the motors to the propellers in ground mode, which enables the vehicle to operate safely near humans. We provide a detailed mechanical design, present control strategies for rapid and smooth mode transitions, and validate the concept through extensive experimental testing. Flight-mode tests confirm stable aerial performance comparable to conventional quadcopters, while ground-mode experiments demonstrate efficient slope climbing (up to 30°) and agile turning maneuvers approaching 1g lateral acceleration. The seamless transitions between aerial and ground modes further underscore the practicality and effectiveness of our approach for applications like urban logistics and indoor navigation. All materials, including 3-D model files, a demonstration video, and other assets, are open-sourced at https://sites.google.com/view/Duawlfin.
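The turning result above is stated as lateral acceleration. For a wheeled vehicle in a steady turn, lateral acceleration is the product of forward speed v and yaw rate ω. The following is a minimal sketch that assumes standard differential-drive kinematics with two effective wheel ground speeds and a hypothetical track width. It does not model the paper's actual mapping from the four quadrotor motors through the drivetrain and one-way bearings.

```python
G = 9.81  # gravitational acceleration, m/s^2


def diff_drive_twist(v_left: float, v_right: float, track_width: float) -> tuple[float, float]:
    """Map left/right wheel ground speeds (m/s) to forward speed (m/s) and yaw rate (rad/s).

    Assumes an idealized differential drive with no wheel slip; negative speeds
    (reverse driving) are allowed.
    """
    v = 0.5 * (v_left + v_right)
    omega = (v_right - v_left) / track_width
    return v, omega


def lateral_accel_g(v: float, omega: float) -> float:
    """Centripetal acceleration |v * omega| of a steady turn, expressed in units of g."""
    return abs(v * omega) / G


# Hypothetical wheel speeds and track width, not values from the paper.
v, omega = diff_drive_twist(v_left=1.0, v_right=2.0, track_width=0.2)
print(v, omega, lateral_accel_g(v, omega))
```

Equal and opposite wheel speeds give a turn in place (v = 0). With this model, approaching 1g requires v·ω close to 9.81 m/s².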

A Tactile Feedback Approach to Path Recovery after High-Speed Impacts for Collision-Resilient Drones

Oct 18, 2024

Abstract:Aerial robots are a well-established solution for exploration, monitoring, and inspection, thanks to their superior maneuverability and agility. However, in many environments of interest, they risk crashing and sustaining damage following collisions. Traditional methods focus on avoiding obstacles entirely to prevent damage, but these approaches can be limiting, particularly in complex environments where collisions may be unavoidable, or on weight- and compute-constrained platforms. This paper presents a novel approach to enhance the robustness and autonomy of drones in such scenarios by developing a path recovery and adjustment method for a high-speed collision-resistant drone equipped with binary contact sensors. The proposed system employs an estimator that explicitly models collisions, using pre-collision velocities and rates to predict post-collision dynamics, thereby improving the drone's state estimation accuracy. Additionally, we introduce a vector-field-based path representation that guarantees convergence to the path. Post-collision, the contact point is incorporated into the vector field as a repulsive potential, enabling the drone to avoid obstacles while naturally converging to the original path. The effectiveness of this method is validated through Monte Carlo simulations and demonstrated on a physical prototype, showing successful path following and adjustment through collisions as well as recovery from collisions at speeds up to 3.7 m/s.
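The abstract combines a convergent path vector field with a repulsive term placed at each sensed contact point. The abstract does not give the exact field construction, so the following is a minimal sketch under stated assumptions:

* The path is a circle of radius r, encoded as φ(p) = |p|² − r².
* The field is a common guiding-vector-field form: tangent minus k·φ·∇φ.
* The repulsion is a hypothetical Gaussian-weighted push away from each contact point.

```python
import numpy as np


def path_field(p, radius=1.0, k_conv=1.0):
    """Guiding vector field for the circular path phi(p) = |p|^2 - r^2 = 0 (assumed path shape)."""
    phi = p @ p - radius**2
    grad = 2.0 * p
    tangent = np.array([-grad[1], grad[0]])  # gradient rotated +90 deg: counter-clockwise travel
    return tangent - k_conv * phi * grad     # tangent motion plus a term driving phi -> 0


def contact_repulsion(p, contacts, gain=2.0, width=0.3):
    """Hypothetical repulsive term: Gaussian-weighted unit vectors pointing away from contacts."""
    v = np.zeros(2)
    for c in contacts:
        d = p - np.asarray(c, dtype=float)
        dist = np.linalg.norm(d)
        if dist > 1e-9:
            v += gain * np.exp(-(dist / width) ** 2) * d / dist
    return v


def velocity_command(p, contacts, speed=1.0):
    """Sum of path field and contact repulsion, rescaled to the desired flight speed."""
    v = path_field(p) + contact_repulsion(p, contacts)
    n = np.linalg.norm(v)
    return speed * v / n if n > 1e-9 else np.zeros(2)


def simulate(p0, contacts=(), dt=0.01, steps=2000):
    """Integrate the commanded velocity as if the drone tracked it perfectly."""
    p = np.array(p0, dtype=float)
    for _ in range(steps):
        p = p + dt * velocity_command(p, contacts)
    return p


final = simulate((2.0, 0.0))  # starts off the path and converges onto the unit circle
```

After a collision, appending the contact location to `contacts` bends the commanded velocity away from that point. The path term still pulls the vehicle back toward the original path elsewhere.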

Synthesizing Interpretable Control Policies through Large Language Model Guided Search

Oct 07, 2024

Abstract:The combination of Large Language Models (LLMs), systematic evaluation, and evolutionary algorithms has enabled breakthroughs in combinatorial optimization and scientific discovery. We propose to extend this powerful combination to the control of dynamical systems, generating interpretable control policies capable of complex behaviors. With our novel method, we represent control policies as programs in standard languages like Python. We evaluate candidate controllers in simulation and evolve them using a pre-trained LLM. Unlike conventional learning-based control techniques, which rely on black-box neural networks to encode control policies, our approach enhances transparency and interpretability. We still take advantage of the power of large AI models, but leverage it at the policy design phase, ensuring that all system components remain interpretable and easily verifiable at runtime. Additionally, the use of standard programming languages makes it straightforward for humans to fine-tune or adapt the controllers based on their expertise and intuition. We illustrate our method through its application to the synthesis of an interpretable control policy for the pendulum swing-up and the ball-in-cup tasks. We make the code available at https://github.com/muellerlab/synthesizing_interpretable_control_policies.git
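The abstract describes a loop: candidate controllers are Python programs, they are scored in simulation, and they are evolved with a pre-trained LLM. The sketch below shows only the shape of that loop, under these assumptions:

* `propose_variant` is a hypothetical stand-in for the LLM call that merely rescales a constant.
* The search is a simple (1+1) evolutionary scheme, not the paper's algorithm.
* The unit-pendulum model, the score, and the energy-pumping seed program are illustrative choices.

```python
import math
import random
import re

# Candidate controllers are plain Python source, so a human can read and edit them directly.
SEED = """
GAIN = 1.0
def policy(theta, omega):
    # Energy pumping: inject energy in the direction of motion until it reaches the upright level.
    energy = 0.5 * omega**2 - 9.81 * math.cos(theta)
    return GAIN * (9.81 - energy) * omega
"""


def evaluate(source, dt=0.01, steps=1000, u_max=2.0):
    """Simulate a unit pendulum (theta = 0 hangs down) and return the mean of -cos(theta)."""
    namespace = {"math": math}
    exec(source, namespace)  # candidates are trusted here; sandbox generated code in practice
    policy = namespace["policy"]
    theta, omega, score = 0.1, 0.0, 0.0
    for _ in range(steps):
        u = max(-u_max, min(u_max, policy(theta, omega)))  # torque limit forces swinging
        omega += dt * (-9.81 * math.sin(theta) + u - 0.1 * omega)
        theta += dt * omega
        score += -math.cos(theta)  # +1 when upright, -1 when hanging
    return score / steps


def propose_variant(source, rng):
    """HYPOTHETICAL stand-in for the LLM: rescales the GAIN constant by a random factor."""
    gain = float(re.search(r"GAIN = ([-+\d.eE]+)", source).group(1))
    new = f"GAIN = {gain * rng.uniform(0.5, 2.0):.4g}"
    return re.sub(r"GAIN = [-+\d.eE]+", new, source, count=1)


def search(generations=20, seed=0):
    """(1+1) evolutionary loop: keep a child program only if it scores better."""
    rng = random.Random(seed)
    best_src, best_score = SEED, evaluate(SEED)
    for _ in range(generations):
        child = propose_variant(best_src, rng)
        child_score = evaluate(child)
        if child_score > best_score:
            best_src, best_score = child, child_score
    return best_score, best_src
```

The point carried over from the abstract is that every candidate, including the final one, remains a readable program that can be inspected or hand-tuned.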

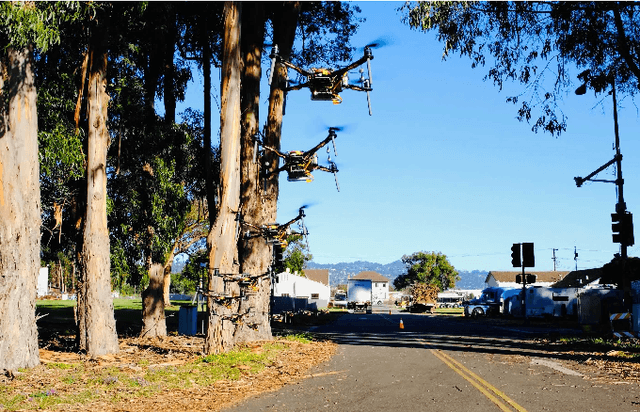

Towards Safe and Efficient Through-the-Canopy Autonomous Fruit Counting with UAVs

Sep 26, 2024

Abstract:We present an autonomous aerial system for safe and efficient through-the-canopy fruit counting. Aerial robot applications in large-scale orchards face significant challenges due to the complexity of fine-tuning flight paths based on orchard layouts, canopy density, and plant variability. Through-the-canopy navigation is crucial for minimizing occlusion by leaves and branches but is more challenging due to the complex and dense environment compared to traditional over-the-canopy flights. Our system addresses these challenges by integrating: i) a high-fidelity simulation framework for optimizing flight trajectories, ii) a low-cost autonomy stack for canopy-level navigation and data collection, and iii) a robust workflow for fruit detection and counting using RGB images. We validate our approach through fruit counting with canopy-level aerial images and by demonstrating the autonomous navigation capabilities of our experimental vehicle.

Automated Layout Design and Control of Robust Cooperative Grasped-Load Aerial Transportation Systems

Oct 11, 2023

Abstract:We present a novel approach to cooperative aerial transportation through a team of drones, using optimal control theory and a hierarchical control strategy. We assume the drones are connected to the payload through rigid attachments, essentially transforming the whole system into a larger flying object with "thrust modules" at the attachment locations of the drones. We investigate the optimal arrangement of the thrust modules around the payload, so that the resulting system is robust to disturbances. We choose the $\mathcal{H}_2$ norm as a measure of robustness, and propose an iterative optimization routine to compute the optimal layout of the vehicles around the object. We experimentally validate our approach using four drones, comparing the disturbance-rejection performance achieved by two different layouts (the optimal one and a sub-optimal one), and observe that the results match our predictions.
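The abstract uses the $\mathcal{H}_2$ norm as the robustness measure. For a stable LTI model ẋ = Ax + Bw, z = Cx, the squared $\mathcal{H}_2$ norm equals trace(C W Cᵀ). Here W is the controllability Gramian, which solves the Lyapunov equation A W + W Aᵀ + B Bᵀ = 0.

The following is a minimal numpy sketch. The paper's closed-loop model of a given layout is not reproduced here, so A, B and C below are toy matrices. An iterative layout search would evaluate this quantity for each candidate layout and prefer the smallest.

```python
import numpy as np


def h2_norm(A, B, C):
    """H2 norm of a stable LTI system x' = A x + B w, z = C x.

    Solves A W + W A^T + B B^T = 0 for the controllability Gramian W using the
    column-major identity vec(A W + W A^T) = (I kron A + A kron I) vec(W).
    The Kronecker system has n^2 unknowns, so this suits only small models.
    """
    n = A.shape[0]
    I = np.eye(n)
    lhs = np.kron(I, A) + np.kron(A, I)
    rhs = -(B @ B.T).reshape(-1, order="F")
    W = np.linalg.solve(lhs, rhs).reshape(n, n, order="F")
    return float(np.sqrt(np.trace(C @ W @ C.T)))


# First-order check: G(s) = 1 / (s + 2) has ||G||_2^2 = 1 / (2 * 2).
A = np.array([[-2.0]])
B = np.array([[1.0]])
C = np.array([[1.0]])
print(h2_norm(A, B, C))
```

For larger models, SciPy's `scipy.linalg.solve_continuous_lyapunov` is the usual way to obtain the Gramian instead of the Kronecker formulation.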

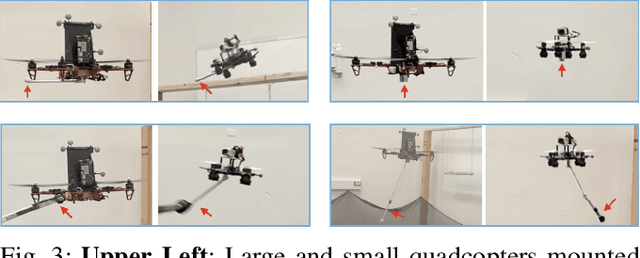

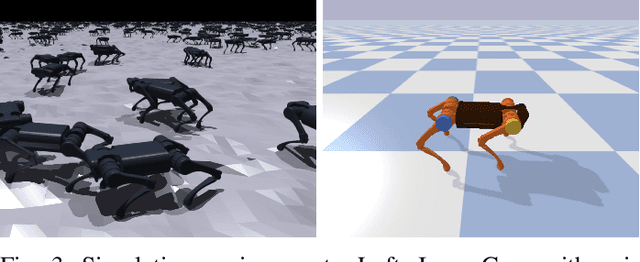

Design and control of a collision-resilient aerial vehicle with an icosahedron tensegrity structure

Nov 22, 2022

Abstract:We present the tensegrity aerial vehicle, a design of collision-resilient rotor robots with icosahedron tensegrity structures. The tensegrity aerial vehicles can withstand high-speed impacts and resume operation after collisions. To guide the design process of these aerial vehicles, we propose a model-based methodology that predicts the stresses in the structure with a dynamics simulation and selects components that can withstand the predicted stresses. Meanwhile, an autonomous re-orientation controller is created to help the tensegrity aerial vehicles resume flight after collisions. The re-orientation controller can rotate the vehicles from arbitrary orientations on the ground to ones that are easy to take off from. With collision resilience and re-orientation ability, the tensegrity aerial vehicles can operate in cluttered environments without complex collision-avoidance strategies. Moreover, by adopting an inertial navigation strategy that replaces flight with short hops to mitigate the growth of state estimation error, the tensegrity aerial vehicles can conduct short-range operations without external sensors. These capabilities are validated by a test of an experimental tensegrity aerial vehicle operating with only onboard inertial sensors in a previously unknown forest.
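Why do short hops help inertial-only navigation? A rough worked example follows. It considers a constant accelerometer bias b integrated twice, and assumes the velocity error is reset to zero at every landing, since the vehicle is known to be at rest. Both are simplifying assumptions, not the paper's error model: attitude errors and noise are ignored, and the per-hop errors are assumed to add in the same direction (worst case).

Under those assumptions, one flight of duration T drifts 0.5·b·T². The same airborne time split into n hops drifts n·0.5·b·(T/n)² = 0.5·b·T²/n.

```python
def drift_continuous(bias, duration):
    """Position error from a constant accelerometer bias integrated twice: 0.5 * b * T^2."""
    return 0.5 * bias * duration**2


def drift_hops(bias, duration, n_hops):
    """Same airborne time split into n hops, assuming the velocity error resets at each landing.

    Per-hop errors are summed as if aligned (worst case), giving 0.5 * b * T^2 / n.
    """
    hop = duration / n_hops
    return n_hops * 0.5 * bias * hop**2


# Hypothetical numbers: 0.05 m/s^2 bias, 60 s of total airborne time, 30 hops of 2 s each.
print(drift_continuous(0.05, 60.0), drift_hops(0.05, 60.0, 30))
```

With these numbers the drift drops from about 90 m to about 3 m, which illustrates the 1/n scaling.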

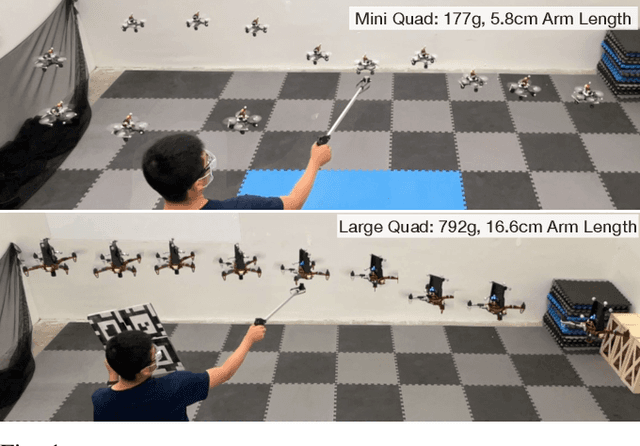

A Zero-Shot Adaptive Quadcopter Controller

Sep 19, 2022

Abstract:This paper proposes a universal adaptive controller for quadcopters, which can be deployed zero-shot to quadcopters of very different mass, arm lengths and motor constants, and also shows rapid adaptation to unknown disturbances during runtime. The core algorithmic idea is to learn a single policy that can adapt online at test time not only to the disturbances applied to the drone, but also to the robot dynamics and hardware in the same framework. We achieve this by training a neural network to estimate a latent representation of the robot and environment parameters, which is used to condition the behaviour of the controller, also represented as a neural network. We train both networks exclusively in simulation with the goal of flying the quadcopters to goal positions and avoiding crashes into the ground. We directly deploy the same controller trained in simulation, without any modifications, on two quadcopters whose mass, inertia, and maximum motor speed differ by up to a factor of 4. In addition, we show rapid adaptation to sudden and large disturbances (up to 35.7%) in the mass and inertia of the quadcopters. We perform an extensive evaluation in both simulation and the physical world, where we outperform a state-of-the-art learning-based adaptive controller and a traditional PID controller specifically tuned to each platform individually. Video results can be found at https://dz298.github.io/universal-drone-controller/.
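The abstract describes two networks:

* An adaptation network that estimates a latent representation of the robot and environment parameters.
* A controller conditioned on that latent.

The following numpy sketch only illustrates that data flow, assuming the latent is estimated from a window of recent states and actions. The layer sizes, the input contents, and the 4-dimensional action are hypothetical choices, the weights are random rather than trained, and the paper's actual architecture is not stated in the abstract.

```python
import numpy as np

# Hypothetical dimensions, not taken from the paper.
STATE_DIM, ACTION_DIM, HISTORY, LATENT_DIM, GOAL_DIM = 13, 4, 10, 8, 3


def mlp(sizes, rng):
    """Randomly initialised MLP weights; in the real system these would be trained in simulation."""
    return [(rng.standard_normal((m, n)) / np.sqrt(m), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def forward(params, x):
    """tanh hidden layers, linear output layer."""
    for i, (W, b) in enumerate(params):
        x = x @ W + b
        if i < len(params) - 1:
            x = np.tanh(x)
    return x


rng = np.random.default_rng(0)
adaptation = mlp([HISTORY * (STATE_DIM + ACTION_DIM), 64, LATENT_DIM], rng)
policy = mlp([STATE_DIM + GOAL_DIM + LATENT_DIM, 64, ACTION_DIM], rng)


def act(history, state, goal):
    """Estimate the latent from recent (state, action) pairs, then condition the policy on it."""
    z = forward(adaptation, history.reshape(-1))  # implicit estimate of mass, inertia, motors...
    return forward(policy, np.concatenate([state, goal, z]))
```

Because the latent is re-estimated from the most recent history at every step, the same weights can in principle respond to a different airframe or to a sudden mass change without retraining. That is the property the abstract reports.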

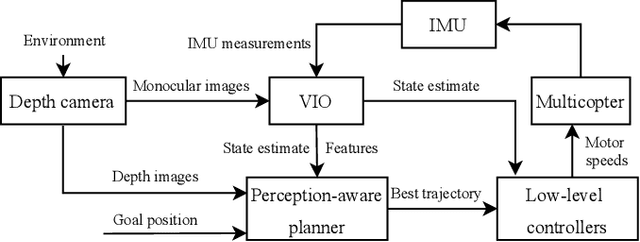

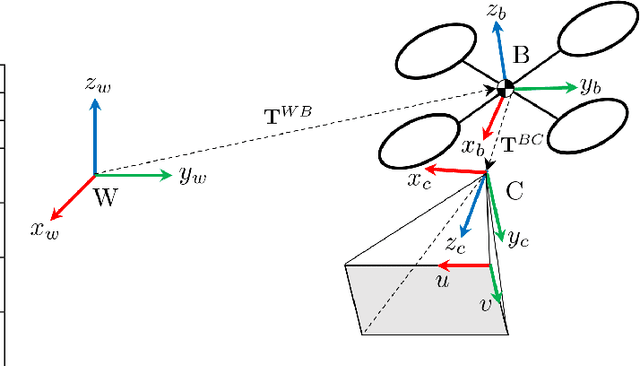

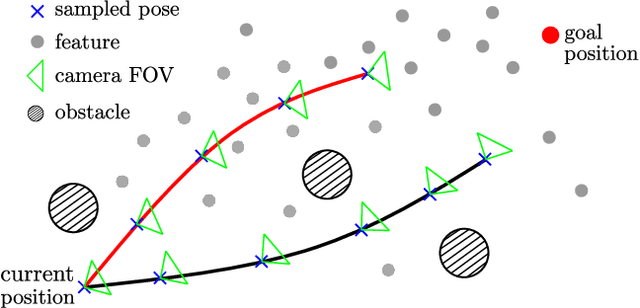

Perception-aware receding horizon trajectory planning for multicopters with visual-inertial odometry

Apr 07, 2022

Abstract:Visual inertial odometry (VIO) is widely used for the state estimation of multicopters, but it may function poorly in environments with few visual features or in overly aggressive flights. In this work, we propose a perception-aware collision avoidance local planner for multicopters. Our approach is able to fly the vehicle to a goal position at high speed, avoiding obstacles in the environment while achieving good VIO state estimation accuracy. The proposed planner samples a group of minimum jerk trajectories and finds collision-free trajectories among them, which are then evaluated based on their speed to the goal and perception quality. Both the features' motion blur and their locations are considered for the perception quality. The best trajectory from the evaluation is tracked by the vehicle and is updated in a receding horizon manner when new images are received from the camera. All the sampled trajectories have zero speed and acceleration at the end, and the planner assumes no other visual features except those already found by the VIO. As a result, the vehicle will follow the current trajectory to the end and stop safely if no new trajectories are found, avoiding collision or flying into areas without features. The proposed method can run in real time on a small embedded computer on board. We validated the effectiveness of our proposed approach through experiments in indoor and outdoor environments. Compared to a perception-agnostic planner, the proposed planner kept more features in the camera's view and made the flight less aggressive, making the VIO more accurate. It also reduced VIO failures, which occurred for the perception-agnostic planner but not for the proposed planner. The experiment video can be found at https://youtu.be/LjZju4KEH9Q.
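The planner above samples minimum-jerk trajectories that end with zero velocity and acceleration. It then discards colliding ones and scores the rest by speed toward the goal and by perception quality.

For fixed boundary states and duration, the minimum-jerk trajectory is a quintic polynomial per axis. The following is a minimal sketch under several assumptions: obstacles are spheres, sampling ranges are hypothetical, and `perception_cost` is a hypothetical placeholder for the paper's motion-blur and feature-location term, which is not reproduced here.

```python
import numpy as np


def min_jerk_coeffs(p0, v0, a0, pf, T):
    """Quintic coefficients per axis from (p0, v0, a0) to (pf, 0, 0) over duration T."""
    p0, v0, a0, pf = (np.asarray(x, dtype=float) for x in (p0, v0, a0, pf))
    c0, c1, c2 = p0, v0, 0.5 * a0
    M = np.array([[T**3, T**4, T**5],               # position at T
                  [3 * T**2, 4 * T**3, 5 * T**4],   # velocity at T
                  [6 * T, 12 * T**2, 20 * T**3]])   # acceleration at T
    rhs = np.stack([pf - c0 - c1 * T - c2 * T**2,
                    -c1 - 2 * c2 * T,
                    -2 * c2])
    c345 = np.linalg.solve(M, rhs)           # shape (3, n_axes)
    return np.vstack([c0, c1, c2, c345])     # shape (6, n_axes), rows are t^0 .. t^5


def positions(coeffs, T, n=50):
    """Sample positions along the trajectory at n evenly spaced times."""
    t = np.linspace(0.0, T, n)
    return np.vander(t, 6, increasing=True) @ coeffs


def plan(p0, v0, a0, goal, obstacles, rng, n_samples=200, margin=0.3,
         perception_cost=lambda traj: 0.0, w=1.0):
    """Sample end points and durations, drop colliding candidates, return the best (coeffs, T).

    Returns None if no candidate is collision-free; the vehicle then keeps its current
    trajectory, which already ends at rest.
    """
    goal = np.asarray(goal, dtype=float)
    best, best_score = None, -np.inf
    for _ in range(n_samples):
        pf = np.asarray(p0, dtype=float) + rng.uniform(-3.0, 3.0, size=3)
        T = rng.uniform(1.0, 4.0)
        coeffs = min_jerk_coeffs(p0, v0, a0, pf, T)
        traj = positions(coeffs, T)
        if any(np.min(np.linalg.norm(traj - c, axis=1)) < r + margin for c, r in obstacles):
            continue
        progress = (np.linalg.norm(goal - traj[0]) - np.linalg.norm(goal - traj[-1])) / T
        score = progress - w * perception_cost(traj)
        if score > best_score:
            best, best_score = (coeffs, T), score
    return best
```

In a receding-horizon loop, `plan` would be called again whenever a new camera image arrives, starting from the current tracked state.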

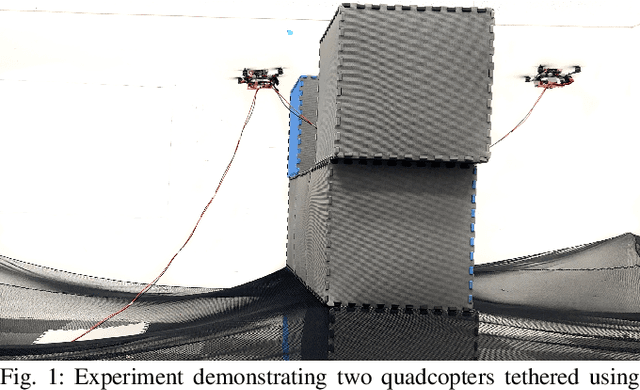

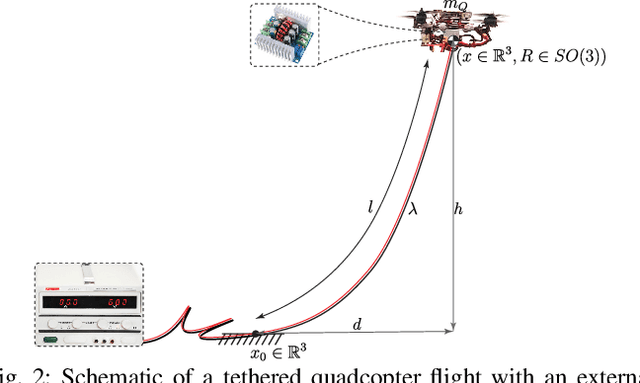

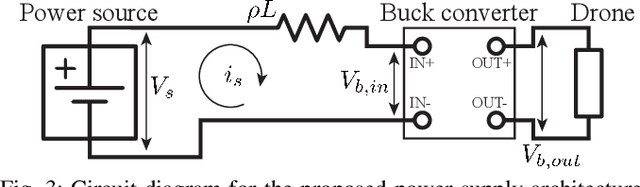

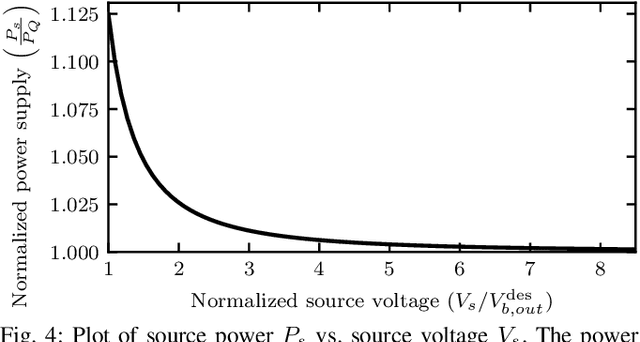

Tethered Power Supply for Quadcopters: Architecture, Analysis and Experiments

Mar 15, 2022

Abstract:Tethered quadcopters are used for extended flight operations where the necessary power to the system is provided through the tether from an external power source on the ground. In this work, we study the design factors that influence the power requirements, such as the tether mass, electrical resistance, and voltage conversion efficiency. We present analytical formulations to predict the power requirement for a given setup. Additionally, we show the existence of a critical hover height for a single-quadcopter tether system, beyond which it is physically (electrically) impossible for the quadcopter to hover. We then present experimental results for single and two-quadcopter tethered systems. Power supply readings from the experiments in various configurations are consistent with the predictions from the analysis (within 5%), which experimentally validates the presented analysis. A two-quadcopter system powered via a single tether is flown through a corridor to demonstrate one of the applications of having multiple quadcopters on the same tether.
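The critical hover height comes from two opposing trends. The power the vehicle needs grows with height, because it carries more tether weight. The power the tether can deliver shrinks with height, because the conductor resistance grows.

The sketch below uses a deliberately simplified model, not the paper's analytical formulation:

* Required power: momentum-theory hover power with a figure of merit, with the full tether weight supported by the vehicle.
* Available power: the matched-load limit V²/(4R) with a constant conversion efficiency.
* All numerical values are hypothetical.

```python
import math

G, RHO = 9.81, 1.225  # gravity (m/s^2), air density (kg/m^3)


def required_power(h, m_vehicle=1.0, tether_mu=0.02, disk_area=0.126, figure_of_merit=0.6):
    """Hover power (W) from momentum theory; the vehicle lifts its own mass plus h metres of tether."""
    thrust = (m_vehicle + tether_mu * h) * G
    return thrust**1.5 / math.sqrt(2.0 * RHO * disk_area) / figure_of_merit


def available_power(h, v_supply=48.0, ohm_per_m=0.05, efficiency=0.9):
    """Maximum power (W) deliverable through a two-conductor tether of length h (matched load)."""
    r_tether = 2.0 * ohm_per_m * h  # out-and-back conductor resistance
    return efficiency * v_supply**2 / (4.0 * r_tether)


def critical_height(lo=1.0, hi=1000.0, tol=1e-6):
    """Bisection for the height at which available power falls to the required power."""
    def margin(h):
        return available_power(h) - required_power(h)

    if not margin(lo) > 0 > margin(hi):
        raise ValueError("bracket [lo, hi] must contain the crossing")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if margin(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


print(critical_height())  # critical height (m) for the hypothetical parameters above
```

Above the returned height, the tether cannot deliver enough power for hover regardless of how the current is chosen, which matches the qualitative claim in the abstract.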

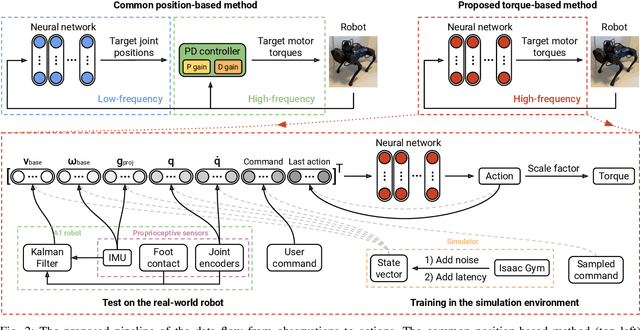

Learning Torque Control for Quadrupedal Locomotion

Mar 10, 2022

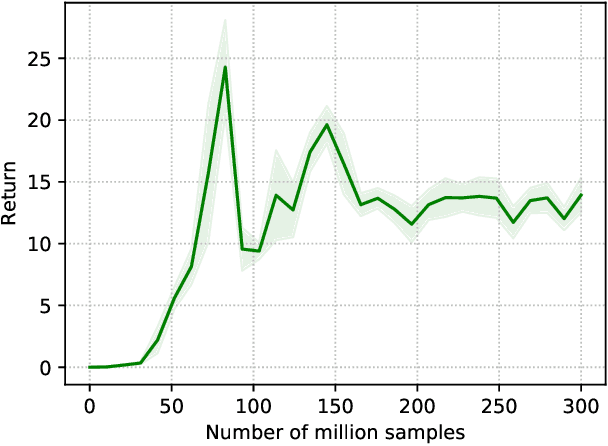

Abstract:Reinforcement learning (RL) is a promising tool for developing controllers for quadrupedal locomotion. The design of most learning-based locomotion controllers adopts the joint position-based paradigm, wherein a low-frequency RL policy outputs target joint positions that are then tracked by a high-frequency proportional-derivative (PD) controller that outputs joint torques. However, the low frequency of such a policy hinders the advancement of highly dynamic locomotion behaviors. Moreover, determining the PD gains for optimal tracking performance is laborious and dependent on the task at hand. In this paper, we introduce a learning torque control framework for quadrupedal locomotion, which trains an RL policy that directly predicts joint torques at a high frequency, thus circumventing the use of PD controllers. We validate the proposed framework with extensive experiments where the robot is able to both traverse various terrains and resist external pushes, given user-specified commands. To our knowledge, among recent research on learning-based quadrupedal locomotion, which is mostly position-based, this is the first attempt at learning torque control with a single end-to-end neural network that has led to successful real-world experiments.
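The two paradigms contrasted above differ in what the policy outputs and how often it runs. This sketch simulates a single joint (I·q̈ = τ) in both configurations:

* **Position-based:** a low-rate policy emits a target position that a high-rate PD loop tracks.
* **Torque-based:** a higher-rate policy emits torque directly.

The two policies are hypothetical hand-written placeholders for trained RL networks. The rates, gains, and inertia are illustrative, not values from the paper.

```python
import numpy as np


def simulate(policy, policy_hz, pd_gains=None, sim_hz=1000, duration=1.0, inertia=0.05):
    """Simulate one joint I * qdd = tau with semi-implicit Euler at sim_hz.

    The policy output is held constant (zero-order hold) between policy queries.
    If pd_gains = (kp, kd) is given, the output is a target position tracked by a PD
    loop running at sim_hz; otherwise the output is applied directly as torque.
    """
    dt = 1.0 / sim_hz
    decimation = sim_hz // policy_hz
    q, qd, action = 0.0, 0.0, 0.0
    log = []
    for k in range(int(duration * sim_hz)):
        if k % decimation == 0:
            action = policy(q, qd)
        if pd_gains is not None:
            kp, kd = pd_gains
            tau = kp * (action - q) - kd * qd  # inner PD loop (position-based paradigm)
        else:
            tau = action                      # policy torque used as-is (torque paradigm)
        qd += dt * tau / inertia
        q += dt * qd
        log.append(q)
    return np.array(log)


# Hypothetical placeholder policies standing in for trained networks.
pos_log = simulate(lambda q, qd: 0.5, policy_hz=50, pd_gains=(20.0, 1.0))
tau_log = simulate(lambda q, qd: 10.0 * (0.5 - q) - 1.0 * qd, policy_hz=500)
```

In the torque-based setup, the stabilizing behavior must come from the policy itself. This is why it has to run at a high rate, and it removes the need to hand-tune PD gains.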
