Liulong Ma

Scene-Adaptive Motion Planning with Explicit Mixture of Experts and Interaction-Oriented Optimization

May 18, 2025

Abstract: Despite over a decade of development, trajectory planning for autonomous driving in complex urban environments still faces significant challenges. These include the difficulty of accommodating the multi-modal nature of trajectories, the limitations of a single expert in handling diverse scenarios, and insufficient consideration of environmental interactions. To address these issues, this paper introduces EMoE-Planner, which incorporates three approaches. First, the Explicit MoE (Mixture of Experts) dynamically selects specialized experts based on scenario-specific information through a shared scene router. Second, the planner uses scene-specific queries to provide multi-modal priors, directing the model's focus toward relevant target areas. Third, it enhances the prediction model and loss calculation by considering the interactions between the ego vehicle and other agents, thereby significantly boosting planning performance. Comparative experiments against state-of-the-art (SOTA) methods were conducted on the nuPlan dataset. The simulation results show that our model outperforms SOTA models across nearly all test scenarios.

Learning to Navigate in Indoor Environments: from Memorizing to Reasoning

Apr 21, 2019

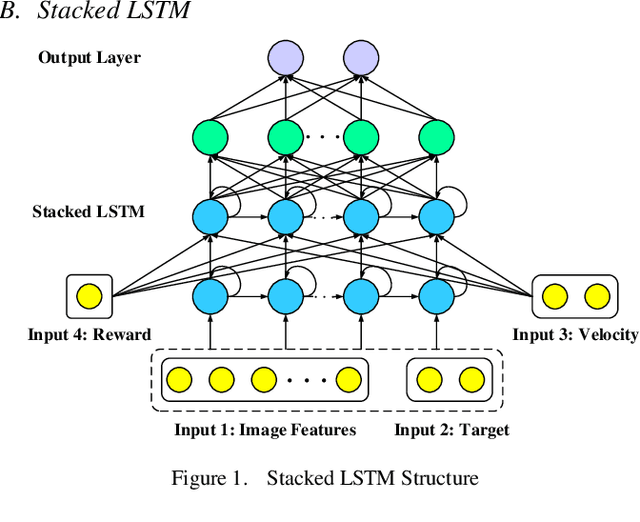

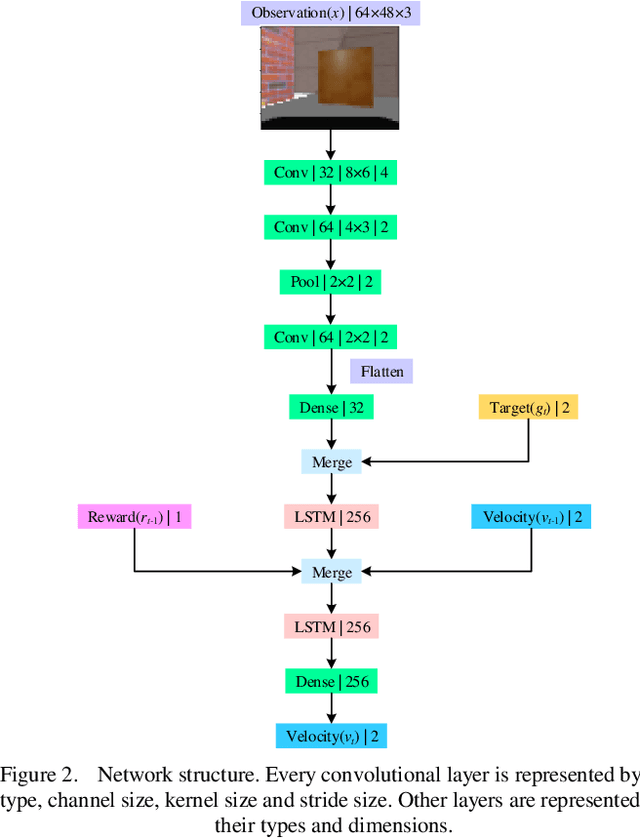

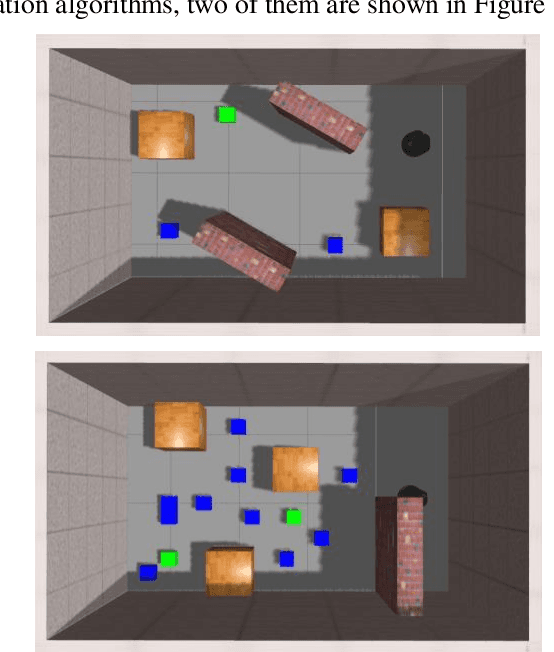

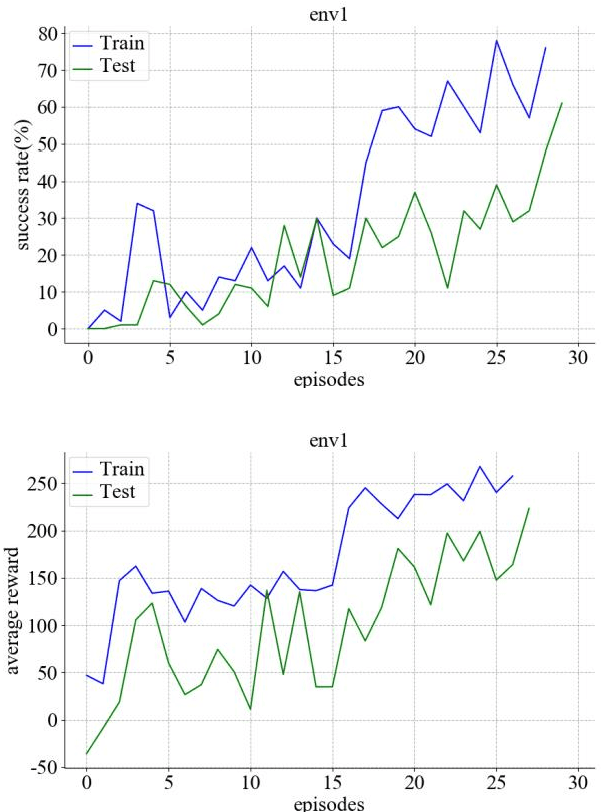

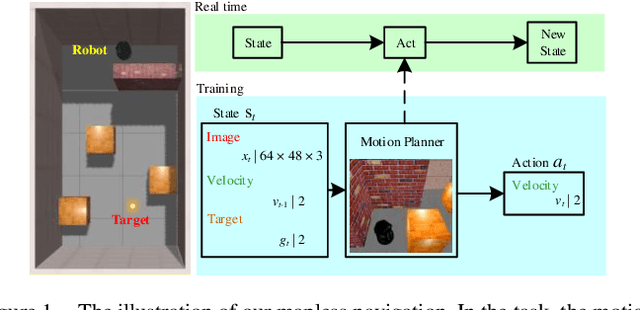

Abstract: Autonomous navigation is an essential capability for mobile robots. Traditional methods require a map of the environment to plan a collision-free path in the workspace. Deep reinforcement learning (DRL) is a promising technique for autonomous navigation without a map: a deep neural network learns the mapping from observation to reasonable action through exploration. The planner should not only memorize the targets seen in training but, more importantly, reason its way to unseen goals. We propose a new motion planner based on deep reinforcement learning that can reach new targets not seen during training in indoor environments, using only RGB images and odometry. The model has a stacked Long Short-Term Memory (LSTM) structure. Experiments were carried out in both simulated and real environments. The source code is available: https://github.com/marooncn/navbot.

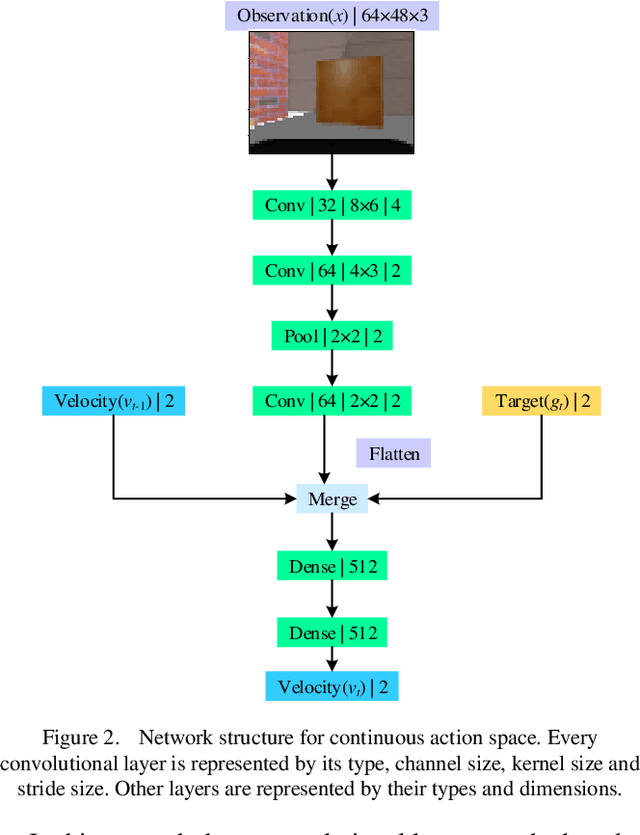

Using RGB Image as Visual Input for Mapless Robot Navigation

Apr 16, 2019

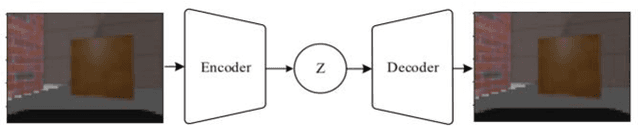

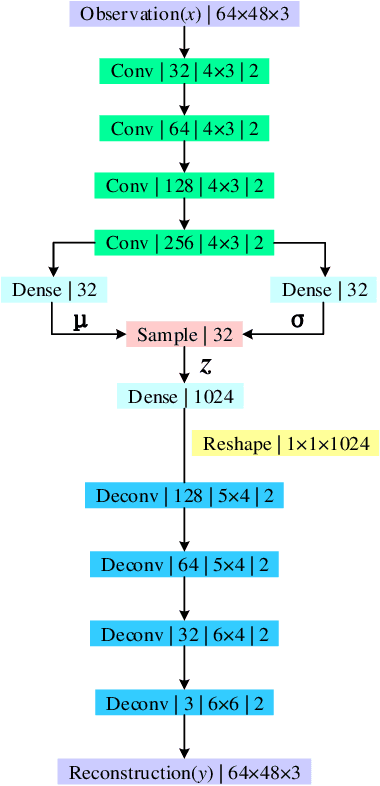

Abstract: Robot navigation in mapless environments is one of the essential problems and challenges for mobile robots. Deep reinforcement learning is a promising technique for tackling mapless navigation. Since reinforcement learning requires a lot of exploration, it is usually necessary to train the agent in a simulator and then transfer it to the real environment. Because of the large reality gap, RGB images, the most common visual input, are rarely used. In this paper we present a learning-based mapless motion planner that takes RGB images as visual input. In an end-to-end navigation network that takes RGB images as input, many of the parameters are devoted to extracting visual features. Therefore, we decouple the visual feature extraction module from the reinforcement learning network to reduce the number of interactions needed between agent and environment. We use a Variational Autoencoder (VAE) to encode the image and feed the resulting latent vector, as low-dimensional visual features, into the network together with the target and motion information, which greatly improves the agent's sample efficiency. We built a simulation environment for robot navigation to compare algorithms. In the test environment, the proposed method was compared with the end-to-end network, and the comparison demonstrated its effectiveness and efficiency. The source code is available: https://github.com/marooncn/navbot.

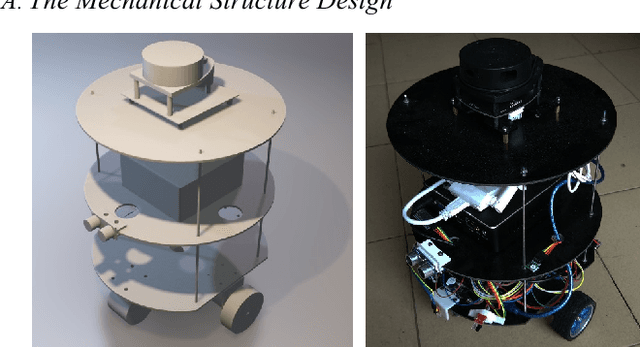

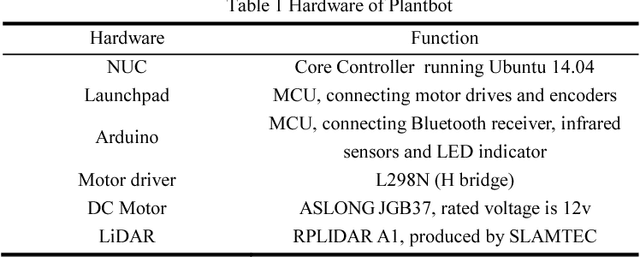

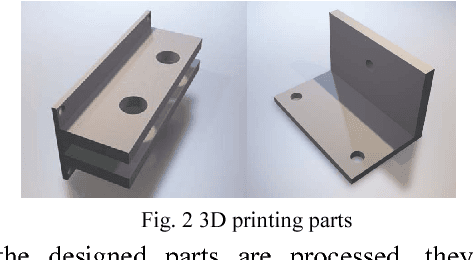

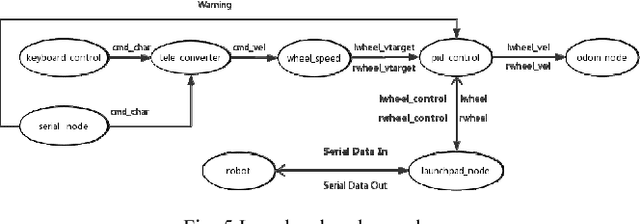

Plantbot: A New ROS-based Robot Platform for Fast Building and Developing

Apr 08, 2018

Abstract: In recent years, the Robot Operating System (ROS) has developed rapidly and has been widely used in robotics research thanks to its flexibility, open-source nature, and breadth. In scientific research, a corresponding hardware platform is indispensable for experiments. In the field of mobile robots, PR2, Turtlebot2, and Fetch are commonly used as research platforms. Although these platforms are fully functional and widely used, they are expensive and bulky. What's more, these robots are not easily redesigned and expanded according to requirements. To overcome these limitations, we propose Plantbot, an easy-to-build, low-cost robot platform that can be redesigned and expanded according to requirements. It can be applied not only to fast algorithm verification, but also to simple factory inspection and ROS teaching. This article describes the platform from several aspects, including hardware design, kinematics, and control methods. Finally, two experiments, SLAM and navigation, are performed on the robot platform. The source code of this platform is available.
