Lijun Yin

Weakly-Supervised Text-driven Contrastive Learning for Facial Behavior Understanding

Mar 31, 2023

Abstract:Contrastive learning has shown promising potential for learning robust representations by utilizing unlabeled data. However, constructing effective positive-negative pairs for contrastive learning on facial behavior datasets remains challenging. This is because such pairs inevitably encode the subject-ID information, and the randomly constructed pairs may push similar facial images away due to the limited number of subjects in facial behavior datasets. To address this issue, we propose to utilize activity descriptions, coarse-grained information provided in some datasets, which can provide high-level semantic information about the image sequences but is often neglected in previous studies. More specifically, we introduce a two-stage Contrastive Learning with Text-Embeded framework for Facial behavior understanding (CLEF). The first stage is a weakly-supervised contrastive learning method that learns representations from positive-negative pairs constructed using coarse-grained activity information. The second stage aims to train the recognition of facial expressions or facial action units by maximizing the similarity between each image and its corresponding text label name. The proposed CLEF achieves state-of-the-art performance on three in-the-lab datasets for AU recognition and three in-the-wild datasets for facial expression recognition.
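The first stage described in the abstract above builds positive-negative pairs from coarse activity information. A minimal sketch of that idea follows, assuming a generic supervised-contrastive-style loss in which samples sharing an activity label are treated as positives. The function names, the cosine similarity, and the temperature value are illustrative assumptions, not the loss actually used by CLEF.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def activity_contrastive_loss(embeddings, activities, temperature=0.1):
    """Hypothetical loss: samples with the same activity label are positives.

    For each anchor, the loss is the average negative log-probability
    (softmax over all other samples) assigned to its positives.
    """
    n = len(embeddings)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n)
                     if j != i and activities[j] == activities[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        logits = {j: cosine(embeddings[i], embeddings[j]) / temperature
                  for j in range(n) if j != i}
        log_denom = math.log(sum(math.exp(v) for v in logits.values()))
        total += -sum(logits[j] - log_denom for j in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0
```

Under these assumptions, the loss is small when embeddings of the same activity lie close together and large when the activity labels disagree with the embedding geometry.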

A Transformer-based Deep Learning Algorithm to Auto-record Undocumented Clinical One-Lung Ventilation Events

Feb 16, 2023

Abstract:As a team studying the predictors of complications after lung surgery, we have encountered high missingness of data on one-lung ventilation (OLV) start and end times due to high clinical workload and cognitive overload during surgery. Such missing data limit the precision and clinical applicability of our findings. We hypothesized that available intraoperative mechanical ventilation and physiological time-series data combined with other clinical events could be used to accurately predict missing start and end times of OLV. Such a predictive model can recover existing mis-documented records and, when deployed in clinical settings, relieve the documentation burden. To this end, we develop a deep learning model to predict the occurrence and timing of OLV based on routinely collected intraoperative data. Our approach combines the variables' spatial and frequency domain features, using Transformer encoders to model the temporal evolution and a convolutional neural network to abstract frequencies of interest from wavelet spectrum images. The performance of the proposed method is evaluated on a benchmark dataset curated from Massachusetts General Hospital (MGH) and Brigham and Women's Hospital (BWH). Experiments show that our approach significantly outperforms baseline methods and achieves satisfactory accuracy for clinical use.

Multimodal Learning with Channel-Mixing and Masked Autoencoder on Facial Action Unit Detection

Sep 25, 2022

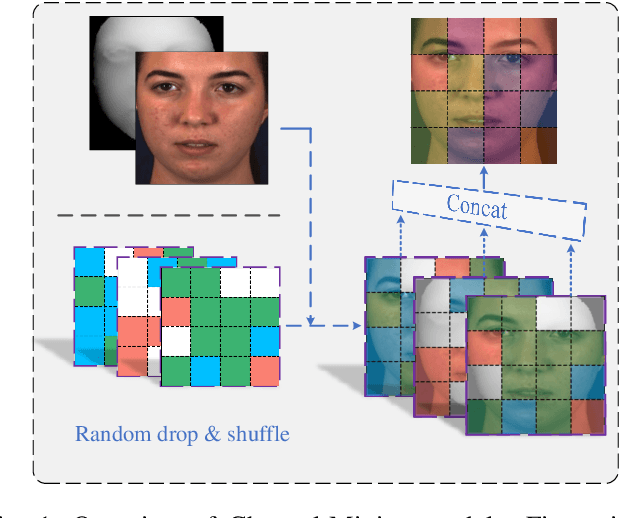

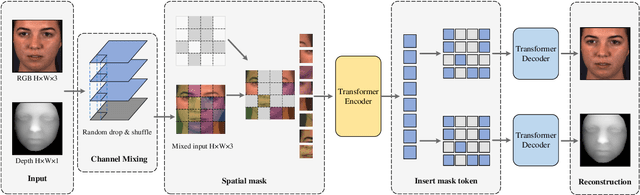

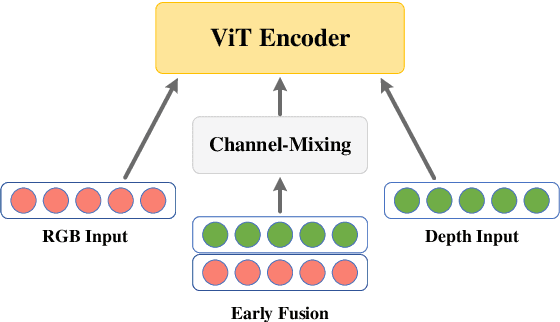

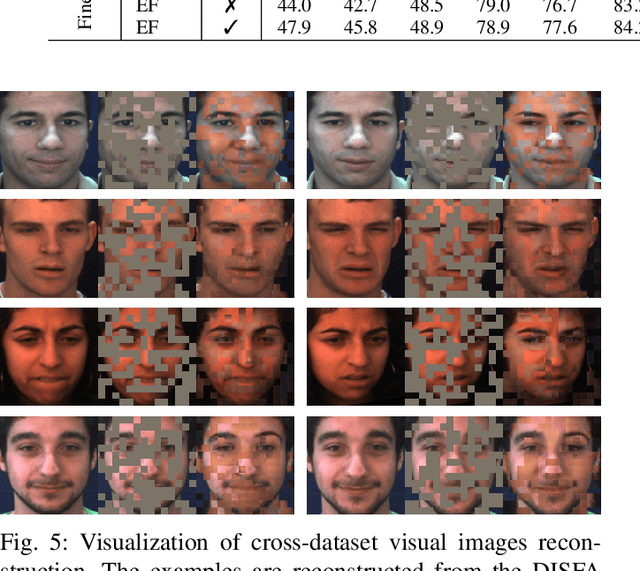

Abstract:Recent studies have utilized multi-modal data to build a robust model for facial Action Unit (AU) detection. However, due to the heterogeneity of multi-modal data, multi-modal representation learning has become one of the main challenges. On the one hand, it is difficult to extract the relevant features from multiple modalities with only one feature extractor; on the other hand, previous studies have not fully explored the potential of multi-modal fusion strategies. For example, early fusion usually requires all modalities to be present during inference, while late fusion and middle fusion increase the network size for feature learning. In contrast to the large amount of work on late fusion, few works on early fusion explore the channel information. This paper presents a novel multi-modal network called Multi-modal Channel-Mixing (MCM), as a pre-trained model to learn a robust representation in order to facilitate the multi-modal fusion. We evaluate the learned representation on a downstream task of automatic facial action unit detection. Specifically, it is a single-stream encoder network that uses a channel-mixing module in early fusion, requiring only one modality in the downstream detection task. We also utilize the masked ViT encoder to learn features from the fused image and reconstruct the two modalities with two ViT decoders. We have conducted extensive experiments on two public datasets, BP4D and DISFA, to evaluate the effectiveness and robustness of the proposed multimodal framework. The results show that our approach is comparable or superior to the state-of-the-art baseline methods.

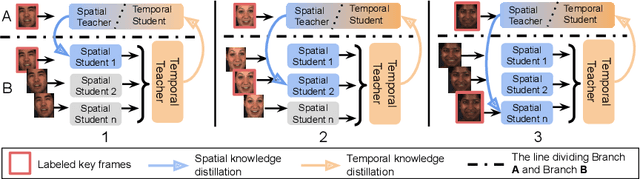

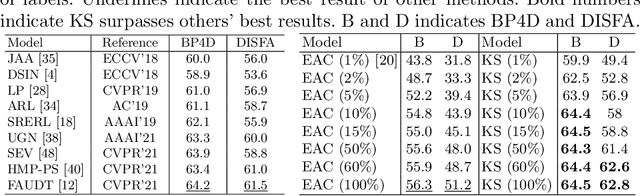

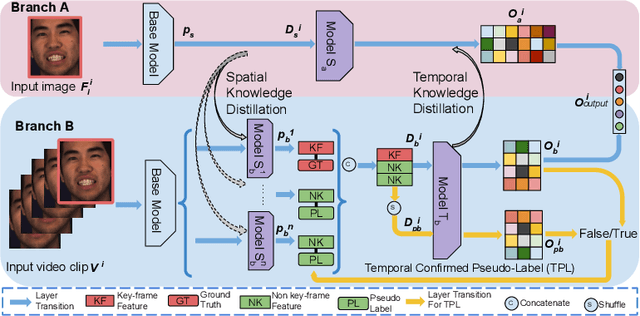

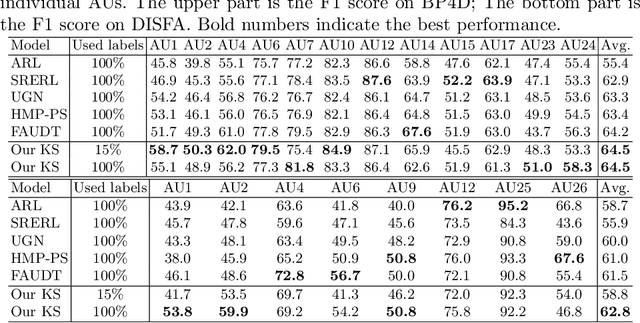

Knowledge-Spreader: Learning Facial Action Unit Dynamics with Extremely Limited Labels

Mar 30, 2022

Abstract:Recent studies on the automatic detection of facial action units (AUs) have relied extensively on large amounts of annotation. However, manual AU labeling is difficult, time-consuming, and costly. Most existing semi-supervised works ignore the informative cues from the temporal domain, and are highly dependent on densely annotated videos, making the learning process less efficient. To alleviate these problems, we propose a deep semi-supervised framework, Knowledge-Spreader (KS), which differs from conventional methods in two aspects. First, rather than only encoding human knowledge as constraints, KS also learns the Spatial-Temporal AU correlation knowledge in order to strengthen its out-of-distribution generalization ability. Second, we approach KS by applying consistency regularization and pseudo-labeling in multiple student networks alternately and dynamically. It spreads the spatial knowledge from labeled frames to unlabeled data, and completes the temporal information of partially labeled video clips. Thus, the design allows KS to learn AU dynamics from video clips with only one label allocated, which significantly reduces the annotation requirements. Extensive experiments demonstrate that the proposed KS achieves competitive performance compared to the state of the art while using only 2% of the labels on BP4D and 5% of the labels on DISFA. In addition, we test it on our newly developed large-scale comprehensive emotion database, which contains considerable samples across well-synchronized and aligned sensor modalities for easing the scarcity issue of annotations and identities in human affective computing. The new database will be released to the research community.
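The abstract above names two generic semi-supervised ingredients, consistency regularization and pseudo-labeling. The sketch below illustrates both for multi-label AU probabilities. It is not the Knowledge-Spreader training procedure: the thresholds `low` and `high`, the squared-error consistency term, and all function names are assumptions chosen for illustration.

```python
import math


def pseudo_label(probs, low=0.1, high=0.9):
    """Turn per-AU probabilities into pseudo-labels.

    Confident predictions become 1 (AU present) or 0 (AU absent);
    uncertain ones become None and are ignored by the loss.
    """
    return [1 if p >= high else 0 if p <= low else None for p in probs]


def consistency_loss(probs_a, probs_b):
    """Mean squared difference between predictions on two views of a frame."""
    return sum((a - b) ** 2 for a, b in zip(probs_a, probs_b)) / len(probs_a)


def masked_bce(probs, labels, eps=1e-7):
    """Binary cross-entropy averaged over the AUs that have a (pseudo-)label."""
    terms = []
    for p, y in zip(probs, labels):
        if y is None:
            continue  # skip AUs without a confident pseudo-label
        p = min(max(p, eps), 1.0 - eps)
        terms.append(-(y * math.log(p) + (1 - y) * math.log(1.0 - p)))
    return sum(terms) / len(terms) if terms else 0.0
```

A typical use under these assumptions: a teacher's predictions on an unlabeled frame pass through `pseudo_label`, and a student is trained with `masked_bce` on the result plus `consistency_loss` between two augmented views.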

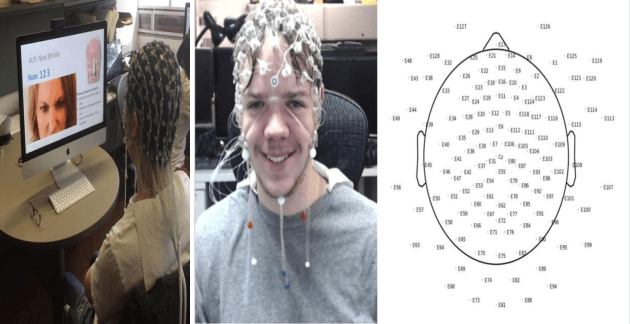

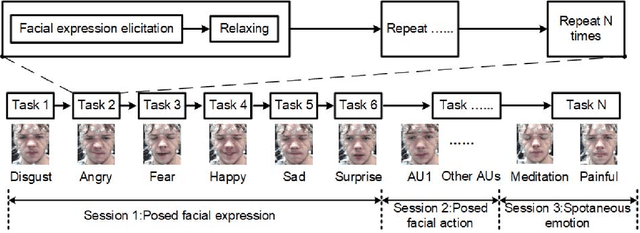

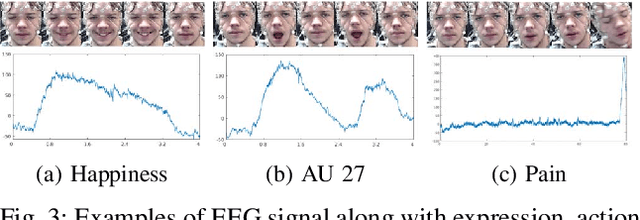

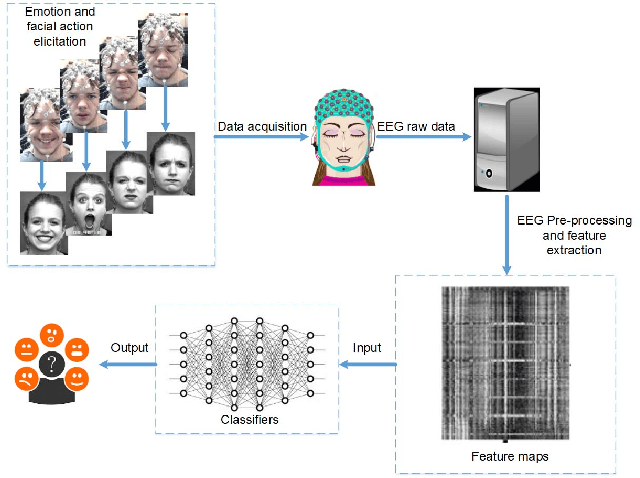

An EEG-Based Multi-Modal Emotion Database with Both Posed and Authentic Facial Actions for Emotion Analysis

Mar 29, 2022

Abstract:Emotion is an experience associated with a particular pattern of physiological activity along with different physiological, behavioral and cognitive changes. One behavioral change is facial expression, which has been studied extensively over the past few decades. Facial behavior varies with a person's emotion according to differences in terms of culture, personality, age, context, and environment. In recent years, physiological activities have been used to study emotional responses. A typical signal is the electroencephalogram (EEG), which measures brain activity. Most existing EEG-based emotion analysis has overlooked the role of facial expression changes. There exists little research on the relationship between facial behavior and brain signals due to the lack of datasets measuring both EEG and facial action signals simultaneously. To address this problem, we propose to develop a new database by collecting facial expressions, action units, and EEGs simultaneously. We recorded the EEGs and face videos of both posed facial actions and spontaneous expressions from 29 participants of different ages, genders, and ethnic backgrounds. Differing from existing approaches, we designed a protocol to capture the EEG signals by evoking participants' individual action units explicitly. We also investigated the relation between the EEG signals and facial action units. As a baseline, the database has been evaluated through experiments on both posed and spontaneous emotion recognition with images alone, EEG alone, and EEG fused with images, respectively. The database will be released to the research community to advance the state of the art for automatic emotion recognition.

Your "Attention" Deserves Attention: A Self-Diversified Multi-Channel Attention for Facial Action Analysis

Mar 23, 2022

Abstract:Visual attention has been extensively studied for learning fine-grained features in both facial expression recognition (FER) and Action Unit (AU) detection. A broad range of previous research has explored how to use attention modules to localize detailed facial parts (e.g., facial action units), learn discriminative features, and learn inter-class correlation. However, few related works pay attention to the robustness of the attention module itself. Through experiments, we found that neural attention maps initialized with different feature maps yield diverse representations when learning to attend to the identical Region of Interest (ROI). In other words, similar to general feature learning, the representational quality of attention maps also greatly affects the performance of a model, which means unconstrained attention learning involves considerable randomness. This uncertainty causes conventional attention learning to fall into sub-optimal solutions. In this paper, we propose a compact model to enhance the representational and focusing power of neural attention maps and learn the "inter-attention" correlation for refined attention maps, which we term the "Self-Diversified Multi-Channel Attention Network (SMA-Net)". The proposed method is evaluated on two benchmark databases (BP4D and DISFA) for AU detection and four databases (CK+, MMI, BU-3DFE, and BP4D+) for facial expression recognition. It achieves superior performance compared to the state-of-the-art methods.

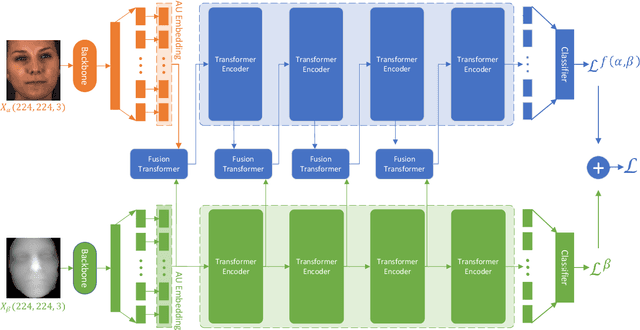

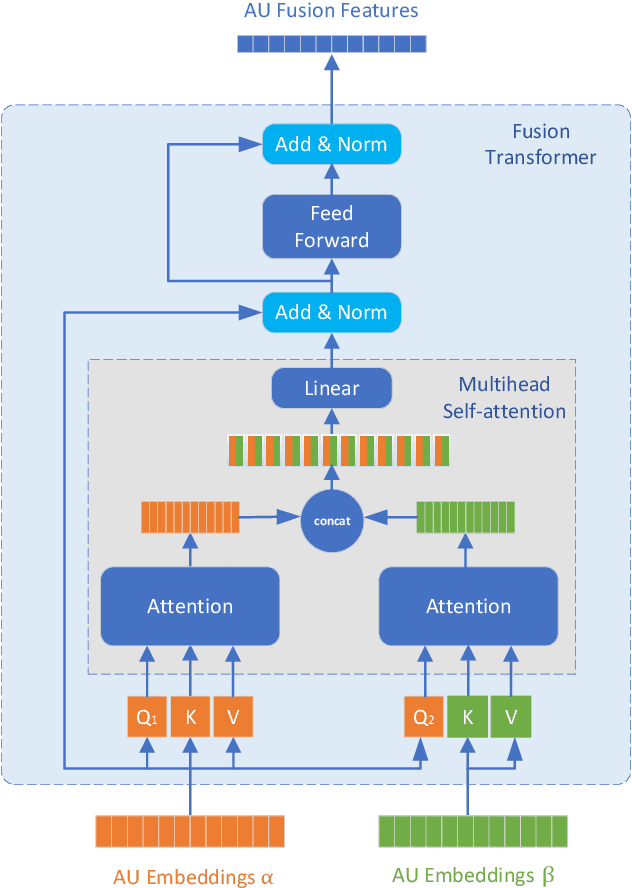

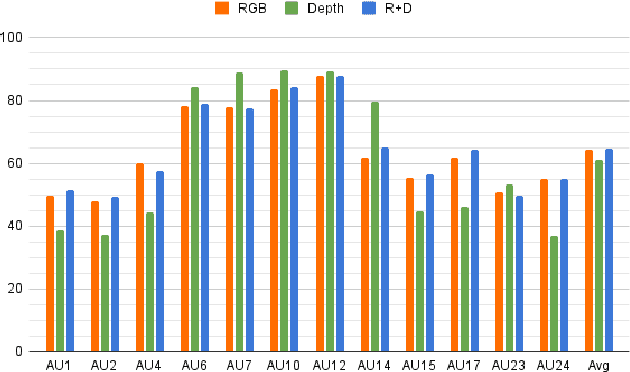

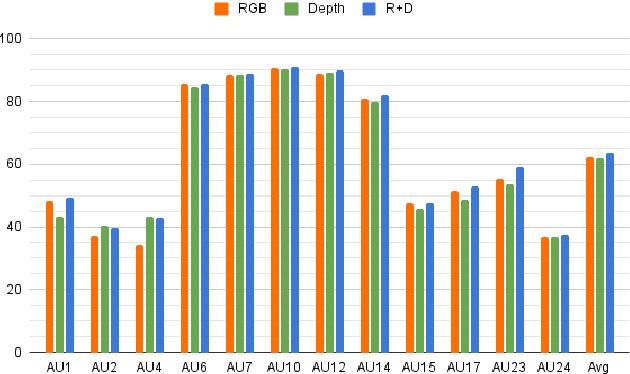

Multi-Modal Learning for AU Detection Based on Multi-Head Fused Transformers

Mar 22, 2022

Abstract:Multi-modal learning has intensified in recent years, especially for applications in facial analysis and action unit detection, yet two main challenges remain: 1) relevant feature learning for representation and 2) efficient fusion of multiple modalities. Recently, a number of works have shown the effectiveness of the attention mechanism for AU detection; however, most of them bind the region of interest (ROI) with features but rarely apply attention between the features of each AU. On the other hand, the transformer, which utilizes a more efficient self-attention mechanism, has been widely used in natural language processing and computer vision tasks but is not fully explored in AU detection tasks. In this paper, we propose a novel end-to-end Multi-Head Fused Transformer (MFT) method for AU detection, which learns AU encoding feature representations from different modalities with a transformer encoder and fuses the modalities with another fusion transformer module. Multi-head fusion attention is designed in the fusion transformer module for the effective fusion of multiple modalities. Our approach is evaluated on two public multi-modal AU databases, BP4D and BP4D+, and the results are superior to the state-of-the-art algorithms and baseline models. We further analyze the performance of AU detection from different modalities.
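For readers unfamiliar with attention-based fusion, the sketch below shows plain single-head scaled dot-product cross-attention, where features from one modality act as queries over features from another. It is a generic illustration only: the abstract does not specify the MFT fusion module, and this sketch omits the learned projections and multiple heads a real transformer layer would have. All names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention without learned projections.

    queries: feature vectors from modality A (for example, one per AU)
    keys, values: feature vectors from modality B
    Returns one fused vector per query: a weighted average of the values.
    """
    dim = len(keys[0])
    fused = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in keys]
        weights = softmax(scores)
        fused.append([sum(w * v[c] for w, v in zip(weights, values))
                      for c in range(len(values[0]))])
    return fused
```

Each output row is a convex combination of the value vectors, so a query that matches all keys equally well simply receives their average.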

The First Vision For Vitals Challenge for Non-Contact Video-Based Physiological Estimation

Sep 22, 2021

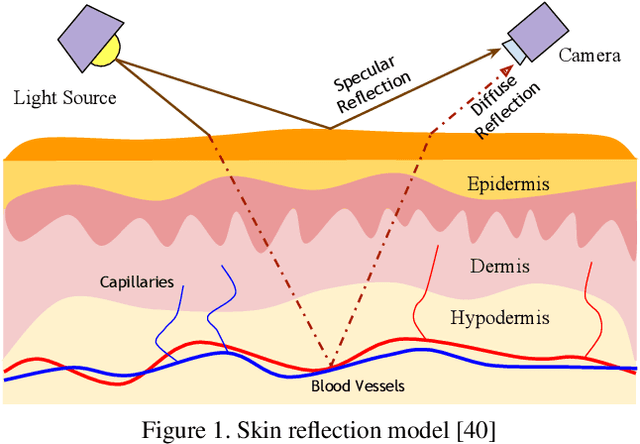

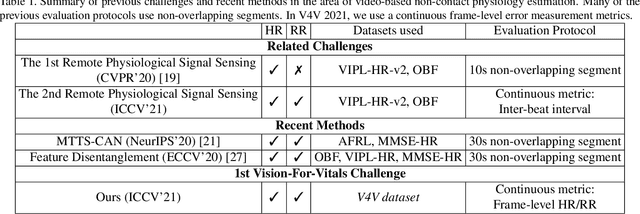

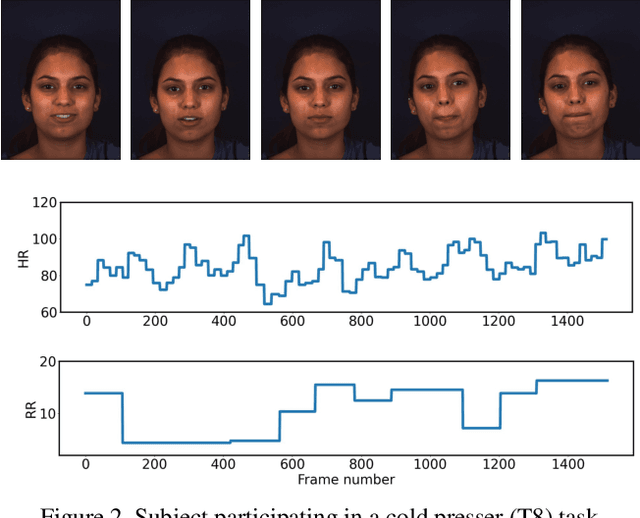

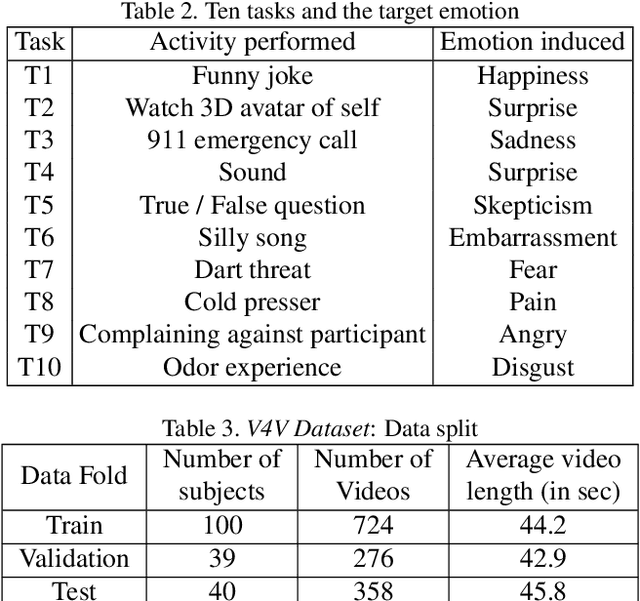

Abstract:Telehealth has the potential to offset the high demand for help during public health emergencies, such as the COVID-19 pandemic. Remote Photoplethysmography (rPPG) - the problem of non-invasively estimating blood volume variations in the microvascular tissue from video - would be well suited for these situations. Over the past few years a number of research groups have made rapid advances in remote PPG methods for estimating heart rate from digital video and obtained impressive results. How these various methods compare in naturalistic conditions, where spontaneous behavior, facial expressions, and illumination changes are present, is relatively unknown. To enable comparisons among alternative methods, the 1st Vision for Vitals Challenge (V4V) presented a novel dataset containing high-resolution videos time-locked with varied physiological signals from a diverse population. In this paper, we outline the evaluation protocol, the data used, and the results. V4V is to be held in conjunction with the 2021 International Conference on Computer Vision.
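As background for the heart-rate estimation task mentioned above, the sketch below shows one common baseline idea: take a pulse-like signal (for example, the mean skin-pixel intensity per frame) and pick the frequency with the most spectral power inside a plausible heart-rate band. This is not the V4V evaluation protocol or any participant's method; the band limits, the 1 bpm search grid, and the function name are assumptions.

```python
import math


def estimate_heart_rate_bpm(signal, fps, min_bpm=42, max_bpm=240):
    """Return the heart rate (in bpm) whose frequency has the highest power.

    signal: per-frame samples of a pulse-like trace
    fps: sampling rate of the trace in frames per second
    The search evaluates the Fourier power on a 1 bpm grid, which is slow
    but dependency-free; an FFT would be the usual choice in practice.
    """
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]  # remove the DC component
    best_bpm, best_power = None, -1.0
    for bpm in range(min_bpm, max_bpm + 1):
        omega = 2.0 * math.pi * (bpm / 60.0) / fps  # radians per frame
        real = sum(x * math.cos(omega * t) for t, x in enumerate(centered))
        imag = sum(x * math.sin(omega * t) for t, x in enumerate(centered))
        power = real * real + imag * imag
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm
```

On real video, motion, expression changes, and illumination shifts add energy inside the same band, which is one reason the naturalistic conditions highlighted in the abstract make the problem hard.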

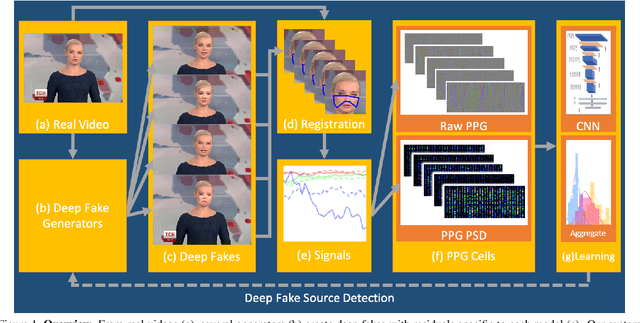

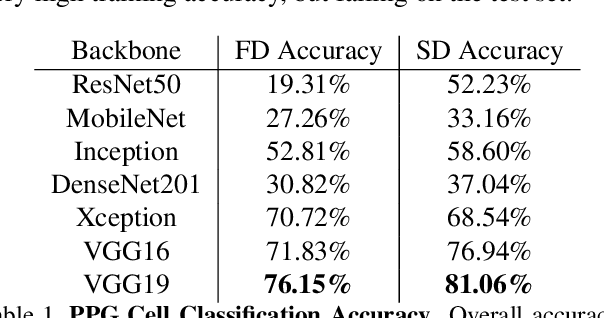

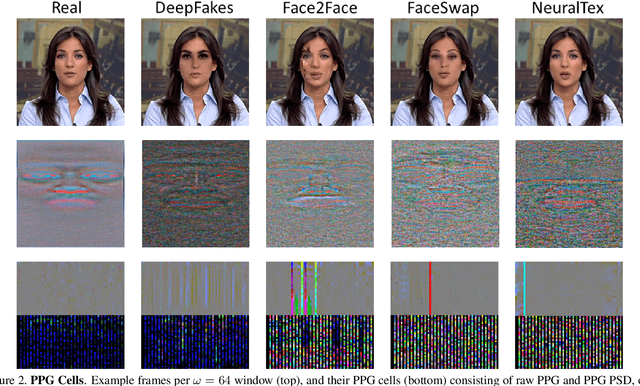

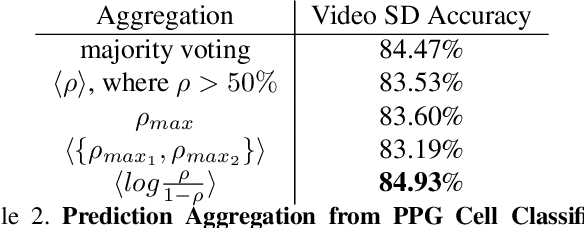

How Do the Hearts of Deep Fakes Beat? Deep Fake Source Detection via Interpreting Residuals with Biological Signals

Aug 26, 2020

Abstract:Fake portrait video generation techniques pose a new threat to society, with photorealistic deep fakes used for political propaganda, celebrity imitation, forged evidence, and other identity-related manipulations. Following these generation techniques, some detection approaches have also proven useful due to their high classification accuracy. Nevertheless, almost no effort has been spent on tracking down the source of deep fakes. We propose an approach not only to separate deep fakes from real videos, but also to discover the specific generative model behind a deep fake. Some pure deep learning based approaches try to classify deep fakes using CNNs, where they actually learn the residuals of the generator. We believe that these residuals contain more information and that we can reveal these manipulation artifacts by disentangling them with biological signals. Our key observation is that the spatiotemporal patterns in biological signals can be conceived as a representative projection of residuals. To justify this observation, we extract PPG cells from real and fake videos and feed these to a state-of-the-art classification network for detecting the generative model per video. Our results indicate that our approach can detect fake videos with 97.29% accuracy, and the source model with 93.39% accuracy.

FERA 2017 - Addressing Head Pose in the Third Facial Expression Recognition and Analysis Challenge

Feb 14, 2017

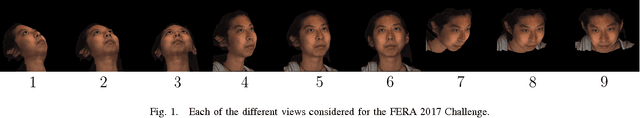

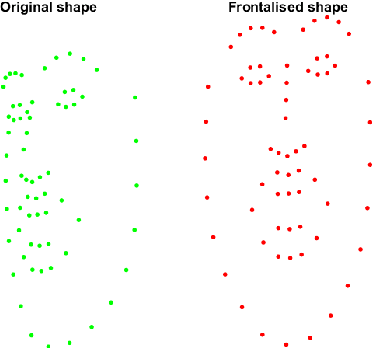

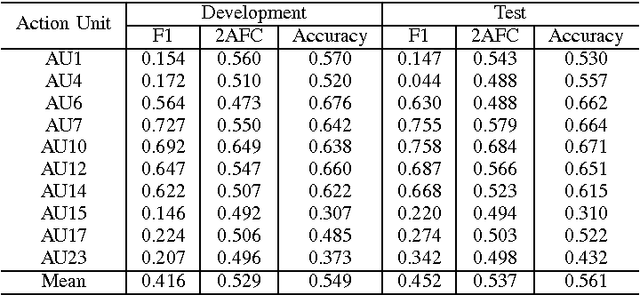

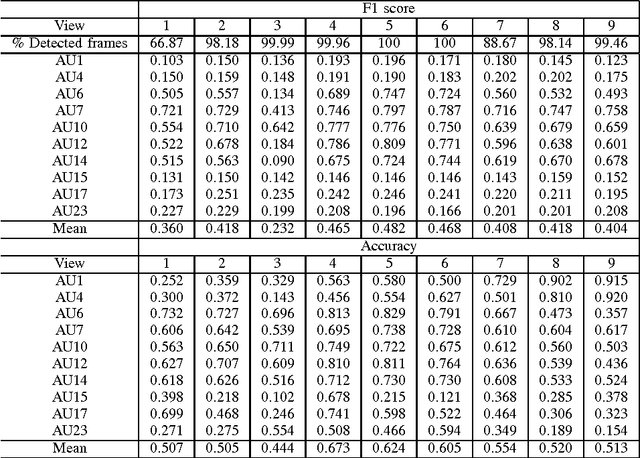

Abstract:The field of Automatic Facial Expression Analysis has grown rapidly in recent years. However, despite progress in new approaches as well as benchmarking efforts, most evaluations still focus on either posed expressions, near-frontal recordings, or both. This makes it hard to tell how existing expression recognition approaches perform under conditions where faces appear in a wide range of poses (or camera views), displaying ecologically valid expressions. The main obstacle for assessing this is the availability of suitable data, and the challenge proposed here addresses this limitation. The FG 2017 Facial Expression Recognition and Analysis challenge (FERA 2017) extends FERA 2015 to the estimation of Action Units occurrence and intensity under different camera views. In this paper we present the third challenge in automatic recognition of facial expressions, to be held in conjunction with the 12th IEEE conference on Face and Gesture Recognition, May 2017, in Washington, United States. Two sub-challenges are defined: the detection of AU occurrence, and the estimation of AU intensity. In this work we outline the evaluation protocol, the data used, and the results of a baseline method for both sub-challenges.
