Junyan Wang

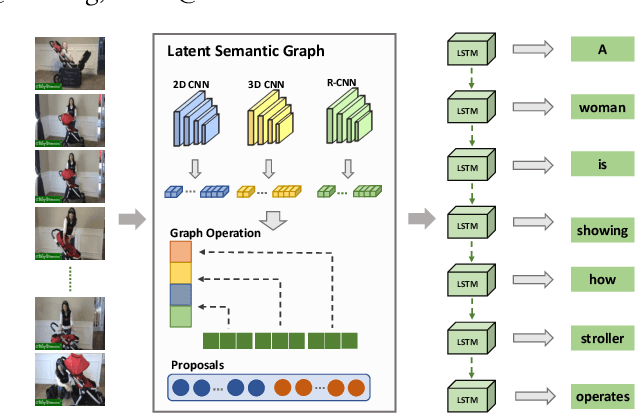

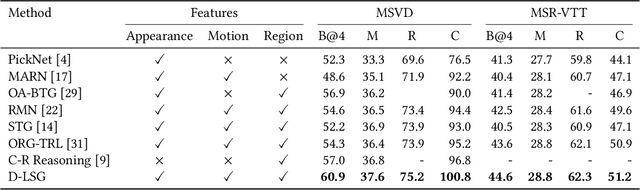

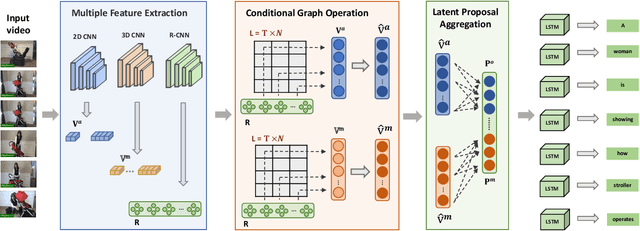

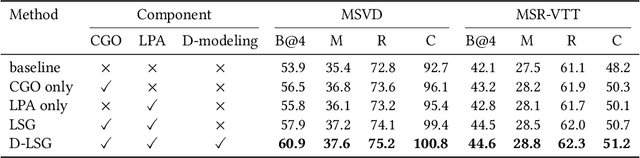

Discriminative Latent Semantic Graph for Video Captioning

Aug 10, 2021

Abstract: Video captioning aims to automatically generate natural language sentences that describe the visual contents of a given video. Existing generative models such as encoder-decoder frameworks cannot explicitly explore object-level interactions and frame-level information from complex spatio-temporal data to generate semantically rich captions. Our main contribution is to identify three key problems in a joint framework for future video summarization tasks. 1) Enhanced Object Proposal: we propose a novel Conditional Graph that can fuse spatio-temporal information into latent object proposals. 2) Visual Knowledge: Latent Proposal Aggregation is proposed to dynamically extract visual words with higher semantic levels. 3) Sentence Validation: a novel Discriminative Language Validator is proposed to verify generated captions so that key semantic concepts can be effectively preserved. Our experiments on two public datasets (MSVD and MSR-VTT) show significant improvements over state-of-the-art approaches on all metrics, especially BLEU-4 and CIDEr. Our code is available at https://github.com/baiyang4/D-LSG-Video-Caption.

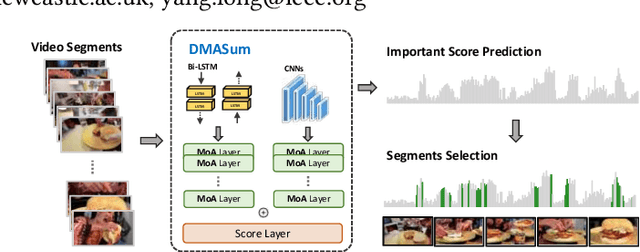

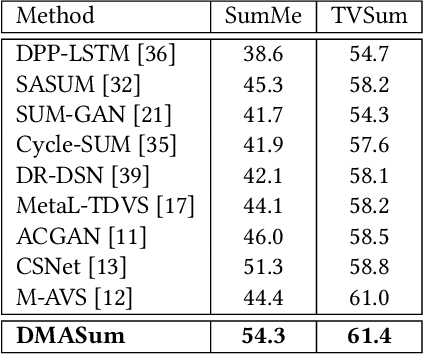

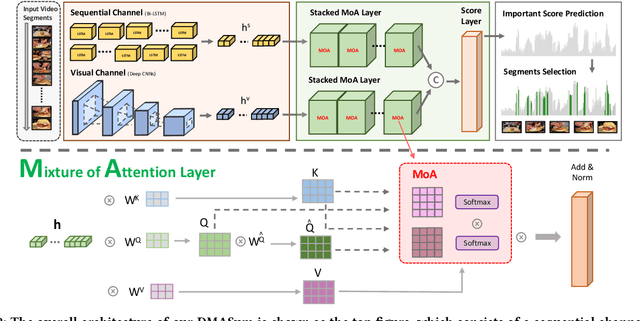

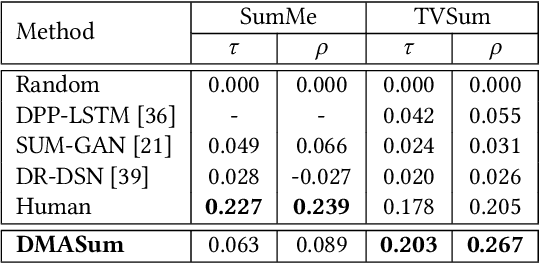

Query Twice: Dual Mixture Attention Meta Learning for Video Summarization

Aug 19, 2020

Abstract: Video summarization aims to select representative frames that retain high-level information, which is usually solved by predicting segment-wise importance scores via a softmax function. However, the softmax function struggles to retain high-rank representations of complex visual or sequential information, which is known as the Softmax Bottleneck problem. In this paper, we propose a novel framework named Dual Mixture Attention (DMASum) model with Meta Learning for video summarization that tackles the softmax bottleneck problem. The Mixture of Attention layer (MoA) effectively increases the model capacity by applying self-query attention twice, which can capture second-order changes in addition to the initial query-key attention. A novel Single Frame Meta Learning rule is then introduced to generalize better to small datasets with limited training resources. Furthermore, DMASum exploits both visual and sequential attention, connecting local key-frame and global attention in an accumulative way. We adopt the new evaluation protocol on two public datasets, SumMe and TVSum. Both qualitative and quantitative experiments show significant improvements over state-of-the-art methods.
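The softmax bottleneck mentioned in this abstract can be illustrated with a small, generic example. The sketch below is not DMASum's MoA layer; it is a plain mixture of softmaxes under assumed toy values (`features`, `w_a`, `w_b` are hypothetical). With a scalar feature, the log-probability matrix of a single softmax has rank at most 2, so its 3x3 determinant vanishes, while an equal-weight mixture of two softmaxes is not bound by that limit.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def det3(a):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))

def log_prob_matrix(features, weight_sets):
    """Rows: contexts; columns: classes. Each weight set defines one softmax
    component with logits h * w_j; components are mixed with equal weights."""
    rows = []
    for h in features:
        comps = [softmax([h * w for w in ws]) for ws in weight_sets]
        n_classes = len(comps[0])
        mixed = [sum(c[j] for c in comps) / len(comps) for j in range(n_classes)]
        rows.append([math.log(p) for p in mixed])
    return rows

# Hypothetical toy values: three contexts with a scalar feature, three classes.
features = [-1.0, 0.5, 2.0]
w_a = [0.0, 1.0, 2.0]
w_b = [2.0, -1.0, 0.5]

single = log_prob_matrix(features, [w_a])       # one softmax: rank <= 2
mixed = log_prob_matrix(features, [w_a, w_b])   # mixture of two softmaxes

det_single = det3(single)  # zero up to floating-point error
det_mixed = det3(mixed)    # clearly nonzero, so the matrix has full rank
```

The single-softmax matrix has the form `h_i * w_j - logsumexp_i`, a rank-one term plus a row-constant term, which is why its rank cannot exceed 2 here. Mixing breaks that structure because the logarithm is taken after the components are averaged.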

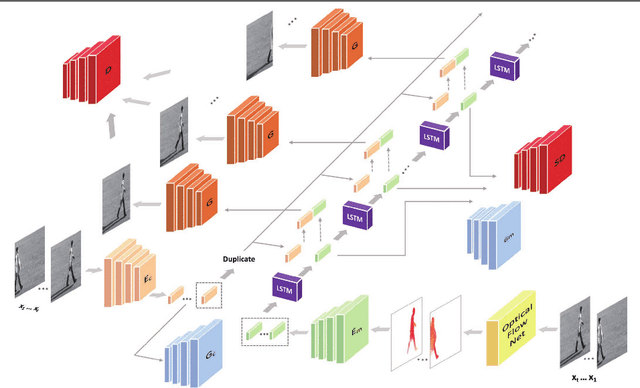

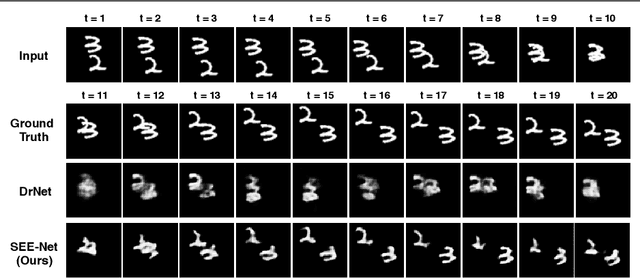

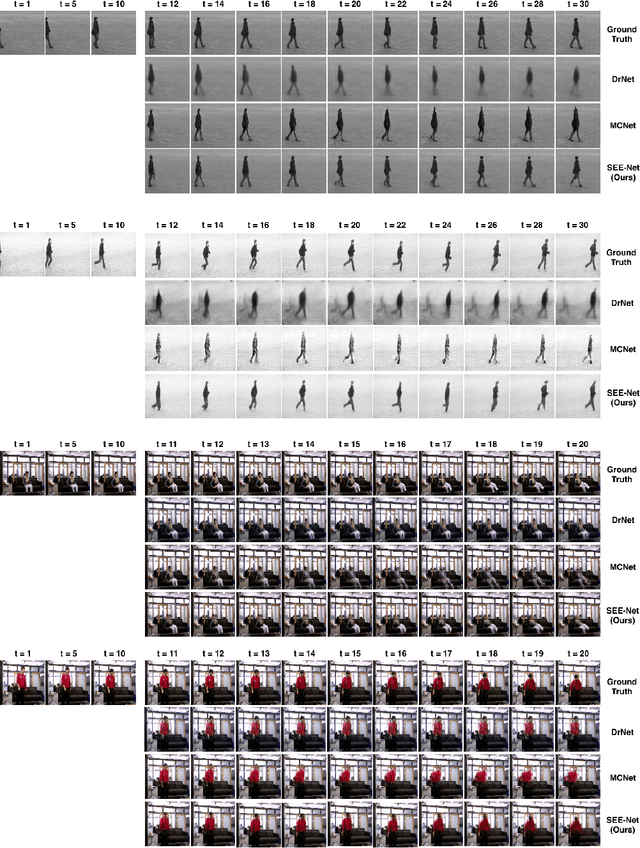

Order Matters: Shuffling Sequence Generation for Video Prediction

Jul 20, 2019

Abstract: Predicting future frames in natural video sequences is a new challenge that is receiving increasing attention in the computer vision community. However, existing models suffer from severe loss of temporal information when the predicted sequence is long. In contrast to previous methods that focus on generating more realistic contents, this paper extensively studies the importance of sequential order information for video generation. A novel Shuffling sEquence gEneration network (SEE-Net) is proposed that learns to discriminate unnatural sequential orders by shuffling the video frames and comparing them to the real video sequence. Systematic experiments on three datasets with both synthetic and real-world videos show the effectiveness of shuffling sequence generation for video prediction in our proposed model and demonstrate state-of-the-art performance in both qualitative and quantitative evaluations. The source code is available at https://github.com/andrewjywang/SEENet.
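The idea of learning from shuffled frame orders can be sketched as a data-preparation step. The code below is a minimal illustration, not SEE-Net's actual sampling scheme: the function names and the 1/0 labels are assumptions, and frames are represented by placeholder strings. Each clip yields one positive example in its natural order and one negative example whose frames are permuted.

```python
import random

def shuffled_negative(frames, rng):
    """Return a copy of `frames` in a permuted order that differs from the
    original order, together with the permutation used."""
    if len(frames) < 2:
        raise ValueError("need at least two frames to change the order")
    order = list(range(len(frames)))
    # Reshuffle until the permutation is not the identity.
    while order == sorted(order):
        rng.shuffle(order)
    return [frames[i] for i in order], order

def order_discrimination_batch(clips, seed=0):
    """Build (sequence, label) pairs: label 1 for the real order,
    label 0 for a shuffled order of the same frames."""
    rng = random.Random(seed)
    batch = []
    for clip in clips:
        batch.append((list(clip), 1))
        negative, _ = shuffled_negative(clip, rng)
        batch.append((negative, 0))
    return batch

# Hypothetical clips; in practice each element would be a frame tensor.
clips = [["f0", "f1", "f2", "f3"], ["g0", "g1", "g2"]]
batch = order_discrimination_batch(clips, seed=0)
```

A discriminator trained on such pairs sees identical frame content in both classes, so it can only separate them by using temporal order.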

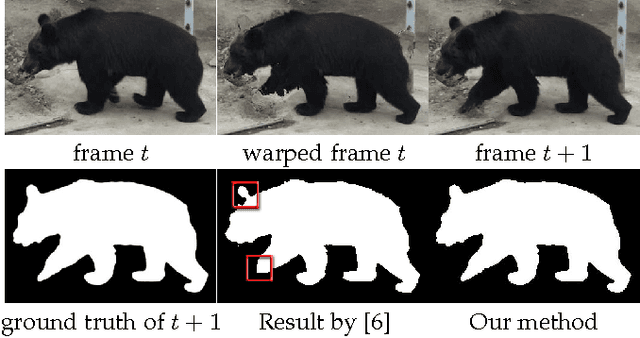

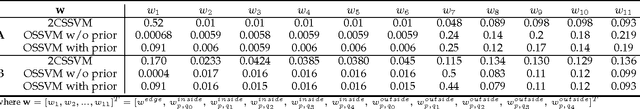

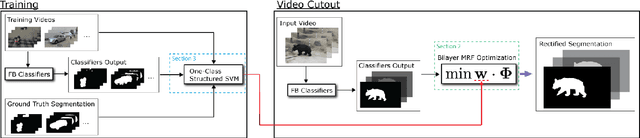

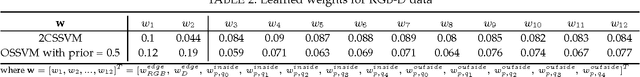

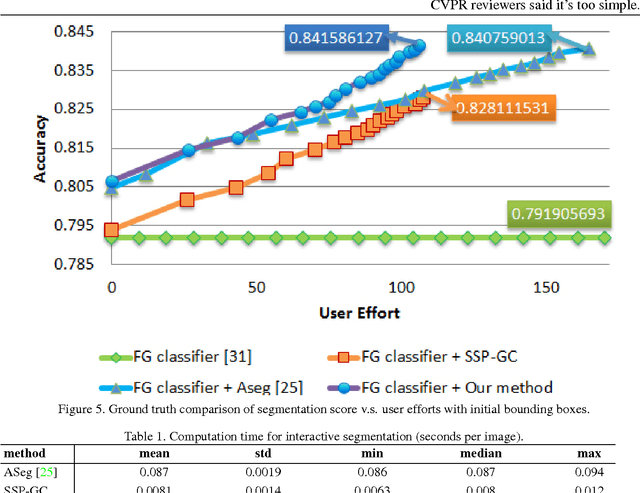

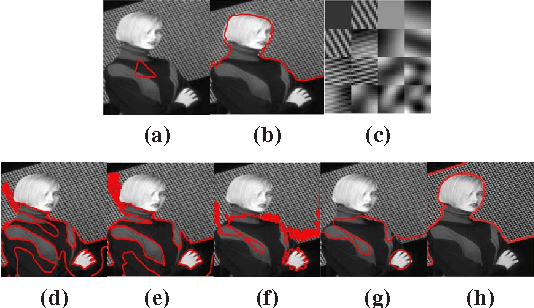

Segmentation Rectification for Video Cutout via One-Class Structured Learning

Feb 16, 2016

Abstract: Recent works on interactive video object cutout mainly focus on designing dynamic foreground-background (FB) classifiers for segmentation propagation. However, research on optimally removing errors from the FB classification is sparse, and the errors often accumulate rapidly, causing significant errors in the propagated frames. In this work, we take the initial steps toward addressing this problem, and we call this new task *segmentation rectification*. Our key observation is that false positive and false negative errors, which may be asymmetrically distributed, were handled equally in conventional methods. We instead propose to optimally remove these two types of errors. To this end, we propose a novel bilayer Markov Random Field (MRF) model for this new task. We also adopt the well-established structured learning framework to learn the optimal model from data. Additionally, we propose a novel one-class structured SVM (OSSVM) which greatly speeds up the structured learning process. Our method naturally extends to RGB-D videos as well. Comprehensive experiments on both RGB and RGB-D data demonstrate that our simple and effective method significantly outperforms the segmentation propagation methods adopted in state-of-the-art video cutout systems, and the results also suggest the potential usefulness of our method in image cutout systems.

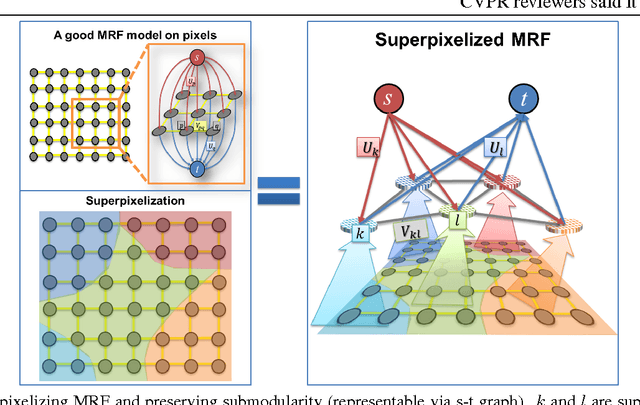

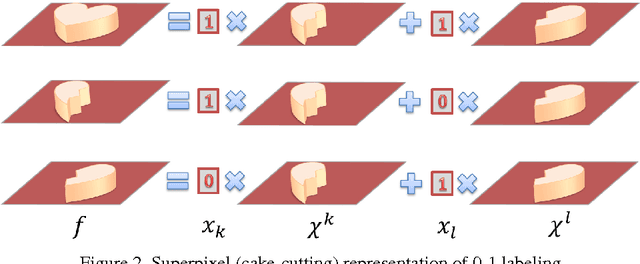

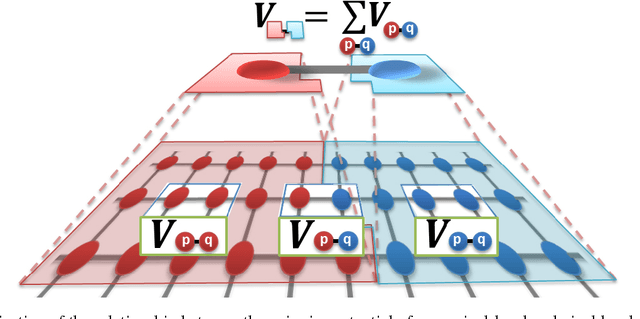

Superpixelizing Binary MRF for Image Labeling Problems

Mar 23, 2015

Abstract: Superpixels have become prevalent in computer vision. They have been used to achieve satisfactory performance at a significantly smaller computational cost for various tasks. Researchers have also combined superpixels with Markov random field (MRF) models. However, it often takes additional effort to formulate an MRF at the superpixel level, and to the best of our knowledge there exists no principled approach to obtain this formulation. In this paper, we show how a generic pixel-level binary MRF model can be solved in the superpixel space. As the main contribution of this paper, we show that a superpixel-level MRF can be derived from the pixel-level MRF by substituting the superpixel representation of the pixelwise label into the original pixel-level MRF energy. The resultant superpixel-level MRF energy also remains submodular for a submodular pixel-level MRF. The derived formula hence gives us a handy way to formulate the MRF energy at the superpixel level. In the experiments, we demonstrate the efficacy of our approach on several computer vision problems.
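The substitution described in this abstract can be made concrete for one simple case. The sketch below assumes an Ising-type pixel energy, E(x) = Σ u_i x_i + Σ w_ij |x_i − x_j|, which is narrower than the generic binary MRF the paper treats; all function names and toy values are hypothetical. Forcing every pixel to take the label of its superpixel turns the unary terms into per-superpixel sums, removes edges inside a superpixel, and sums the weights of edges that cross a superpixel boundary.

```python
from collections import defaultdict

def pixel_energy(unary, edges, labels):
    """Ising-type binary MRF energy: sum_i u_i x_i + sum_(i,j) w_ij |x_i - x_j|."""
    energy = sum(u * x for u, x in zip(unary, labels))
    energy += sum(w * abs(labels[i] - labels[j]) for i, j, w in edges)
    return energy

def to_superpixel_mrf(unary, edges, assignment):
    """Substitute x_i = y_{assignment[i]} into the pixel energy and collect terms."""
    sp_unary = defaultdict(float)
    for i, u in enumerate(unary):
        sp_unary[assignment[i]] += u
    sp_edges = defaultdict(float)
    for i, j, w in edges:
        s, t = assignment[i], assignment[j]
        if s != t:  # edges inside one superpixel contribute |y_s - y_s| = 0
            sp_edges[(min(s, t), max(s, t))] += w
    return dict(sp_unary), dict(sp_edges)

def superpixel_energy(sp_unary, sp_edges, sp_labels):
    """Energy of the derived superpixel-level MRF."""
    energy = sum(u * sp_labels[s] for s, u in sp_unary.items())
    energy += sum(w * abs(sp_labels[s] - sp_labels[t])
                  for (s, t), w in sp_edges.items())
    return energy

# Hypothetical toy problem: a chain of four pixels grouped into two superpixels.
unary = [0.5, -1.0, 0.3, -0.2]
edges = [(0, 1, 1.0), (1, 2, 2.0), (2, 3, 0.5)]
assignment = [0, 0, 1, 1]
sp_unary, sp_edges = to_superpixel_mrf(unary, edges, assignment)
```

In this special case the aggregated pairwise weights are sums of non-negative pixel weights, so they stay non-negative, which is consistent with the abstract's claim that submodularity carries over to the superpixel level.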

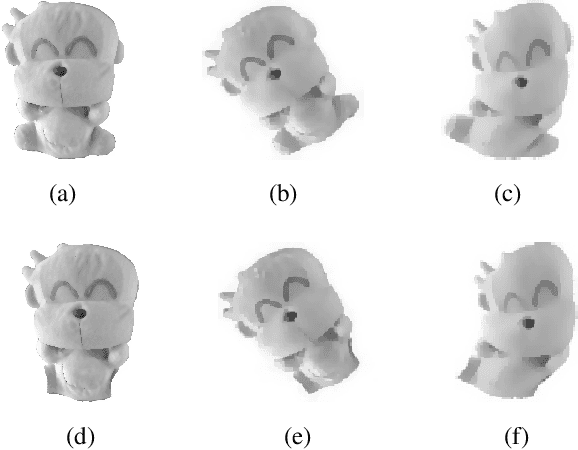

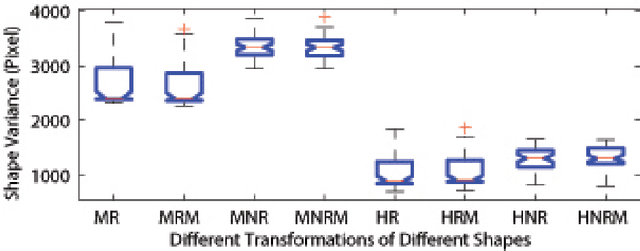

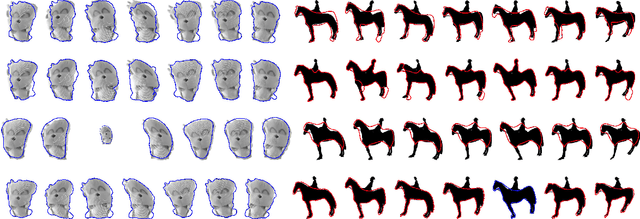

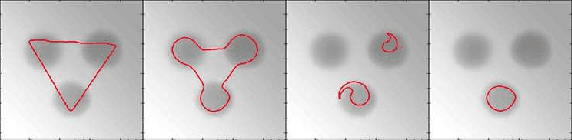

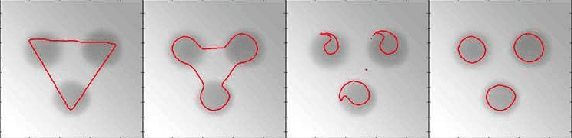

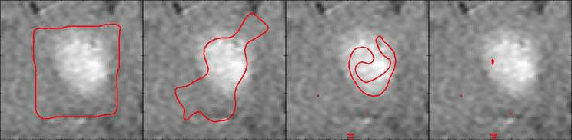

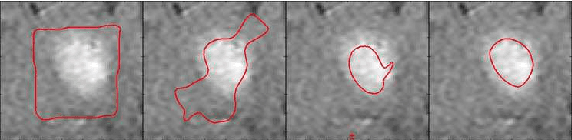

Rigid and Non-rigid Shape Evolutions for Shape Alignment and Recovery in Images

Dec 29, 2014

Abstract: Objects of the same type may vary in shape across images because of rigid and non-rigid shape deformations, occluding foreground, and cluttered background. The problem addressed in this work is shape extraction in such challenging situations. We approach shape extraction through shape alignment and recovery. This paper presents a novel and general method for shape alignment and recovery that uses a single example shape, based on deterministic energy minimization. Our idea is to use a general model of shape deformation in minimizing active contour energies. Given an *a priori* form of the shape deformation, we show how the curve evolution equation corresponding to the shape deformation can be derived. This curve evolution is called the prior variation shape evolution (PVSE). We also derive the energy-minimizing PVSE for minimizing active contour energies. For shape recovery, we propose to use a PVSE that deforms the shape while preserving its shape characteristics. To choose such a shape-preserving PVSE, a theory of shape preservability of the PVSE is established. Experimental results validate the theory and the formulations, and they demonstrate the effectiveness of our method.

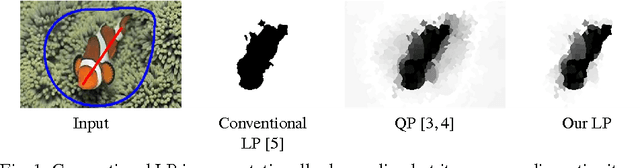

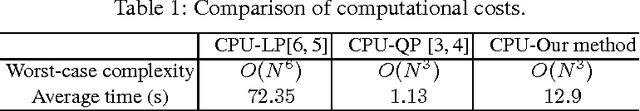

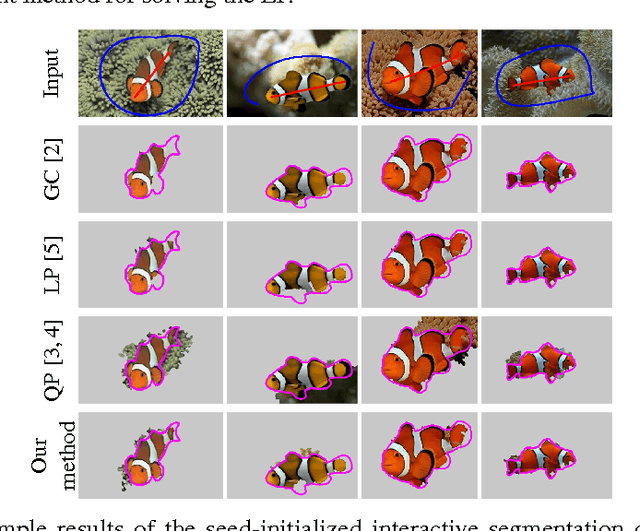

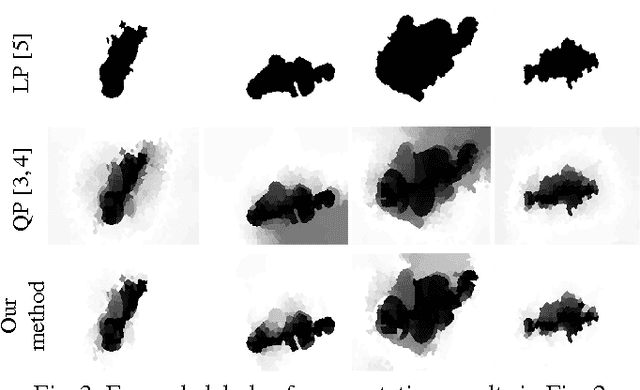

A Compact Linear Programming Relaxation for Binary Sub-modular MRF

Apr 09, 2014

Abstract: We propose a novel compact linear programming (LP) relaxation for binary sub-modular MRF in the context of object segmentation. Our model is obtained by linearizing an $l_1^+$-norm derived from the quadratic programming (QP) form of the MRF energy. The resultant LP model contains significantly fewer variables and constraints than the conventional LP relaxation of the MRF energy. In addition, unlike QP, which can produce ambiguous labels, our model can be viewed as a quasi-total-variation minimization problem, and it can therefore preserve the discontinuities in the labels. We further establish a relaxation bound between our LP model and the conventional LP model. In the experiments, we demonstrate our method on the task of interactive object segmentation. Our LP model outperforms QP when converting the continuous labels to binary labels using different threshold values on the entire Oxford interactive segmentation dataset. The computational complexity of our LP is of the same order as that of the QP, and it is significantly lower than that of the conventional LP relaxation.

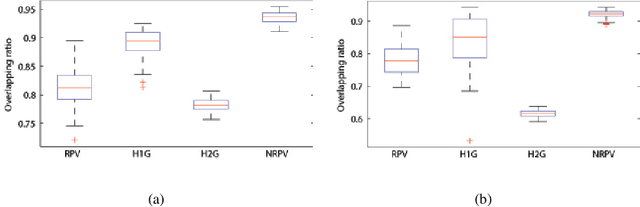

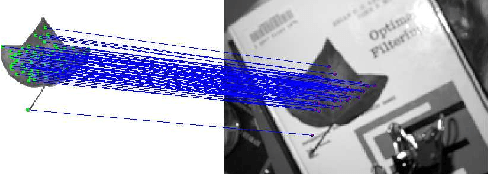

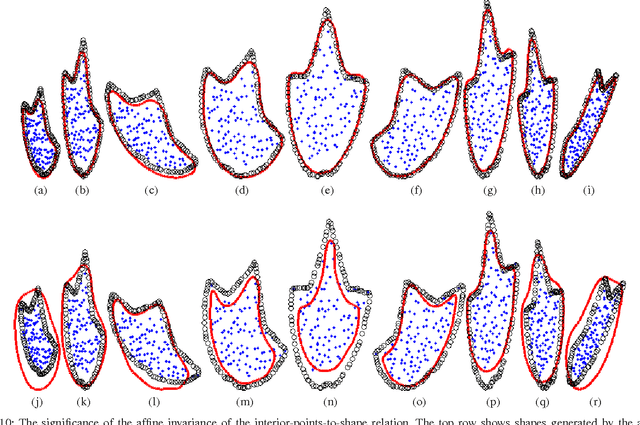

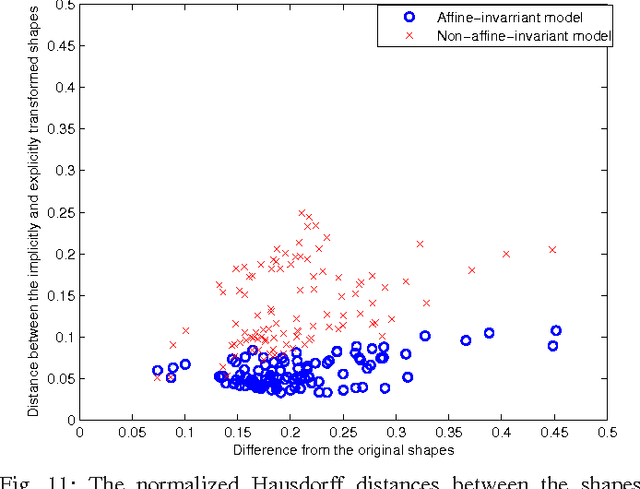

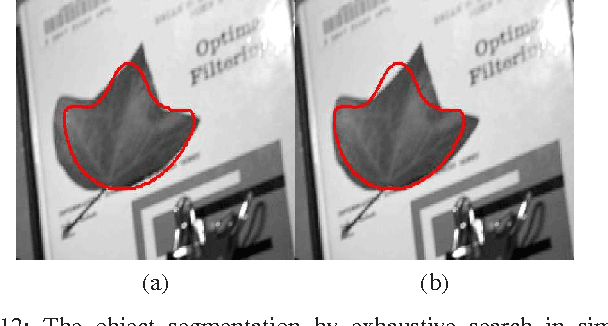

Matching-Constrained Active Contours

Jul 24, 2013

Abstract: In object segmentation by active contours, an initial contour is often required. Conventionally, the initial contour is provided by the user. This paper extends the conventional active contour model by incorporating feature matching in the formulation, which gives rise to a novel matching-constrained active contour. The numerical solution to the new optimization model provides an automated framework for object segmentation without user intervention. The main idea is to incorporate feature point matching as a constraint in active contour models. To this end, we obtain a mathematical model relating interior points to the boundary contour such that matching of interior feature points gives contour alignment. We then formulate the matching score as a constraint on the active contour model, such that the maximum-score feature matching that gives the contour alignment provides the initial feasible solution to the constrained optimization model of segmentation. The constraint also ensures that the optimal contour does not deviate too much from the initial contour. Projected-gradient descent equations are derived to solve the constrained optimization. In the experiments, we show that our method is capable of achieving automatic object segmentation, and it outperforms the related methods.

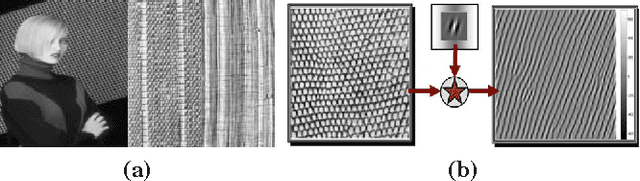

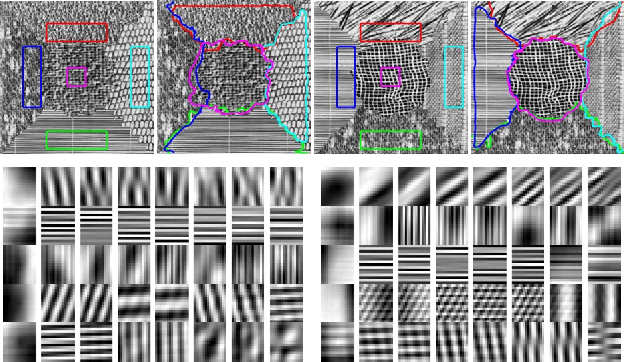

Piecewise Linear Patch Reconstruction for Segmentation and Description of Non-smooth Image Structures

Jul 21, 2012

Abstract: In this paper, we propose a unified energy minimization model for the segmentation of non-smooth image structures. The energy of piecewise linear patch reconstruction is considered as an objective measure of the quality of the segmentation of non-smooth structures. The segmentation is achieved by minimizing this single energy without any separate feature extraction process. We also prove that the segmentation error is bounded by the proposed energy functional, meaning that minimizing the proposed energy reduces the segmentation error. As a by-product, our method produces a dictionary of optimized orthonormal descriptors for each segmented region. The unique feature of our method is that it achieves simultaneous segmentation and description of non-smooth image structures under the same optimization framework. The experiments validate our theoretical claims and show the clearly superior performance of our method over other related methods for segmentation of various image textures. We show that our model can be coupled with the piecewise smooth model to handle both smooth and non-smooth structures, and we demonstrate that the proposed model is capable of coping with multiple different regions through the one-against-all strategy.

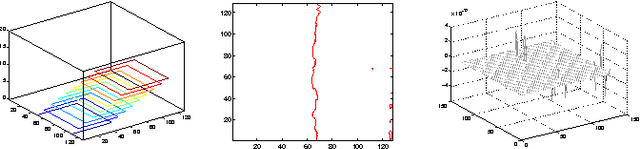

The Stability of Convergence of Curve Evolutions in Vector Fields

Jun 17, 2012

Abstract: Curve evolution is often used to solve computer vision problems. If the curve evolution fails to converge, we would not be able to solve the targeted problem in a lifetime. This paper studies the theoretical aspect of the convergence of a general type of curve evolution. We establish a theory for analyzing and improving the stability of the convergence of such general curve evolutions. Based on this theory, we ascertain that the convergence of a known curve evolution is marginally stable. We propose a way of modifying the original curve evolution equation to improve the stability of the convergence according to our theory. Numerical experiments show that the modification improves the convergence of the curve evolution, which validates our theory.